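This article shares how I load tested INFINI Gateway, using Graphite to observe QPS during the test. Please forgive any mistakes or omissions. Without further ado, let's get to it.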

Hardware configuration

| Host | Instance type | CPU | Memory / bandwidth | OS |
|---|---|---|---|---|
| 172.31.18.148 (gateway1) | AWS c5a.8xlarge | x86, 32 cores | 64 GB / 10 Gbps | Ubuntu 20.04.1 LTS |
| 172.31.24.102 (gateway2) | AWS c6g.8xlarge | ARM, 32 cores | 64 GB / 10 Gbps | Ubuntu 20.04.1 LTS |
| 172.31.23.133 (test) | AWS c5a.8xlarge | x86, 32 cores | 64 GB / 10 Gbps | Ubuntu 20.04.1 LTS |

Test preparation

System tuning (all nodes)

Modify kernel parameters

```shell
vi /etc/sysctl.conf
```

Add the following settings:

```
net.netfilter.nf_conntrack_max = 262144
net.nf_conntrack_max = 262144
net.ipv4.ip_forward = 1
net.ipv4.conf.default.accept_redirects = 0
net.ipv4.conf.default.rp_filter = 1
net.ipv4.conf.all.accept_redirects = 0
net.ipv4.conf.all.send_redirects = 0
net.ipv4.ip_nonlocal_bind = 1
fs.file-max = 10485760
net.core.rmem_max = 4194304
net.core.wmem_max = 4194304
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_timestamps = 1
net.core.somaxconn = 32768
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 65535
net.ipv4.tcp_synack_retries = 0
net.core.netdev_max_backlog = 65535
# Widen the default local port range
net.ipv4.ip_local_port_range = 1024 65535
```

Save the file and apply the settings with `sysctl -p`.
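It is worth confirming that the kernel actually accepted each value after `sysctl -p`. For example, the `nf_conntrack` keys only exist once the conntrack module is loaded. Below is a minimal Python sketch. It assumes the standard Linux convention that a dotted key such as `net.core.somaxconn` is exposed as the file `/proc/sys/net/core/somaxconn`. The `DESIRED` subset and the `check_sysctl` helper are illustrative names, not part of any tool.

```python
from pathlib import Path

# Illustrative subset of the settings from /etc/sysctl.conf above.
DESIRED = {
    "net.core.somaxconn": "32768",
    "net.ipv4.tcp_max_syn_backlog": "65535",
    "net.ipv4.ip_local_port_range": "1024 65535",
    "fs.file-max": "10485760",
}


def sysctl_path(key: str, root: str = "/proc/sys") -> Path:
    """Map a dotted sysctl key to its /proc/sys file (dots become path separators)."""
    return Path(root, *key.split("."))


def check_sysctl(desired: dict, root: str = "/proc/sys") -> dict:
    """Return {key: (expected, actual)} for every setting that does not match.

    A key whose file is missing (e.g. nf_conntrack keys before the module
    is loaded) is reported with actual=None.
    """
    mismatches = {}
    for key, expected in desired.items():
        try:
            # Multi-value entries are tab-separated in /proc, so compare
            # whitespace-normalized tokens instead of raw strings.
            actual = " ".join(sysctl_path(key, root).read_text().split())
        except FileNotFoundError:
            actual = None
        if actual != " ".join(expected.split()):
            mismatches[key] = (expected, actual)
    return mismatches


if __name__ == "__main__":
    for key, (want, got) in check_sysctl(DESIRED).items():
        print(f"{key}: expected {want!r}, got {got!r}")
```

An empty result means every listed value is live in the running kernel.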

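Raise the per-process open-file limit for all users. The following takes effect at the next login:

```shell
echo '* soft nofile 1048576' >> /etc/security/limits.conf
echo '* hard nofile 1048576' >> /etc/security/limits.conf
```

To apply the per-process open-file limit to the current session right away:

```shell
ulimit -n 1048576
```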
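A server process can also check its own open-file limit at startup and raise it, but only up to the hard limit. Below is a minimal Python sketch using the standard `resource` module. `raise_nofile_limit` is a hypothetical helper. It can only lift the soft limit up to the hard limit set in limits.conf, because raising the hard limit requires root (`CAP_SYS_RESOURCE`).

```python
import resource

TARGET_NOFILE = 1048576  # same value as in limits.conf / ulimit above


def raise_nofile_limit(target: int = TARGET_NOFILE) -> tuple:
    """Raise this process's soft RLIMIT_NOFILE toward `target`.

    An unprivileged process may raise its soft limit only up to the hard
    limit, so the target is capped at the hard limit when one is set.
    Returns the (soft, hard) pair in effect afterwards.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if hard != resource.RLIM_INFINITY:
        target = min(target, hard)
    if soft == resource.RLIM_INFINITY or soft >= target:
        return soft, hard  # already high enough; never lower it
    resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)


if __name__ == "__main__":
    print("open-file limit (soft, hard):", raise_nofile_limit())
```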

Install Docker and Graphite (on the gateway hosts)

```shell
sudo apt-get update
sudo apt-get install docker.io
docker run -d --name graphite --restart=always -p 80:80 -p 2003-2004:2003-2004 -p 2023-2024:2023-2024 -p 8125:8125/udp -p 8126:8126 graphiteapp/graphite-statsd
```

Download and install the gateway

Download the latest release of infini-gateway (https://github.com/medcl/infini-gateway/releases). It needs no installation and can be run directly after downloading.

Modify the configuration file

After extracting the archive, change the gateway.yml configuration file to the following:

```yaml
path.data: data
path.logs: log

entry:
  - name: es_gateway #your gateway endpoint
    enabled: true
    router: not_found #configure your gateway's routing flow
    network:
      binding: 0.0.0.0:8000
      # skip_occupied_port: false
      reuse_port: true #you can start multi gateway instance, they share same port, to full utilize system's resources
    tls:
      enabled: false #if your es is using https, the gateway entrypoint should enable https too

flow:
  - name: not_found #testing flow
    filter:
      - name: not_found
        type: echo
        parameters:
          str: '{"message":"not found"}'
          repeat: 1
  - name: cache_first
    filter: #comment out any filter sections, like you don't need cache or rate-limiter
      - name: get_cache_1
        type: get_cache
        parameters:
          pass_patterns: ["_cat","scroll", "scroll_id","_refresh","_cluster","_ccr","_count","_flush","_ilm","_ingest","_license","_migration","_ml","_rollup","_data_stream","_open", "_close"]
          # hash_factor:
          #   header:
          #     - "*"
          #   path: true
          #   query_args:
          #     - id
          # must_cache:
          #   method:
          #     - GET
          #   path:
          #     - _search
          #     - _async_search
      - name: rate_limit_1
        type: rate_limit
        parameters:
          message: "Hey, You just reached our request limit!"
          rules: #configure match rules against request's PATH, eg: /_cluster/health, match the first rule and return
            - pattern: "/(?P<index_name>medcl)/_search" #use index name, will match: /medcl/_search, with limit medcl with max_qps ~=3
              max_qps: 3 #setting max qps after match
              group: index_name #use regex group name to extract the throttle bucket name
            - pattern: "/(?P<index_name>.*?)/_search" #use regex pattern to match index, will match any /$index/_search, and limit each index with max_qps ~=100
              max_qps: 100
              group: index_name
      - name: elasticsearch_1
        type: elasticsearch
        parameters:
          elasticsearch: default #elasticsearch configure reference name
          max_connection: 1000 #max tcp connection to upstream, default for all nodes
          max_response_size: -1 #default for all nodes
          balancer: weight
          discovery:
            enabled: true
      - name: set_cache_1
        type: set_cache
  - name: request_logging
    filter:
      - name: request_header_filter
        type: request_header_filter
        parameters:
          include:
            CACHE: true
      - name: request_logging_1
        type: request_logging
        parameters:
          queue_name: request_logging

router:
  - name: default
    tracing_flow: request_logging #a flow will execute after request finish
    default_flow: cache_first
    rules: #rules can't be conflicted with each other, will be improved in the future
      - id: 1 # this rule means match every requests, and sent to `cache_first` flow
        method:
          - "*"
        pattern:
          - /
        flow:
          - cache_first # after match, which processing flow will go through
  - name: not_found
    default_flow: not_found

elasticsearch:
  - name: default
    enabled: false
    endpoint: http://localhost:9200 # if your elasticsearch is using https, your gateway should be listen on as https as well
    version: 7.6.0 #optional, used to select es adaptor, can be done automatically after connect to es
    index_prefix: gateway_
    basic_auth: #used to discovery full cluster nodes, or check elasticsearch's health and versions
      username: elastic
      password: Bdujy6GHehLFaapFI9uf

statsd:
  enabled: true
  host: 127.0.0.1
  port: 8125
  namespace: gateway.

modules:
  - name: elastic
    enabled: false
    elasticsearch: default
    init_template: true
  - name: pipeline
    enabled: true
    runners:
      - name: primary
        enabled: true
        max_go_routine: 1
        threshold_in_ms: 0
        timeout_in_ms: 5000
        pipeline_id: request_logging_index

pipelines:
  - name: request_logging_index
    start:
      joint: json_indexing
      enabled: false
      parameters:
        index_name: "gateway_requests"
        elasticsearch: "default"
        input_queue: "request_logging"
        num_of_messages: 1
        timeout: "60s"
        worker_size: 6
        bulk_size: 5000
    process: []

queue:
  min_msg_size: 1
  max_msg_size: 500000000
  max_bytes_per_file: 50*1024*1024*1024
  sync_every_in_seconds: 30
  sync_timeout_in_seconds: 10
  read_chan_buffer: 0
```

Start testing

The load-testing tool used in this article is http-loader (see the attachment).
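http-loader is only provided as an attachment, so its internals are not shown here. The sketch below is a minimal Python closed-loop load generator that makes the concurrency and duration settings concrete. It assumes, without confirmation, that http-loader works along these lines: a fixed number of workers each send requests back to back over a keep-alive connection until a deadline, and QPS equals completed requests divided by elapsed time. `run_load` is a hypothetical name. A Python client is also constrained by the GIL, so it cannot come close to the load generated in these tests.

```python
import http.client
import threading
import time
from urllib.parse import urlsplit


def run_load(url: str, concurrency: int, duration_s: float) -> float:
    """Closed-loop load test against `url`; returns achieved requests per second.

    Each worker keeps one HTTP connection open and issues GET requests
    back to back until the deadline. Every completed response is counted,
    whatever its status code.
    """
    parts = urlsplit(url)
    host, port = parts.hostname, parts.port or 80
    path = parts.path or "/"  # query strings are ignored in this sketch
    deadline = time.monotonic() + duration_s
    counts = [0] * concurrency  # one slot per worker, so no lock is needed

    def worker(i: int) -> None:
        conn = http.client.HTTPConnection(host, port, timeout=5)
        try:
            while time.monotonic() < deadline:
                conn.request("GET", path)
                resp = conn.getresponse()
                resp.read()  # drain the body so the connection can be reused
                counts[i] += 1
        except (OSError, http.client.HTTPException):
            pass  # a real tool would record errors; this sketch just stops the worker
        finally:
            conn.close()

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(concurrency)]
    start = time.monotonic()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(counts) / (time.monotonic() - start)
```

For example, `run_load("http://172.31.18.148:8000/", concurrency=1000, duration_s=600)` mirrors the shape of the http-loader runs below, though not their throughput.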

To make full use of the servers' multiple cores, several processes were started during testing.
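The `reuse_port: true` setting in gateway.yml is what lets several gateway processes bind 0.0.0.0:8000 at the same time. On Linux this is presumably the `SO_REUSEPORT` socket option; that is an assumption, since the gateway's source is not examined here. With that option, the kernel distributes incoming connections across all listeners on the port. Below is a minimal Python sketch of the mechanism. `reuseport_listener` is an illustrative name, and the code needs a platform that provides `socket.SO_REUSEPORT`.

```python
import socket


def reuseport_listener(port: int, host: str = "127.0.0.1") -> socket.socket:
    """Open a TCP listener with SO_REUSEPORT set before bind().

    Every socket (or process, same user) that sets the option can bind the
    same address and port; a socket without it gets EADDRINUSE.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEPORT, 1)
    sock.bind((host, port))
    sock.listen(128)
    return sock


if __name__ == "__main__":
    first = reuseport_listener(0)          # let the OS choose a free port
    port = first.getsockname()[1]
    second = reuseport_listener(port)      # same port again: allowed by SO_REUSEPORT
    print("two listeners share port", port)
    first.close()
    second.close()
```

Each `./gateway-amd64 &` below plays the role of one such listener in its own process.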

Load testing the x86 gateway server

Start five gateway instances on gateway1:

```shell
./gateway-amd64 &
./gateway-amd64 &
./gateway-amd64 &
./gateway-amd64 &
./gateway-amd64 &
```

Check the gateway's response:

```shell
curl http://172.31.18.148:8000
```

Output:

```
{"message":"not found"}
```
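This response comes from the `not_found` flow. In the configuration, the entry's `router` is `not_found`, and that router's `default_flow` is the flow containing the `echo` filter. Every request is therefore answered locally with the configured `str`, and Elasticsearch (`enabled: false`) is never contacted. The QPS figures in this article therefore measure the gateway's own request handling, not proxying to Elasticsearch. The sketch below is a Python stdlib stand-in for that echo behavior, for illustration only. It is not how the gateway is implemented, and the 200 status code and `Content-Type` header are assumptions.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Mirrors the echo filter parameters in gateway.yml (str and repeat).
ECHO_STR = '{"message":"not found"}'
ECHO_REPEAT = 1


class EchoHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # allow keep-alive connections, as load testers use

    def _reply(self) -> None:
        length = int(self.headers.get("Content-Length") or 0)
        if length:
            self.rfile.read(length)  # drain any request body to keep the connection in sync
        body = (ECHO_STR * ECHO_REPEAT).encode()
        self.send_response(200)  # assumed status code
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    # Every method and path gets the same answer, like router: not_found.
    do_GET = do_POST = do_PUT = do_DELETE = _reply

    def log_message(self, *args) -> None:
        pass  # per-request logging would dominate the cost under load


def make_server(host: str = "0.0.0.0", port: int = 8000) -> ThreadingHTTPServer:
    """Build (but do not start) the stand-in server."""
    return ThreadingHTTPServer((host, port), EchoHandler)
```

Calling `make_server().serve_forever()` and then running the same `curl` against port 8000 should print the same JSON body.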

Run the following on gateway2 and the test machine at the same time (1,000 concurrent connections for 10 minutes):

```shell
./http-loader -c 1000 -d 600 http://172.31.18.148:8000
```

Observe metrics on gateway1

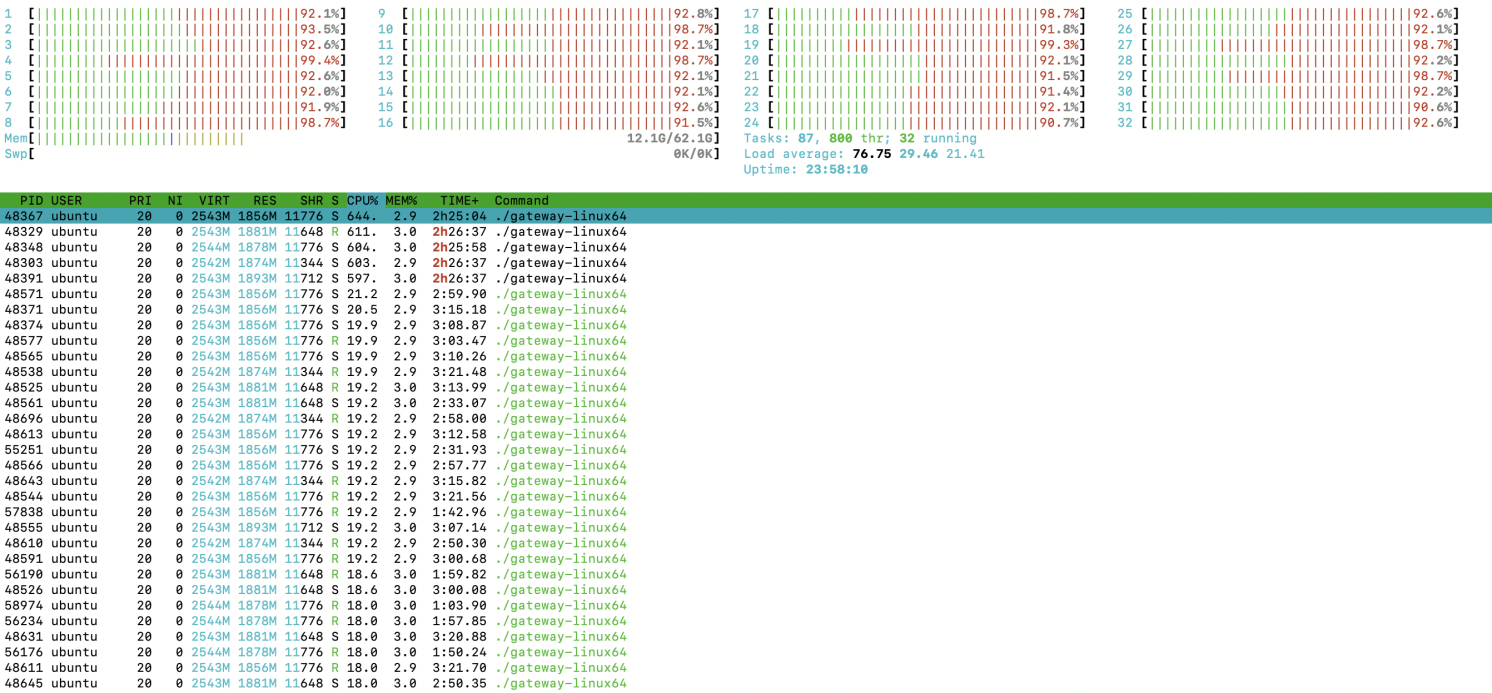

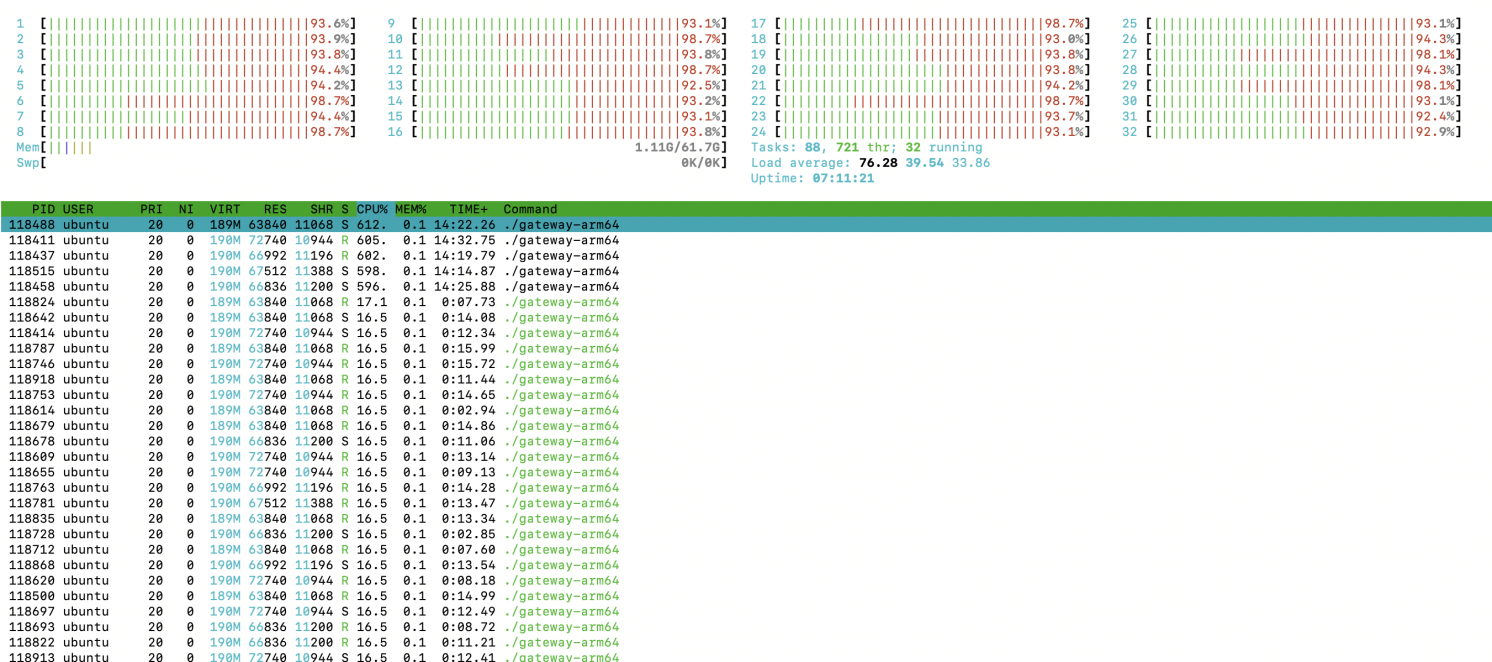

Use htop to check the system load, as shown below:

The CPU is essentially saturated here, which indicates the gateway has been pushed to its limit.

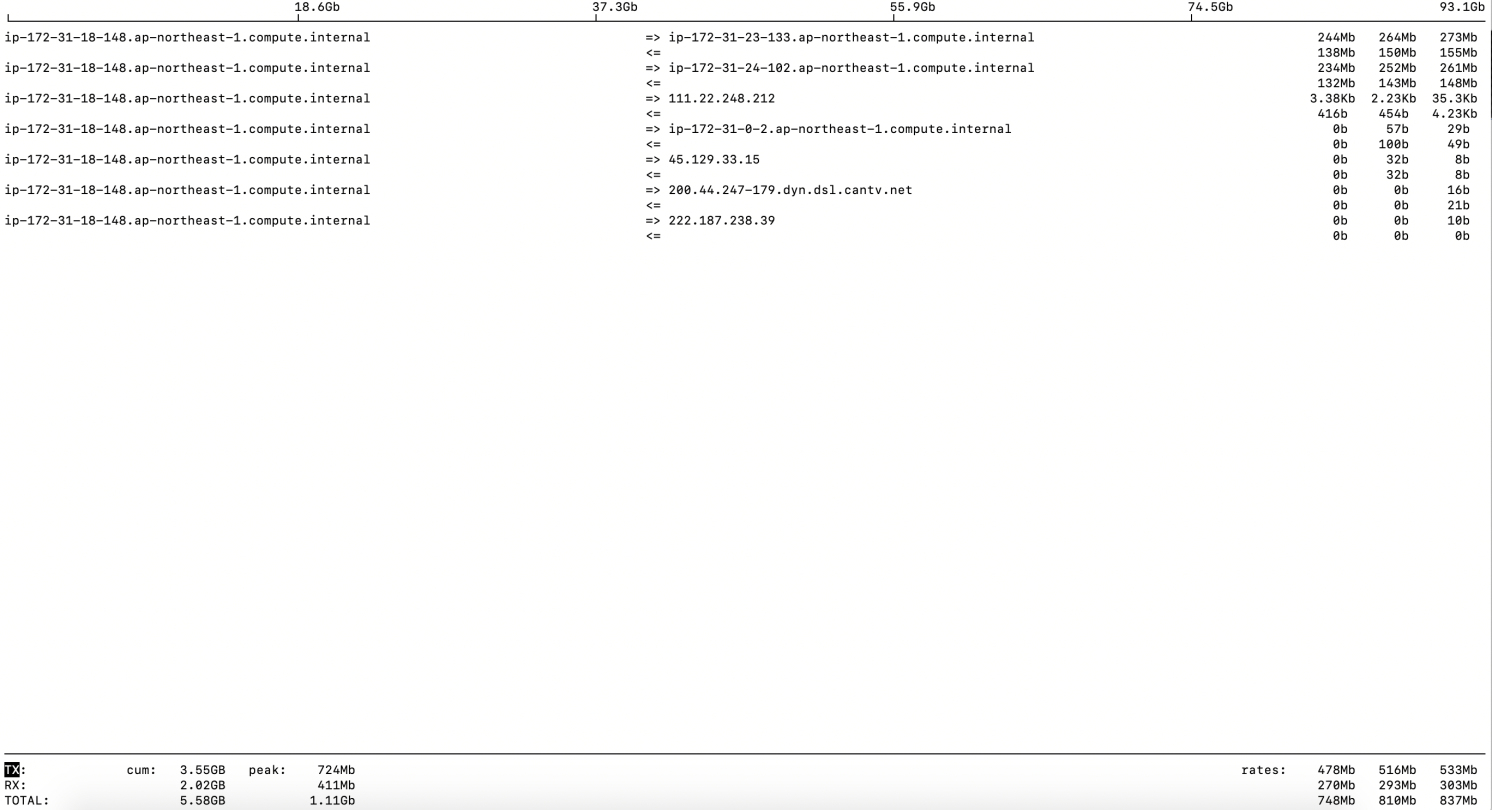

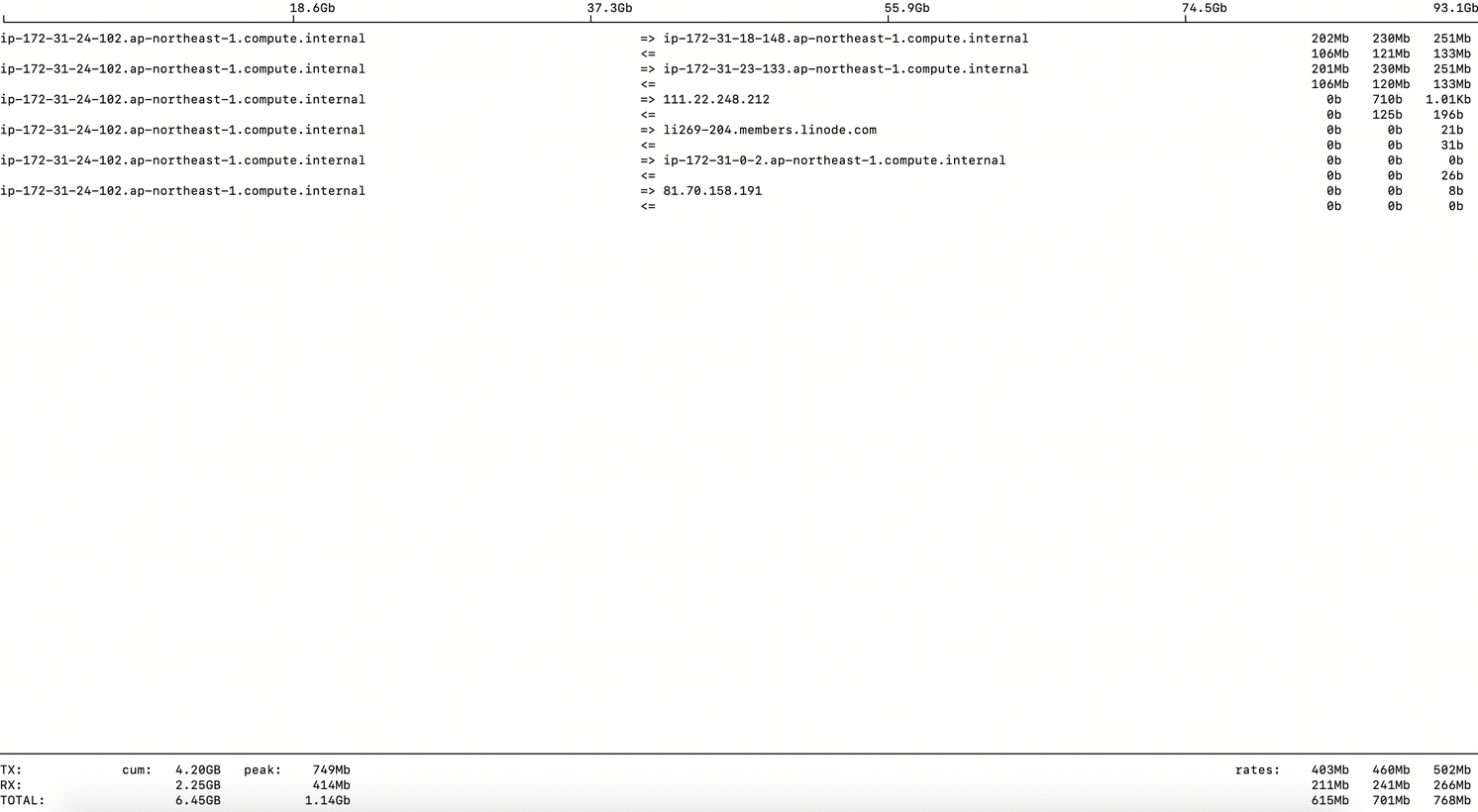
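htop's CPU bars are derived from the kernel's cumulative CPU time counters. To log the same saturation signal over a whole 10-minute run, one can sample `/proc/stat` directly instead of watching htop. Below is a minimal Python sketch. It assumes Linux's documented `/proc/stat` field order (user, nice, system, idle, iowait, irq, softirq, steal, ...), and the helper names are illustrative. Its accounting is close to, but not necessarily identical to, htop's.

```python
def parse_cpu_line(line: str) -> tuple:
    """Return (busy, total) jiffies from the aggregate 'cpu' line of /proc/stat.

    Only the first 8 counters are summed, because guest time is already
    included in user/nice. idle + iowait are treated as not busy.
    """
    fields = [int(x) for x in line.split()[1:9]]
    idle = fields[3] + fields[4]
    total = sum(fields)
    return total - idle, total


def cpu_utilization(before: str, after: str) -> float:
    """Percentage of CPU time spent busy between two /proc/stat 'cpu' lines."""
    busy0, total0 = parse_cpu_line(before)
    busy1, total1 = parse_cpu_line(after)
    dt = total1 - total0
    return 100.0 * (busy1 - busy0) / dt if dt else 0.0


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Read the first line of /proc/stat, the aggregate over all CPUs."""
    with open(path) as f:
        return f.readline()
```

Reading `read_cpu_line()` twice, one second apart (e.g. with `time.sleep(1)`), and passing both lines to `cpu_utilization` gives the system-wide busy percentage for that second. A value near 100 matches the saturated bars above.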

Use iftop to check network traffic, as shown below:

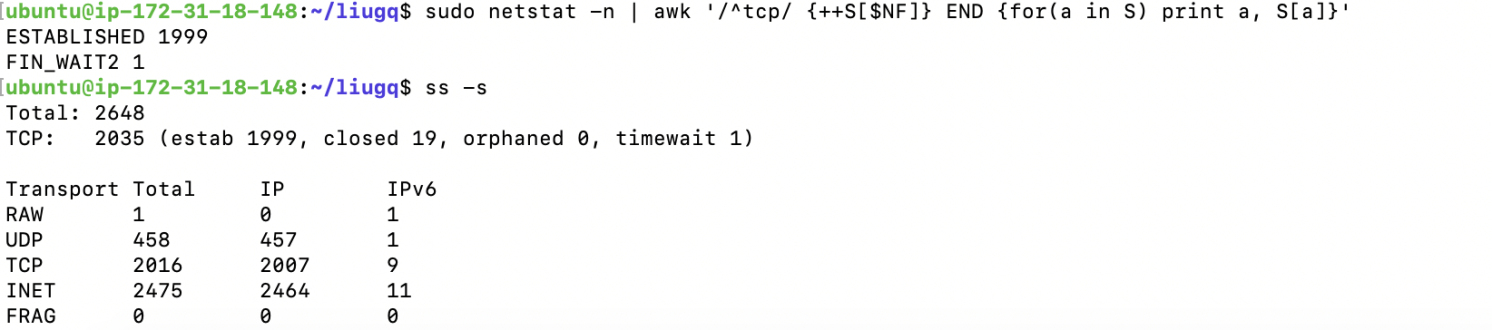

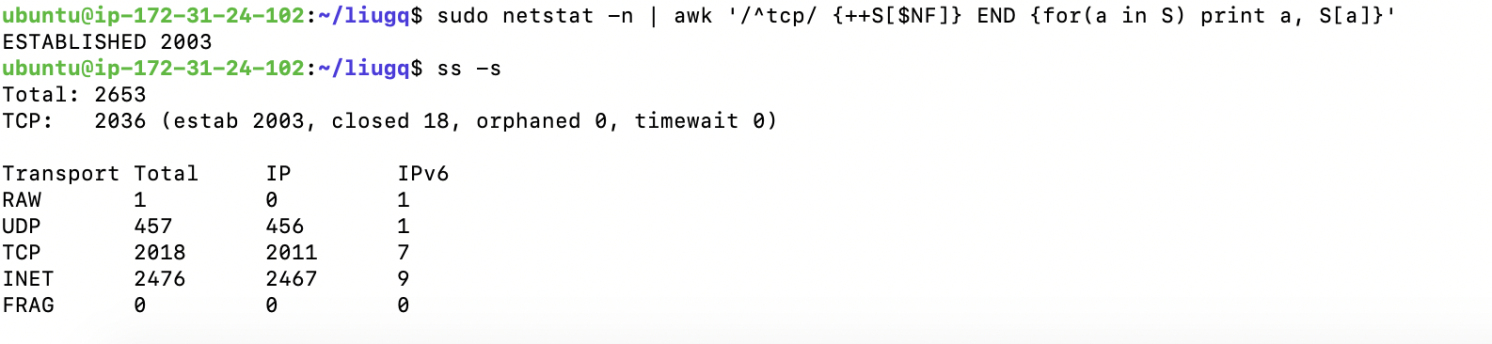
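iftop breaks traffic down per connection. To see how close a host is to the 10 Gbps listed in the hardware table, one can diff the kernel's per-interface byte counters in `/proc/net/dev` instead. Below is a minimal Python sketch. It assumes the standard `/proc/net/dev` layout: two header lines, then `iface:` followed by 8 receive counters and 8 transmit counters, with bytes first in each group. The helper names and the interface name `eth0` are illustrative.

```python
def parse_net_dev(text: str) -> dict:
    """Map interface name -> (rx_bytes, tx_bytes) from /proc/net/dev content."""
    stats = {}
    for line in text.splitlines()[2:]:  # skip the two header lines
        name, sep, data = line.partition(":")
        if not sep:
            continue
        nums = data.split()
        stats[name.strip()] = (int(nums[0]), int(nums[8]))  # rx bytes, tx bytes
    return stats


def throughput_gbps(before: dict, after: dict, iface: str, seconds: float) -> tuple:
    """Receive and transmit rates in Gbit/s for one interface between two samples."""
    rx0, tx0 = before[iface]
    rx1, tx1 = after[iface]
    to_gbps = 8 / 1e9 / seconds  # bytes -> bits -> gigabits, per second
    return (rx1 - rx0) * to_gbps, (tx1 - tx0) * to_gbps
```

Reading `/proc/net/dev` twice a known number of seconds apart and calling `throughput_gbps(s0, s1, "eth0", seconds)` gives the rates to compare against the link speed.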

Use netstat and ss to check TCP connection counts, as shown below:

```shell
sudo netstat -n | awk '/^tcp/ {++S[$NF]} END {for(a in S) print a, S[a]}'
```

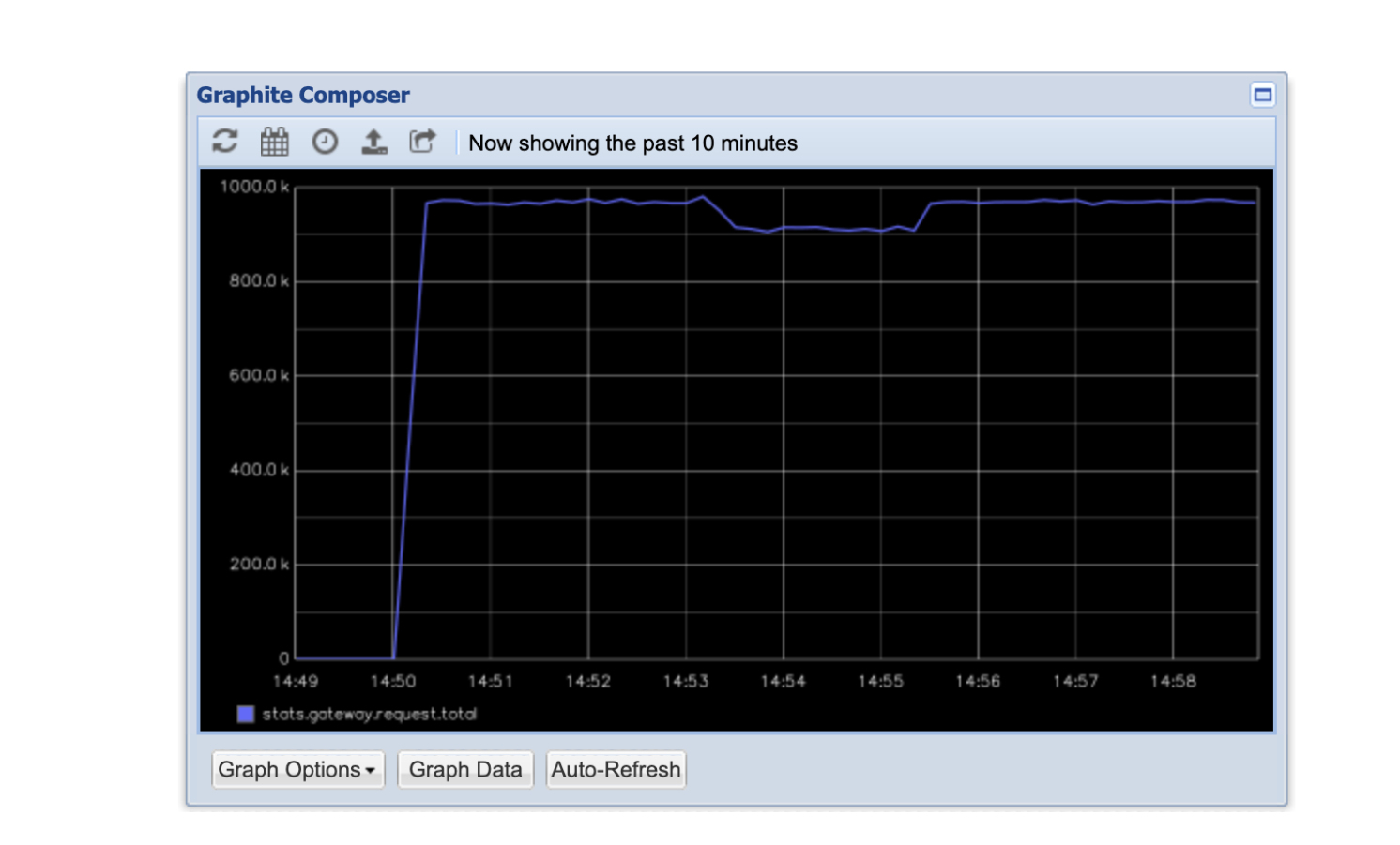

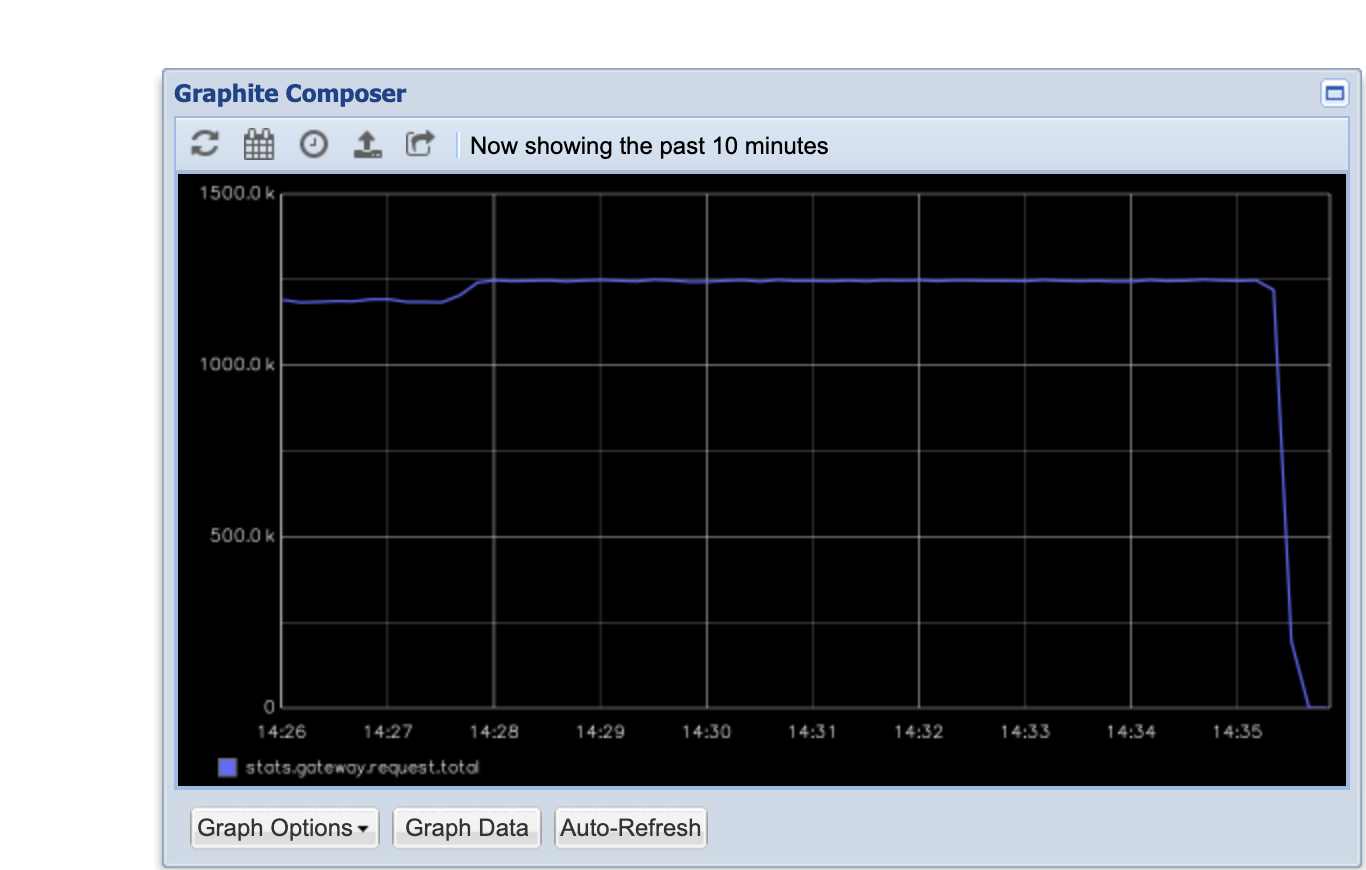
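The awk one-liner tallies TCP sockets by state. The same tally can be computed directly from the kernel's socket tables `/proc/net/tcp` and `/proc/net/tcp6`, where the fourth column (`st`) holds the state as a hex code. Below is a minimal Python sketch, assuming the state codes from the Linux kernel's `tcp_states.h`. Unlike `netstat -n` without `-a`, it also counts LISTEN sockets. The helper names are illustrative.

```python
from collections import Counter

# Hex codes of the 'st' column in /proc/net/tcp (Linux tcp_states.h).
TCP_STATES = {
    "01": "ESTABLISHED", "02": "SYN_SENT", "03": "SYN_RECV",
    "04": "FIN_WAIT1", "05": "FIN_WAIT2", "06": "TIME_WAIT",
    "07": "CLOSE", "08": "CLOSE_WAIT", "09": "LAST_ACK",
    "0A": "LISTEN", "0B": "CLOSING",
}


def count_states(table_text: str) -> Counter:
    """Tally TCP states from the contents of /proc/net/tcp or /proc/net/tcp6."""
    counts = Counter()
    for line in table_text.splitlines()[1:]:  # first line is the column header
        fields = line.split()
        if len(fields) > 3:
            st = fields[3].upper()
            counts[TCP_STATES.get(st, st)] += 1  # unknown codes are kept as hex
    return counts


def tcp_state_summary(paths=("/proc/net/tcp", "/proc/net/tcp6")) -> Counter:
    """IPv4 and IPv6 sockets combined, like netstat's tcp and tcp6 lines."""
    total = Counter()
    for path in paths:
        try:
            with open(path) as f:
                total += count_states(f.read())
        except FileNotFoundError:
            pass  # e.g. IPv6 disabled, or not running on Linux
    return total
```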

```shell
ss -s
```

View the QPS metric in Graphite (reported via statsd), as shown below:

Load testing the ARM gateway server

Start five gateway instances on gateway2:

```shell
./gateway-arm64 &
./gateway-arm64 &
./gateway-arm64 &
./gateway-arm64 &
./gateway-arm64 &
```

Check the gateway's response:

```shell
curl http://172.31.24.102:8000
```

Output:

```
{"message":"not found"}
```

Run the following on gateway1 and the test machine at the same time (1,000 concurrent connections for 10 minutes):

```shell
./http-loader -c 1000 -d 600 http://172.31.24.102:8000
```

Observe metrics on gateway2

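Use htop to check the system load, as shown below:

Use iftop to check network traffic, as shown below:

Use netstat and ss to check TCP connection counts, as shown below:

```shell
sudo netstat -n | awk '/^tcp/ {++S[$NF]} END {for(a in S) print a, S[a]}'
ss -s
```

View the QPS metric in Graphite (reported via statsd), as shown below: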

Here the QPS holds steady at about 1.25 million.
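The Graphite QPS curve is fed by the `statsd` section of gateway.yml (host 127.0.0.1, port 8125, namespace `gateway.`). The gateway sends counter increments over UDP to the graphite-statsd container. statsd aggregates them over each flush interval (10 seconds by default) and forwards per-second rates to Graphite. Below is a minimal Python sketch of the plain-text statsd counter format. The metric name `requests` is hypothetical, because the gateway's actual metric names are not listed in this article.

```python
import socket

STATSD_ADDR = ("127.0.0.1", 8125)  # statsd.host / statsd.port from gateway.yml
NAMESPACE = "gateway."             # statsd.namespace from gateway.yml


def statsd_counter(name: str, value: int = 1, sample_rate: float = 1.0) -> bytes:
    """Encode a counter increment in the plain-text statsd line format."""
    line = f"{NAMESPACE}{name}:{value}|c"
    if sample_rate < 1.0:
        line += f"|@{sample_rate}"  # statsd scales sampled counts back up
    return line.encode()


def send_counter(name: str, value: int = 1, addr=STATSD_ADDR) -> None:
    """Fire-and-forget UDP send; statsd never replies, so the sender never waits."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(statsd_counter(name, value), addr)
```

Because UDP sends are fire-and-forget, metrics reporting adds little latency to the request path. The trade-off is that packets can be dropped silently under heavy load.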

Load test summary

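On a single machine, the gateway reached over 950,000 QPS on x86 and 1.25 million QPS on ARM, which shows that its performance is excellent. For reasons of length, single-process gateway results are not included here; interested readers can download the gateway and test it themselves.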
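Normalizing the two headline figures by core count makes the comparison concrete. Both instance types have 32 cores according to the hardware table. The x86 figure is taken as 950,000, so the x86 values below slightly understate it:

$$
\frac{950{,}000}{32} \approx 29{,}700\ \text{QPS per core (x86)},\qquad
\frac{1{,}250{,}000}{32} \approx 39{,}100\ \text{QPS per core (ARM)},\qquad
\frac{1{,}250{,}000}{950{,}000} \approx 1.32
$$

In other words, the ARM host served roughly 30% more requests per second than the x86 host in these runs.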

Article URL: http://elasticsearch.cn/article/14174