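@[toc]

When setting up an Elastic Stack cluster, we tend to focus on building the cluster, optimizing indices, configuring shards, and other specific tuning parameters. Few people pay attention to how the Elastic Stack components log, probably on the assumption that logging is a generic concern that the default configuration already handles. Unfortunately, depending on the machine specification and the operations policy, the defaults can cause trouble.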

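Problems caused by the default configuration

The following examples show the default behavior after an Elastic Stack cluster has been running for more than three months: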

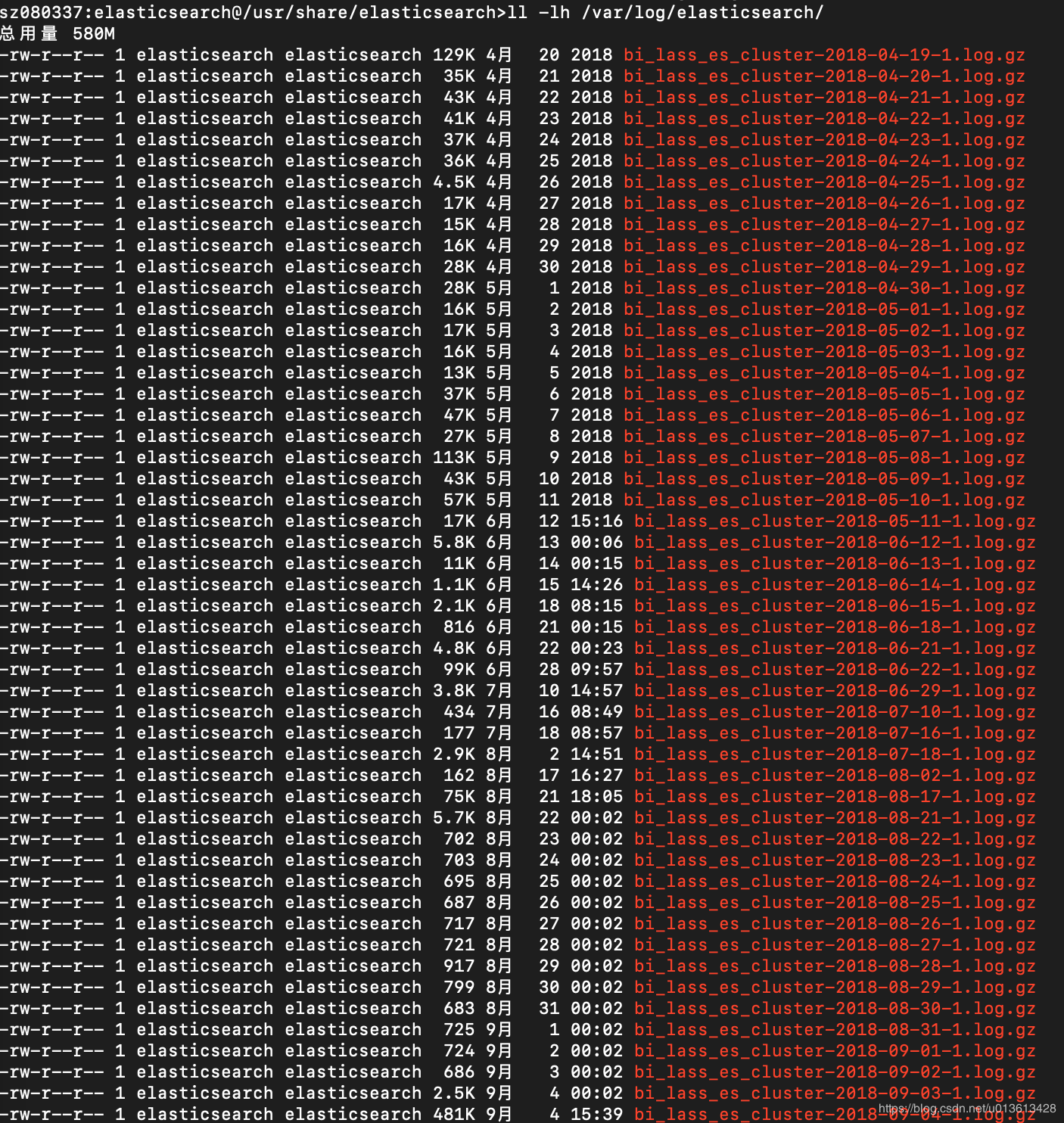

elasticsearch

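By default, Elasticsearch rolls to a new log file every day and only starts deleting the excess once the accumulated logs reach 2 GB. When each file is only a few tens of KB, the files simply keep accumulating.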
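To see why the 2 GB threshold rarely triggers on a quiet cluster, here is a rough back-of-the-envelope sketch. The 50 KB per day figure is an assumed illustration of "a few tens of KB", not a measurement, and the function name is made up for this example.

```python
def days_until_cleanup(threshold_bytes: int, bytes_per_day: int) -> int:
    """Approximate number of daily rolled files needed to reach the size threshold."""
    # Ceiling division without importing math.
    return -(-threshold_bytes // bytes_per_day)


TWO_GB = 2 * 1024 ** 3
ASSUMED_DAILY_SIZE = 50 * 1024  # assumption: about 50 KB of rolled log per day

days = days_until_cleanup(TWO_GB, ASSUMED_DAILY_SIZE)
print(f"{days} daily files (about {days / 365:.0f} years) before anything is deleted")
```

Under that assumption the size-based cleanup would effectively never run, which is why an age-based condition is a better fit for low-volume logs.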

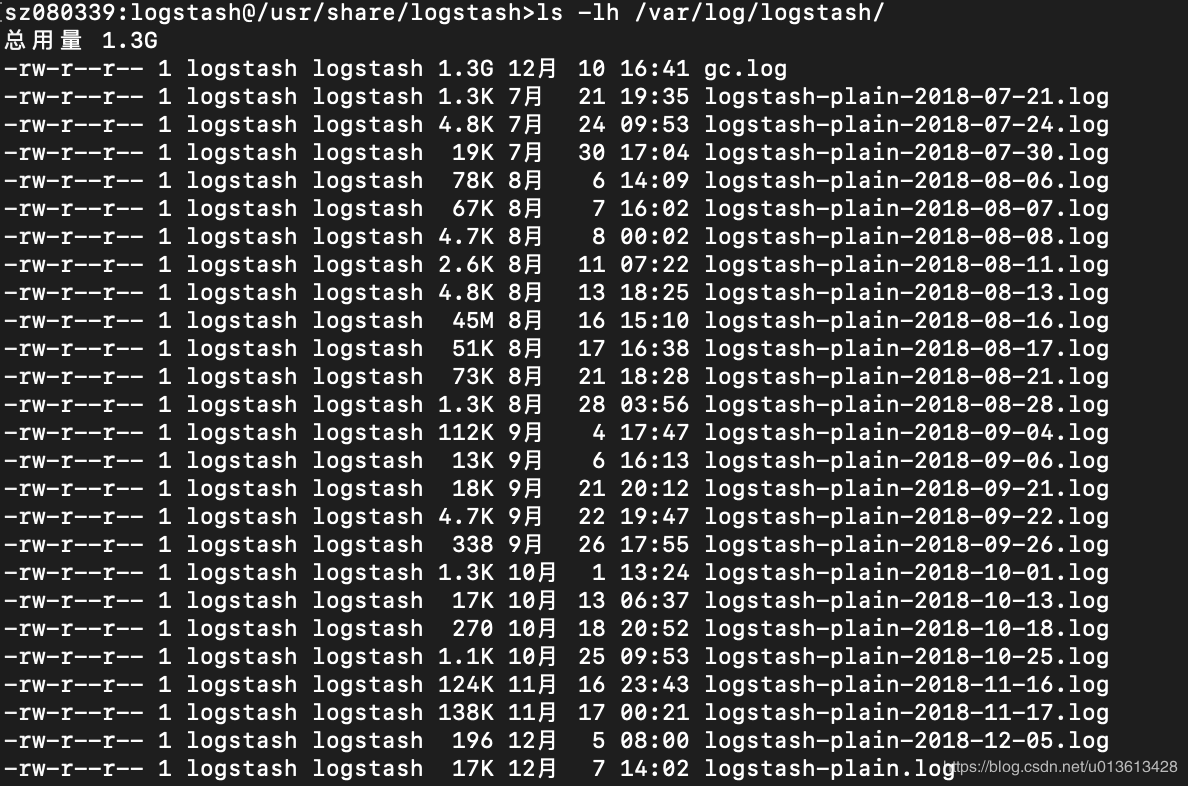

logstash

The GC log file keeps growing, and the number of rolled log files keeps increasing.

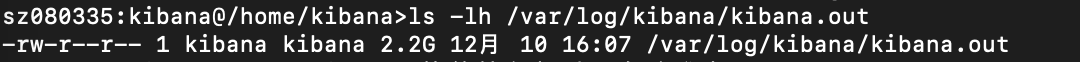

kibana

By default, logs are written to the kibana.out file, which keeps growing.

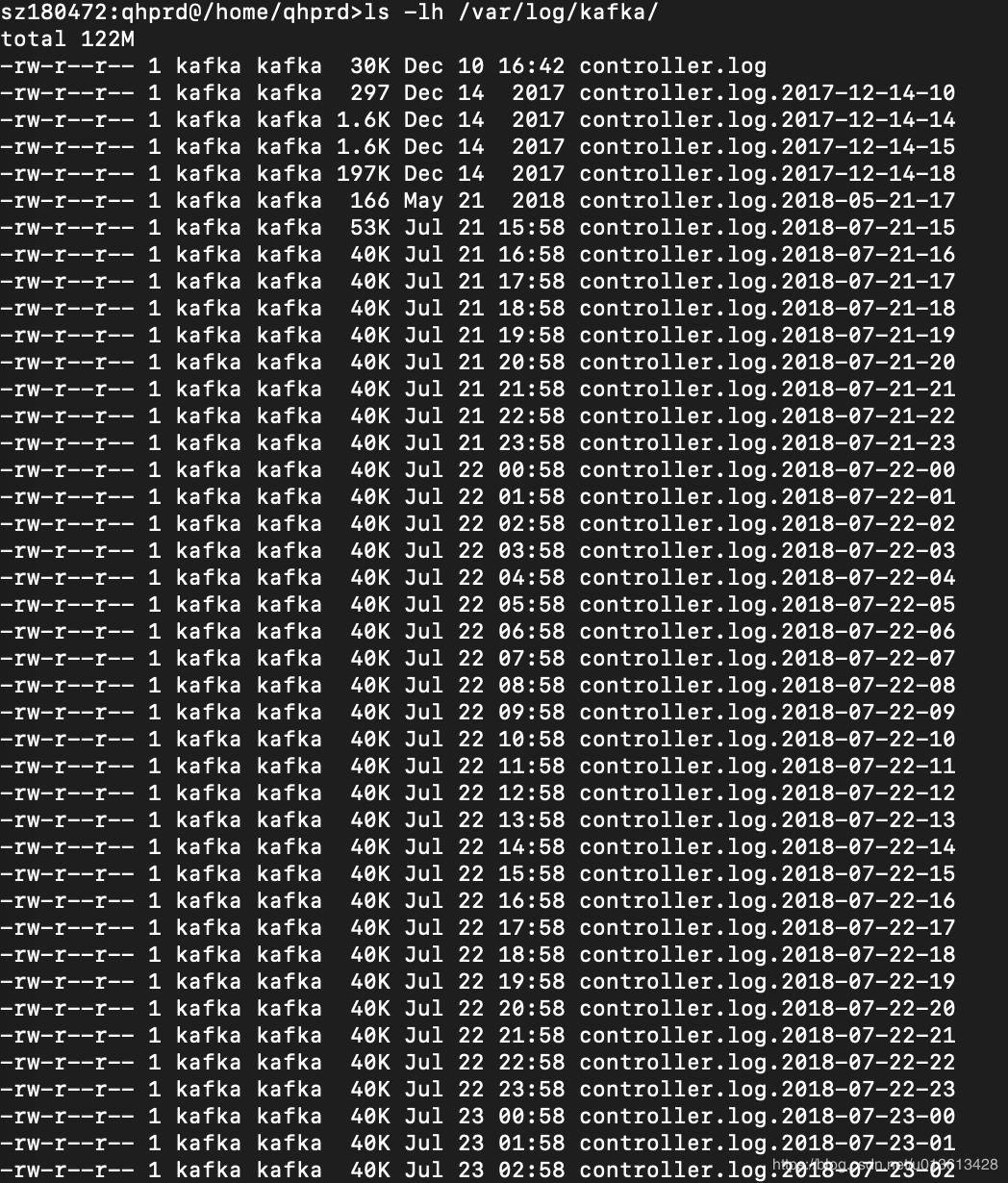

kafka

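Kafka is mentioned here because most architectures use it as a middleware buffer for data, so we end up maintaining a Kafka cluster as well. Without specific configuration it has the same logging problem: an ever-growing number of rolled log files.

The cause is that Kafka's default log4j configuration uses DailyRollingFileAppender with the date pattern '.'yyyy-MM-dd-HH, which produces a new file every hour: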

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.kafkaAppender=org.apache.log4j.DailyRollingFileAppender

log4j.appender.kafkaAppender.DatePattern='.'yyyy-MM-dd-HH

log4j.appender.kafkaAppender.File=${kafka.logs.dir}/server.log

log4j.appender.kafkaAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.kafkaAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.stateChangeAppender=org.apache.log4j.DailyRollingFileAppender

log4j.appender.stateChangeAppender.DatePattern='.'yyyy-MM-dd-HH

log4j.appender.stateChangeAppender.File=${kafka.logs.dir}/state-change.log

log4j.appender.stateChangeAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.stateChangeAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.requestAppender=org.apache.log4j.DailyRollingFileAppender

log4j.appender.requestAppender.DatePattern='.'yyyy-MM-dd-HH

log4j.appender.requestAppender.File=${kafka.logs.dir}/kafka-request.log

log4j.appender.requestAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.requestAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.cleanerAppender=org.apache.log4j.DailyRollingFileAppender

log4j.appender.cleanerAppender.DatePattern='.'yyyy-MM-dd-HH

log4j.appender.cleanerAppender.File=${kafka.logs.dir}/log-cleaner.log

log4j.appender.cleanerAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.cleanerAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.controllerAppender=org.apache.log4j.DailyRollingFileAppender

log4j.appender.controllerAppender.DatePattern='.'yyyy-MM-dd-HH

log4j.appender.controllerAppender.File=${kafka.logs.dir}/controller.log

log4j.appender.controllerAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.controllerAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.authorizerAppender=org.apache.log4j.DailyRollingFileAppender

log4j.appender.authorizerAppender.DatePattern='.'yyyy-MM-dd-HH

log4j.appender.authorizerAppender.File=${kafka.logs.dir}/kafka-authorizer.log

log4j.appender.authorizerAppender.layout=org.apache.log4j.PatternLayout

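log4j.appender.authorizerAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

Solution

So each of the components we maintain needs a sensible log rotation policy. A common choice combines time and size: rotate to a new log file every day or whenever the current file exceeds 256 MB, and keep at most 7 days of log files.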
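The time + size policy can be sketched in a few lines of Python. This only illustrates the decision logic; it is not how Log4j or logrotate implement it, and the function names and constants are hypothetical.

```python
from datetime import datetime, timedelta

MAX_SIZE_BYTES = 256 * 1024 * 1024   # rotate once the active file exceeds 256 MB
MAX_AGE = timedelta(days=7)          # keep rotated files for at most 7 days


def should_rotate(size_bytes: int, opened_at: datetime, now: datetime) -> bool:
    """Rotate when the calendar day has changed or the active file is too large."""
    return now.date() != opened_at.date() or size_bytes > MAX_SIZE_BYTES


def expired(last_modified: datetime, now: datetime) -> bool:
    """A rotated file is deleted once it is older than the retention window."""
    return now - last_modified > MAX_AGE


now = datetime(2019, 1, 10, 12, 0)  # arbitrary timestamp for the demo
print(should_rotate(10 * 1024, datetime(2019, 1, 9, 23, 0), now))        # new day
print(should_rotate(300 * 1024 ** 2, datetime(2019, 1, 10, 8, 0), now))  # too large
print(expired(datetime(2019, 1, 2, 12, 0), now))                         # 8 days old
```

Either trigger alone causes a rotation, so a busy day can produce several files, while the age check bounds how long any of them stays on disk.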

elasticsearch

This is solved by editing the log4j2.properties file, which is located in the /etc/elasticsearch directory (or the config directory).

The default configuration is:

appender.rolling.type = RollingFile

appender.rolling.name = rolling

appender.rolling.fileName = ${sys:es.logs.base_path}${sys:file.separator}${sys:es.logs.cluster_name}.log

appender.rolling.layout.type = PatternLayout

appender.rolling.layout.pattern = [%d{ISO8601}][%-5p][%-25c{1.}] [%node_name]%marker %.-10000m%n

appender.rolling.filePattern = ${sys:es.logs.base_path}${sys:file.separator}${sys:es.logs.cluster_name}-%d{yyyy-MM-dd}-%i.log.gz

appender.rolling.policies.type = Policies

appender.rolling.policies.time.type = TimeBasedTriggeringPolicy

appender.rolling.policies.time.interval = 1

appender.rolling.policies.time.modulate = true

appender.rolling.policies.size.type = SizeBasedTriggeringPolicy

appender.rolling.policies.size.size = 256MB

appender.rolling.strategy.type = DefaultRolloverStrategy

appender.rolling.strategy.fileIndex = nomax

appender.rolling.strategy.action.type = Delete

appender.rolling.strategy.action.basepath = ${sys:es.logs.base_path}

appender.rolling.strategy.action.condition.type = IfFileName

appender.rolling.strategy.action.condition.glob = ${sys:es.logs.cluster_name}-*

appender.rolling.strategy.action.condition.nested_condition.type = IfAccumulatedFileSize

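appender.rolling.strategy.action.condition.nested_condition.exceeds = 2GB

With this default configuration, up to 2 GB of logs are kept: old log files are deleted only once the accumulated log size exceeds 2 GB. We recommend changing it as follows so that only the last 7 days of logs are kept: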

appender.rolling.strategy.type = DefaultRolloverStrategy

appender.rolling.strategy.action.type = Delete

appender.rolling.strategy.action.basepath = ${sys:es.logs.base_path}

appender.rolling.strategy.action.condition.type = IfFileName

appender.rolling.strategy.action.condition.glob = ${sys:es.logs.cluster_name}-*

appender.rolling.strategy.action.condition.nested_condition.type = IfLastModified

appender.rolling.strategy.action.condition.nested_condition.age = 7D

Note that Log4j 2 may fail to recognize a setting if the line ends with trailing whitespace, so trim every line.
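Trailing whitespace is hard to spot by eye, so a small check can help before restarting a node. The following is a minimal sketch; the function name is hypothetical and the sample text deliberately contains a trailing space on its second line.

```python
from typing import List, Tuple


def find_trailing_whitespace(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, line) pairs for lines that end in spaces or tabs."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        if line != line.rstrip(" \t"):
            hits.append((number, line))
    return hits


sample = (
    "appender.rolling.strategy.action.condition.nested_condition.type = IfLastModified\n"
    "appender.rolling.strategy.action.condition.nested_condition.age = 7D \n"
)
for number, line in find_trailing_whitespace(sample):
    print(f"line {number}: trailing whitespace in {line!r}")
```

To check a real file, read its contents into a string and pass that string to the function instead of `sample`.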

logstash

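As with Elasticsearch, this is solved by editing the log4j2.properties file, which is located in the /etc/logstash directory (or the config directory).

The default configuration never deletes old logs: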

status = error

name = LogstashPropertiesConfig

appender.console.type = Console

appender.console.name = plain_console

appender.console.layout.type = PatternLayout

appender.console.layout.pattern = [%d{ISO8601}][%-5p][%-25c] %m%n

appender.json_console.type = Console

appender.json_console.name = json_console

appender.json_console.layout.type = JSONLayout

appender.json_console.layout.compact = true

appender.json_console.layout.eventEol = true

appender.rolling.type = RollingFile

appender.rolling.name = plain_rolling

appender.rolling.fileName = ${sys:ls.logs}/logstash-${sys:ls.log.format}.log

appender.rolling.filePattern = ${sys:ls.logs}/logstash-${sys:ls.log.format}-%d{yyyy-MM-dd}.log

appender.rolling.policies.type = Policies

appender.rolling.policies.time.type = TimeBasedTriggeringPolicy

appender.rolling.policies.time.interval = 1

appender.rolling.policies.time.modulate = true

appender.rolling.layout.type = PatternLayout

appender.rolling.layout.pattern = [%d{ISO8601}][%-5p][%-25c] %-.10000m%n

The following must be added manually:

appender.rolling.strategy.type = DefaultRolloverStrategy

appender.rolling.strategy.action.type = Delete

appender.rolling.strategy.action.basepath = ${sys:ls.logs}

appender.rolling.strategy.action.condition.type = IfFileName

# The glob is matched relative to basepath, so it omits the directory and ends with a wildcard

appender.rolling.strategy.action.condition.glob = logstash-${sys:ls.log.format}-*

appender.rolling.strategy.action.condition.nested_condition.type = IfLastModified

appender.rolling.strategy.action.condition.nested_condition.age = 7D

kibana

Kibana's configuration file offers only the following logging options:

logging.dest:

Default: stdout Enables you to specify a file where Kibana stores log output.

logging.quiet:

Default: false Set the value of this setting to true to suppress all logging output other than error messages.

logging.silent:

Default: false Set the value of this setting to true to suppress all logging output.

logging.verbose:

Default: false Set the value of this setting to true to log all events, including system usage information and all requests. Supported on Elastic Cloud Enterprise.

logging.timezone

Default: UTC Set to the canonical timezone id (e.g. US/Pacific) to log events using that timezone. A list of timezones can be referenced at https://en.wikipedia.org/wiki/List_of_tz_database_time_zones.

We can choose the output file and what gets logged, but log rotation cannot be configured. For that we need logrotate, a tool that most Linux distributions install by default. First, set the configuration file to generate a pid file:

pid.file: "pid.log"

Then edit /etc/logrotate.conf:

/var/log/kibana {

missingok

notifempty

sharedscripts

daily

rotate 7

copytruncate

postrotate

/bin/kill -HUP $(cat /usr/share/kibana/pid.log 2>/dev/null) 2>/dev/null

endscript

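}

kafka

If you would rather not write a script to clean up the excess files, edit config/log4j.properties: use RollingFileAppender instead of DailyRollingFileAppender, and set MaxFileSize and MaxBackupIndex. The modified file looks like this: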

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.kafkaAppender=org.apache.log4j.RollingFileAppender

log4j.appender.kafkaAppender.File=${kafka.logs.dir}/server.log

log4j.appender.kafkaAppender.MaxFileSize=10MB

log4j.appender.kafkaAppender.MaxBackupIndex=10

log4j.appender.kafkaAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.kafkaAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.stateChangeAppender=org.apache.log4j.RollingFileAppender

log4j.appender.stateChangeAppender.File=${kafka.logs.dir}/state-change.log

log4j.appender.stateChangeAppender.MaxFileSize=10MB

log4j.appender.stateChangeAppender.MaxBackupIndex=10

log4j.appender.stateChangeAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.stateChangeAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.requestAppender=org.apache.log4j.RollingFileAppender

log4j.appender.requestAppender.File=${kafka.logs.dir}/kafka-request.log

log4j.appender.requestAppender.MaxFileSize=10MB

log4j.appender.requestAppender.MaxBackupIndex=10

log4j.appender.requestAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.requestAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.cleanerAppender=org.apache.log4j.RollingFileAppender

log4j.appender.cleanerAppender.File=${kafka.logs.dir}/log-cleaner.log

log4j.appender.cleanerAppender.MaxFileSize=10MB

log4j.appender.cleanerAppender.MaxBackupIndex=10

log4j.appender.cleanerAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.cleanerAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.controllerAppender=org.apache.log4j.RollingFileAppender

log4j.appender.controllerAppender.File=${kafka.logs.dir}/controller.log

log4j.appender.controllerAppender.MaxFileSize=10MB

log4j.appender.controllerAppender.MaxBackupIndex=10

log4j.appender.controllerAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.controllerAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.authorizerAppender=org.apache.log4j.RollingFileAppender

log4j.appender.authorizerAppender.File=${kafka.logs.dir}/kafka-authorizer.log

log4j.appender.authorizerAppender.MaxFileSize=10MB

log4j.appender.authorizerAppender.MaxBackupIndex=10

log4j.appender.authorizerAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.authorizerAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

# Turn on all our debugging info

#log4j.logger.kafka.producer.async.DefaultEventHandler=DEBUG, kafkaAppender

#log4j.logger.kafka.client.ClientUtils=DEBUG, kafkaAppender

#log4j.logger.kafka.perf=DEBUG, kafkaAppender

#log4j.logger.kafka.perf.ProducerPerformance$ProducerThread=DEBUG, kafkaAppender

#log4j.logger.org.I0Itec.zkclient.ZkClient=DEBUG

log4j.logger.kafka=INFO, kafkaAppender

log4j.logger.kafka.network.RequestChannel$=WARN, requestAppender

log4j.additivity.kafka.network.RequestChannel$=false

#log4j.logger.kafka.network.Processor=TRACE, requestAppender

#log4j.logger.kafka.server.KafkaApis=TRACE, requestAppender

#log4j.additivity.kafka.server.KafkaApis=false

log4j.logger.kafka.request.logger=WARN, requestAppender

log4j.additivity.kafka.request.logger=false

log4j.logger.kafka.controller=TRACE, controllerAppender

log4j.additivity.kafka.controller=false

log4j.logger.kafka.log.LogCleaner=INFO, cleanerAppender

log4j.additivity.kafka.log.LogCleaner=false

log4j.logger.state.change.logger=TRACE, stateChangeAppender

log4j.additivity.state.change.logger=false

#Change this to debug to get the actual audit log for authorizer.

log4j.logger.kafka.authorizer.logger=WARN, authorizerAppender

log4j.additivity.kafka.authorizer.logger=false

Article URL: http://elasticsearch.cn/article/6189