The data in RabbitMQ looks like this:

{"host":{"serviceName":"streamService2","address":"192.168.127.1","port":9983},"spans":[{"begin":1500877798555,"end":1500877798821,"name":"http:/service1","traceId":777698211216806359,"parents":[777698211216806359],"spanId":4799424895103297227,"remote":true,"exportable":true,"logs":[{"timestamp":1500877798557,"event":"sr"},{"timestamp":1500877798821,"event":"ss"}]},{"begin":1500877798556,"end":1500877798821,"name":"http:/service1","traceId":777698211216806359,"parents":[4799424895103297227],"spanId":-3738027106310948226,"exportable":true,"tags":{"http.url":"http://localhost:9983/service1","http.host":"localhost","http.path":"/service1","http.method":"GET"}}]}

The Logstash configuration is as follows:

input {
  rabbitmq {
    host => "10.129.41.82"
    subscription_retry_interval_seconds => "5"
    vhost => "/"
    exchange => "sleuth"
    queue => "sleuth.sleuth"
    durable => "true"
    key => "#"
    user => "test"
    password => "test"
  }
}

filter {
  json {
    source => "message"
    target => "mqdata"
  }
}

output {
  #stdout {
  #  codec => rubydebug
  #}
  elasticsearch {
    hosts => "10.129.39.154"
    index => "logstash-mq-%{+YYYY.MM.dd}"
    document_type => "mq"
    workers => 10
    template_overwrite => true
  }
}

5 replies

logstashHard

Logstash configuration file:

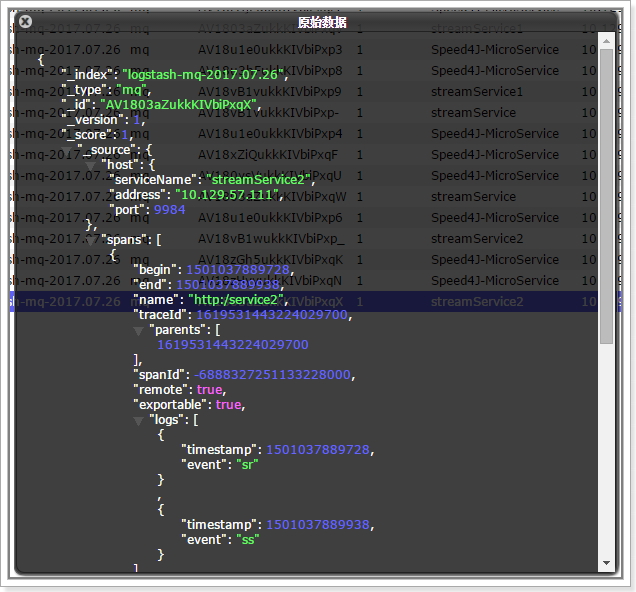

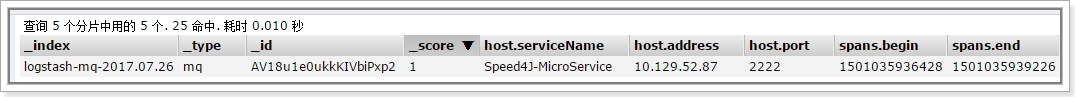

The final result as displayed in ES is as follows:

logstashHard

logstashHard

I found that it still has no effect at all; the data is still in its original format.

logstashHard

But now the data format has changed, and I suddenly have no idea how to handle some of the later processing.

medcl - Tonight we hunt the tiger.

By default it should work without adding the JSON filter at all. What happens if you add this to the RabbitMQ input:

codec => "json"
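A minimal sketch of that suggestion, reusing the connection settings from the question. The stdout output is only an assumption for checking the parsed event before sending it on to Elasticsearch. With the JSON decoded at the input stage, the payload's keys (such as `spans`) should appear as fields on the event itself instead of nested under `mqdata`, so any later processing would need to reference them by those names.

```conf
input {
  rabbitmq {
    host => "10.129.41.82"
    subscription_retry_interval_seconds => "5"
    vhost => "/"
    exchange => "sleuth"
    queue => "sleuth.sleuth"
    durable => "true"
    key => "#"
    user => "test"
    password => "test"
    # Decode each message body as JSON when it is read from the queue
    codec => "json"
  }
}

# No json filter block here: the codec has already parsed the payload.

output {
  # Print the full event structure to the console for inspection
  stdout {
    codec => rubydebug
  }
}
```

Once the event looks right in the console, the stdout block can be swapped back for the elasticsearch output from the original configuration.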