[2019-12-18T03:05:27,812][INFO ][logstash.javapipeline ] Pipeline terminated {"pipeline.id"=>".monitoring-logstash"}

[2019-12-18T03:05:32,700][WARN ][org.logstash.execution.ShutdownWatcherExt] {"inflight_count"=>0, "stalling_threads_info"=>{"other"=>[{"thread_id"=>26, "name"=>"[main]<beats", "current_call"=>"[...]/vendor/bundle/jruby/2.5.0/gems/logstash-input-beats-6.0.3-java/lib/logstash/inputs/beats.rb:204:in `run'"}, {"thread_id"=>22, "name"=>"[main]>worker0", "current_call"=>"[...]/vendor/bundle/jruby/2.5.0/gems/stud-0.0.23/lib/stud/interval.rb:89:in `sleep'"}, {"thread_id"=>23, "name"=>"[main]>worker1", "current_call"=>"[...]/vendor/bundle/jruby/2.5.0/gems/stud-0.0.23/lib/stud/interval.rb:89:in `sleep'"}, {"thread_id"=>24, "name"=>"[main]>worker2", "current_call"=>"[...]/vendor/bundle/jruby/2.5.0/gems/stud-0.0.23/lib/stud/interval.rb:89:in `sleep'"}, {"thread_id"=>25, "name"=>"[main]>worker3", "current_call"=>"[...]/vendor/bundle/jruby/2.5.0/gems/stud-0.0.23/lib/stud/interval.rb:89:in `sleep'"}]}}

[2019-12-18T03:05:32,702][ERROR][org.logstash.execution.ShutdownWatcherExt] The shutdown process appears to be stalled due to busy or blocked plugins. Check the logs for more information.

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by com.headius.backport9.modules.Modules (file:/usr/share/logstash/logstash-core/lib/jars/jruby-complete-9.2.8.0.jar) to field java.io.FileDescriptor.fd

WARNING: Please consider reporting this to the maintainers of com.headius.backport9.modules.Modules

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

Thread.exclusive is deprecated, use Thread::Mutex

Sending Logstash logs to /usr/share/logstash/logs which is now configured via log4j2.properties

[2019-12-18T03:05:50,006][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2019-12-18T03:05:50,014][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"7.5.0"}

[2019-12-18T03:05:50,935][INFO ][logstash.licensechecker.licensereader] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://logstash_internal:xxxxxx@11.5.0.75:9200/, http://logstash_internal:xxxxxx@11.5.0.75:9300/, http://logstash_internal:xxxxxx@11.5.0.75:9400/]}}

[2019-12-18T03:05:51,151][WARN ][logstash.licensechecker.licensereader] Restored connection to ES instance {:url=>"http://logstash_internal:xxxxx ... ot%3B}

[2019-12-18T03:05:51,187][INFO ][logstash.licensechecker.licensereader] ES Output version determined {:es_version=>7}

[2019-12-18T03:05:51,189][WARN ][logstash.licensechecker.licensereader] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>7}

[2019-12-18T03:05:51,232][WARN ][logstash.licensechecker.licensereader] Restored connection to ES instance {:url=>"http://logstash_internal:xxxxx ... ot%3B}

[2019-12-18T03:05:51,285][WARN ][logstash.licensechecker.licensereader] Restored connection to ES instance {:url=>"http://logstash_internal:xxxxx ... ot%3B}

[2019-12-18T03:05:51,414][INFO ][logstash.monitoring.internalpipelinesource] Monitoring License OK

[2019-12-18T03:05:51,415][INFO ][logstash.monitoring.internalpipelinesource] Validated license for monitoring. Enabling monitoring pipeline.

[2019-12-18T03:05:52,564][INFO ][org.reflections.Reflections] Reflections took 31 ms to scan 1 urls, producing 20 keys and 40 values

[2019-12-18T03:05:52,878][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://logstash_internal:xxxxxx@11.5.0.75:9200/, http://logstash_internal:xxxxxx@11.5.0.75:9300/, http://logstash_internal:xxxxxx@11.5.0.75:9400/]}}

[2019-12-18T03:05:52,994][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://logstash_internal:xxxxx ... ot%3B}

[2019-12-18T03:05:53,002][INFO ][logstash.outputs.elasticsearch] ES Output version determined {:es_version=>7}

[2019-12-18T03:05:53,003][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>7}

[2019-12-18T03:05:53,038][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://logstash_internal:xxxxx ... ot%3B}

[2019-12-18T03:05:53,078][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://logstash_internal:xxxxx ... ot%3B}

[2019-12-18T03:05:53,122][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["http://11.5.0.75:9200", "http://11.5.0.75:9300", "http://11.5.0.75:9400"]}

[2019-12-18T03:05:53,173][INFO ][logstash.outputs.elasticsearch] Using default mapping template

[2019-12-18T03:05:53,189][WARN ][org.logstash.instrument.metrics.gauge.LazyDelegatingGauge] A gauge metric of an unknown type (org.jruby.specialized.RubyArrayOneObject) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.

[2019-12-18T03:05:53,192][INFO ][logstash.javapipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, "pipeline.sources"=>["/usr/share/logstash/config/logstash.conf"], :thread=>"#<Thread:0x7aaa6383 run>"}

[2019-12-18T03:05:53,253][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"index_patterns"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s", "number_of_shards"=>1}, "mappings"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}

[2019-12-18T03:05:53,672][INFO ][logstash.inputs.beats ] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}

[2019-12-18T03:05:53,683][INFO ][logstash.javapipeline ] Pipeline started {"pipeline.id"=>"main"}

[2019-12-18T03:05:53,763][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2019-12-18T03:05:53,789][INFO ][org.logstash.beats.Server] Starting server on port: 5044

[2019-12-18T03:05:54,571][WARN ][logstash.outputs.elasticsearch] You are using a deprecated config setting "document_type" set in elasticsearch. Deprecated settings will continue to work, but are scheduled for removal from logstash in the future. Document types are being deprecated in Elasticsearch 6.0, and removed entirely in 7.0. You should avoid this feature If you have any questions about this, please visit the #logstash channel on freenode irc. {:name=>"document_type", :plugin=><LogStash::Outputs::ElasticSearch bulk_path=>"/_monitoring/bulk?system_id=logstash&system_api_version=7&interval=1s", password=><password>, hosts=>[http://11.5.0.75:9200, http://11.5.0.75:9300, http://11.5.0.75:9400], sniffing=>false, manage_template=>false, id=>"deae027919f5ef1882789000c6a25a5d167dfce45722e4735a81864a8b0dd062", user=>"logstash_internal", document_type=>"%{[@metadata][document_type]}", enable_metric=>true, codec=><LogStash::Codecs::Plain id=>"plain_bf874fe4-d4ea-4159-911d-3da2dcb5f2ff", enable_metric=>true, charset=>"UTF-8">, workers=>1, template_name=>"logstash", template_overwrite=>false, doc_as_upsert=>false, script_type=>"inline", script_lang=>"painless", script_var_name=>"event", scripted_upsert=>false, retry_initial_interval=>2, retry_max_interval=>64, retry_on_conflict=>1, ilm_enabled=>"auto", ilm_rollover_alias=>"logstash", ilm_pattern=>"{now/d}-000001", ilm_policy=>"logstash-policy", action=>"index", ssl_certificate_verification=>true, sniffing_delay=>5, timeout=>60, pool_max=>1000, pool_max_per_route=>100, resurrect_delay=>5, validate_after_inactivity=>10000, http_compression=>false>}

[2019-12-18T03:05:54,595][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://logstash_internal:xxxxxx@11.5.0.75:9200/, http://logstash_internal:xxxxxx@11.5.0.75:9300/, http://logstash_internal:xxxxxx@11.5.0.75:9400/]}}

[2019-12-18T03:05:54,603][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://logstash_internal:xxxxx ... ot%3B}

[2019-12-18T03:05:54,613][INFO ][logstash.outputs.elasticsearch] ES Output version determined {:es_version=>7}

[2019-12-18T03:05:54,613][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>7}

[2019-12-18T03:05:54,671][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://logstash_internal:xxxxx ... ot%3B}

[2019-12-18T03:05:54,717][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://logstash_internal:xxxxx ... ot%3B}

[2019-12-18T03:05:54,779][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["http://11.5.0.75:9200", "http://11.5.0.75:9300", "http://11.5.0.75:9400"]}

[2019-12-18T03:05:54,783][INFO ][logstash.javapipeline ] Starting pipeline {:pipeline_id=>".monitoring-logstash", "pipeline.workers"=>1, "pipeline.batch.size"=>2, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>2, "pipeline.sources"=>["monitoring pipeline"], :thread=>"#<Thread:0x70698f4a run>"}

[2019-12-18T03:05:54,821][INFO ][logstash.javapipeline ] Pipeline started {"pipeline.id"=>".monitoring-logstash"}

[2019-12-18T03:05:54,858][INFO ][logstash.agent ] Pipelines running {:count=>2, :running_pipelines=>[:".monitoring-logstash", :main], :non_running_pipelines=>[]}

[2019-12-18T03:05:55,101][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

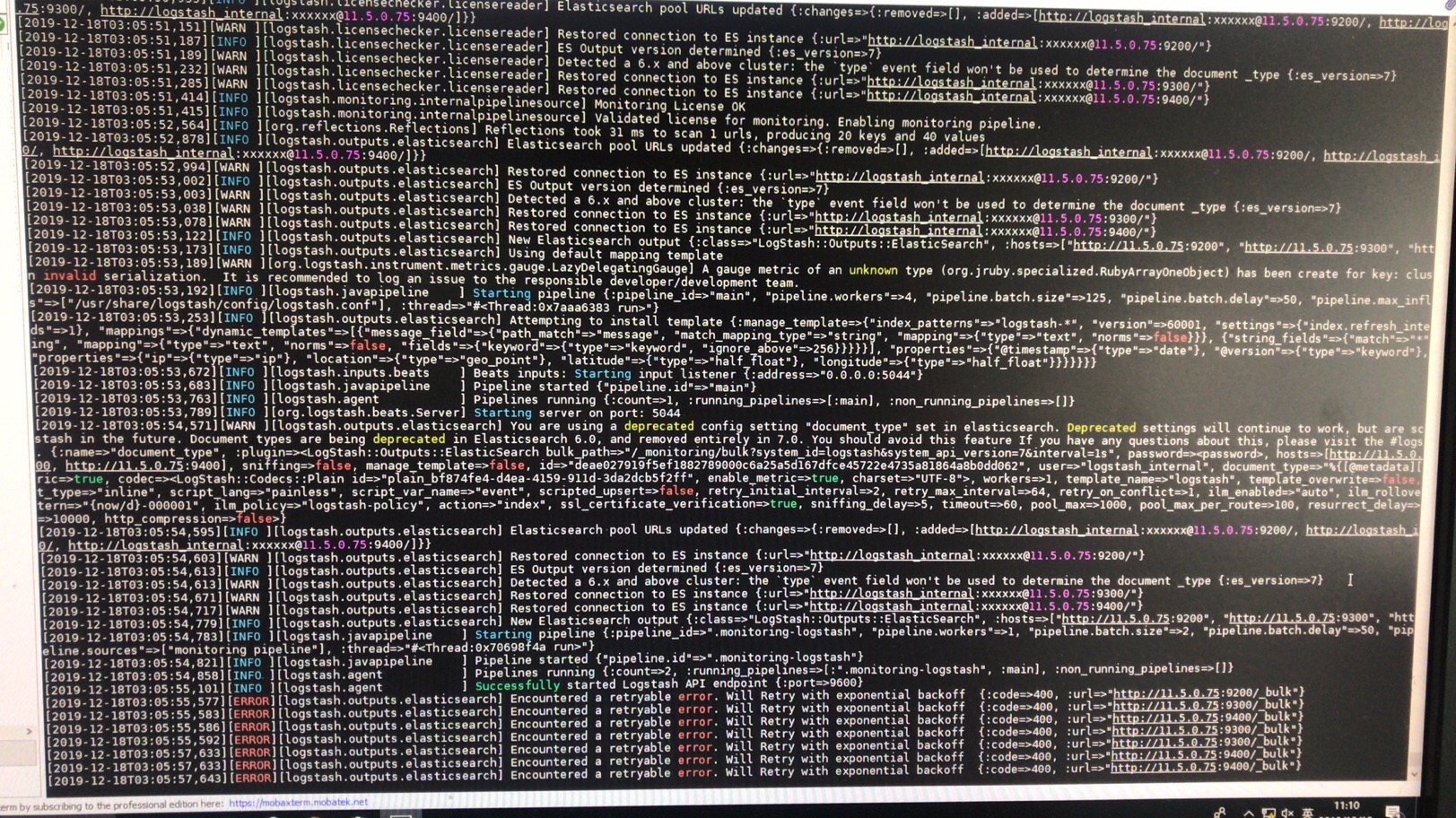

[2019-12-18T03:05:55,577][ERROR][logstash.outputs.elasticsearch] Encountered a retryable error. Will Retry with exponential backoff {:code=>400, :url=>"http://11.5.0.75:9200/_bulk"}

[2019-12-18T03:05:55,583][ERROR][logstash.outputs.elasticsearch] Encountered a retryable error. Will Retry with exponential backoff {:code=>400, :url=>"http://11.5.0.75:9300/_bulk"}

[2019-12-18T03:05:55,586][ERROR][logstash.outputs.elasticsearch] Encountered a retryable error. Will Retry with exponential backoff {:code=>400, :url=>"http://11.5.0.75:9400/_bulk"}

[2019-12-18T03:05:55,592][ERROR][logstash.outputs.elasticsearch] Encountered a retryable error. Will Retry with exponential backoff {:code=>400, :url=>"http://11.5.0.75:9300/_bulk"}

[2019-12-18T03:05:57,633][ERROR][logstash.outputs.elasticsearch] Encountered a retryable error. Will Retry with exponential backoff {:code=>400, :url=>"http://11.5.0.75:9300/_bulk"}

[2019-12-18T03:05:57,633][ERROR][logstash.outputs.elasticsearch] Encountered a retryable error. Will Retry with exponential backoff {:code=>400, :url=>"http://11.5.0.75:9400/_bulk"}

[2019-12-18T03:05:57,643][ERROR][logstash.outputs.elasticsearch] Encountered a retryable error. Will Retry with exponential backoff {:code=>400, :url=>"http://11.5.0.75:9400/_bulk"}

[2019-12-18T03:05:57,644][ERROR][logstash.outputs.elasticsearch] Encountered a retryable error. Will Retry with exponential backoff {:code=>400, :url=>"http://11.5.0.75:9200/_bulk"}
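In the log above, the same `:code=>400` comes back from all three hosts (ports 9200, 9300 and 9400). To confirm that pattern over a longer log, you could tally the retry lines by code and URL. This is a minimal sketch using only the Python standard library. It assumes the retry lines keep exactly the format shown here, and the log file path passed on the command line is up to you. If every node rejects the batch with 400, that hints the bulk request itself is being refused rather than one node being unhealthy. Treat this as an interpretation, not a confirmed diagnosis.

```python
import re
import sys
from collections import Counter

# Assumes the retry-line format printed by logstash.outputs.elasticsearch above:
# ... Encountered a retryable error. ... {:code=>400, :url=>"http://host:port/_bulk"}
RETRY_RE = re.compile(
    r'Encountered a retryable error.*?:code=>(\d+), :url=>"([^"]+)"'
)


def tally_retries(lines):
    """Count (HTTP code, bulk URL) pairs across retryable-error log lines."""
    counts = Counter()
    for line in lines:
        match = RETRY_RE.search(line)
        if match:
            counts[(int(match.group(1)), match.group(2))] += 1
    return counts


if __name__ == "__main__":
    # Usage: python tally_retries.py <path-to-logstash-log>
    with open(sys.argv[1], encoding="utf-8") as log_file:
        for (code, url), n in sorted(tally_retries(log_file).items()):
            print(f"{code} {url} x{n}")
```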

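Logstash reports errors at startup and cannot write to ES. The roles and user are configured correctly, but after startup it keeps logging this error: [2019-12-18T03:05:57,644][ERROR][logstash.outputs.elasticsearch] Encountered a retryable error. Will Retry with exponential backoff {:code=>400, :url=>"http://11.5.0.75:9200/_bulk"}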
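The retry lines only show `:code=>400` and the URL, not the reason Elasticsearch gave. One way to see that reason is to send a one-document `_bulk` request yourself with the same user and print the error body. This is a minimal sketch using only the Python standard library, and several of its values are hypothetical:

- `PASSWORD` is a placeholder.
- `logstash-bulk-probe` is a made-up test index name. It was chosen on the assumption that the `logstash_internal` role grants write access to `logstash-*`.
- Only the 9200 host is probed. Point `ES_URL` at the other hosts as needed.

```python
import base64
import json
import urllib.error
import urllib.request

# Hypothetical values: adjust to your cluster and credentials.
ES_URL = "http://11.5.0.75:9200"
USER = "logstash_internal"
PASSWORD = "changeme"          # placeholder, not the real password
INDEX = "logstash-bulk-probe"  # hypothetical test index


def build_bulk_body(index, docs):
    """Build an NDJSON _bulk body: one action line plus one source line per doc."""
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(doc))
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


def describe_bulk_errors(status, body):
    """Collect readable error strings from a parsed _bulk response.

    A request-level rejection carries a top-level "error" object;
    per-document failures appear under "items".
    """
    problems = []
    if "error" in body:
        err = body["error"]
        if isinstance(err, dict):
            problems.append(f"request {status}: {err.get('type')}: {err.get('reason')}")
        else:
            problems.append(f"request {status}: {err}")
    for item in body.get("items", []):
        # Each item is keyed by its action name, e.g. {"index": {...}}.
        for result in item.values():
            if "error" in result:
                e = result["error"]
                problems.append(f"item {result.get('status')}: {e.get('type')}: {e.get('reason')}")
    return problems


def send_bulk(url, user, password, payload):
    """POST a _bulk payload with basic auth; return (HTTP status, parsed JSON body)."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        url + "/_bulk",
        data=payload.encode("utf-8"),
        headers={
            "Content-Type": "application/x-ndjson",
            "Authorization": "Basic " + token,
        },
        method="POST",
    )
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as exc:
        # Non-2xx responses still carry a JSON body describing the rejection.
        return exc.code, json.loads(exc.read())


if __name__ == "__main__":
    payload = build_bulk_body(INDEX, [{"message": "bulk probe"}])
    status, body = send_bulk(ES_URL, USER, PASSWORD, payload)
    print(status, describe_bulk_errors(status, body) or "no errors reported")
```

With the real password filled in, the printed `type` and `reason` should give a more specific hint than the bare 400 in the Logstash log.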

3 replies

liuxg - Elastic

Upvoted by: artcode

locatelli

artcode
