语言检测

Elasticsearch 7.6 利用机器学习来检测文本语言

Elasticsearch • medcl 发表了文章 • 0 个评论 • 5182 次浏览 • 2020-03-07 13:22

Elasticsearch 新近发布的 7.6 版本里面包含了很多激动人心的功能,而最让我感兴趣的是利用机器学习来自动检测语言的功能。

功能初探

检测文本语言本身不是什么稀奇事,之前做爬虫的时候,就做过对网页正文进行语言的检测,有很多成熟的方案,而最好的就属 Google Chrome 团队开源的 CLD 系列(全名:Compact Language Detector)了,能够检测多达 80 种各种语言,我用过CLD2,是基于 C++ 贝叶斯分类器实现的,而 CLD3 则是基于神经网络实现的,无疑更加准确,这次 Elasticsearch 将这个非常小的功能也直接集成到了默认的发行包里面,对于使用者来说可以说是带来很大的方便。

多语言的痛点

相信很多朋友,在实际的业务场景中,对碰到过一个字段同时存在多个语种的文本内容的情况,尤其是出海的产品,比如类似大众点评的 APP 吧,一个餐馆下面,来自七洲五湖四海的朋友都来品尝过了,自然要留下点评语是不,德国的朋友使用的是德语,法国的朋友使用的是法语,广州的朋友用的是粤语,那对于开发这个 APP 的后台工程师可就犯难了,如果这些评论都存在一个字段里面,就不好设置一个统一的分词器,因为不同的语言他们的切分规则肯定是不一样的,最简单的例子,比如中文和英文,设置的分词不对,查询结果就会不精准。

相信也有很多人用过这样的解决方案,既然一个字段搞不定,那就把这个字段复制几份,英文字段存一份,中文字段存一份,用 MultiField 来做,这样虽然可以解决一部分问题,但是同样会带来容量和资源的浪费,和查询时候具体该选择哪个字段来参与查询的挑战。

而利用 7.6 的这个新功能,可以在创建索引的时候,可以自动的根据内容进行推理,从而影响索引文档的构成,进而做到特定的文本进特定的字段,从而提升查询体验和性能,关于这个功能,Elastic 官网这里也有一篇博客2,提供了详细的例子。

实战上手

看上去不错,但是鲁迅说过,网上得来终觉浅,觉知此事要躬行,来, 今天一起跑一遍看看具体怎么个用法。

功能剖析

首先,这个功能叫 Language identification,是机器学习的一个 Feature,但是不能单独使用,要结合 Ingest Node 的一个 inference ingest processor 来使用,Ingest processor 是在 Elasticsearch 里面运行的数据预处理器,部分功能类似于 Logstash 的数据解析,对于简单数据操作场景,完全可以替代掉 Logstash,简化部署架构。

Elasticsearch 在 7.6 的包里面,默认打包了提前训练好的机器学习模型,就是 Language identification 需要调用的语言检测模型,名称是固定的 lang_ident_model_1,这也是 Elasticsearch 自带的第一个模型包,大家了解一下就好。

那这个模型包在什么位置呢,我们来解刨一下:

$unzip /usr/share/elasticsearch/modules/x-pack-ml/x-pack-ml-7.6.0.jar

$/org/elasticsearch/xpack/ml/inference$ tree

.

|-- ingest

| |-- InferenceProcessor$Factory.class

| `-- InferenceProcessor.class

|-- loadingservice

| |-- LocalModel$1.class

| |-- LocalModel.class

| |-- Model.class

| `-- ModelLoadingService.class

`-- persistence

|-- InferenceInternalIndex.class

|-- TrainedModelDefinitionDoc$1.class

|-- TrainedModelDefinitionDoc$Builder.class

|-- TrainedModelDefinitionDoc.class

|-- TrainedModelProvider.class

`-- lang_ident_model_1.json

3 directories, 12 files可以看到,在 persistence 目录就有这个模型包,是 json 格式的,里面有个压缩的二进制编码后的字段。

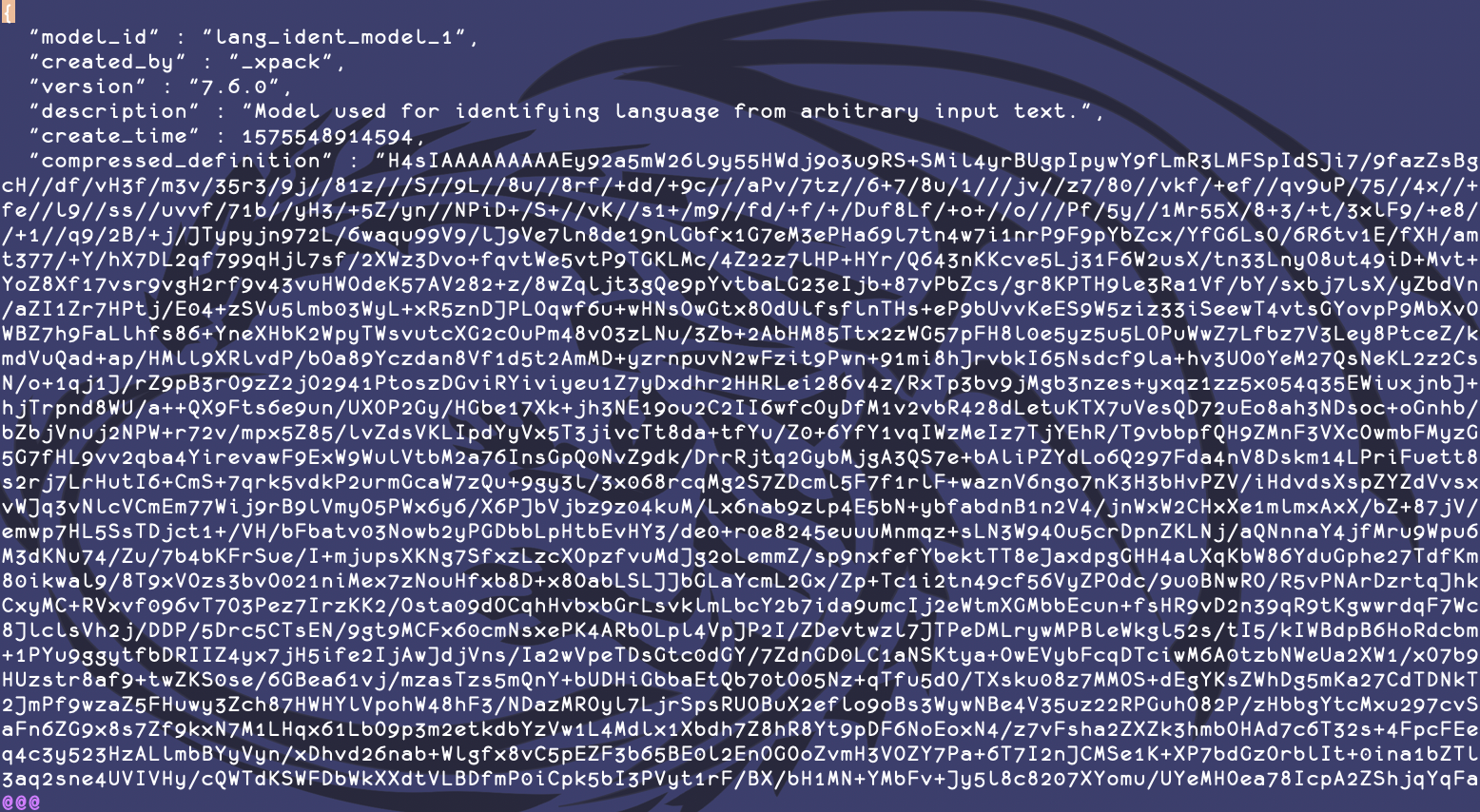

查看模型信息

我们还可以通过新的 API 来获取这个模型信息,以后模型多了之后会比较有用:

GET _ml/inference/lang_ident_model_1

{

"count" : 1,

"trained_model_configs" : [

{

"model_id" : "lang_ident_model_1",

"created_by" : "_xpack",

"version" : "7.6.0",

"description" : "Model used for identifying language from arbitrary input text.",

"create_time" : 1575548914594,

"tags" : [

"lang_ident",

"prepackaged"

],

"input" : {

"field_names" : [

"text"

]

},

"estimated_heap_memory_usage_bytes" : 1053992,

"estimated_operations" : 39629,

"license_level" : "basic"

}

]

}Ingest Pipeline 模拟测试

好了,基本的了解就到这里了,我们开始动手吧,既然要和 Ingest 结合使用,自然免不了要定义 Ingest Pipeline,也就是说定一个解析规则,索引的时候会调用这个规则来处理输入的索引文档。Ingest Pipeline 的调试是个问题,好在Ingest 提供了模拟调用的方法,我们测试一下:

POST _ingest/pipeline/_simulate

{

"pipeline":{

"processors":[

{

"inference":{

"model_id":"lang_ident_model_1",

"inference_config":{

"classification":{

"num_top_classes":5

}

},

"field_mappings":{

}

}

}

]

},

"docs":[

{

"_source":{

"text":"新冠病毒让你在家好好带着,你服不服"

}

}

]

}上面是借助 Ingest 的推理 Process 来模拟调用这个机器学习模型进行文本判断的方法,第一部分是设置 processor 的定义,设置了一个 inference processor,也就是要进行语言模型的检测,第二部分 docs 则是输入了一个 json 文档,作为测试的输入源,运行结果如下:

{

"docs" : [

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"text" : "新冠病毒让你在家好好带着,你服不服",

"ml" : {

"inference" : {

"top_classes" : [

{

"class_name" : "zh",

"class_probability" : 0.9999872511022145,

"class_score" : 0.9999872511022145

},

{

"class_name" : "ja",

"class_probability" : 1.061491174235718E-5,

"class_score" : 1.061491174235718E-5

},

{

"class_name" : "hy",

"class_probability" : 6.304673023324264E-7,

"class_score" : 6.304673023324264E-7

},

{

"class_name" : "ta",

"class_probability" : 4.1374037676410867E-7,

"class_score" : 4.1374037676410867E-7

},

{

"class_name" : "te",

"class_probability" : 2.0709260170937159E-7,

"class_score" : 2.0709260170937159E-7

}

],

"predicted_value" : "zh",

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T15:58:44.783736Z"

}

}

}

]

}可以看到,第一条返回结果,zh 表示中文语言类型,可能性为 0.9999872511022145,基本上无限接近肯定了,这个是中文文本,而第二位和剩下的就明显得分比较低了,如果你看到是他们的得分开头是 1.x 和 6.x 等,是不是觉得,不对啊,后面的得分怎么反而大一些,哈哈,你仔细看会发现它后面其实还有 -E 啥的尾巴呢,这个是科学计数法,其实数值远远小于 0。

创建一个 Pipeline

简单模拟倒是证明这个功能 work 了,那具体怎么使用,一起看看吧。

首先创建一个 Pipeline:

PUT _ingest/pipeline/lang_detect_add_tag

{

"description": "检测文本,添加语种标签",

"processors": [

{

"inference": {

"model_id": "lang_ident_model_1",

"inference_config": {

"classification": {

"num_top_classes": 2

}

},

"field_mappings": {

"contents": "text"

}

}

},

{

"set": {

"field": "tag",

"value": "{{ml.inference.predicted_value}}"

}

}

]

}可以看到,我们定义了一个 ID 为 lang_detect_add_tag 的 Ingest Pipeline,并且我们设置了这个推理模型的参数,只返回 2 个分类结果,和设置了 content 字段作为检测对象。同时,我们还定义了一个新的 set processor,这个的意思是设置一个名为 tag 的字段,它的值是来自于一个其它的字段的变量引用,也就是把检测到的文本对应的语种存成一个标签字段。

测试这个 Pipeline

这个 Pipeline 创建完之后,我们同样可以对这个 Pipeline 进行模拟测试,模拟的好处是不会实际创建索引,方便调试。

POST /_ingest/pipeline/lang_detect_add_tag/_simulate

{

"docs": [

{

"_index": "index",

"_id": "id",

"_source": {

"contents": "巴林境内新型冠状病毒肺炎确诊病例累计达56例"

}

},

{

"_index": "index",

"_id": "id",

"_source": {

"contents": "Watch live: WHO gives a coronavirus update as global cases top 100,000"

}

}

]

}返回结果:

{

"docs" : [

{

"doc" : {

"_index" : "index",

"_type" : "_doc",

"_id" : "id",

"_source" : {

"tag" : "zh",

"contents" : "巴林境内新型冠状病毒肺炎确诊病例累计达56例",

"ml" : {

"inference" : {

"top_classes" : [

{

"class_name" : "zh",

"class_probability" : 0.999812378112116,

"class_score" : 0.999812378112116

},

{

"class_name" : "ja",

"class_probability" : 1.8175264877915687E-4,

"class_score" : 1.8175264877915687E-4

}

],

"predicted_value" : "zh",

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:21:26.981249Z"

}

}

},

{

"doc" : {

"_index" : "index",

"_type" : "_doc",

"_id" : "id",

"_source" : {

"tag" : "en",

"contents" : "Watch live: WHO gives a coronavirus update as global cases top 100,000",

"ml" : {

"inference" : {

"top_classes" : [

{

"class_name" : "en",

"class_probability" : 0.9896669173070857,

"class_score" : 0.9896669173070857

},

{

"class_name" : "tg",

"class_probability" : 0.0033122788575614993,

"class_score" : 0.0033122788575614993

}

],

"predicted_value" : "en",

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:21:26.981261Z"

}

}

}

]

}继续完善 Pipeline

可以看到,两个文档分别都正确识别了语种,并且创建了对应的 tag 字段,不过这个时候,文档里面的 ml 对象字段,就显得有点多余了,可以使用 remove processor 来删除这个字段。

PUT _ingest/pipeline/lang_detect_add_tag

{

"description": "检测文本,添加语种标签",

"processors": [

{

"inference": {

"model_id": "lang_ident_model_1",

"inference_config": {

"classification": {

"num_top_classes": 2

}

},

"field_mappings": {

"contents": "text"

}

}

},

{

"set": {

"field": "tag",

"value": "{{ml.inference.predicted_value}}"

}

},

{

"remove": {

"field": "ml"

}

}

]

}索引文档并调用 Pipeline

那索引的时候,怎么使用这个 Pipeline 呢,看下面的例子:

POST news/_doc/1?pipeline=lang_detect_add_tag

{

"contents":"""

On Friday, he added: "In a globalised world, the only option is to stand together. All countries should really make sure that we stand together." Meanwhile, Italy—the country worst affected in Europe—reported 41 new COVID-19 deaths in just 24 hours. The country's civil protection agency said on Thursday evening that 3,858 people had been infected and 148 had died.

"""

}

GET news/_doc/1上面的这个例子就不贴返回值了,大家自己试试。

另外一个例子

那回到最开始的场景,如果要根据检测结果来分别存储文本到不同的字段,怎么做呢,这里贴一下官网博客的例子:

POST _ingest/pipeline/_simulate

{

"pipeline": {

"processors": [

{

"inference": {

"model_id": "lang_ident_model_1",

"inference_config": {

"classification": {

"num_top_classes": 1

}

},

"field_mappings": {

"contents": "text"

},

"target_field": "_ml.lang_ident"

}

},

{

"rename": {

"field": "contents",

"target_field": "contents.default"

}

},

{

"rename": {

"field": "_ml.lang_ident.predicted_value",

"target_field": "contents.language"

}

},

{

"script": {

"lang": "painless",

"source": "ctx.contents.supported = (['de', 'en', 'ja', 'ko', 'zh'].contains(ctx.contents.language))"

}

},

{

"set": {

"if": "ctx.contents.supported",

"field": "contents.{{contents.language}}",

"value": "{{contents.default}}",

"override": false

}

}

]

},

"docs": [

{

"_source": {

"contents": "Das leben ist kein Ponyhof"

}

},

{

"_source": {

"contents": "The rain in Spain stays mainly in the plains"

}

},

{

"_source": {

"contents": "オリンピック大会"

}

},

{

"_source": {

"contents": "로마는 하루아침에 이루어진 것이 아니다"

}

},

{

"_source": {

"contents": "授人以鱼不如授人以渔"

}

},

{

"_source": {

"contents": "Qui court deux lievres a la fois, n’en prend aucun"

}

},

{

"_source": {

"contents": "Lupus non timet canem latrantem"

}

},

{

"_source": {

"contents": "This is mostly English but has a touch of Latin since we often just say, Carpe diem"

}

}

]

}返回结果:

{

"docs" : [

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"de" : "Das leben ist kein Ponyhof",

"default" : "Das leben ist kein Ponyhof",

"language" : "de",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "de",

"class_probability" : 0.9996006023972855,

"class_score" : 0.9996006023972855

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211596Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"en" : "The rain in Spain stays mainly in the plains",

"default" : "The rain in Spain stays mainly in the plains",

"language" : "en",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "en",

"class_probability" : 0.9988809847231199,

"class_score" : 0.9988809847231199

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211611Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "オリンピック大会",

"language" : "ja",

"ja" : "オリンピック大会",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "ja",

"class_probability" : 0.9993823252841599,

"class_score" : 0.9993823252841599

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211618Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "로마는 하루아침에 이루어진 것이 아니다",

"language" : "ko",

"ko" : "로마는 하루아침에 이루어진 것이 아니다",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "ko",

"class_probability" : 0.9999939196272863,

"class_score" : 0.9999939196272863

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211624Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "授人以鱼不如授人以渔",

"language" : "zh",

"zh" : "授人以鱼不如授人以渔",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "zh",

"class_probability" : 0.9999810103320087,

"class_score" : 0.9999810103320087

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211629Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "Qui court deux lievres a la fois, n’en prend aucun",

"language" : "fr",

"supported" : false

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "fr",

"class_probability" : 0.9999669852240882,

"class_score" : 0.9999669852240882

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211635Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "Lupus non timet canem latrantem",

"language" : "la",

"supported" : false

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "la",

"class_probability" : 0.614050940088811,

"class_score" : 0.614050940088811

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.21164Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"en" : "This is mostly English but has a touch of Latin since we often just say, Carpe diem",

"default" : "This is mostly English but has a touch of Latin since we often just say, Carpe diem",

"language" : "en",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "en",

"class_probability" : 0.9997901768317939,

"class_score" : 0.9997901768317939

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211646Z"

}

}

}

]

}可以看到 Ingest Processor 非常灵活,且功能强大,所有的相关操作都可以在 Ingest processor 里面进行处理,再结合脚本做一下规则判断,对原始的字段重命名即可满足我们的文档处理需求。

小结

今天我们聊了聊 Language Identity 这个功能,也聊了聊 Ingest Pipeline 的使用,怎么样,这个功能是不是很赞呀,如果有类似使用场景的朋友,可以自己试试看。另外值得注意的是,如果文本长度太小可能会识别不准,CLD3 设计的文本长度要超过 200 个字符。

相关链接

- CLD2: https://github.com/CLD2Owners/cld2

- CLD3: https://github.com/google/cld3

- Multilingual search using language identification in Elasticsearch :https://www.elastic.co/blog/multilingual-search-using-language-identification-in-elasticsearch

- ML Lang Ident 手册:https://www.elastic.co/guide/en/machine-learning/7.6/ml-lang-ident.html

- Ingest Processor 手册:https://www.elastic.co/guide/en/elasticsearch/reference/7.6/inference-processor.html

Elasticsearch 7.6 利用机器学习来检测文本语言

Elasticsearch • medcl 发表了文章 • 0 个评论 • 5182 次浏览 • 2020-03-07 13:22

Elasticsearch 新近发布的 7.6 版本里面包含了很多激动人心的功能,而最让我感兴趣的是利用机器学习来自动检测语言的功能。

功能初探

检测文本语言本身不是什么稀奇事,之前做爬虫的时候,就做过对网页正文进行语言的检测,有很多成熟的方案,而最好的就属 Google Chrome 团队开源的 CLD 系列(全名:Compact Language Detector)了,能够检测多达 80 种各种语言,我用过CLD2,是基于 C++ 贝叶斯分类器实现的,而 CLD3 则是基于神经网络实现的,无疑更加准确,这次 Elasticsearch 将这个非常小的功能也直接集成到了默认的发行包里面,对于使用者来说可以说是带来很大的方便。

多语言的痛点

相信很多朋友,在实际的业务场景中,对碰到过一个字段同时存在多个语种的文本内容的情况,尤其是出海的产品,比如类似大众点评的 APP 吧,一个餐馆下面,来自七洲五湖四海的朋友都来品尝过了,自然要留下点评语是不,德国的朋友使用的是德语,法国的朋友使用的是法语,广州的朋友用的是粤语,那对于开发这个 APP 的后台工程师可就犯难了,如果这些评论都存在一个字段里面,就不好设置一个统一的分词器,因为不同的语言他们的切分规则肯定是不一样的,最简单的例子,比如中文和英文,设置的分词不对,查询结果就会不精准。

相信也有很多人用过这样的解决方案,既然一个字段搞不定,那就把这个字段复制几份,英文字段存一份,中文字段存一份,用 MultiField 来做,这样虽然可以解决一部分问题,但是同样会带来容量和资源的浪费,和查询时候具体该选择哪个字段来参与查询的挑战。

而利用 7.6 的这个新功能,可以在创建索引的时候,可以自动的根据内容进行推理,从而影响索引文档的构成,进而做到特定的文本进特定的字段,从而提升查询体验和性能,关于这个功能,Elastic 官网这里也有一篇博客2,提供了详细的例子。

实战上手

看上去不错,但是鲁迅说过,网上得来终觉浅,觉知此事要躬行,来, 今天一起跑一遍看看具体怎么个用法。

功能剖析

首先,这个功能叫 Language identification,是机器学习的一个 Feature,但是不能单独使用,要结合 Ingest Node 的一个 inference ingest processor 来使用,Ingest processor 是在 Elasticsearch 里面运行的数据预处理器,部分功能类似于 Logstash 的数据解析,对于简单数据操作场景,完全可以替代掉 Logstash,简化部署架构。

Elasticsearch 在 7.6 的包里面,默认打包了提前训练好的机器学习模型,就是 Language identification 需要调用的语言检测模型,名称是固定的 lang_ident_model_1,这也是 Elasticsearch 自带的第一个模型包,大家了解一下就好。

那这个模型包在什么位置呢,我们来解刨一下:

$unzip /usr/share/elasticsearch/modules/x-pack-ml/x-pack-ml-7.6.0.jar

$/org/elasticsearch/xpack/ml/inference$ tree

.

|-- ingest

| |-- InferenceProcessor$Factory.class

| `-- InferenceProcessor.class

|-- loadingservice

| |-- LocalModel$1.class

| |-- LocalModel.class

| |-- Model.class

| `-- ModelLoadingService.class

`-- persistence

|-- InferenceInternalIndex.class

|-- TrainedModelDefinitionDoc$1.class

|-- TrainedModelDefinitionDoc$Builder.class

|-- TrainedModelDefinitionDoc.class

|-- TrainedModelProvider.class

`-- lang_ident_model_1.json

3 directories, 12 files可以看到,在 persistence 目录就有这个模型包,是 json 格式的,里面有个压缩的二进制编码后的字段。

查看模型信息

我们还可以通过新的 API 来获取这个模型信息,以后模型多了之后会比较有用:

GET _ml/inference/lang_ident_model_1

{

"count" : 1,

"trained_model_configs" : [

{

"model_id" : "lang_ident_model_1",

"created_by" : "_xpack",

"version" : "7.6.0",

"description" : "Model used for identifying language from arbitrary input text.",

"create_time" : 1575548914594,

"tags" : [

"lang_ident",

"prepackaged"

],

"input" : {

"field_names" : [

"text"

]

},

"estimated_heap_memory_usage_bytes" : 1053992,

"estimated_operations" : 39629,

"license_level" : "basic"

}

]

}Ingest Pipeline 模拟测试

好了,基本的了解就到这里了,我们开始动手吧,既然要和 Ingest 结合使用,自然免不了要定义 Ingest Pipeline,也就是说定一个解析规则,索引的时候会调用这个规则来处理输入的索引文档。Ingest Pipeline 的调试是个问题,好在Ingest 提供了模拟调用的方法,我们测试一下:

POST _ingest/pipeline/_simulate

{

"pipeline":{

"processors":[

{

"inference":{

"model_id":"lang_ident_model_1",

"inference_config":{

"classification":{

"num_top_classes":5

}

},

"field_mappings":{

}

}

}

]

},

"docs":[

{

"_source":{

"text":"新冠病毒让你在家好好带着,你服不服"

}

}

]

}上面是借助 Ingest 的推理 Process 来模拟调用这个机器学习模型进行文本判断的方法,第一部分是设置 processor 的定义,设置了一个 inference processor,也就是要进行语言模型的检测,第二部分 docs 则是输入了一个 json 文档,作为测试的输入源,运行结果如下:

{

"docs" : [

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"text" : "新冠病毒让你在家好好带着,你服不服",

"ml" : {

"inference" : {

"top_classes" : [

{

"class_name" : "zh",

"class_probability" : 0.9999872511022145,

"class_score" : 0.9999872511022145

},

{

"class_name" : "ja",

"class_probability" : 1.061491174235718E-5,

"class_score" : 1.061491174235718E-5

},

{

"class_name" : "hy",

"class_probability" : 6.304673023324264E-7,

"class_score" : 6.304673023324264E-7

},

{

"class_name" : "ta",

"class_probability" : 4.1374037676410867E-7,

"class_score" : 4.1374037676410867E-7

},

{

"class_name" : "te",

"class_probability" : 2.0709260170937159E-7,

"class_score" : 2.0709260170937159E-7

}

],

"predicted_value" : "zh",

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T15:58:44.783736Z"

}

}

}

]

}可以看到,第一条返回结果,zh 表示中文语言类型,可能性为 0.9999872511022145,基本上无限接近肯定了,这个是中文文本,而第二位和剩下的就明显得分比较低了,如果你看到是他们的得分开头是 1.x 和 6.x 等,是不是觉得,不对啊,后面的得分怎么反而大一些,哈哈,你仔细看会发现它后面其实还有 -E 啥的尾巴呢,这个是科学计数法,其实数值远远小于 0。

创建一个 Pipeline

简单模拟倒是证明这个功能 work 了,那具体怎么使用,一起看看吧。

首先创建一个 Pipeline:

PUT _ingest/pipeline/lang_detect_add_tag

{

"description": "检测文本,添加语种标签",

"processors": [

{

"inference": {

"model_id": "lang_ident_model_1",

"inference_config": {

"classification": {

"num_top_classes": 2

}

},

"field_mappings": {

"contents": "text"

}

}

},

{

"set": {

"field": "tag",

"value": "{{ml.inference.predicted_value}}"

}

}

]

}可以看到,我们定义了一个 ID 为 lang_detect_add_tag 的 Ingest Pipeline,并且我们设置了这个推理模型的参数,只返回 2 个分类结果,和设置了 content 字段作为检测对象。同时,我们还定义了一个新的 set processor,这个的意思是设置一个名为 tag 的字段,它的值是来自于一个其它的字段的变量引用,也就是把检测到的文本对应的语种存成一个标签字段。

测试这个 Pipeline

这个 Pipeline 创建完之后,我们同样可以对这个 Pipeline 进行模拟测试,模拟的好处是不会实际创建索引,方便调试。

POST /_ingest/pipeline/lang_detect_add_tag/_simulate

{

"docs": [

{

"_index": "index",

"_id": "id",

"_source": {

"contents": "巴林境内新型冠状病毒肺炎确诊病例累计达56例"

}

},

{

"_index": "index",

"_id": "id",

"_source": {

"contents": "Watch live: WHO gives a coronavirus update as global cases top 100,000"

}

}

]

}返回结果:

{

"docs" : [

{

"doc" : {

"_index" : "index",

"_type" : "_doc",

"_id" : "id",

"_source" : {

"tag" : "zh",

"contents" : "巴林境内新型冠状病毒肺炎确诊病例累计达56例",

"ml" : {

"inference" : {

"top_classes" : [

{

"class_name" : "zh",

"class_probability" : 0.999812378112116,

"class_score" : 0.999812378112116

},

{

"class_name" : "ja",

"class_probability" : 1.8175264877915687E-4,

"class_score" : 1.8175264877915687E-4

}

],

"predicted_value" : "zh",

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:21:26.981249Z"

}

}

},

{

"doc" : {

"_index" : "index",

"_type" : "_doc",

"_id" : "id",

"_source" : {

"tag" : "en",

"contents" : "Watch live: WHO gives a coronavirus update as global cases top 100,000",

"ml" : {

"inference" : {

"top_classes" : [

{

"class_name" : "en",

"class_probability" : 0.9896669173070857,

"class_score" : 0.9896669173070857

},

{

"class_name" : "tg",

"class_probability" : 0.0033122788575614993,

"class_score" : 0.0033122788575614993

}

],

"predicted_value" : "en",

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:21:26.981261Z"

}

}

}

]

}继续完善 Pipeline

可以看到,两个文档分别都正确识别了语种,并且创建了对应的 tag 字段,不过这个时候,文档里面的 ml 对象字段,就显得有点多余了,可以使用 remove processor 来删除这个字段。

PUT _ingest/pipeline/lang_detect_add_tag

{

"description": "检测文本,添加语种标签",

"processors": [

{

"inference": {

"model_id": "lang_ident_model_1",

"inference_config": {

"classification": {

"num_top_classes": 2

}

},

"field_mappings": {

"contents": "text"

}

}

},

{

"set": {

"field": "tag",

"value": "{{ml.inference.predicted_value}}"

}

},

{

"remove": {

"field": "ml"

}

}

]

}索引文档并调用 Pipeline

那索引的时候,怎么使用这个 Pipeline 呢,看下面的例子:

POST news/_doc/1?pipeline=lang_detect_add_tag

{

"contents":"""

On Friday, he added: "In a globalised world, the only option is to stand together. All countries should really make sure that we stand together." Meanwhile, Italy—the country worst affected in Europe—reported 41 new COVID-19 deaths in just 24 hours. The country's civil protection agency said on Thursday evening that 3,858 people had been infected and 148 had died.

"""

}

GET news/_doc/1上面的这个例子就不贴返回值了,大家自己试试。

另外一个例子

那回到最开始的场景,如果要根据检测结果来分别存储文本到不同的字段,怎么做呢,这里贴一下官网博客的例子:

POST _ingest/pipeline/_simulate

{

"pipeline": {

"processors": [

{

"inference": {

"model_id": "lang_ident_model_1",

"inference_config": {

"classification": {

"num_top_classes": 1

}

},

"field_mappings": {

"contents": "text"

},

"target_field": "_ml.lang_ident"

}

},

{

"rename": {

"field": "contents",

"target_field": "contents.default"

}

},

{

"rename": {

"field": "_ml.lang_ident.predicted_value",

"target_field": "contents.language"

}

},

{

"script": {

"lang": "painless",

"source": "ctx.contents.supported = (['de', 'en', 'ja', 'ko', 'zh'].contains(ctx.contents.language))"

}

},

{

"set": {

"if": "ctx.contents.supported",

"field": "contents.{{contents.language}}",

"value": "{{contents.default}}",

"override": false

}

}

]

},

"docs": [

{

"_source": {

"contents": "Das leben ist kein Ponyhof"

}

},

{

"_source": {

"contents": "The rain in Spain stays mainly in the plains"

}

},

{

"_source": {

"contents": "オリンピック大会"

}

},

{

"_source": {

"contents": "로마는 하루아침에 이루어진 것이 아니다"

}

},

{

"_source": {

"contents": "授人以鱼不如授人以渔"

}

},

{

"_source": {

"contents": "Qui court deux lievres a la fois, n’en prend aucun"

}

},

{

"_source": {

"contents": "Lupus non timet canem latrantem"

}

},

{

"_source": {

"contents": "This is mostly English but has a touch of Latin since we often just say, Carpe diem"

}

}

]

}返回结果:

{

"docs" : [

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"de" : "Das leben ist kein Ponyhof",

"default" : "Das leben ist kein Ponyhof",

"language" : "de",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "de",

"class_probability" : 0.9996006023972855,

"class_score" : 0.9996006023972855

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211596Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"en" : "The rain in Spain stays mainly in the plains",

"default" : "The rain in Spain stays mainly in the plains",

"language" : "en",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "en",

"class_probability" : 0.9988809847231199,

"class_score" : 0.9988809847231199

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211611Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "オリンピック大会",

"language" : "ja",

"ja" : "オリンピック大会",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "ja",

"class_probability" : 0.9993823252841599,

"class_score" : 0.9993823252841599

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211618Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "로마는 하루아침에 이루어진 것이 아니다",

"language" : "ko",

"ko" : "로마는 하루아침에 이루어진 것이 아니다",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "ko",

"class_probability" : 0.9999939196272863,

"class_score" : 0.9999939196272863

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211624Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "授人以鱼不如授人以渔",

"language" : "zh",

"zh" : "授人以鱼不如授人以渔",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "zh",

"class_probability" : 0.9999810103320087,

"class_score" : 0.9999810103320087

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211629Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "Qui court deux lievres a la fois, n’en prend aucun",

"language" : "fr",

"supported" : false

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "fr",

"class_probability" : 0.9999669852240882,

"class_score" : 0.9999669852240882

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211635Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"default" : "Lupus non timet canem latrantem",

"language" : "la",

"supported" : false

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "la",

"class_probability" : 0.614050940088811,

"class_score" : 0.614050940088811

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.21164Z"

}

}

},

{

"doc" : {

"_index" : "_index",

"_type" : "_doc",

"_id" : "_id",

"_source" : {

"contents" : {

"en" : "This is mostly English but has a touch of Latin since we often just say, Carpe diem",

"default" : "This is mostly English but has a touch of Latin since we often just say, Carpe diem",

"language" : "en",

"supported" : true

},

"_ml" : {

"lang_ident" : {

"top_classes" : [

{

"class_name" : "en",

"class_probability" : 0.9997901768317939,

"class_score" : 0.9997901768317939

}

],

"model_id" : "lang_ident_model_1"

}

}

},

"_ingest" : {

"timestamp" : "2020-03-06T16:31:36.211646Z"

}

}

}

]

}可以看到 Ingest Processor 非常灵活,且功能强大,所有的相关操作都可以在 Ingest processor 里面进行处理,再结合脚本做一下规则判断,对原始的字段重命名即可满足我们的文档处理需求。

小结

今天我们聊了聊 Language Identity 这个功能,也聊了聊 Ingest Pipeline 的使用,怎么样,这个功能是不是很赞呀,如果有类似使用场景的朋友,可以自己试试看。另外值得注意的是,如果文本长度太小可能会识别不准,CLD3 设计的文本长度要超过 200 个字符。

相关链接

- CLD2: https://github.com/CLD2Owners/cld2

- CLD3: https://github.com/google/cld3

- Multilingual search using language identification in Elasticsearch :https://www.elastic.co/blog/multilingual-search-using-language-identification-in-elasticsearch

- ML Lang Ident 手册:https://www.elastic.co/guide/en/machine-learning/7.6/ml-lang-ident.html

- Ingest Processor 手册:https://www.elastic.co/guide/en/elasticsearch/reference/7.6/inference-processor.html