Cross-cluster

Unified access in Kibana to indices from different clusters

Resource sharing • posted by medcl • 0 comments • 6042 views • 2022-04-21 15:29

现在有这么一个需求,客户根据需要将数据按照业务维度划分,将索引分别存放在了不同的三个集群, 将一个大集群拆分成多个小集群有很多好处,比如降低了耦合,带来了集群可用性和稳定性方面的好处,也避免了单个业务的热点访问造成其他业务的影响, 尽管拆分集群是很常见的玩法,但是管理起来不是那么方便了,尤其是在查询的时候,可能要分别访问三套集群各自的 API,甚至要切换三套不同的 Kibana 来访问集群的数据, 那么有没有办法将他们无缝的联合在一起呢?

极限网关!

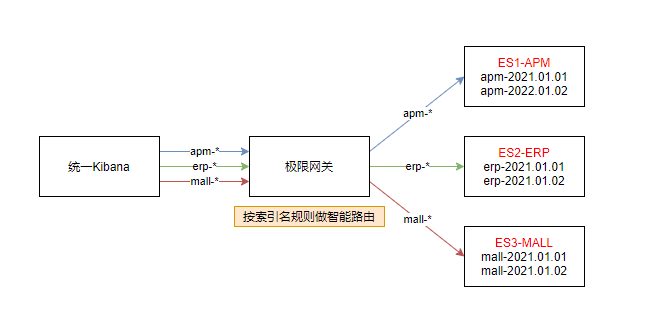

答案自然是有的,通过将 Kibana 访问 Elasticsearch 的地址切换为极限网关的地址,我们可以将请求按照索引来进行智能的路由, 也就是当访问不同的业务索引时会智能的路由到不同的集群,如下图:

上图,我们分别有 3 个不同的索引:

- apm-*

- erp-*

- mall-*

分别对应不同的三套 Elasticsearch 集群:

- ES1-APM

- ES2-ERP

- ES3-MALL

接下来我们来看如何在极限网关里面进行相应的配置来满足这个业务需求。

Configure the cluster information

First, configure the connection information for the three clusters.

elasticsearch:
  - name: es1-apm
    enabled: true
    endpoints:
      - http://192.168.3.188:9206
  - name: es2-erp
    enabled: true
    endpoints:
      - http://192.168.3.188:9207
  - name: es3-mall
    enabled: true
    endpoints:
      - http://192.168.3.188:9208

Configure the service flows

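Next, define three flows, one for each Elasticsearch cluster:

flow:
  - name: es1-flow
    filter:
      - elasticsearch:
          elasticsearch: es1-apm
  - name: es2-flow
    filter:
      - elasticsearch:
          elasticsearch: es2-erp
  - name: es3-flow
    filter:
      - elasticsearch:
          elasticsearch: es3-mall

Then define one more flow that inspects the request path and forwards the request:

  - name: default-flow
    filter:
      - switch:
          remove_prefix: false
          path_rules:
            - prefix: apm-
              flow: es1-flow
            - prefix: erp-
              flow: es2-flow
            - prefix: mall-
              flow: es3-flow
      - flow: #default flow
          flows:
            - es1-flow

The switch filter matches the index prefix in the request path and forwards the request to the corresponding flow. Because remove_prefix is false, the index name is passed on unchanged. Requests that match no prefix fall through to the default flow, es1-flow.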
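To make the routing rule concrete, here is a minimal Python sketch of prefix-based flow selection. It only illustrates the idea and is not the gateway's implementation: the function name `pick_flow` and the rule table are hypothetical, and it assumes the prefix is compared against the request path with its leading slash removed.

```python
# Hypothetical sketch of prefix-based flow selection (not the gateway's own code).
PATH_RULES = [
    ("apm-", "es1-flow"),
    ("erp-", "es2-flow"),
    ("mall-", "es3-flow"),
]
DEFAULT_FLOW = "es1-flow"  # mirrors the trailing "flow" filter in default-flow


def pick_flow(path: str) -> str:
    """Return the flow name for a request path such as /apm-2022/_search."""
    target = path.lstrip("/")  # assumption: the leading slash is ignored
    for prefix, flow in PATH_RULES:
        if target.startswith(prefix):
            return flow
    return DEFAULT_FLOW  # nothing matched, fall through to the default


if __name__ == "__main__":
    for p in ("/apm-2022/_search", "/erp-2022/_search", "/mall-2022/_search", "/_cat/indices"):
        print(p, "->", pick_flow(p))
```

Rules are checked in order and the first match wins, so a request that carries no business prefix, such as a cluster-level API call, ends up on the default flow.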

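Configure the router

Next, define the router:

router:
  - name: my_router
    default_flow: default-flow

It points to the default flow defined above, so that all requests are handled in one place.

Define the service and attach the router

Finally, define a service listening on port 8000 as the single entry point for Kibana:

entry:
  - name: es_entry
    enabled: true
    router: my_router
    max_concurrency: 10000
    network:
      binding: 0.0.0.0:8000

Complete configuration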

The complete configuration is as follows:

path.data: data
path.logs: log

entry:
  - name: es_entry
    enabled: true
    router: my_router
    max_concurrency: 10000
    network:
      binding: 0.0.0.0:8000

flow:
  - name: default-flow
    filter:
      - switch:
          remove_prefix: false
          path_rules:
            - prefix: apm-
              flow: es1-flow
            - prefix: erp-
              flow: es2-flow
            - prefix: mall-
              flow: es3-flow
      - flow: #default flow
          flows:
            - es1-flow
  - name: es1-flow
    filter:
      - elasticsearch:
          elasticsearch: es1-apm
  - name: es2-flow
    filter:
      - elasticsearch:
          elasticsearch: es2-erp
  - name: es3-flow
    filter:
      - elasticsearch:
          elasticsearch: es3-mall

router:
  - name: my_router
    default_flow: default-flow

elasticsearch:
  - name: es1-apm
    enabled: true
    endpoints:
      - http://192.168.3.188:9206
  - name: es2-erp
    enabled: true
    endpoints:
      - http://192.168.3.188:9207
  - name: es3-mall
    enabled: true
    endpoints:
      - http://192.168.3.188:9208

Start the gateway

Start the gateway directly:

➜ gateway git:(master) ✗ ./bin/gateway -config sample-configs/elasticsearch-route-by-index.yml
   ___   _   _____  __  __    __  _
  / _ \ /_\ /__   \/__\/ / /\ \ \/_\ /\_/\
 / /_\///_\\  / /\/_\  \ \/  \/ //_\\\_ _/
/ /_\\/  _  \/ / //__   \  /\  /  _  \/ \
\____/\_/ \_/\/  \__/    \/  \/\_/ \_/\_/
[GATEWAY] A light-weight, powerful and high-performance elasticsearch gateway.
[GATEWAY] 1.0.0_SNAPSHOT, 2022-04-20 08:23:56, 2023-12-31 10:10:10, 51650a5c3d6aaa436f3c8a8828ea74894c3524b9
[04-21 13:41:21] [INF] [app.go:174] initializing gateway.
[04-21 13:41:21] [INF] [app.go:175] using config: /Users/medcl/go/src/infini.sh/gateway/sample-configs/elasticsearch-route-by-index.yml.
[04-21 13:41:21] [INF] [instance.go:72] workspace: /Users/medcl/go/src/infini.sh/gateway/data/gateway/nodes/c9bpg0ai4h931o4ngs3g
[04-21 13:41:21] [INF] [app.go:283] gateway is up and running now.
[04-21 13:41:21] [INF] [api.go:262] api listen at: http://0.0.0.0:2900
[04-21 13:41:21] [INF] [reverseproxy.go:255] elasticsearch [es1-apm] hosts: [] => [192.168.3.188:9206]
[04-21 13:41:21] [INF] [reverseproxy.go:255] elasticsearch [es2-erp] hosts: [] => [192.168.3.188:9207]
[04-21 13:41:21] [INF] [reverseproxy.go:255] elasticsearch [es3-mall] hosts: [] => [192.168.3.188:9208]
[04-21 13:41:21] [INF] [actions.go:349] elasticsearch [es2-erp] is available
[04-21 13:41:21] [INF] [actions.go:349] elasticsearch [es1-apm] is available
[04-21 13:41:21] [INF] [entry.go:312] entry [es_entry] listen at: http://0.0.0.0:8000
[04-21 13:41:21] [INF] [module.go:116] all modules are started
[04-21 13:41:21] [INF] [actions.go:349] elasticsearch [es3-mall] is available
[04-21 13:41:55] [INF] [reverseproxy.go:255] elasticsearch [es1-apm] hosts: [] => [192.168.3.188:9206]

Once the gateway has started, the target Elasticsearch clusters can be reached through the gateway's IP address on port 8000.

Test access

First, test through the API:

➜ ~ curl http://localhost:8000/apm-2022/_search -v
* Trying 127.0.0.1...
* TCP_NODELAY set
* Connected to localhost (127.0.0.1) port 8000 (#0)
> GET /apm-2022/_search HTTP/1.1
> Host: localhost:8000
> User-Agent: curl/7.54.0
> Accept: */*
>
< HTTP/1.1 200 OK
< Date: Thu, 21 Apr 2022 05:45:44 GMT
< content-type: application/json; charset=UTF-8
< Content-Length: 162
< X-elastic-product: Elasticsearch
< X-Backend-Cluster: es1-apm
< X-Backend-Server: 192.168.3.188:9206
< X-Filters: filters->elasticsearch
<
* Connection #0 to host localhost left intact
{"took":142,"timed_out":false,"_shards":{"total":1,"successful":1,"skipped":0,"failed":0},"hits":{"total":{"value":0,"relation":"eq"},"max_score":null,"hits":[]}}

As the X-Backend-Cluster header shows, apm-2022 was routed to the backend es1-apm cluster.

Next, test access to an erp index:

➜ ~ curl http://localhost:8000/erp-2022/_search -v
* Trying 127.0.0.1...
* TCP_NODELAY set
* Connected to localhost (127.0.0.1) port 8000 (#0)
> GET /erp-2022/_search HTTP/1.1
> Host: localhost:8000
> User-Agent: curl/7.54.0
> Accept: */*
>
< HTTP/1.1 200 OK
< Date: Thu, 21 Apr 2022 06:24:46 GMT
< content-type: application/json; charset=UTF-8
< Content-Length: 161
< X-Backend-Cluster: es2-erp
< X-Backend-Server: 192.168.3.188:9207
< X-Filters: filters->switch->filters->elasticsearch->skipped
<
* Connection #0 to host localhost left intact
{"took":12,"timed_out":false,"_shards":{"total":1,"successful":1,"skipped":0,"failed":0},"hits":{"total":{"value":0,"relation":"eq"},"max_score":null,"hits":[]}}

Then test access to a mall index:

➜ ~ curl http://localhost:8000/mall-2022/_search -v
* Trying 127.0.0.1...
* TCP_NODELAY set
* Connected to localhost (127.0.0.1) port 8000 (#0)
> GET /mall-2022/_search HTTP/1.1
> Host: localhost:8000
> User-Agent: curl/7.54.0
> Accept: */*
>
< HTTP/1.1 200 OK
< Date: Thu, 21 Apr 2022 06:25:08 GMT
< content-type: application/json; charset=UTF-8
< Content-Length: 134
< X-Backend-Cluster: es3-mall
< X-Backend-Server: 192.168.3.188:9208
< X-Filters: filters->switch->filters->elasticsearch->skipped
<
* Connection #0 to host localhost left intact
{"took":8,"timed_out":false,"_shards":{"total":5,"successful":5,"skipped":0,"failed":0},"hits":{"total":0,"max_score":null,"hits":[]}}

All three requests are forwarded exactly as intended.
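The check done by eye above, reading the X-Backend-Cluster response header, is easy to automate. The following is a small sketch under assumptions: the helper name `backend_cluster` is hypothetical, and the sample text is a shortened copy of the `curl -v` trace shown in this section.

```python
# Hypothetical helper: pull the backend cluster name out of `curl -v` output.
from typing import Optional


def backend_cluster(verbose_output: str) -> Optional[str]:
    """Return the X-Backend-Cluster value, or None if the header is absent."""
    for line in verbose_output.splitlines():
        # curl -v prefixes response header lines with "<"
        if not line.startswith("<"):
            continue
        name, sep, value = line[1:].partition(":")
        if sep and name.strip().lower() == "x-backend-cluster":
            return value.strip()
    return None


SAMPLE = """\
< HTTP/1.1 200 OK
< Content-Length: 161
< X-Backend-Cluster: es2-erp
< X-Backend-Server: 192.168.3.188:9207
"""

if __name__ == "__main__":
    print(backend_cluster(SAMPLE))
```

Note that curl writes the verbose trace to stderr, so it has to be captured with `2>&1` before it can be fed to a helper like this.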

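Change the Kibana configuration

Edit Kibana's configuration file, kibana.yml, and replace the Elasticsearch address with the gateway address (http://192.168.3.200:8000):

elasticsearch.hosts: ["http://192.168.3.200:8000"]

Restart Kibana for the change to take effect.

Result

From the developer tools of a single Kibana instance, we can now read and write index data that actually lives in three different clusters, just as if it were one cluster.

Outlook

With INFINI Gateway, online request traffic can also be edited flexibly, and operations across different clusters can be combined dynamically.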

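Using INFINI Gateway (极限网关) for cross-cluster, cross-version Elasticsearch queries and all other requests

Elasticsearch • posted by medcl • 9 comments • 8403 views • 2021-10-16 11:31

Use case

If your business runs several clusters on different versions, managing them is a hassle, and a single API for querying all of them would be much more convenient. You have probably already thought of CCS (cross-cluster search). CCS does support cross-cluster queries, but the CCS that ships with Elasticsearch places certain restrictions on versions and requires mTLS to be set up in advance, that is, certificate trust between the two clusters. That inevitably means a restart for maintenance, which is a bit of a pain. So, is there a better option?

😁 There is. Today I will introduce a solution based on INFINI Gateway. The gateway's website: http://极限网关.com/.

Suppose there are two clusters. One runs v2.4.6 and holds a lot of business data that nobody wants to delete; there is plenty of good stuff in there :) The other runs v7.14.0, a fairly recent version used for a new pilot project; there is nothing valuable in it yet, but it still has to be used :( The bosses want all of this data to be reachable through one unified interface, and programmers are lazy, you know how it is.

Cluster information

- v2.4.6 cluster endpoint: 192.168.3.188:9202
- v7.14.0 cluster endpoint: 192.168.3.188:9206

Both clusters are served over HTTP.

Implementation steps

This walkthrough uses the gateway's switch filter: https://极限网关.com/docs/references/filters/switch/

The gateway download contains just two files, the main program and a configuration file. Remember to download the package for your operating system.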

Define the two cluster resources

elasticsearch:
  - name: v2
    enabled: true
    endpoint: http://192.168.3.188:9202
  - name: v7
    enabled: true
    endpoint: http://192.168.3.188:9206

This defines two clusters, named v2 and v7. These resources are used in the steps that follow.

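Define a service entry

entry:
  - name: my_es_entry
    enabled: true
    router: my_router
    max_concurrency: 1000
    network:
      binding: 0.0.0.0:8000
    tls:
      enabled: true

This defines a service entry named my_es_entry that references a request router named my_router. It binds to 0.0.0.0:8000, that is, port 8000 on all local network interfaces, so the gateway can be reached on port 8000 of any of the machine's IP addresses.

The boss also said that running Elasticsearch over plain HTTP is like streaking. Enabling tls here makes the gateway serve HTTPS to the outside, so the Elasticsearch service that users connect to comes with protection built in. Traffic between the backend Elasticsearch clusters and the gateway can be isolated at the network level, and the clusters themselves do not need to change. Perfect.

Why is no certificate specified in the TLS section? Good software should not need that much hassle: when no certificate is given, the gateway generates one itself (the startup log below shows "auto generating cert files").

Finally, setting max_concurrency to 1000 caps the number of concurrent requests, so that a runaway client cannot crush the backend Elasticsearch.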
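The idea behind a concurrency cap can be sketched in a few lines of Python. This is only an illustration of the concept, not how the gateway implements max_concurrency: all names are hypothetical, and whether the gateway queues or rejects requests above the limit is not stated in this article, so the sketch simply makes them wait.

```python
# Illustration only: cap in-flight requests with a semaphore (hypothetical names).
import threading
import time

MAX_CONCURRENCY = 3  # small value for the demo; the entry above uses 1000


def run_demo(total_requests: int = 10, limit: int = MAX_CONCURRENCY) -> int:
    """Run fake requests through a limiter and return the peak in-flight count."""
    slots = threading.Semaphore(limit)
    lock = threading.Lock()
    in_flight = 0
    peak = 0

    def handle_request() -> None:
        nonlocal in_flight, peak
        with slots:  # blocks while `limit` requests are already running
            with lock:
                in_flight += 1
                peak = max(peak, in_flight)
            time.sleep(0.01)  # stand-in for the call to the backend
            with lock:
                in_flight -= 1

    threads = [threading.Thread(target=handle_request) for _ in range(total_requests)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak


if __name__ == "__main__":
    print("peak in-flight requests:", run_demo())
```

However many clients arrive at once, the backend never sees more than `limit` requests at the same time, which is the protection the setting is meant to provide.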

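Define a request router

router:
  - name: my_router
    default_flow: cross-cluster-search

The name my_router is the value given to the router parameter of the service entry above.

default_flow forwards all requests to a request-processing flow named cross-cluster-search. It has not been defined yet; hold on, it comes next.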

Define the request-processing flows

Here we go. First define two flows, named v2-flow and v7-flow. The filter section of each flow lists the filters that process a request. Here each flow uses a single elasticsearch filter, which forwards the request to the specified Elasticsearch backend. Got it?

flow:
  - name: v2-flow
    filter:
      - elasticsearch:
          elasticsearch: v2
  - name: v7-flow
    filter:
      - elasticsearch:
          elasticsearch: v7

Then define one more flow, named cross-cluster-search:

  - name: cross-cluster-search
    filter:
      - switch:
          path_rules:
            - prefix: "v2:"
              flow: v2-flow
            - prefix: "v7:"
              flow: v7-flow

This flow uses a filter that routes on the prefix of the request path: requests starting with v2: are forwarded to v2-flow, and requests starting with v7: are forwarded to v7-flow. The usage is the same as with CCS. So easy!

Does every request need a prefix, then? That would be tedious. No problem: add an elasticsearch filter at the end of the cross-cluster-search filter list, and any request whose prefix does not match goes through it. Assuming the default is v7, the complete flow configuration is:

flow:
  - name: v2-flow
    filter:
      - elasticsearch:
          elasticsearch: v2
  - name: v7-flow
    filter:
      - elasticsearch:
          elasticsearch: v7
  - name: cross-cluster-search
    filter:
      - switch:
          path_rules:
            - prefix: "v2:"
              flow: v2-flow
            - prefix: "v7:"
              flow: v7-flow
      - elasticsearch:
          elasticsearch: v7

And that is all; nothing else needs to be configured.
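The behaviour of this flow can be summarised in a short Python sketch. It is an illustration under assumptions, not the gateway's code: the function name `route` is hypothetical, and it assumes that the matched prefix is removed before the request is forwarded, which is consistent with a request such as /v2:_cluster/health reaching the v2 cluster as a normal health call.

```python
# Hypothetical sketch of the cross-cluster-search flow (not the gateway's own code).
from typing import Tuple

PATH_RULES = {"v2:": "v2", "v7:": "v7"}
DEFAULT_CLUSTER = "v7"  # the trailing elasticsearch filter


def route(path: str) -> Tuple[str, str]:
    """Map a request path to (cluster name, path sent to that cluster)."""
    target = path.lstrip("/")
    for prefix, cluster in PATH_RULES.items():
        if target.startswith(prefix):
            # assumption: the matched prefix is removed before forwarding
            rest = target[len(prefix):].lstrip("/")
            return cluster, "/" + rest
    # no prefix matched: the request goes to the default cluster unchanged
    return DEFAULT_CLUSTER, path


if __name__ == "__main__":
    for p in ("/v2:medcl/_search", "/v2:/", "/v7:/", "/_cluster/health"):
        print(p, "->", route(p))
```

With these rules, `/v2:medcl/_search` is sent to v2 as `/medcl/_search`, while an unprefixed path such as `/_cluster/health` goes to v7 as is.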

Start the gateway

Assuming the configuration file is at sample-configs/cross-cluster-search.yml, run the following command:

➜ gateway git:(master) ✗ ./bin/gateway -config sample-configs/cross-cluster-search.yml
   ___   _   _____  __  __    __  _
  / _ \ /_\ /__   \/__\/ / /\ \ \/_\ /\_/\
 / /_\///_\\  / /\/_\  \ \/  \/ //_\\\_ _/
/ /_\\/  _  \/ / //__   \  /\  /  _  \/ \
\____/\_/ \_/\/  \__/    \/  \/\_/ \_/\_/
[GATEWAY] A light-weight, powerful and high-performance elasticsearch gateway.
[GATEWAY] 1.0.0_SNAPSHOT, 2021-10-15 16:25:56, 3d0a1cd
[10-16 11:00:52] [INF] [app.go:228] initializing gateway.
[10-16 11:00:52] [INF] [instance.go:24] workspace: data/gateway/nodes/0
[10-16 11:00:52] [INF] [api.go:260] api listen at: http://0.0.0.0:2900
[10-16 11:00:52] [INF] [reverseproxy.go:257] elasticsearch [v7] hosts: [] => [192.168.3.188:9206]
[10-16 11:00:52] [INF] [entry.go:225] auto generating cert files
[10-16 11:00:52] [INF] [actions.go:223] elasticsearch [v2] is available
[10-16 11:00:52] [INF] [actions.go:223] elasticsearch [v7] is available
[10-16 11:00:53] [INF] [entry.go:296] entry [my_es_entry] listen at: https://0.0.0.0:8000
[10-16 11:00:53] [INF] [app.go:309] gateway is running now.

The log shows that the gateway started successfully and is listening at https://0.0.0.0:8000.

Try accessing the gateway

Access port 8000 of the gateway directly. Because the certificate is self-signed by the gateway, add -k to skip certificate verification:

➜ loadgen git:(master) ✗ curl -k https://localhost:8000
{
  "name" : "LENOVO",
  "cluster_name" : "es-v7140",
  "cluster_uuid" : "npWjpIZmS8iP_p3GK01-xg",
  "version" : {
    "number" : "7.14.0",
    "build_flavor" : "default",
    "build_type" : "zip",
    "build_hash" : "dd5a0a2acaa2045ff9624f3729fc8a6f40835aa1",
    "build_date" : "2021-07-29T20:49:32.864135063Z",
    "build_snapshot" : false,
    "lucene_version" : "8.9.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}

Just as configured above, requests without a prefix go to the v7 cluster by default.
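Clients other than curl need an equivalent of -k while the gateway uses its self-generated certificate. Below is a minimal sketch using only the Python standard library; the function names are hypothetical, and skipping verification like this is only reasonable in a test setup such as the one in this article.

```python
# Python counterpart of `curl -k`: skip verification of a self-signed certificate.
import ssl
import urllib.request


def insecure_context() -> ssl.SSLContext:
    """Build a TLS context that does not verify the server certificate."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # must be disabled before verify_mode is relaxed
    ctx.verify_mode = ssl.CERT_NONE  # accept any certificate; test setups only
    return ctx


def fetch(url: str) -> bytes:
    """GET a URL through the unverified context and return the raw body."""
    with urllib.request.urlopen(url, context=insecure_context()) as resp:
        return resp.read()
```

Assuming the gateway from this article is listening locally, calling `fetch("https://localhost:8000")` should return the same JSON document as the curl command above.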

Access the v2 cluster

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v2:/
{
  "name" : "Solomon O'Sullivan",
  "cluster_name" : "es-v246",
  "cluster_uuid" : "cqlpjByvQVWDAv6VvRwPAw",
  "version" : {
    "number" : "2.4.6",
    "build_hash" : "5376dca9f70f3abef96a77f4bb22720ace8240fd",
    "build_timestamp" : "2017-07-18T12:17:44Z",
    "build_snapshot" : false,
    "lucene_version" : "5.5.4"
  },
  "tagline" : "You Know, for Search"
}

Check the cluster health:

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v2:_cluster/health\?pretty
{
  "cluster_name" : "es-v246",
  "status" : "yellow",
  "timed_out" : false,
  "number_of_nodes" : 1,
  "number_of_data_nodes" : 1,
  "active_primary_shards" : 5,
  "active_shards" : 5,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 5,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 50.0
}

Insert a document:

➜ loadgen git:(master) ✗ curl-json -k https://localhost:8000/v2:medcl/doc/1 -d '{"name":"hello world"}'
{"_index":"medcl","_type":"doc","_id":"1","_version":1,"_shards":{"total":2,"successful":1,"failed":0},"created":true}

Run a query:

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v2:medcl/_search\?q\=name:hello
{"took":78,"timed_out":false,"_shards":{"total":5,"successful":5,"failed":0},"hits":{"total":1,"max_score":0.19178301,"hits":[{"_index":"medcl","_type":"doc","_id":"1","_score":0.19178301,"_source":{"name":"hello world"}}]}}

As you can see, every kind of request works, whether it is a cluster-level operation or creating, reading, updating, and deleting index data, whereas Elasticsearch's built-in CCS is read-only and can only run queries.

访问 v7 集群

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v7:/

{

"name" : "LENOVO",

"cluster_name" : "es-v7140",

"cluster_uuid" : "npWjpIZmS8iP_p3GK01-xg",

"version" : {

"number" : "7.14.0",

"build_flavor" : "default",

"build_type" : "zip",

"build_hash" : "dd5a0a2acaa2045ff9624f3729fc8a6f40835aa1",

"build_date" : "2021-07-29T20:49:32.864135063Z",

"build_snapshot" : false,

"lucene_version" : "8.9.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}Kibana 里面访问

完全没问题,有图有真相:

其他操作也类似,就不重复了。

Complete configuration

path.data: data
path.logs: log

entry:
  - name: my_es_entry
    enabled: true
    router: my_router
    max_concurrency: 10000
    network:
      binding: 0.0.0.0:8000
    tls:
      enabled: true

flow:
  - name: v2-flow
    filter:
      - elasticsearch:
          elasticsearch: v2
  - name: v7-flow
    filter:
      - elasticsearch:
          elasticsearch: v7
  - name: cross-cluster-search
    filter:
      - switch:
          path_rules:
            - prefix: "v2:"
              flow: v2-flow
            - prefix: "v7:"
              flow: v7-flow
      - elasticsearch:
          elasticsearch: v7

router:
  - name: my_router
    default_flow: cross-cluster-search

elasticsearch:
  - name: v2
    enabled: true
    endpoint: http://192.168.3.188:9202
  - name: v7
    enabled: true
    endpoint: http://192.168.3.188:9206

Summary

That wraps up today's walkthrough of using INFINI Gateway for cross-cluster, cross-version Elasticsearch operations. Have a great weekend. 😁

使用极限网关来进行 Elasticsearch 跨集群跨版本查询及所有其它请求

Elasticsearch • medcl 发表了文章 • 9 个评论 • 8403 次浏览 • 2021-10-16 11:31

使用场景

如果你的业务需要用到有多个集群,并且版本还不一样,是不是管理起来很麻烦,如果能够通过一个 API 来进行查询就方便了,聪明的你相信已经想到了 CCS,没错用 CCS 可以实现跨集群的查询,不过 Elasticsearch 提供的 CCS 对版本有一点的限制,并且需要提前做好 mTLS,也就是需要提前配置好两个集群之间的证书互信,这个免不了要重启维护,好像有点麻烦,那么问题来咯,有没有更好的方案呢?

😁 有办法,今天我就给大家介绍一个基于极限网关的方案,极限网关的网址:http://极限网关.com/。

假设现在有两个集群,一个集群是 v2.4.6,有不少业务数据,舍不得删,里面有很多好东西 :)还有一个集群是 v7.14.0,版本还算比较新,业务正在做的一个新的试点,没什么好东西,但是也得用 :(,现在老板们的的需求是希望通过在一个统一的接口就能访问这些数据,程序员懒得很,懂得都懂。

集群信息

- v2.4.6 集群的访问入口地址:192.168.3.188:9202

- v7.14.0 集群的访问入口地址:192.168.3.188:9206

这两个集群都是 http 协议的。

实现步骤

今天用到的是极限网关的 switch 过滤器:https://极限网关.com/docs/references/filters/switch/

网关下载下来就两个文件,一个主程序,一个配置文件,记得下载对应操作系统的包。

定义两个集群资源

elasticsearch:

- name: v2

enabled: true

endpoint: http://192.168.3.188:9202

- name: v7

enabled: true

endpoint: http://192.168.3.188:9206上面定义了两个集群,分别命名为 v2 和 v7,待会会用到这些资源。

定义一个服务入口

entry:

- name: my_es_entry

enabled: true

router: my_router

max_concurrency: 1000

network:

binding: 0.0.0.0:8000

tls:

enabled: true这里定义了一个名为 my_es_entry 的资源入口,并引用了一个名为 my_router 的请求转发路由,同时绑定了网卡的 0.0.0.0:8000 也就是所有本地网卡监听 IP 的 8000 端口,访问任意 IP 的 8000 端口就能访问到这个网关了。

另外老板也说了,Elasticsearch 用 HTTP 协议简直就是裸奔,通过这里开启 tls,可以让网关对外提供的是 HTTPS 协议,这样用户连接的 Elasticsearch 服务就自带 buffer 了,后端的 es 集群和网关直接可以做好网络流量隔离,集群不用动,简直完美。

为什么定义 TLS 不用指定证书,好用的软件不需要这么麻烦,就这样,不解释。

最后,通过设置 max_concurrency 为 1000,限制下并发数,避免野猴子把我们的后端的 Elasticsearch 给压挂了。

定义一个请求路由

router:

- name: my_router

default_flow: cross-cluster-search这里的名称 my_router 就是表示上面的服务入口的router 参数指定的值。

另外设置一个 default_flow 来将所有的请求都转发给一个名为 cross-cluster-search 的请求处理流程,还没定义,别急,马上。

定义请求处理流程

来啦,来啦,先定义两个 flow,如下,分别名为 v2-flow 和 v7-flow,每节配置的 filter 定义了一系列过滤器,用来对请求进行处理,这里就用了一个 elasticsearch 过滤器,也就是转发请求给指定的 Elasticsearch 后端服务器,了否?

flow:

- name: v2-flow

filter:

- elasticsearch:

elasticsearch: v2

- name: v7-flow

filter:

- elasticsearch:

elasticsearch: v7然后,在定义额外一个名为 cross-cluster-search 的 flow,如下:

- name: cross-cluster-search

filter:

- switch:

path_rules:

- prefix: "v2:"

flow: v2-flow

- prefix: "v7:"

flow: v7-flow这个 flow 就是通过请求的路径的前缀来进行路由的过滤器,如果是 v2:开头的请求,则转发给 v2-flow 继续处理,如果是 v7: 开头的请求,则转发给 v7-flow 来处理,使用的用法和 CCS 是一样的。so easy!

对了,那是不是每个请求都需要加前缀啊,费事啊,没事,在这个 cross-cluster-search 的 filter 最后再加上一个 elasticsearch filter,前面前缀匹配不上的都会走它,假设默认都走 v7,最后完整的 flow 配置如下:

flow:

- name: v2-flow

filter:

- elasticsearch:

elasticsearch: v2

- name: v7-flow

filter:

- elasticsearch:

elasticsearch: v7

- name: cross-cluster-search

filter:

- switch:

path_rules:

- prefix: "v2:"

flow: v2-flow

- prefix: "v7:"

flow: v7-flow

- elasticsearch:

elasticsearch: v7 然后就没有然后了,因为就配置这些就行了。

启动网关

假设配置文件的路径为 sample-configs/cross-cluster-search.yml,运行如下命令:

➜ gateway git:(master) ✗ ./bin/gateway -config sample-configs/cross-cluster-search.yml

___ _ _____ __ __ __ _

/ _ \ /_\ /__ \/__\/ / /\ \ \/_\ /\_/\

/ /_\///_\\ / /\/_\ \ \/ \/ //_\\\_ _/

/ /_\\/ _ \/ / //__ \ /\ / _ \/ \

\____/\_/ \_/\/ \__/ \/ \/\_/ \_/\_/

[GATEWAY] A light-weight, powerful and high-performance elasticsearch gateway.

[GATEWAY] 1.0.0_SNAPSHOT, 2021-10-15 16:25:56, 3d0a1cd

[10-16 11:00:52] [INF] [app.go:228] initializing gateway.

[10-16 11:00:52] [INF] [instance.go:24] workspace: data/gateway/nodes/0

[10-16 11:00:52] [INF] [api.go:260] api listen at: http://0.0.0.0:2900

[10-16 11:00:52] [INF] [reverseproxy.go:257] elasticsearch [v7] hosts: [] => [192.168.3.188:9206]

[10-16 11:00:52] [INF] [entry.go:225] auto generating cert files

[10-16 11:00:52] [INF] [actions.go:223] elasticsearch [v2] is available

[10-16 11:00:52] [INF] [actions.go:223] elasticsearch [v7] is available

[10-16 11:00:53] [INF] [entry.go:296] entry [my_es_entry] listen at: https://0.0.0.0:8000

[10-16 11:00:53] [INF] [app.go:309] gateway is running now.可以看到网关输出了启动成功的日志,网关服务监听在 https://0.0.0.0:8000 。

试试访问网关

直接访问网关的 8000 端口,因为是网关自签的证书,加上 -k 来跳过证书的校验,如下:

➜ loadgen git:(master) ✗ curl -k https://localhost:8000

{

"name" : "LENOVO",

"cluster_name" : "es-v7140",

"cluster_uuid" : "npWjpIZmS8iP_p3GK01-xg",

"version" : {

"number" : "7.14.0",

"build_flavor" : "default",

"build_type" : "zip",

"build_hash" : "dd5a0a2acaa2045ff9624f3729fc8a6f40835aa1",

"build_date" : "2021-07-29T20:49:32.864135063Z",

"build_snapshot" : false,

"lucene_version" : "8.9.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}正如前面配置所配置的一样,默认请求访问的就是 v7 集群。

访问 v2 集群

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v2:/

{

"name" : "Solomon O'Sullivan",

"cluster_name" : "es-v246",

"cluster_uuid" : "cqlpjByvQVWDAv6VvRwPAw",

"version" : {

"number" : "2.4.6",

"build_hash" : "5376dca9f70f3abef96a77f4bb22720ace8240fd",

"build_timestamp" : "2017-07-18T12:17:44Z",

"build_snapshot" : false,

"lucene_version" : "5.5.4"

},

"tagline" : "You Know, for Search"

}查看集群信息:

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v2:_cluster/health\?pretty

{

"cluster_name" : "es-v246",

"status" : "yellow",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"active_primary_shards" : 5,

"active_shards" : 5,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 5,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 50.0

}插入一条文档:

➜ loadgen git:(master) ✗ curl-json -k https://localhost:8000/v2:medcl/doc/1 -d '{"name":"hello world"}'

{"_index":"medcl","_type":"doc","_id":"1","_version":1,"_shards":{"total":2,"successful":1,"failed":0},"created":true}% 执行一个查询

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v2:medcl/_search\?q\=name:hello

{"took":78,"timed_out":false,"_shards":{"total":5,"successful":5,"failed":0},"hits":{"total":1,"max_score":0.19178301,"hits":[{"_index":"medcl","_type":"doc","_id":"1","_score":0.19178301,"_source":{"name":"hello world"}}]}}% 可以看到,所有的请求,不管是集群的操作,还是索引的增删改查都可以,而 Elasticsearch 自带的 CCS 是只读的,只能进行查询。

访问 v7 集群

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v7:/

{

"name" : "LENOVO",

"cluster_name" : "es-v7140",

"cluster_uuid" : "npWjpIZmS8iP_p3GK01-xg",

"version" : {

"number" : "7.14.0",

"build_flavor" : "default",

"build_type" : "zip",

"build_hash" : "dd5a0a2acaa2045ff9624f3729fc8a6f40835aa1",

"build_date" : "2021-07-29T20:49:32.864135063Z",

"build_snapshot" : false,

"lucene_version" : "8.9.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}Kibana 里面访问

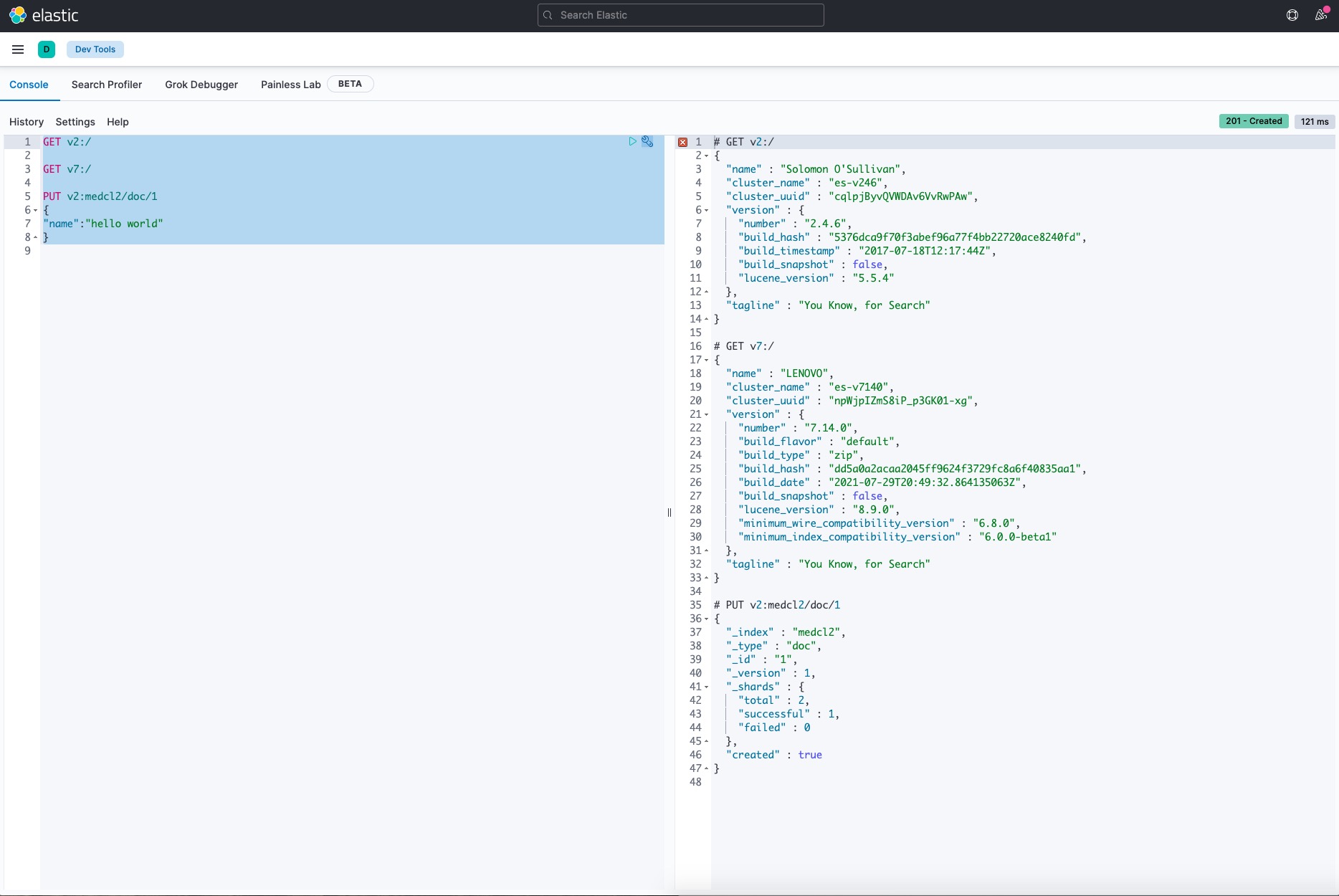

完全没问题,有图有真相:

其他操作也类似,就不重复了。

完整的配置

path.data: data

path.logs: log

entry:

- name: my_es_entry

enabled: true

router: my_router

max_concurrency: 10000

network:

binding: 0.0.0.0:8000

tls:

enabled: true

flow:

- name: v2-flow

filter:

- elasticsearch:

elasticsearch: v2

- name: v7-flow

filter:

- elasticsearch:

elasticsearch: v7

- name: cross-cluster-search

filter:

- switch:

path_rules:

- prefix: "v2:"

flow: v2-flow

- prefix: "v7:"

flow: v7-flow

- elasticsearch:

elasticsearch: v7

router:

- name: my_router

default_flow: cross-cluster-search

elasticsearch:

- name: v2

enabled: true

endpoint: http://192.168.3.188:9202

- name: v7

enabled: true

endpoint: http://192.168.3.188:9206小结

好了,今天给大家分享的如何使用极限网关来进行 Elasticsearch 跨集群跨版本的操作就到这里了,希望大家周末玩的开心。😁


使用极限网关来进行 Elasticsearch 跨集群跨版本查询及所有其它请求

Elasticsearch • medcl 发表了文章 • 9 个评论 • 8403 次浏览 • 2021-10-16 11:31

使用场景

如果你的业务需要用到有多个集群,并且版本还不一样,是不是管理起来很麻烦,如果能够通过一个 API 来进行查询就方便了,聪明的你相信已经想到了 CCS,没错用 CCS 可以实现跨集群的查询,不过 Elasticsearch 提供的 CCS 对版本有一点的限制,并且需要提前做好 mTLS,也就是需要提前配置好两个集群之间的证书互信,这个免不了要重启维护,好像有点麻烦,那么问题来咯,有没有更好的方案呢?

😁 有办法,今天我就给大家介绍一个基于极限网关的方案,极限网关的网址:http://极限网关.com/。

假设现在有两个集群,一个集群是 v2.4.6,有不少业务数据,舍不得删,里面有很多好东西 :)还有一个集群是 v7.14.0,版本还算比较新,业务正在做的一个新的试点,没什么好东西,但是也得用 :(,现在老板们的的需求是希望通过在一个统一的接口就能访问这些数据,程序员懒得很,懂得都懂。

集群信息

- v2.4.6 集群的访问入口地址:192.168.3.188:9202

- v7.14.0 集群的访问入口地址:192.168.3.188:9206

这两个集群都是 http 协议的。

实现步骤

今天用到的是极限网关的 switch 过滤器:https://极限网关.com/docs/references/filters/switch/

网关下载下来就两个文件,一个主程序,一个配置文件,记得下载对应操作系统的包。

定义两个集群资源

elasticsearch:

- name: v2

enabled: true

endpoint: http://192.168.3.188:9202

- name: v7

enabled: true

endpoint: http://192.168.3.188:9206上面定义了两个集群,分别命名为 v2 和 v7,待会会用到这些资源。

定义一个服务入口

entry:

- name: my_es_entry

enabled: true

router: my_router

max_concurrency: 1000

network:

binding: 0.0.0.0:8000

tls:

enabled: true这里定义了一个名为 my_es_entry 的资源入口,并引用了一个名为 my_router 的请求转发路由,同时绑定了网卡的 0.0.0.0:8000 也就是所有本地网卡监听 IP 的 8000 端口,访问任意 IP 的 8000 端口就能访问到这个网关了。

另外老板也说了,Elasticsearch 用 HTTP 协议简直就是裸奔,通过这里开启 tls,可以让网关对外提供的是 HTTPS 协议,这样用户连接的 Elasticsearch 服务就自带 buffer 了,后端的 es 集群和网关直接可以做好网络流量隔离,集群不用动,简直完美。

为什么定义 TLS 不用指定证书,好用的软件不需要这么麻烦,就这样,不解释。

最后,通过设置 max_concurrency 为 1000,限制下并发数,避免野猴子把我们的后端的 Elasticsearch 给压挂了。

定义一个请求路由

router:

- name: my_router

default_flow: cross-cluster-search这里的名称 my_router 就是表示上面的服务入口的router 参数指定的值。

另外设置一个 default_flow 来将所有的请求都转发给一个名为 cross-cluster-search 的请求处理流程,还没定义,别急,马上。

定义请求处理流程

来啦,来啦,先定义两个 flow,如下,分别名为 v2-flow 和 v7-flow,每节配置的 filter 定义了一系列过滤器,用来对请求进行处理,这里就用了一个 elasticsearch 过滤器,也就是转发请求给指定的 Elasticsearch 后端服务器,了否?

flow:

- name: v2-flow

filter:

- elasticsearch:

elasticsearch: v2

- name: v7-flow

filter:

- elasticsearch:

elasticsearch: v7然后,在定义额外一个名为 cross-cluster-search 的 flow,如下:

- name: cross-cluster-search

filter:

- switch:

path_rules:

- prefix: "v2:"

flow: v2-flow

- prefix: "v7:"

flow: v7-flow这个 flow 就是通过请求的路径的前缀来进行路由的过滤器,如果是 v2:开头的请求,则转发给 v2-flow 继续处理,如果是 v7: 开头的请求,则转发给 v7-flow 来处理,使用的用法和 CCS 是一样的。so easy!

对了,那是不是每个请求都需要加前缀啊,费事啊,没事,在这个 cross-cluster-search 的 filter 最后再加上一个 elasticsearch filter,前面前缀匹配不上的都会走它,假设默认都走 v7,最后完整的 flow 配置如下:

flow:

- name: v2-flow

filter:

- elasticsearch:

elasticsearch: v2

- name: v7-flow

filter:

- elasticsearch:

elasticsearch: v7

- name: cross-cluster-search

filter:

- switch:

path_rules:

- prefix: "v2:"

flow: v2-flow

- prefix: "v7:"

flow: v7-flow

- elasticsearch:

elasticsearch: v7 然后就没有然后了,因为就配置这些就行了。

Start the gateway

Assuming the configuration file is at sample-configs/cross-cluster-search.yml, run the following command:

➜ gateway git:(master) ✗ ./bin/gateway -config sample-configs/cross-cluster-search.yml
___ _ _____ __ __ __ _
/ _ \ /_\ /__ \/__\/ / /\ \ \/_\ /\_/\
/ /_\///_\\ / /\/_\ \ \/ \/ //_\\\_ _/
/ /_\\/ _ \/ / //__ \ /\ / _ \/ \
\____/\_/ \_/\/ \__/ \/ \/\_/ \_/\_/
[GATEWAY] A light-weight, powerful and high-performance elasticsearch gateway.
[GATEWAY] 1.0.0_SNAPSHOT, 2021-10-15 16:25:56, 3d0a1cd
[10-16 11:00:52] [INF] [app.go:228] initializing gateway.
[10-16 11:00:52] [INF] [instance.go:24] workspace: data/gateway/nodes/0
[10-16 11:00:52] [INF] [api.go:260] api listen at: http://0.0.0.0:2900
[10-16 11:00:52] [INF] [reverseproxy.go:257] elasticsearch [v7] hosts: [] => [192.168.3.188:9206]
[10-16 11:00:52] [INF] [entry.go:225] auto generating cert files
[10-16 11:00:52] [INF] [actions.go:223] elasticsearch [v2] is available
[10-16 11:00:52] [INF] [actions.go:223] elasticsearch [v7] is available
[10-16 11:00:53] [INF] [entry.go:296] entry [my_es_entry] listen at: https://0.0.0.0:8000
[10-16 11:00:53] [INF] [app.go:309] gateway is running now.

As the log shows, the gateway started successfully and the gateway service is listening on https://0.0.0.0:8000.

Try accessing the gateway

Access port 8000 of the gateway directly. Because the certificate is self-signed by the gateway, add -k to skip certificate verification:

➜ loadgen git:(master) ✗ curl -k https://localhost:8000
{
  "name" : "LENOVO",
  "cluster_name" : "es-v7140",
  "cluster_uuid" : "npWjpIZmS8iP_p3GK01-xg",
  "version" : {
    "number" : "7.14.0",
    "build_flavor" : "default",
    "build_type" : "zip",
    "build_hash" : "dd5a0a2acaa2045ff9624f3729fc8a6f40835aa1",
    "build_date" : "2021-07-29T20:49:32.864135063Z",
    "build_snapshot" : false,
    "lucene_version" : "8.9.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}

Just as configured earlier, a request without a prefix goes to the v7 cluster by default.
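If you would rather call the gateway from a script than from curl, the same request can be sketched with Python's standard library. The helper names `insecure_context` and `get_json` are made up for this example. Skipping verification mirrors curl -k and is only reasonable for a local test against the self-signed certificate.

```python
import json
import ssl
import sys
import urllib.request


def insecure_context() -> ssl.SSLContext:
    """TLS context that skips certificate checks, like curl -k."""
    ctx = ssl.create_default_context()
    # check_hostname must be disabled before verify_mode is relaxed.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def get_json(url: str, timeout: float = 5.0) -> dict:
    """GET a URL through the gateway and decode the JSON body."""
    with urllib.request.urlopen(
        url, context=insecure_context(), timeout=timeout
    ) as resp:
        return json.load(resp)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Pass the gateway address as an argument, e.g. https://localhost:8000/
    info = get_json(sys.argv[1])
    print(info["cluster_name"], info["version"]["number"])
```

Run against the gateway from this article, it should report the cluster name and version of whichever cluster the request was routed to.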

Access the v2 cluster

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v2:/
{
  "name" : "Solomon O'Sullivan",
  "cluster_name" : "es-v246",
  "cluster_uuid" : "cqlpjByvQVWDAv6VvRwPAw",
  "version" : {
    "number" : "2.4.6",
    "build_hash" : "5376dca9f70f3abef96a77f4bb22720ace8240fd",
    "build_timestamp" : "2017-07-18T12:17:44Z",
    "build_snapshot" : false,
    "lucene_version" : "5.5.4"
  },
  "tagline" : "You Know, for Search"
}

Check the cluster health:

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v2:_cluster/health\?pretty
{
  "cluster_name" : "es-v246",
  "status" : "yellow",
  "timed_out" : false,
  "number_of_nodes" : 1,
  "number_of_data_nodes" : 1,
  "active_primary_shards" : 5,
  "active_shards" : 5,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 5,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 50.0
}

Insert a document:

➜ loadgen git:(master) ✗ curl-json -k https://localhost:8000/v2:medcl/doc/1 -d '{"name":"hello world"}'
{"_index":"medcl","_type":"doc","_id":"1","_version":1,"_shards":{"total":2,"successful":1,"failed":0},"created":true}

Run a query:

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v2:medcl/_search\?q\=name:hello
{"took":78,"timed_out":false,"_shards":{"total":5,"successful":5,"failed":0},"hits":{"total":1,"max_score":0.19178301,"hits":[{"_index":"medcl","_type":"doc","_id":"1","_score":0.19178301,"_source":{"name":"hello world"}}]}}

As you can see, every kind of request works, whether it is a cluster-level operation or creating, reading, updating and deleting index data, whereas Elasticsearch's built-in CCS is read-only and can only run queries.
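The addressing convention used by the curl calls above, a cluster prefix followed by the ordinary Elasticsearch path, can be wrapped in a small helper. The following Python sketch only builds the requests and does not send them. The function name `gateway_request` and the `GATEWAY` constant are assumptions for this example.

```python
import json
import urllib.request
from typing import Optional

GATEWAY = "https://localhost:8000"  # entry address from the article


def gateway_request(cluster: str, path: str, body: Optional[dict] = None,
                    method: Optional[str] = None) -> urllib.request.Request:
    """Build a request addressed to one cluster through the gateway.

    The "<cluster>:" prefix follows the article's switch rules ("v2:", "v7:").
    """
    url = f"{GATEWAY}/{cluster}:{path.lstrip('/')}"
    data = json.dumps(body).encode("utf-8") if body is not None else None
    headers = {"Content-Type": "application/json"} if data is not None else {}
    return urllib.request.Request(url, data=data, headers=headers, method=method)


# A write and a read against the v2 cluster, mirroring the curl calls above.
index_req = gateway_request("v2", "medcl/doc/1", {"name": "hello world"})
search_req = gateway_request("v2", "medcl/_search?q=name:hello")
for req in (index_req, search_req):
    print(req.get_method(), req.full_url)
```

A request with a body defaults to POST and one without defaults to GET, which matches what curl does with and without -d. Sending them still requires a TLS context that accepts the gateway's self-signed certificate.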

Access the v7 cluster

➜ loadgen git:(master) ✗ curl -k https://localhost:8000/v7:/
{
  "name" : "LENOVO",
  "cluster_name" : "es-v7140",
  "cluster_uuid" : "npWjpIZmS8iP_p3GK01-xg",
  "version" : {
    "number" : "7.14.0",
    "build_flavor" : "default",
    "build_type" : "zip",
    "build_hash" : "dd5a0a2acaa2045ff9624f3729fc8a6f40835aa1",
    "build_date" : "2021-07-29T20:49:32.864135063Z",
    "build_snapshot" : false,
    "lucene_version" : "8.9.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}

Access from Kibana

It works without any problem, and a screenshot is the proof:

Other operations work the same way, so I won't repeat them here.

Complete configuration

path.data: data
path.logs: log

entry:
  - name: my_es_entry
    enabled: true
    router: my_router
    max_concurrency: 10000
    network:
      binding: 0.0.0.0:8000
    tls:
      enabled: true

flow:
  - name: v2-flow
    filter:
      - elasticsearch:
          elasticsearch: v2
  - name: v7-flow
    filter:
      - elasticsearch:
          elasticsearch: v7
  - name: cross-cluster-search
    filter:
      - switch:
          path_rules:
            - prefix: "v2:"
              flow: v2-flow
            - prefix: "v7:"
              flow: v7-flow
      - elasticsearch:
          elasticsearch: v7

router:
  - name: my_router
    default_flow: cross-cluster-search

elasticsearch:
  - name: v2
    enabled: true
    endpoint: http://192.168.3.188:9202
  - name: v7
    enabled: true
    endpoint: http://192.168.3.188:9206

Summary

That wraps up today's walkthrough of using INFINI Gateway (极限网关) to work across Elasticsearch clusters and versions. Have a great weekend, everyone. 😁