Encoding problem when adding data to Elasticsearch over HTTP with the Java REST client

laoyang360 replied to the question • 2 followers • 1 reply • 2635 views • 2017-09-19 07:15

How to query an entire large dataset in ES

laoyang360 replied to the question • 5 followers • 2 replies • 14181 views • 2017-09-18 18:50

Is it possible to limit the output of API query results? (pipeline aggregation)

laoyang360 replied to the question • 2 followers • 1 reply • 3270 views • 2017-09-18 18:48

Elasticsearch5.6 : [reindex] failed to parse field [script]

eboy asked a question • 1 follower • 0 replies • 7830 views • 2017-09-18 17:55

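Hi, I'd like to ask: the installation methods at this link are all for Linux. What about Windows? I tried installing directly, but it always fails at 50% with an error saying the connection was closed. Is there an offline installation method for Windows?

sunysk replied to the question • 2 followers • 1 reply • 2074 views • 2017-09-18 16:42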

Updating documents with the ES Java API

lizhiang replied to the question • 2 followers • 2 replies • 2073 views • 2017-09-18 16:41

elasticsearch 5.5.2(5.6) startup error with x-pack-5.5.2(5.6)?

Kervin asked a question • 1 follower • 0 replies • 2702 views • 2017-09-18 16:17

Can Curator move shards to a backup machine before automatically closing them?

kennywu76 replied to the question • 5 followers • 3 replies • 3180 views • 2017-09-18 16:15

[ES performance issue] match query is slow, slow, slow: what could be the cause?

kennywu76 replied to the question • 3 followers • 3 replies • 11270 views • 2017-09-18 10:54

Received message from unsupported version: [2.0.0] minimal compatible version is: [5.0.0]

Cheetah replied to the question • 2 followers • 1 reply • 8663 views • 2017-09-18 09:55

Running the latest ES 5.6.0 today, I got java.lang.IllegalStateException: Unable to initialize modules

独行人945 replied to the question • 1 follower • 1 reply • 3862 views • 2017-09-15 15:21

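Can ES limit the length of a returned field, so that only the first 300 bytes of its content come back?

laoyang360 published an article • 3 comments • 5124 views • 2017-09-15 11:56

Example: the content field holds the full text of an article and is very long. I only need its first 300 bytes.

I can use _source to control whether content is returned,

but is there a way to make content return only its first 300 bytes?

I know I can trim it in my own code after it has been returned.

What I want to know is whether there is a parameter that directly returns a string of a given length.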

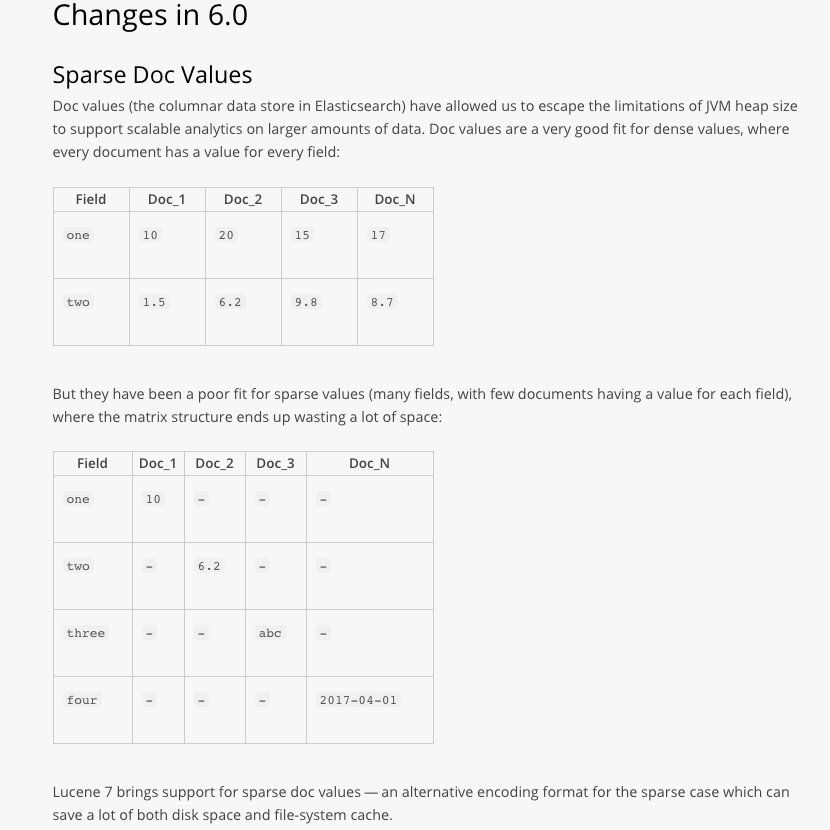
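For the client-side fallback the poster mentions (trimming after the response arrives), one detail matters: "300 bytes" and "300 characters" differ for Chinese text, because each Chinese character takes 3 bytes in UTF-8. The following is a minimal sketch, assuming the field value is already available as a Java `String`; `Utf8Truncate` and `truncateUtf8` are hypothetical names, not part of any Elasticsearch client API. It keeps the longest prefix that fits in the byte budget without cutting a multi-byte character in half.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not an Elasticsearch API: trims a field value that has
// already been returned in _source to a maximum number of UTF-8 bytes.
class Utf8Truncate {

    // Returns the longest prefix of s whose UTF-8 encoding fits in maxBytes,
    // never splitting a multi-byte character or a surrogate pair.
    static String truncateUtf8(String s, int maxBytes) {
        int used = 0;
        int i = 0;
        while (i < s.length()) {
            int cp = s.codePointAt(i);
            // Number of bytes this code point occupies in UTF-8.
            int len = cp < 0x80 ? 1 : cp < 0x800 ? 2 : cp < 0x10000 ? 3 : 4;
            if (used + len > maxBytes) {
                break;
            }
            used += len;
            i += Character.charCount(cp);
        }
        return s.substring(0, i);
    }

    public static void main(String[] args) {
        String content = "中文abc";
        String head = truncateUtf8(content, 7);
        System.out.println(head + " -> " + head.getBytes(StandardCharsets.UTF_8).length + " bytes");
    }
}
```

Running `main` prints `中文a -> 7 bytes`: the two Chinese characters use 6 bytes and one ASCII letter fits in the remaining byte. For the original question, this would be `truncateUtf8(content, 300)`.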

Why you should avoid writing sparse data into ES

kennywu76 published an article • 1 comment • 4316 views • 2017-09-15 11:24

https://www.elastic.co/guide/e ... rsity

Avoid sparsity

The data-structures behind Lucene, which Elasticsearch relies on in order to index and store data, work best with dense data, i.e. when all documents have the same fields. This is especially true for fields that have norms enabled (which is the case for text fields by default) or doc values enabled (which is the case for numerics, date, ip and keyword by default).

The reason is that Lucene internally identifies documents with so-called doc ids, which are integers between 0 and the total number of documents in the index. These doc ids are used for communication between the internal APIs of Lucene: for instance, searching on a term with a match query produces an iterator of doc ids, and these doc ids are then used to retrieve the value of the norm in order to compute a score for these documents. The way this norm lookup is implemented currently is by reserving one byte for each document. The norm value for a given doc id can then be retrieved by reading the byte at index doc_id. While this is very efficient and helps Lucene quickly have access to the norm values of every document, this has the drawback that documents that do not have a value will also require one byte of storage.
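To make the one-byte-per-document layout concrete, here is a hypothetical Java sketch. The class `DenseNorms` and its methods are illustrative names, not Lucene's real norms code. It only models the idea described above: a byte array indexed by doc id, sized for every document in the index.

```java
// Hypothetical illustration, not Lucene's actual norms implementation:
// one byte is reserved per document, and the byte's index is the doc id.
class DenseNorms {
    private final byte[] norms;

    DenseNorms(int maxDoc) {
        // Allocated for every document in the index, whether or not it has the field.
        norms = new byte[maxDoc];
    }

    void set(int docId, byte norm) {
        norms[docId] = norm;
    }

    // Constant-time lookup: read the byte at index docId.
    // Documents without the field simply hold the default value 0.
    byte get(int docId) {
        return norms[docId];
    }

    int storageBytes() {
        return norms.length;
    }

    public static void main(String[] args) {
        DenseNorms titleNorms = new DenseNorms(1_000_000);
        // Only 10 of the 1,000,000 documents actually have the field.
        for (int docId = 0; docId < 10; docId++) {
            titleNorms.set(docId, (byte) 42);
        }
        System.out.println(titleNorms.storageBytes()); // 1000000
    }
}
```

The trade-off is visible in the sketch. `get` is a single array read, which is why the lookup is fast. The array is still 1,000,000 bytes even though only 10 documents carry a value.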

In practice, this means that if an index has M documents, norms will require M bytes of storage per field, even for fields that only appear in a small fraction of the documents of the index. Although slightly more complex with doc values due to the fact that doc values have multiple ways that they can be encoded depending on the type of field and on the actual data that the field stores, the problem is very similar. In case you are wondering: fielddata, which was used in Elasticsearch pre-2.0 before being replaced with doc values, also suffered from this issue, except that the impact was only on the memory footprint since fielddata was not explicitly materialized on disk.
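A worked example of the "M bytes per field" rule, using hypothetical numbers: an index with $M = 10^8$ documents and $F = 100$ text fields, each present in only 1% of the documents. Norms cost one byte per document per field regardless of presence:

```latex
\begin{aligned}
\text{norms storage} &= F \times M = 100 \times 10^{8}\ \text{bytes} = 10^{10}\ \text{bytes} \approx 10\ \text{GB} \\
\text{bytes for documents that actually have the field} &= F \times 0.01\,M = 10^{8}\ \text{bytes} \approx 100\ \text{MB}
\end{aligned}
```

Under these assumptions, 99% of the norms storage belongs to documents that do not have the field at all.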

Note that even though the most notable impact of sparsity is on storage requirements, it also has an impact on indexing speed and search speed since these bytes for documents that do not have a field still need to be written at index time and skipped over at search time.

It is totally fine to have a minority of sparse fields in an index. But beware that if sparsity becomes the rule rather than the exception, then the index will not be as efficient as it could be.

This section mostly focused on norms and doc values because those are the two features that are most affected by sparsity. Sparsity also affects the efficiency of the inverted index (used to index text/keyword fields) and dimensional points (used to index geo_point and numerics) but to a lesser extent.

Here are some recommendations that can help avoid sparsity:

https://www.elastic.co/blog/index-vs-type

Fields that exist in one type will also consume resources for documents of types where this field does not exist. This is a general issue with Lucene indices: they don’t like sparsity. Sparse postings lists can’t be compressed efficiently because of high deltas between consecutive matches. And the issue is even worse with doc values: for speed reasons, doc values often reserve a fixed amount of disk space for every document, so that values can be addressed efficiently. This means that if Lucene establishes that it needs one byte to store all values of a given numeric field, it will also consume one byte for documents that don’t have a value for this field. Future versions of Elasticsearch will have improvements in this area but I would still advise you to model your data in a way that will limit sparsity as much as possible.
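Why "high deltas" hurt compression can be seen with a deliberately simplified model. This is not Lucene's actual postings codec, which packs blocks with per-block bit widths plus further tricks. Here, a postings list is stored as gaps between consecutive sorted doc ids, and every gap is packed with the bit width of the largest gap. `GapEncodingCost`, `bitsFor` and `packedBits` are hypothetical names.

```java
// Simplified sketch (not Lucene's real postings format): store gaps between
// consecutive sorted doc ids, packing every gap with the width of the largest gap.
class GapEncodingCost {

    // Minimum number of bits to represent a non-negative value (at least 1).
    static int bitsFor(int value) {
        return Math.max(1, 32 - Integer.numberOfLeadingZeros(value));
    }

    // Total bits needed to store the postings list with fixed-width gaps.
    static long packedBits(int[] sortedDocIds) {
        int maxGap = 0;
        int prev = 0;
        for (int docId : sortedDocIds) {
            maxGap = Math.max(maxGap, docId - prev);
            prev = docId;
        }
        return (long) bitsFor(maxGap) * sortedDocIds.length;
    }

    public static void main(String[] args) {
        int[] dense = new int[1000];
        int[] sparse = new int[1000];
        for (int i = 0; i < 1000; i++) {
            dense[i] = i;          // term matches every document
            sparse[i] = i * 1000;  // term matches one document in a thousand
        }
        System.out.println("dense:  " + packedBits(dense) + " bits");   // 1000 bits
        System.out.println("sparse: " + packedBits(sparse) + " bits");  // 10000 bits
    }
}
```

Both lists hold 1,000 matches. In the dense list every gap is 1, so each gap needs 1 bit. In the sparse list every gap is 1000, so each gap needs 10 bits, and the same number of postings costs ten times as much space.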

https://www.elastic.co/blog/sp ... ucene

https://issues.apache.org/jira/browse/LUCENE-6863

https://www.elastic.co/blog/el ... eased