How many files can Filebeat collect?

Beats • cao replied to the question • 2 followers • 2 replies • 4486 views • 2018-12-17 17:45

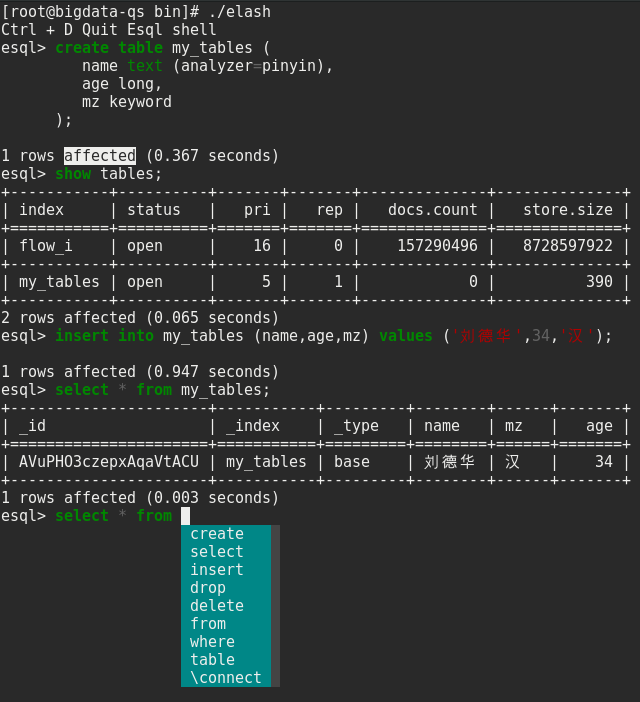

JDBC with ESQL

Off-topic • hill published an article • 0 comments • 2670 views • 2018-12-14 15:58

https://github.com/unimassystem/elasticsearch-jdbc

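Using a DBCP connection pool:

```java
import java.sql.Connection;
import java.sql.ResultSet;
import java.sql.Statement;
import org.apache.commons.dbcp2.BasicDataSource; // assumes Apache Commons DBCP 2

// Create a connection pool (it opens several connections up front)
BasicDataSource basicDataSource = new BasicDataSource();

// Tell the pool how to create connections. A pool typically takes four
// parameters (driver class, URL, username, password); only two are set here.
basicDataSource.setDriverClassName("com.elasticsearch.jdbc.ElasticsearchDriver");
basicDataSource.setUrl("jdbc:elasticsearch://127.0.0.1:5000");

// The index pattern is quoted because it contains a hyphen
String sql = "select SRC_IP,SRC_PORT from \"my_test-*\"";

// Borrow a connection from the pool; try-with-resources returns it to the
// pool and closes the statement and result set
try (Connection conn = basicDataSource.getConnection();
     Statement stmt = conn.createStatement();
     ResultSet rs = stmt.executeQuery(sql)) {
    while (rs.next()) {
        System.out.println(rs.getString("SRC_IP"));
    }
} finally {
    basicDataSource.close();
}
```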

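Without a pool, via `DriverManager`, printing every column as a table:

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.Statement;

String sql = "select SRC_IP,SRC_PORT from my_test* where SRC_PORT between 10 and 100 limit 1000";
String url = "jdbc:elasticsearch://127.0.0.1:5000";

try (Connection connection = DriverManager.getConnection(url, "test", null);
     Statement statement = connection.createStatement();
     ResultSet rs = statement.executeQuery(sql)) {
    ResultSetMetaData meta = rs.getMetaData();
    int columnCount = meta.getColumnCount();

    // Header row; JDBC column indexes start at 1, not 0
    StringBuilder columns = new StringBuilder("|");
    for (int i = 1; i <= columnCount; i++) {
        columns.append(meta.getColumnLabel(i)).append(" | ");
    }
    System.out.println(columns);

    // One printed line per result row
    while (rs.next()) {
        StringBuilder row = new StringBuilder("|");
        for (int i = 1; i <= columnCount; i++) {
            row.append(rs.getString(i)).append(" | ");
        }
        System.out.println(row);
    }
}
```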

Day 14 - An Elasticsearch-based solution for an order center

Elasticsearch • blogsit published an article • 3 comments • 17012 views • 2018-12-14 15:24

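Project background:

In 2015, Qunar's hotel business averaged more than 300,000 orders per day, and once orders from multiple platforms were aggregated the daily volume reached about 1 million. The original design split data into hot and historical tables: orders from the most recent 6 months were kept in one table, and historical orders in a history table. The history table stored the full data set, and whenever a user's query covered order dates more than 6 months back, the history table was queried. With this split the hot table held about 40 million rows, which solved the problem at the time. However, it clearly could not accommodate orders coming in from Ctrip and eLong. Continuing with the hot-table approach, the hot table would exceed 100 million rows, and a full table holding 2 years of data could exceed 400 million rows, so finding an effective solution was urgent. Because these roughly 400 million records also had to be queried by many criteria (booking date, check-in date, check-out date, order number, contact name, phone number, hotel name, order status, and more), simply sharding along any single dimension made no sense. Elasticsearch, a distributed search and storage cluster, was introduced to solve both the storage and the search problem for order data.

Solution details:

1. System performance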

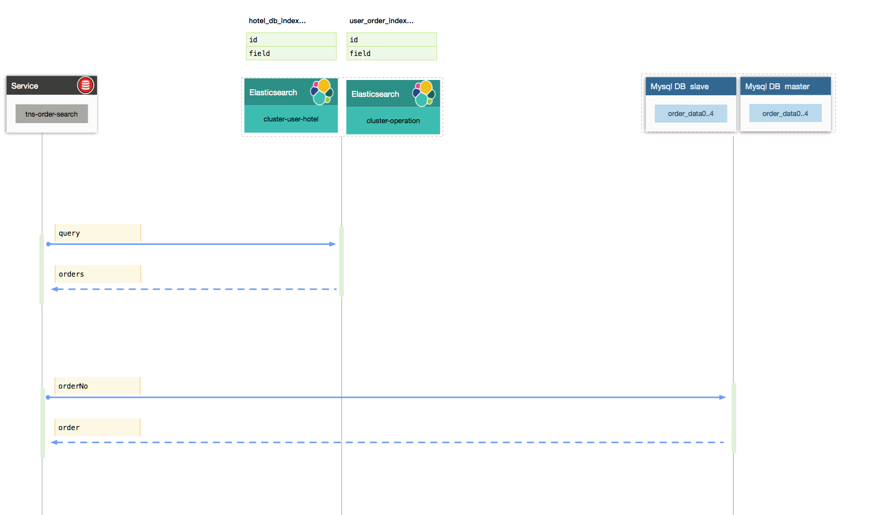

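The order model was abstracted and categorized, and the frequently searched fields and basic attribute fields were separated out. The database, split into multiple databases and tables, stores the order details, while Elasticsearch stores the search fields. Complex order queries go directly to Elasticsearch, as shown below: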

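General data storage model

Key fields

■ Core business fields, used for query filtering

System fields

■ version: prevents concurrent operations from overwriting each other's data

Large fields

■ order_data: order detail data (JSON)

■ Flexible choice of which fields are indexed and which are returned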
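To make the model concrete, here is a minimal in-memory sketch in Java. The class and field names (`OrderDocStore`, `OrderDoc`, `hotelName`, `status`) are hypothetical; only `version` and `order_data` come from the model above. The `upsert` rule, which rejects any write whose version is not strictly greater than the stored one, is similar in spirit to Elasticsearch's `version_type=external`, but this is not Elasticsearch client code, and the article does not say exactly how its version check is implemented.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory stand-in for the order index; not Elasticsearch client code.
public class OrderDocStore {

    // One order document: key fields for filtering, a version system field,
    // and the large order_data field kept as an opaque JSON string.
    public static final class OrderDoc {
        public final String orderNo;    // key field
        public final String hotelName;  // key field
        public final String status;     // key field
        public final long version;      // system field
        public final String orderData;  // large field (JSON)

        public OrderDoc(String orderNo, String hotelName, String status,
                        long version, String orderData) {
            this.orderNo = orderNo;
            this.hotelName = hotelName;
            this.status = status;
            this.version = version;
            this.orderData = orderData;
        }
    }

    private final Map<String, OrderDoc> docs = new HashMap<>();

    // Apply the write only if its version is strictly greater than the stored
    // one, so a late-arriving older update cannot overwrite newer data.
    public boolean upsert(OrderDoc incoming) {
        OrderDoc current = docs.get(incoming.orderNo);
        if (current != null && incoming.version <= current.version) {
            return false; // stale write rejected
        }
        docs.put(incoming.orderNo, incoming);
        return true;
    }

    public OrderDoc get(String orderNo) {
        return docs.get(orderNo);
    }

    public static void main(String[] args) {
        OrderDocStore store = new OrderDocStore();
        store.upsert(new OrderDoc("A1001", "Hotel X", "BOOKED", 2, "{\"rooms\":1}"));
        // An older update (version 1) arrives late and is ignored.
        boolean applied = store.upsert(new OrderDoc("A1001", "Hotel X", "PENDING", 1, "{}"));
        System.out.println(applied + " " + store.get("A1001").status); // false BOOKED
    }
}
```

Keeping `order_data` as an opaque JSON string means new detail fields can be added without changing the indexed key fields.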

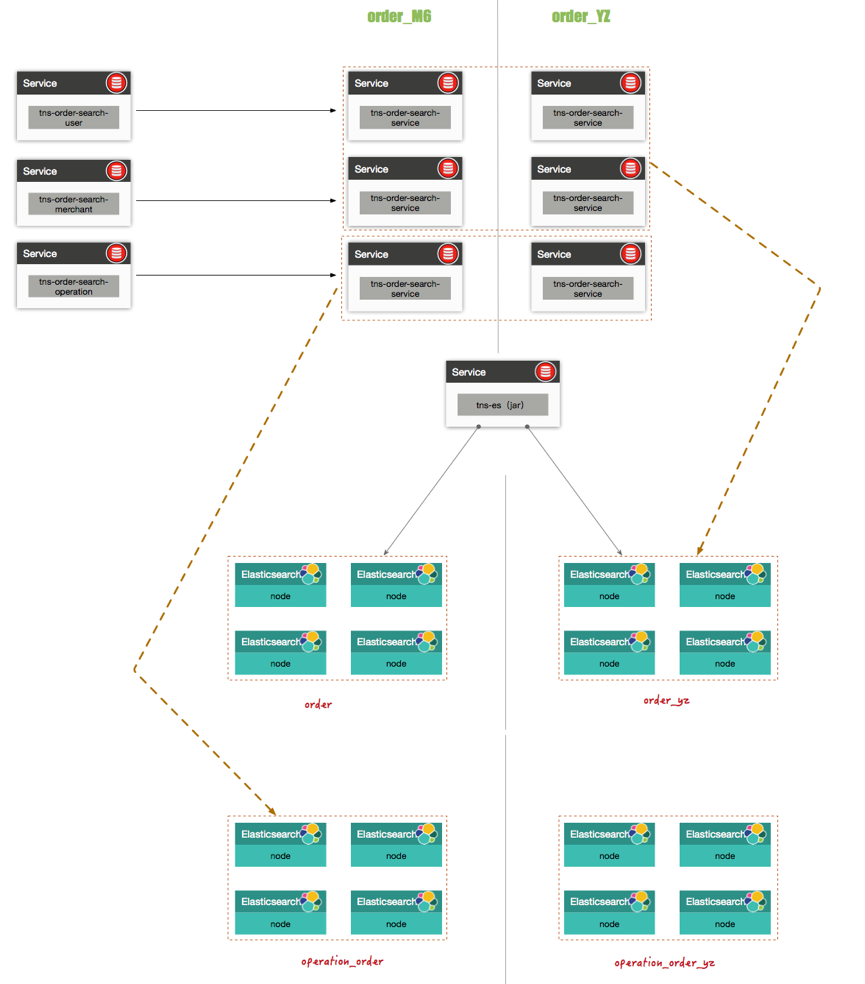

2. System availability

System availability: high availability across two data centers, as shown below.

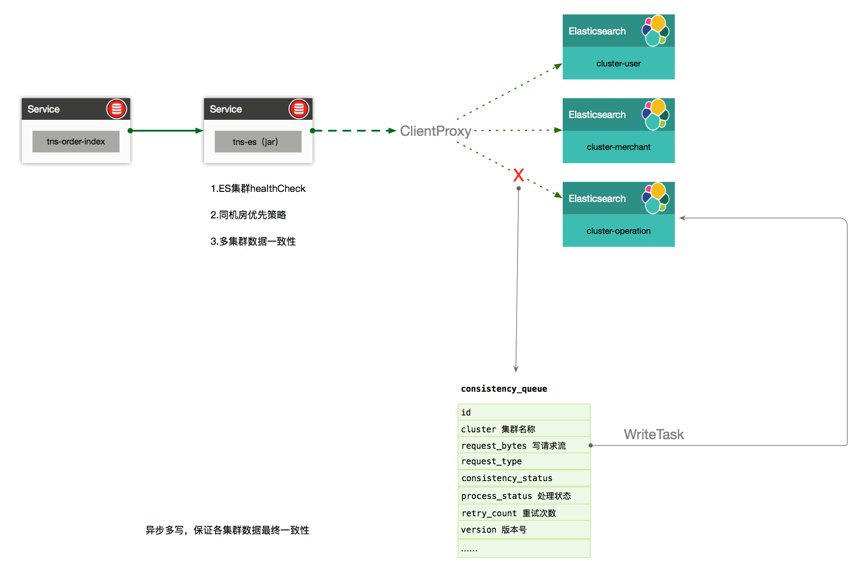

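Data availability:

I. Asynchronous multi-writes keep the data consistent.

II. Data backfill mechanism:

1. Every day in the early morning, a task scans the hot-table data in the database and compares its versions with those in ES.

2. For data pushed by third parties, the order number is immediately inserted into an order sync queue table. If the data model is parsed, converted, and persisted successfully, the order number is deleted from the queue. In addition, a task scans the queue table once per minute.

3. Data pushed to third parties uses the same approach, which ensures the accuracy of the data sent to them.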
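A rough sketch of the first two backfill steps, in plain Java with hypothetical names (`OrderSync`, `onPush`, `retryPending`, `findStale`). An in-memory set stands in for the order sync queue table, and maps of order number to version stand in for the DB hot table and Elasticsearch. The article does not describe its actual schema, so treat this only as an illustration of the control flow.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Predicate;

// Hypothetical sketch of the backfill mechanism; all names are illustrative.
public class OrderSync {

    // Step 2: order numbers waiting to be synced (stands in for the queue table).
    private final Set<String> queue = new LinkedHashSet<>();
    // Parse/convert/persist step, injected so that failures can be simulated.
    private final Predicate<String> persist;

    public OrderSync(Predicate<String> persist) {
        this.persist = persist;
    }

    // On receiving third-party data: record the order number first, then process it.
    public void onPush(String orderNo) {
        queue.add(orderNo);
        processOne(orderNo);
    }

    // Remove the order number from the queue only if persistence succeeded.
    private void processOne(String orderNo) {
        if (persist.test(orderNo)) {
            queue.remove(orderNo);
        }
    }

    // Periodic task (once per minute in the article): retry everything still queued.
    public void retryPending() {
        for (String orderNo : new ArrayList<>(queue)) {
            processOne(orderNo);
        }
    }

    public Set<String> pending() {
        return queue;
    }

    // Step 1: nightly reconciliation. Returns order numbers whose ES version
    // is missing or differs from the version in the DB hot table.
    public static List<String> findStale(Map<String, Long> dbVersions,
                                         Map<String, Long> esVersions) {
        List<String> stale = new ArrayList<>();
        for (Map.Entry<String, Long> e : dbVersions.entrySet()) {
            Long esVersion = esVersions.get(e.getKey());
            if (esVersion == null || !esVersion.equals(e.getValue())) {
                stale.add(e.getKey());
            }
        }
        return stale;
    }
}
```

In a real deployment the queue would be a database table, and the one-minute task could be scheduled with, for example, `ScheduledExecutorService.scheduleAtFixedRate(sync::retryPending, 1, 1, TimeUnit.MINUTES)`. This sketch is not thread-safe, so concurrent `onPush` and `retryPending` calls would need synchronization.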

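3. System scalability

The Elasticsearch index is configured with 8 shards. A single ES index currently holds 140 million documents; in total there are 200 million documents occupying 64 GB of disk, on a cluster with 240 GB of disk capacity.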
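The figures above allow a quick sanity check. This sketch assumes documents are spread evenly over the 8 primary shards and that "64 GB" means decimal gigabytes. The article does not say whether the 64 GB includes replica copies, so the per-document figure is only an average that includes index overhead.

```java
// Back-of-the-envelope sizing based on the figures quoted in the article.
public class ShardMath {

    // Documents per primary shard, assuming even routing across shards.
    public static long docsPerShard(long docs, int shards) {
        return docs / shards;
    }

    // Average on-disk bytes per document (decimal GB; includes index overhead).
    public static double bytesPerDoc(double diskUsedGb, long docs) {
        return diskUsedGb * 1e9 / docs;
    }

    // Percentage of total cluster disk capacity in use.
    public static double diskUsagePct(double usedGb, double capacityGb) {
        return 100.0 * usedGb / capacityGb;
    }

    public static void main(String[] args) {
        System.out.println(docsPerShard(140_000_000L, 8));        // 17500000
        System.out.println(bytesPerDoc(64.0, 200_000_000L));      // 320.0
        System.out.printf("%.1f%%%n", diskUsagePct(64.0, 240.0)); // 26.7%
    }
}
```

With these numbers, that works out to about 17.5 million documents per shard, roughly 320 bytes of disk per document, and about 26.7% of the cluster's disk in use.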

In Kibana Visualize, a field in a terms aggregation shows as "string", but the index mapping is date

Kibana • rochy replied to the question • 2 followers • 2 replies • 3620 views • 2018-12-15 10:06

ES query performance issue

Elasticsearch • rochy replied to the question • 3 followers • 1 reply • 3190 views • 2018-12-14 13:07

Community Daily, Issue 478 (2018-12-14)

Community Daily • laoyang360 published an article • 0 comments • 1999 views • 2018-12-14 11:12

http://t.cn/EyOugcQ

2. Elasticsearch best practices: core concepts and principles

http://t.cn/EUJa22D

3. Elasticsearch vs. Hive

http://t.cn/EUJaPGa

Editor: 铭毅天下

Archive: https://elasticsearch.cn/article/6196

Subscribe: https://tinyletter.com/elastic-daily

elasticsearch id

Elasticsearch • rochy replied to the question • 2 followers • 1 reply • 4847 views • 2018-12-14 13:02

Elasticsearch single-value buckets

Elasticsearch • hnj1575565068 asked a question • 1 follower • 0 replies • 1749 views • 2018-12-14 09:46

Write load testing causes excessive CPU context switches and high CPU sys time, and the system hangs

Elasticsearch • zqc0512 replied to the question • 5 followers • 3 replies • 4099 views • 2019-01-07 10:58

Creating indices based on multiple conditions when collecting logs with Filebeat

Beats • xuebin replied to the question • 2 followers • 2 replies • 5892 views • 2018-12-14 11:04

Can Spring Boot 2.0 be integrated with Elasticsearch 5.4.0?

Elasticsearch • qqq1234567 replied to the question • 2 followers • 2 replies • 1720 views • 2018-12-13 18:20

How to extract part of a keyword field in Kibana visualizations

Kibana • zqc0512 replied to the question • 5 followers • 4 replies • 5069 views • 2018-12-24 08:51

A question about collecting logs with Filebeat

Uncategorized • rochy replied to the question • 2 followers • 1 reply • 3294 views • 2018-12-13 17:15

What are the differences and relationships between the query cache and the filter cache?

Elasticsearch • rochy replied to the question • 3 followers • 1 reply • 3091 views • 2018-12-13 17:13