How to Migrate an Elasticsearch Cluster to Easysearch with INFINI Gateway

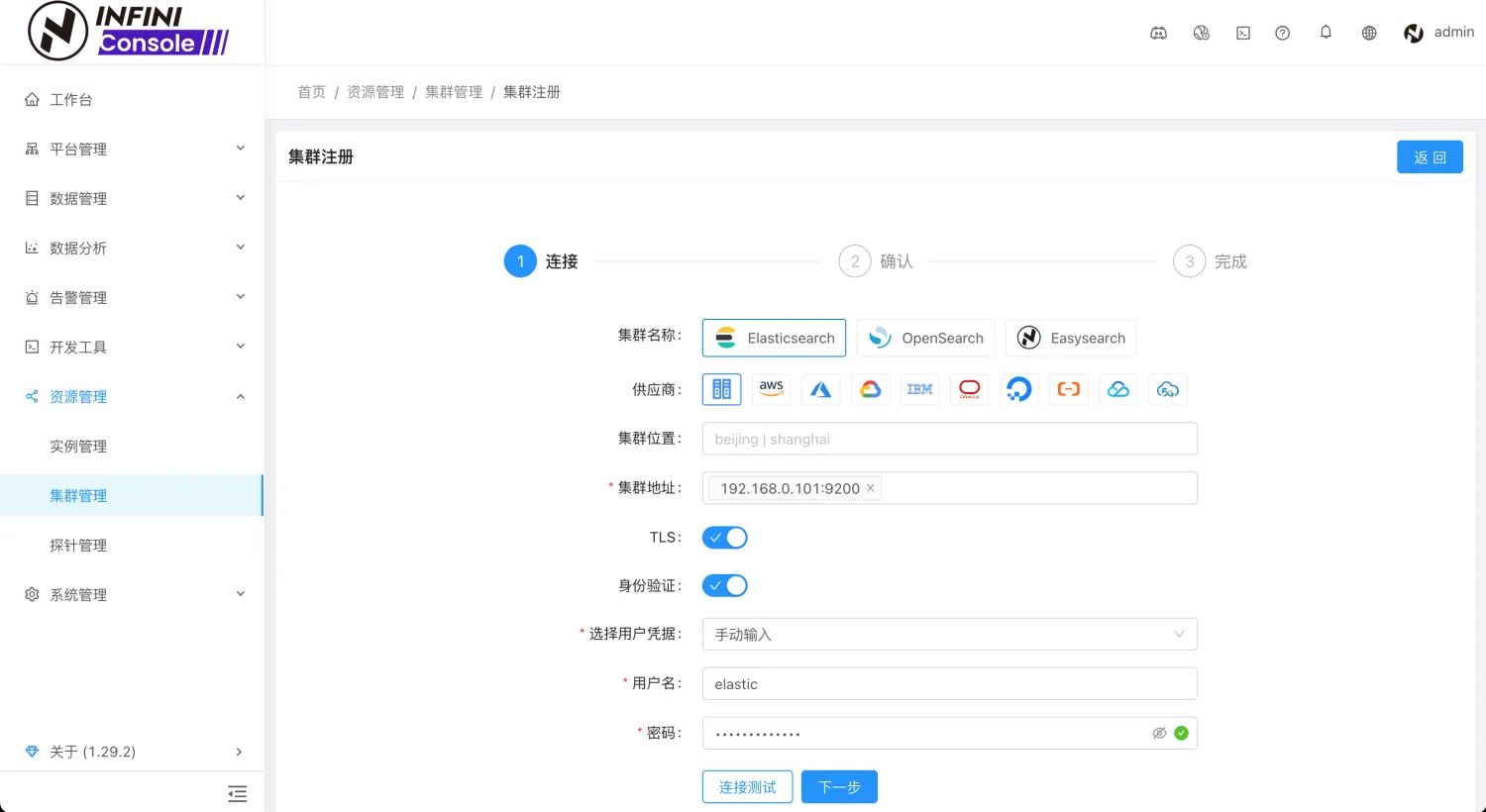

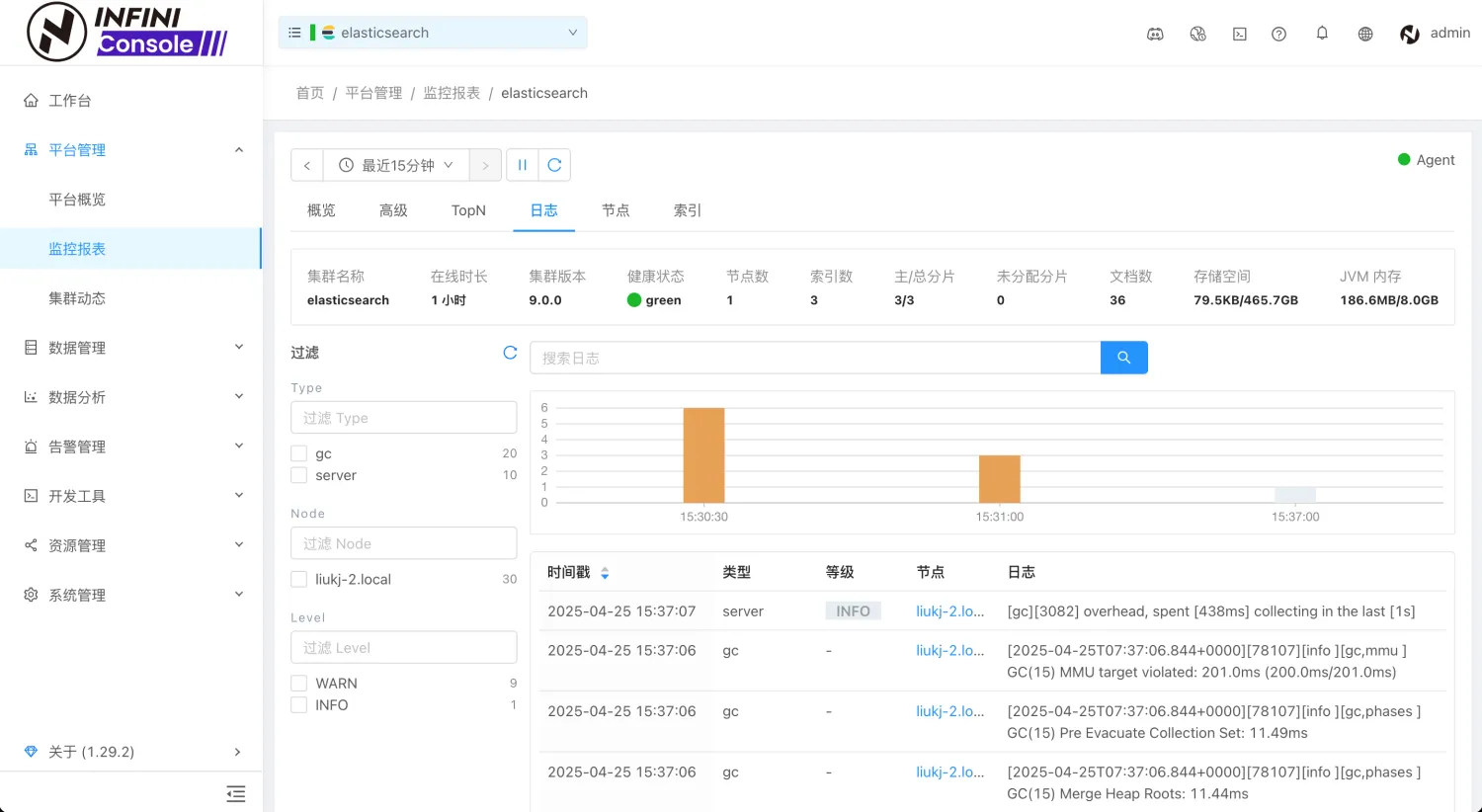

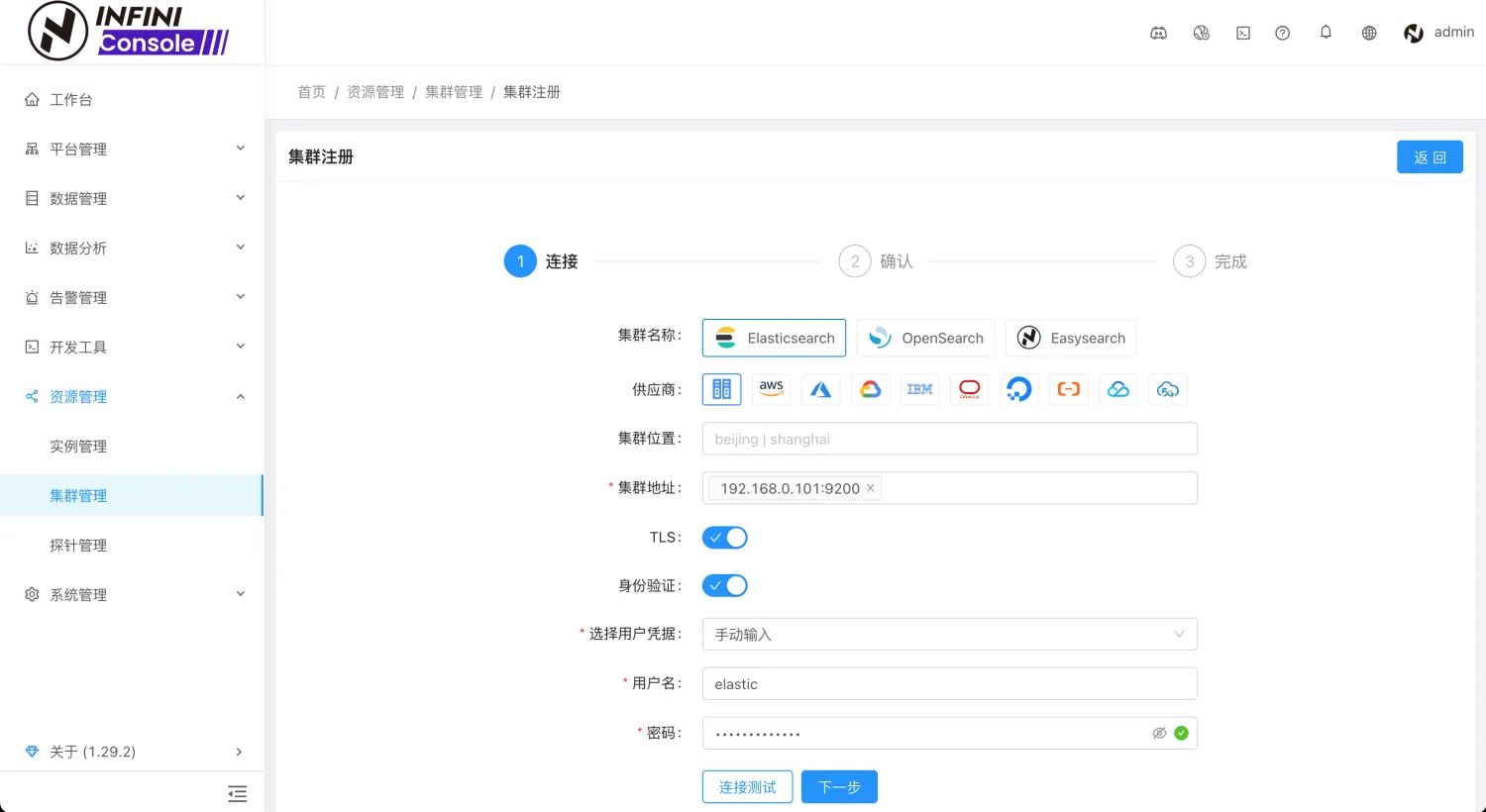

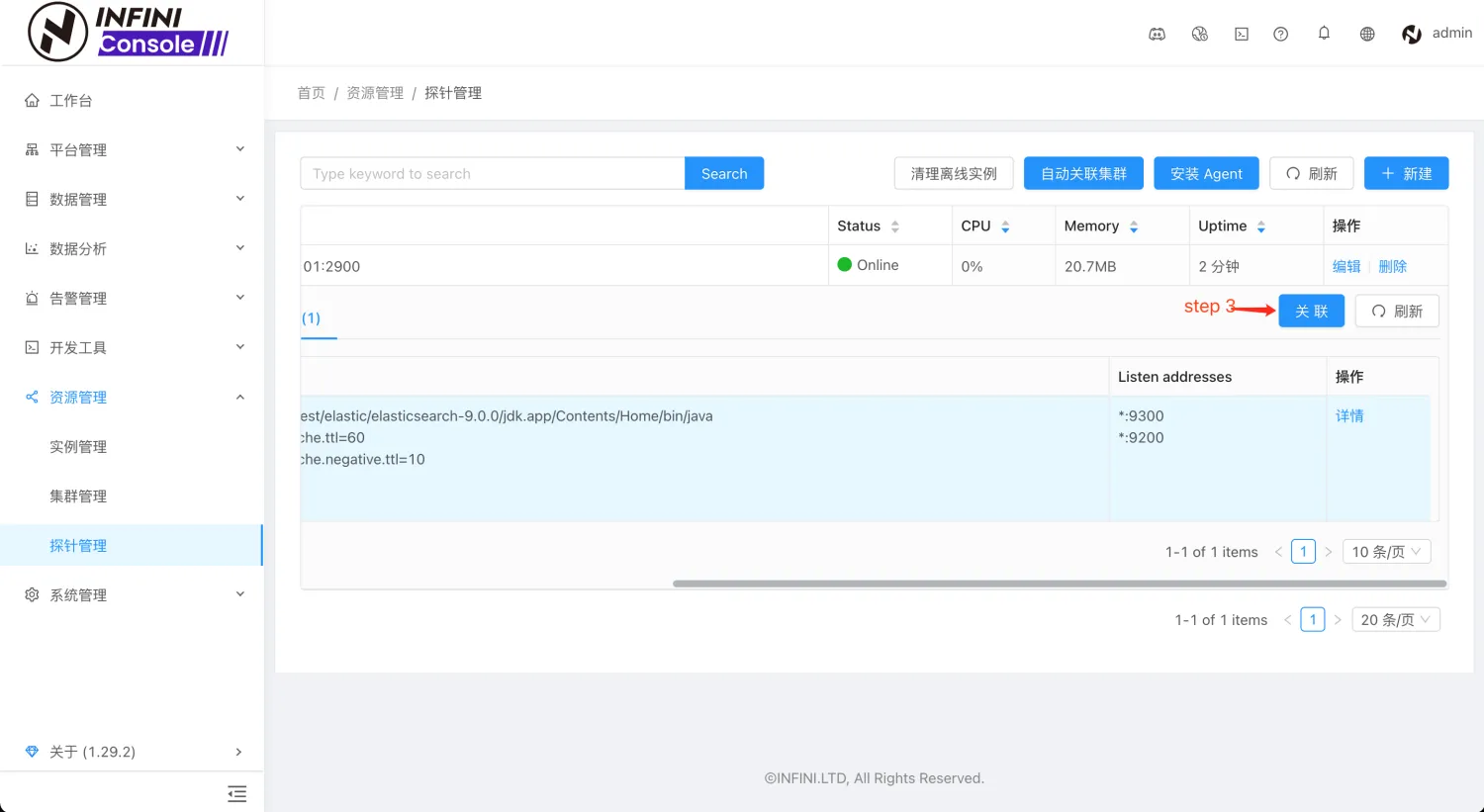

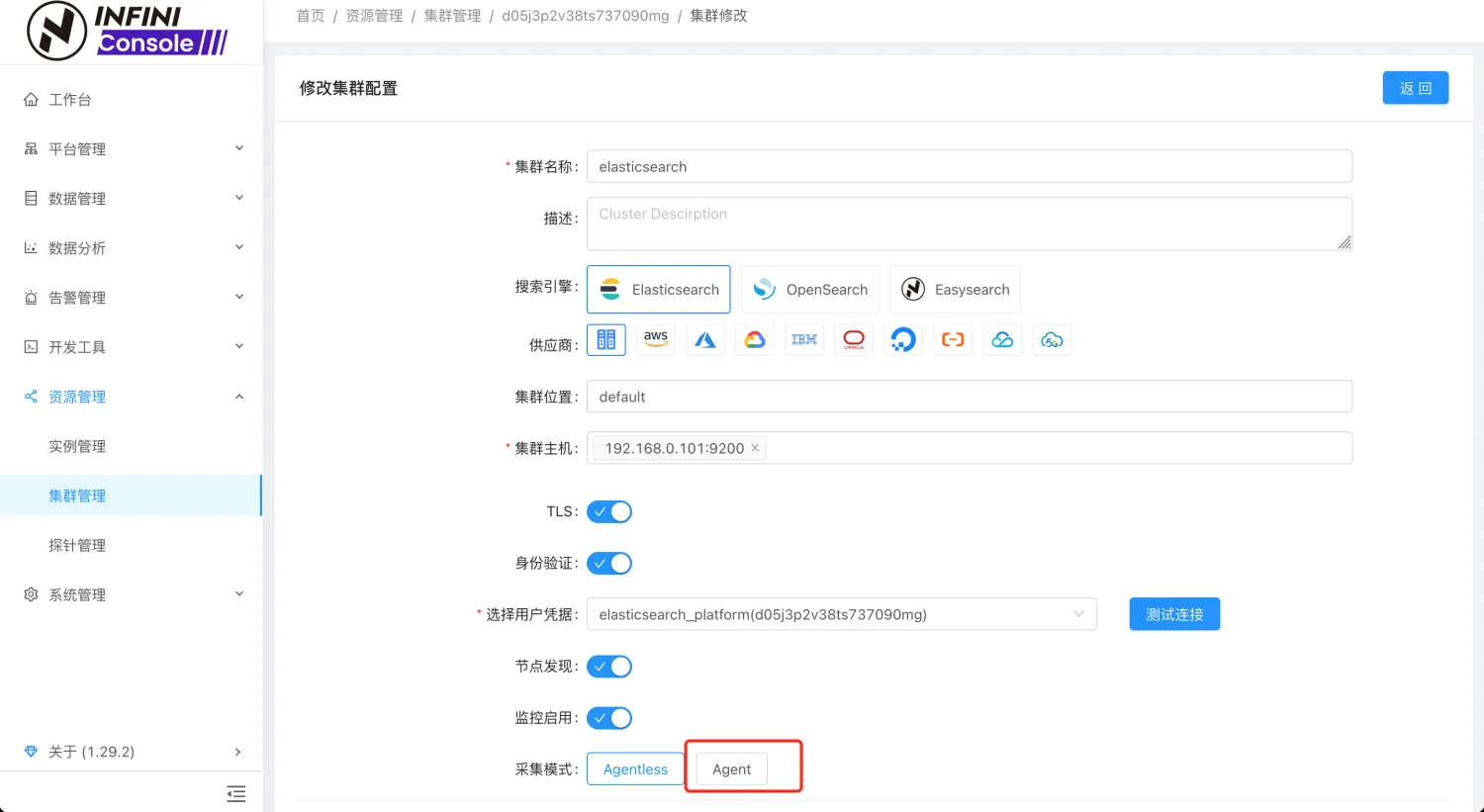

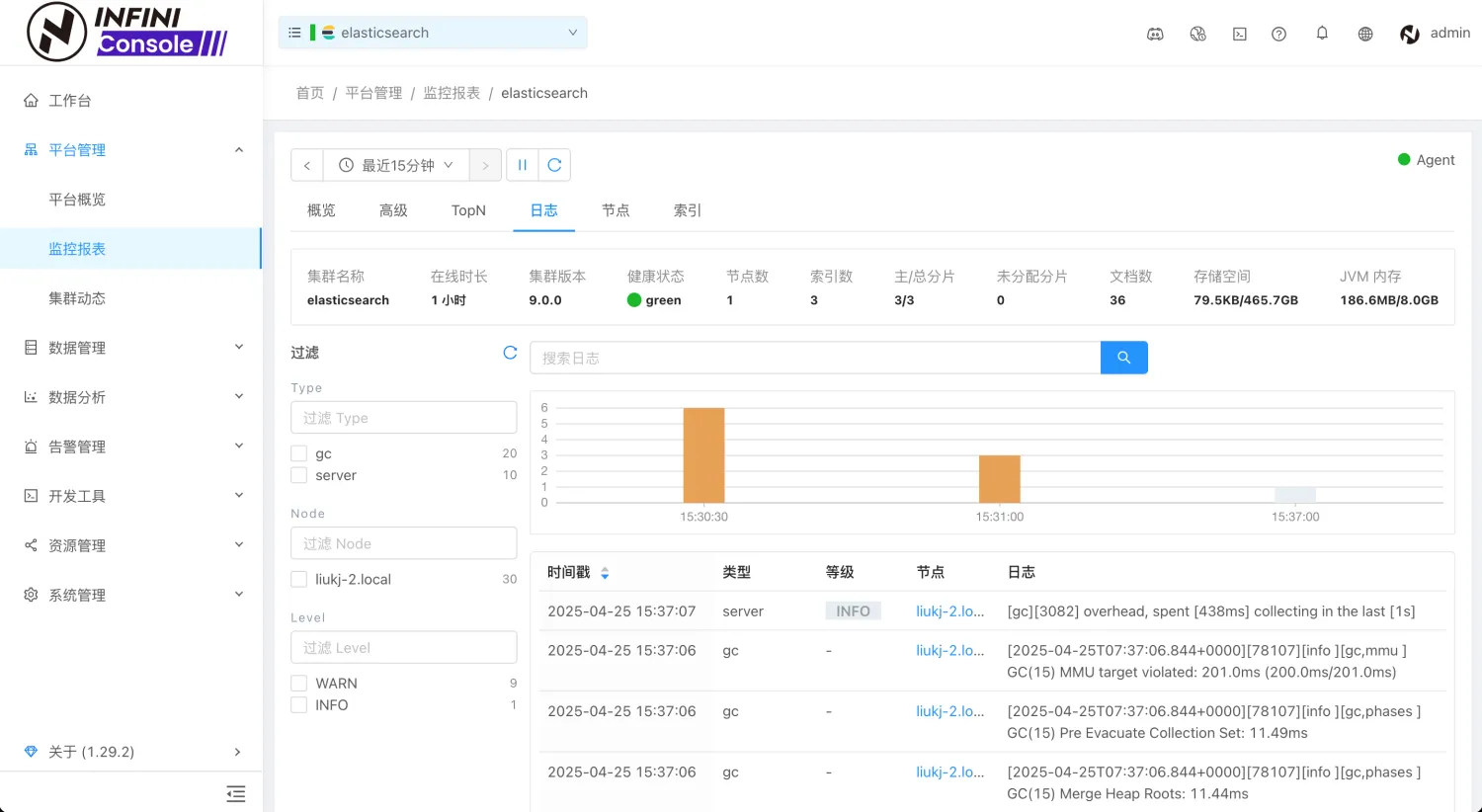

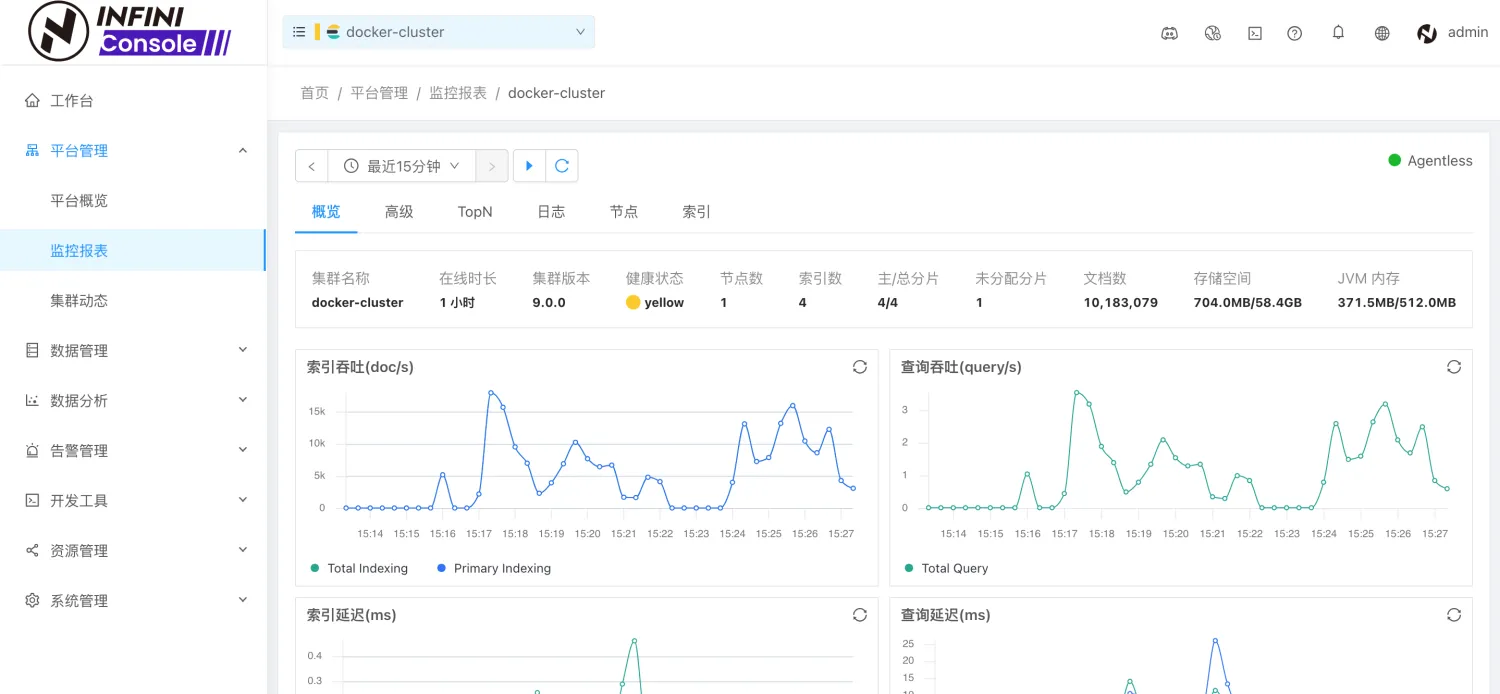

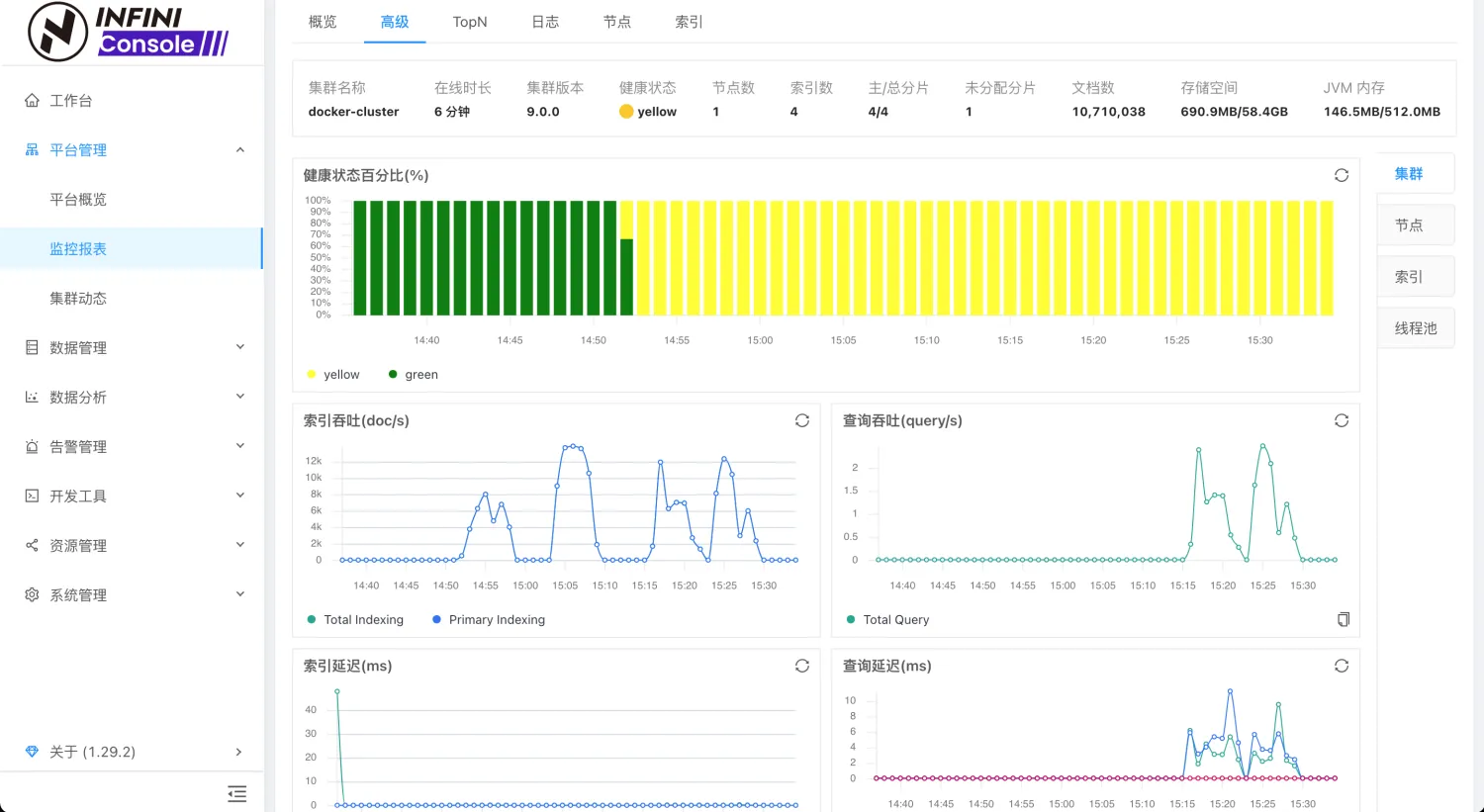

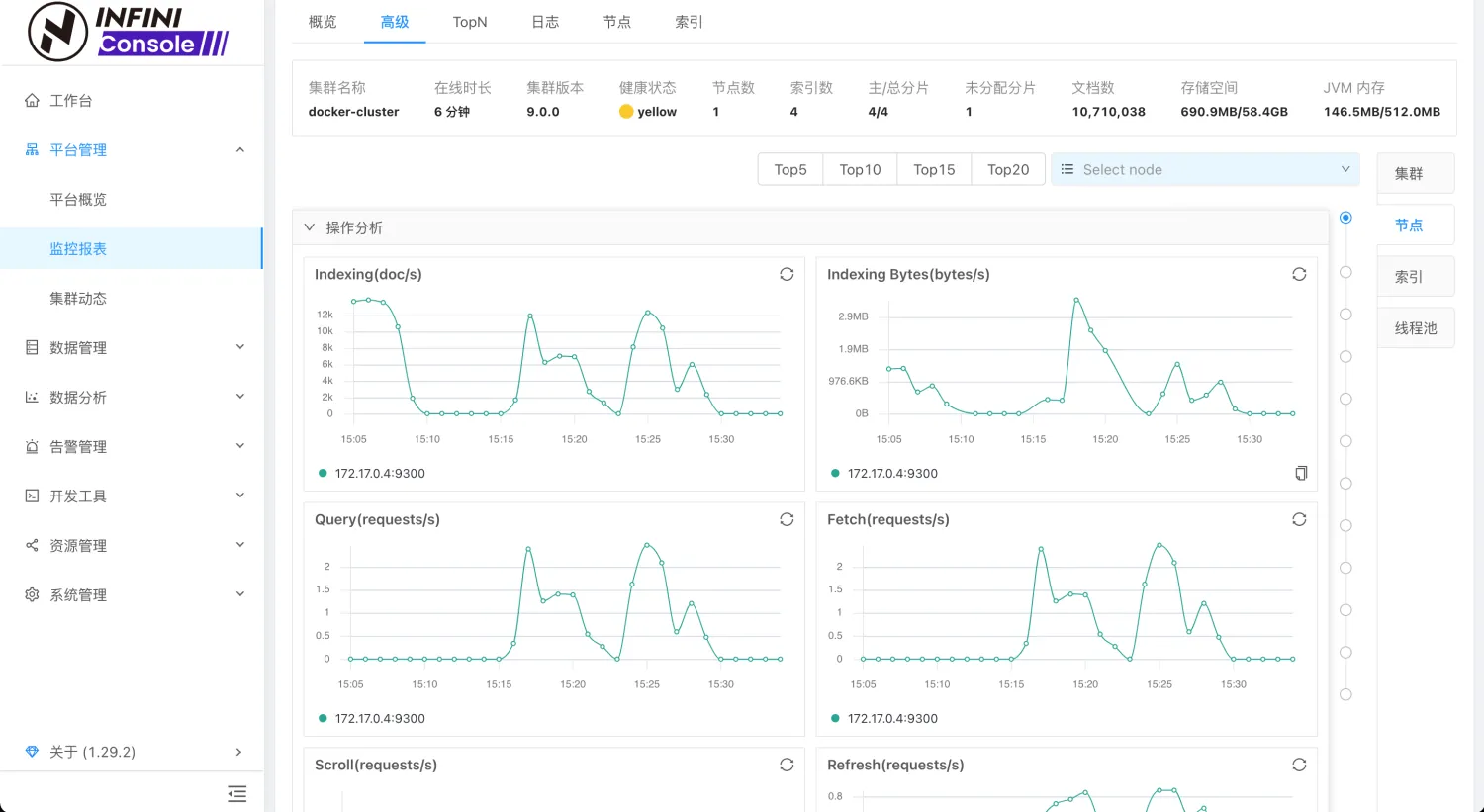

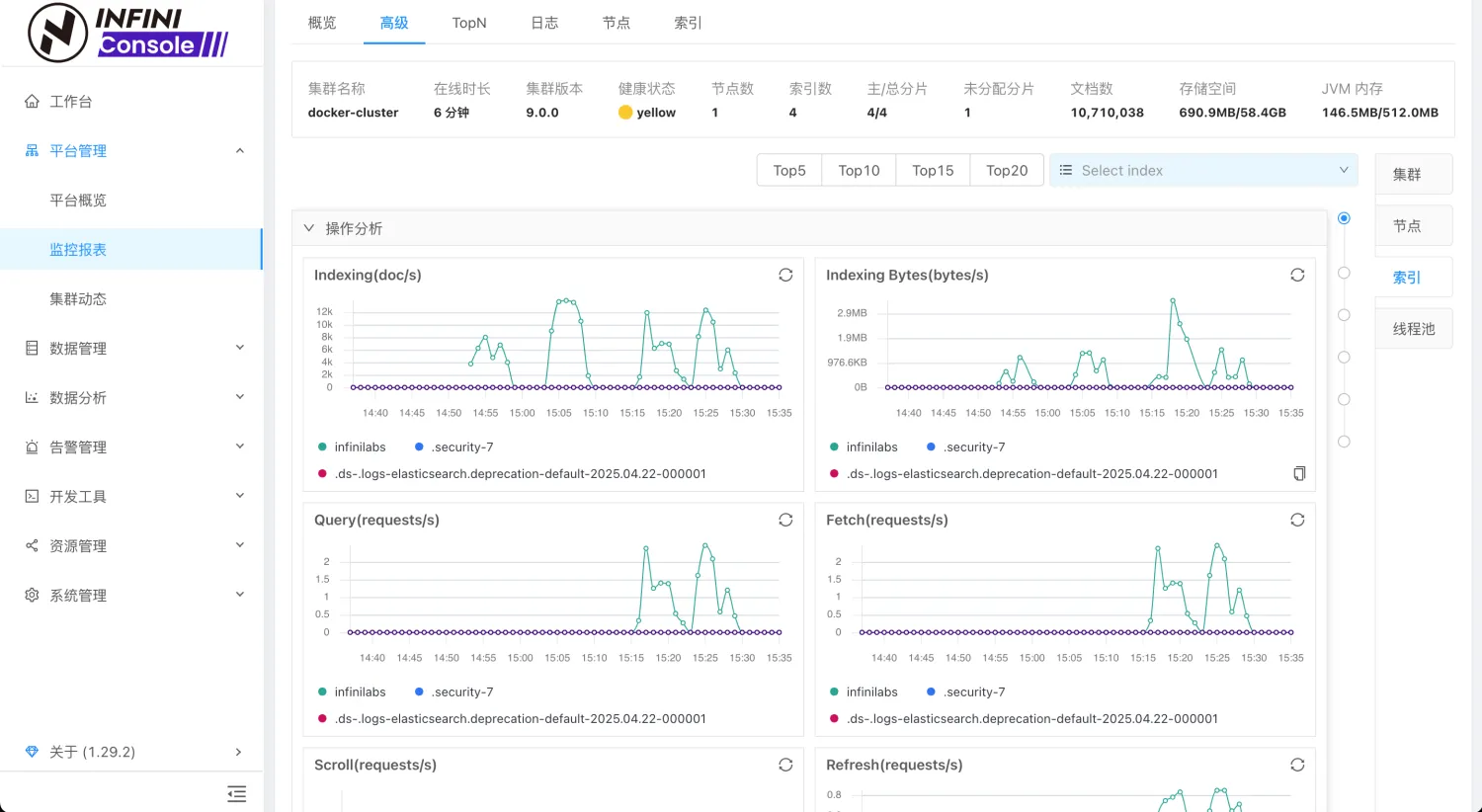

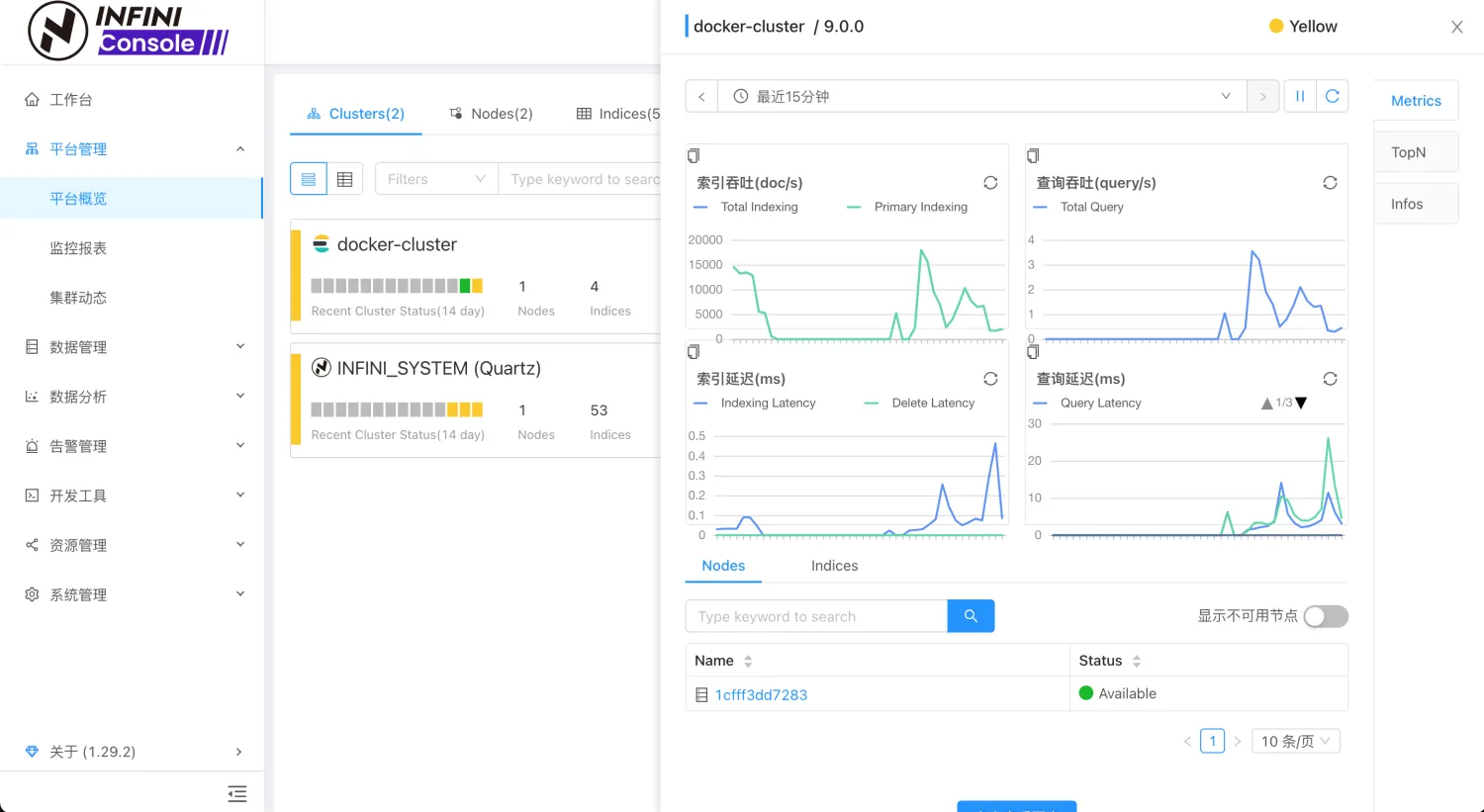

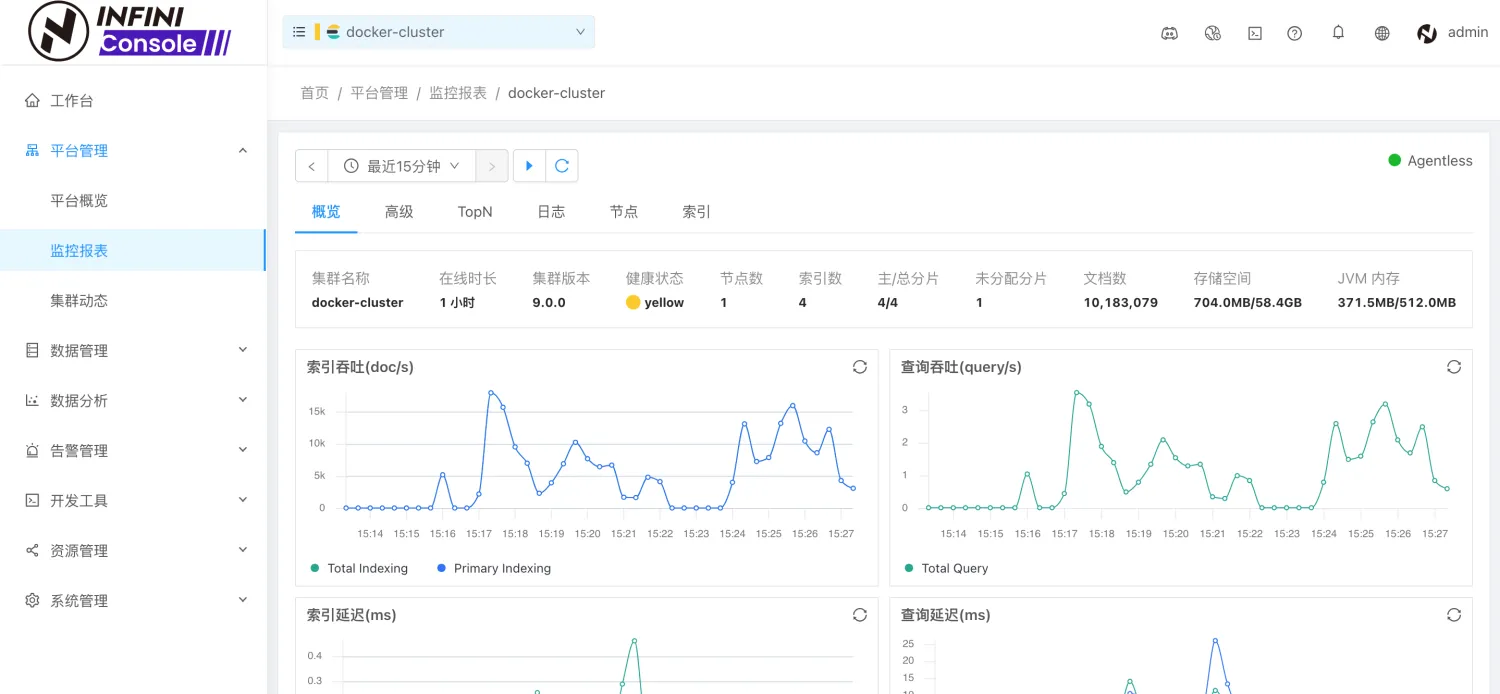

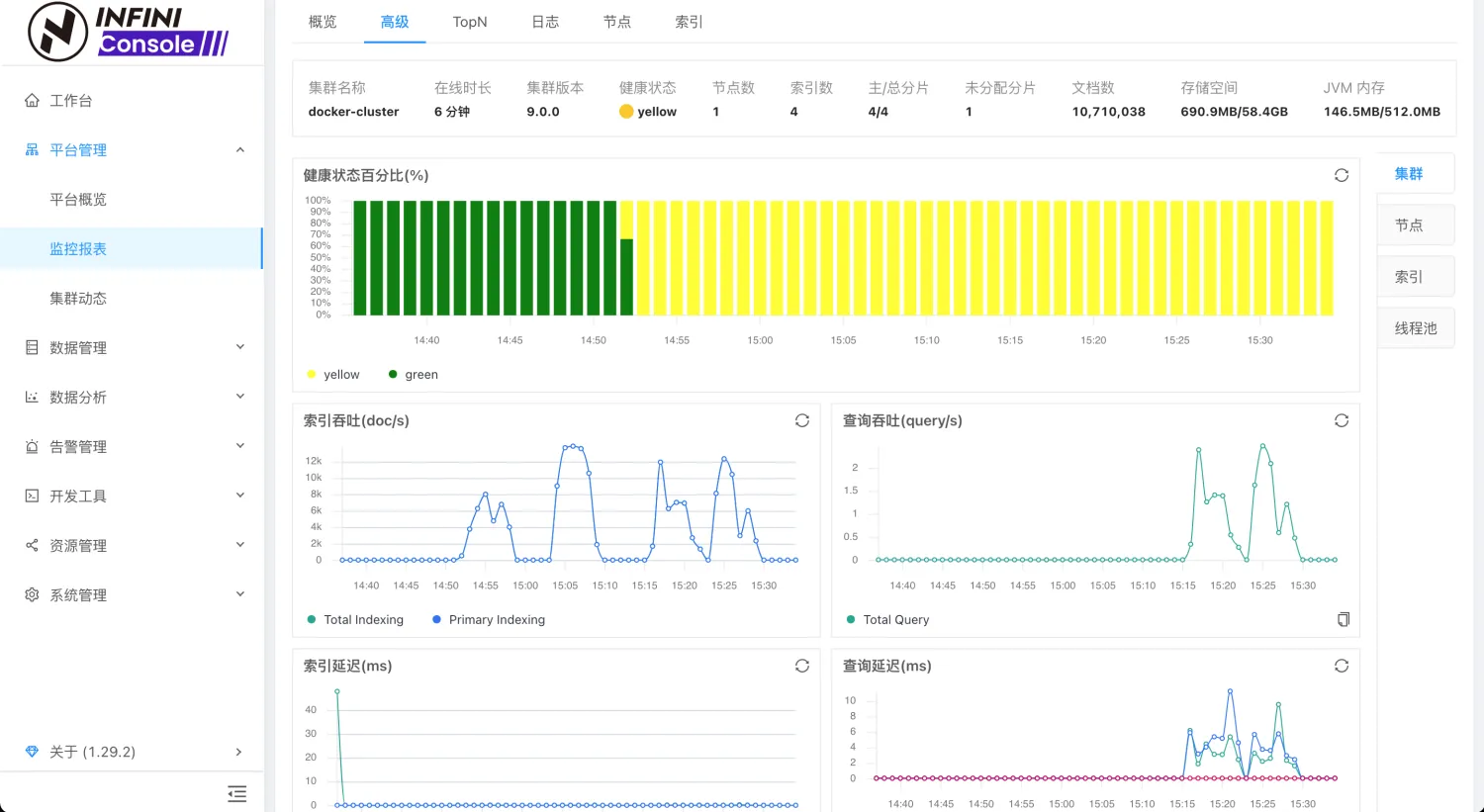

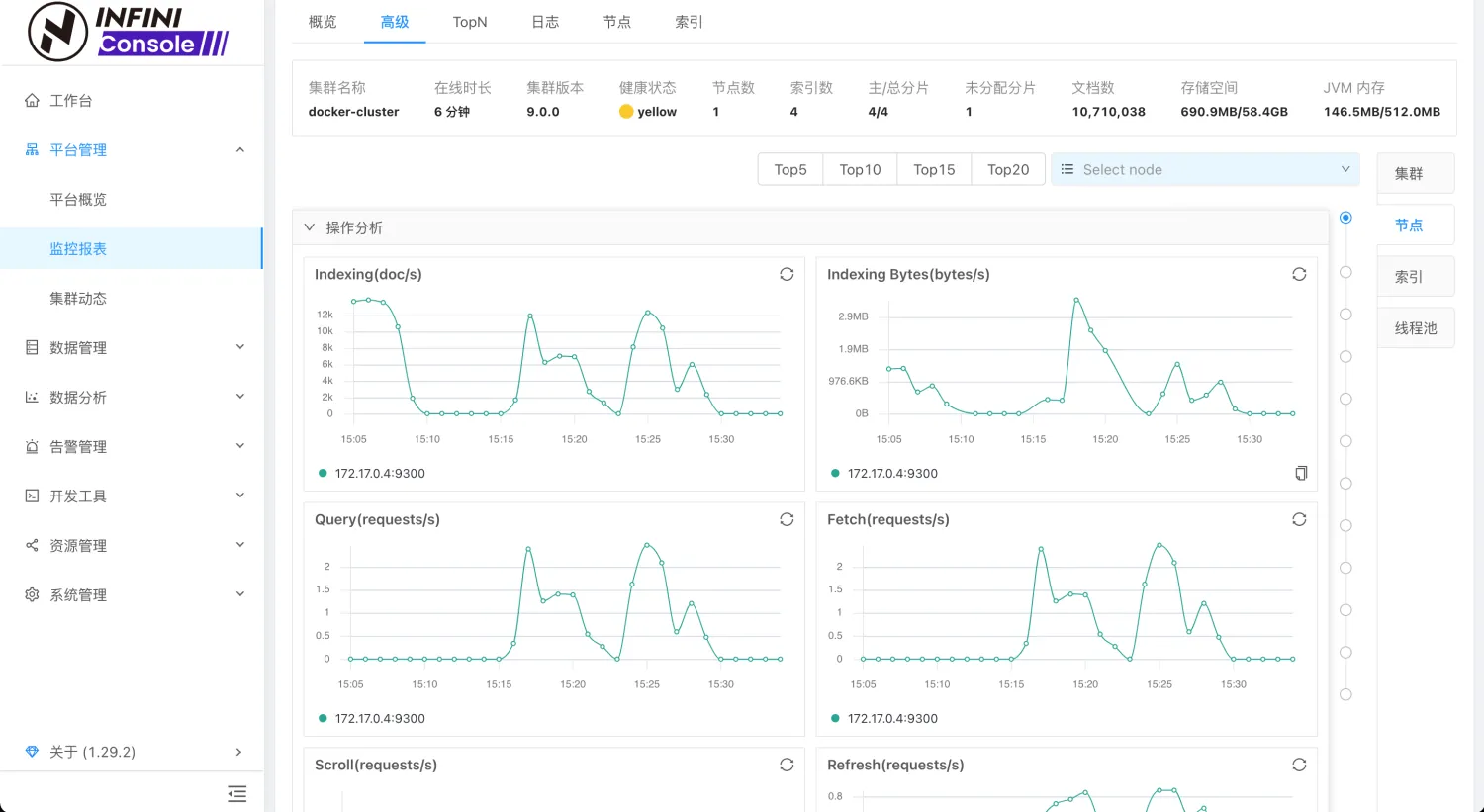

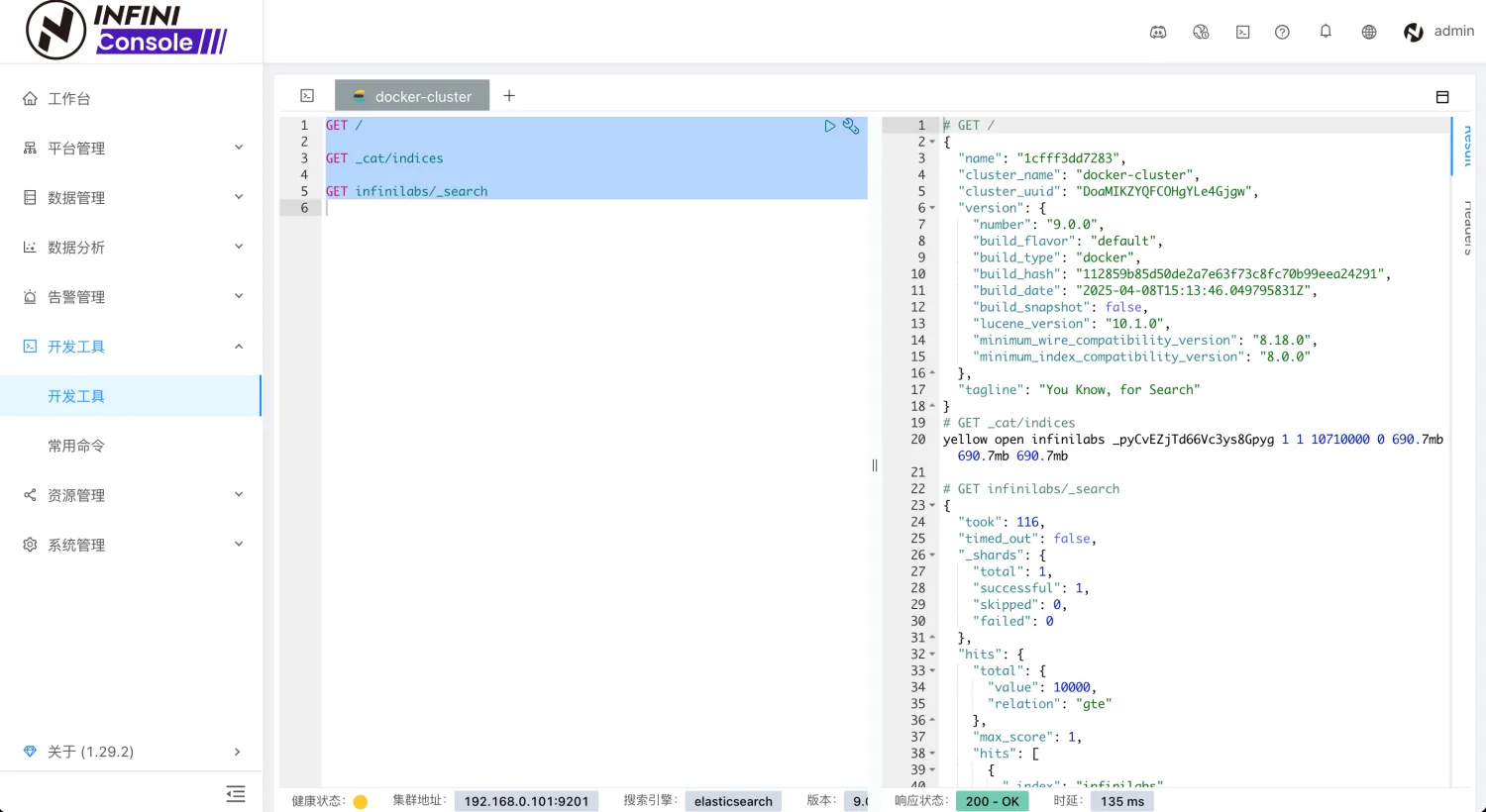

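A previous blog post showed how to migrate Elasticsearch data to an Easysearch cluster with Reindex. Today we introduce another approach: migrating the data with INFINI Gateway.

Test environment

| Software | Version |
|---|---|
| Easysearch | 1.12.0 |
| Elasticsearch | 7.17.29 |
| INFINI Gateway | 1.29.2 |

Migration steps

- Select the indices to migrate

- Create the index mappings and settings on the target cluster

- Prepare the INFINI Gateway migration config

- Run INFINI Gateway to migrate the data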

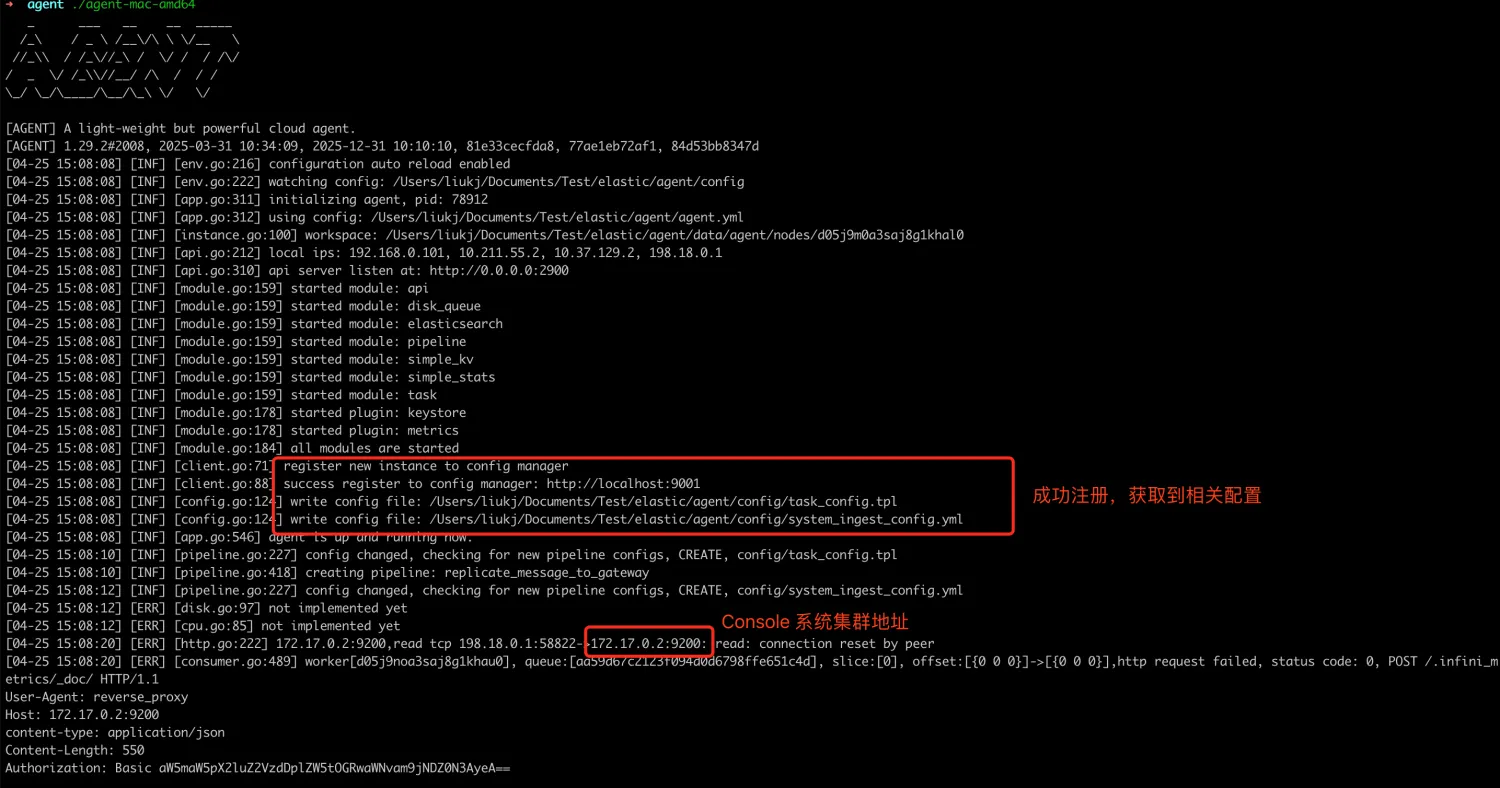

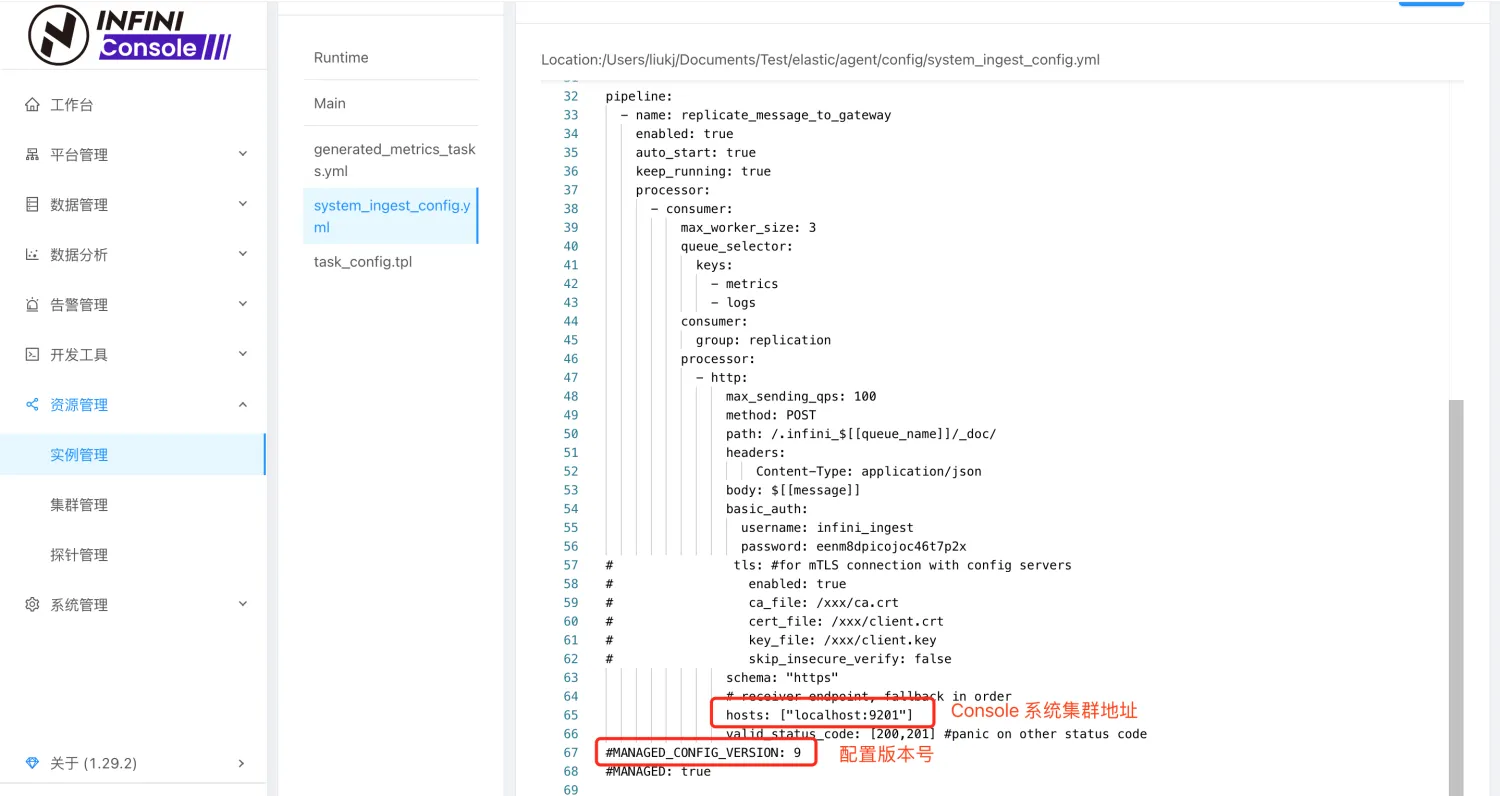

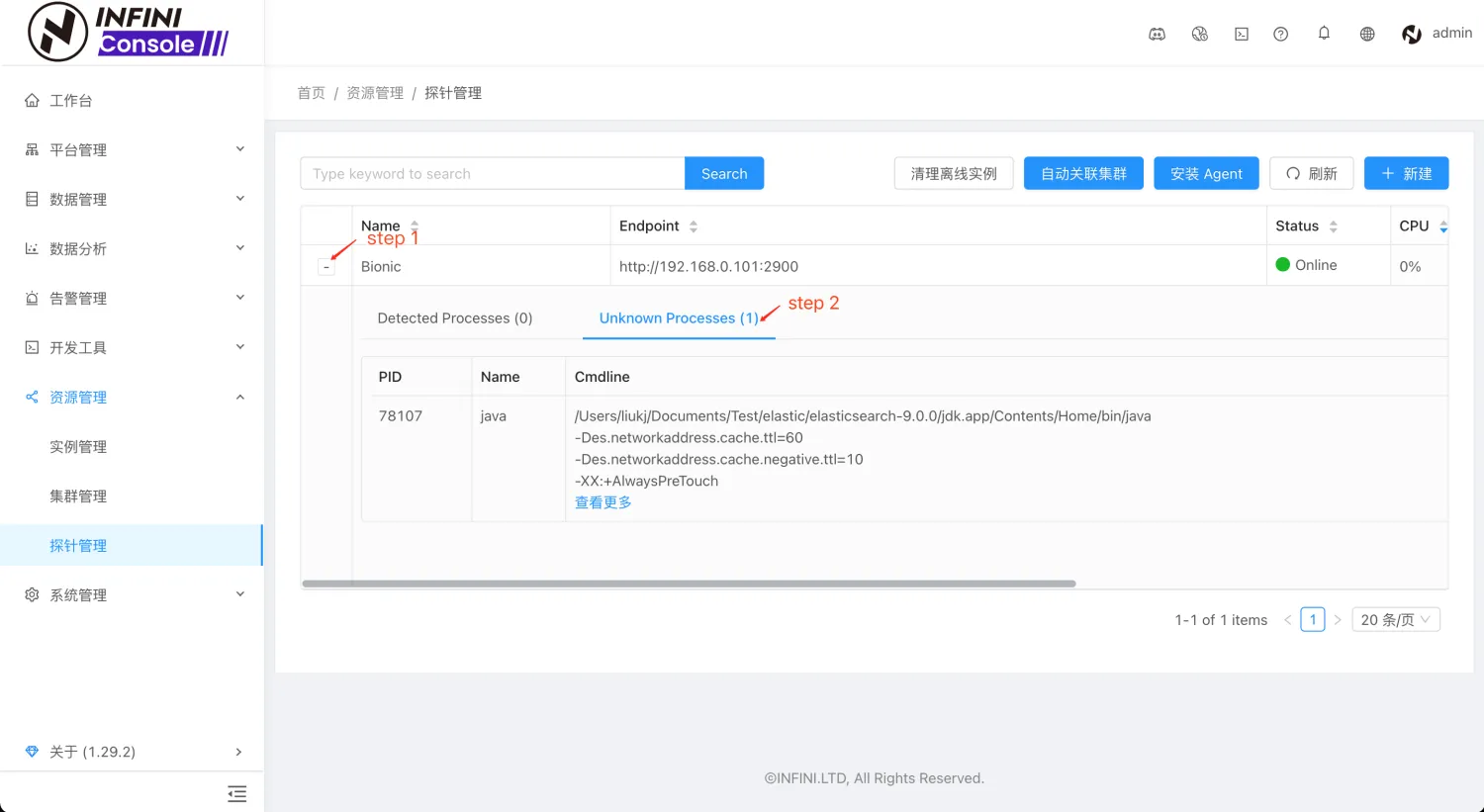

Migration in practice

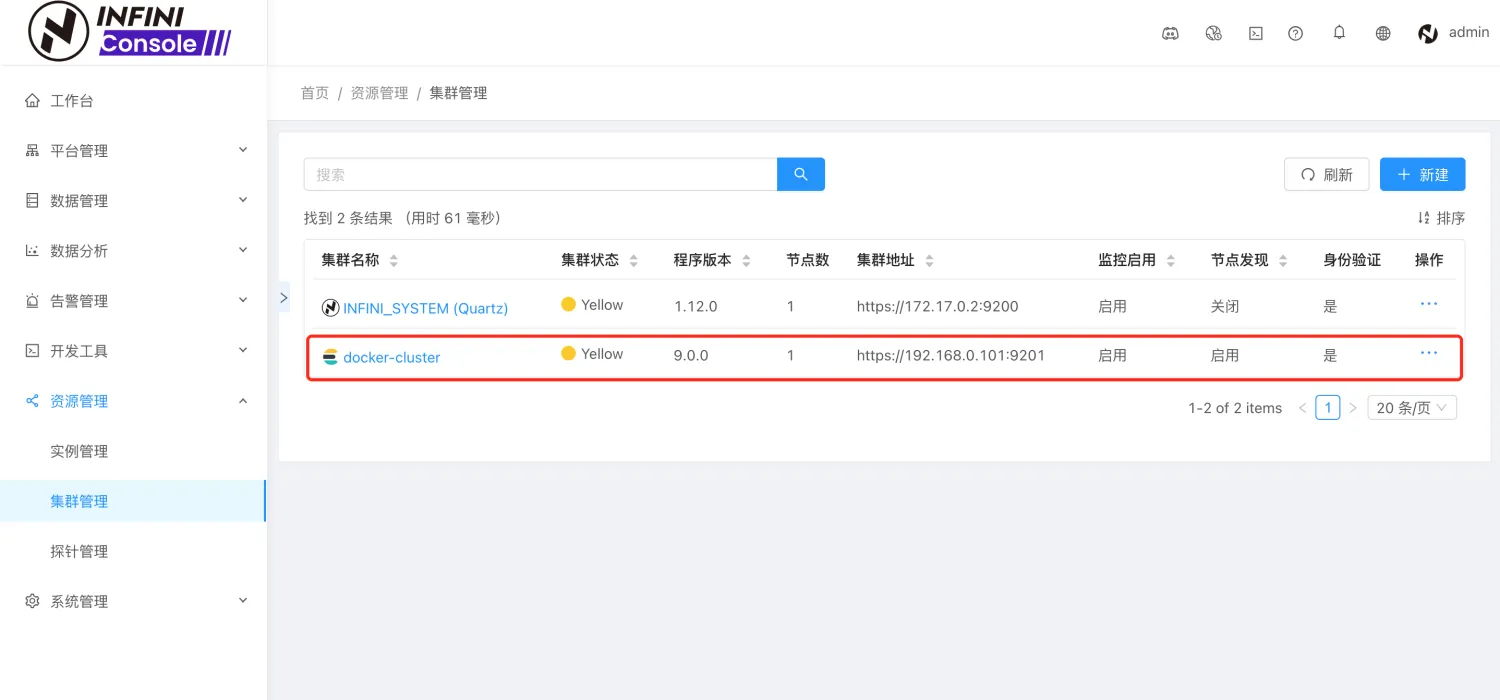

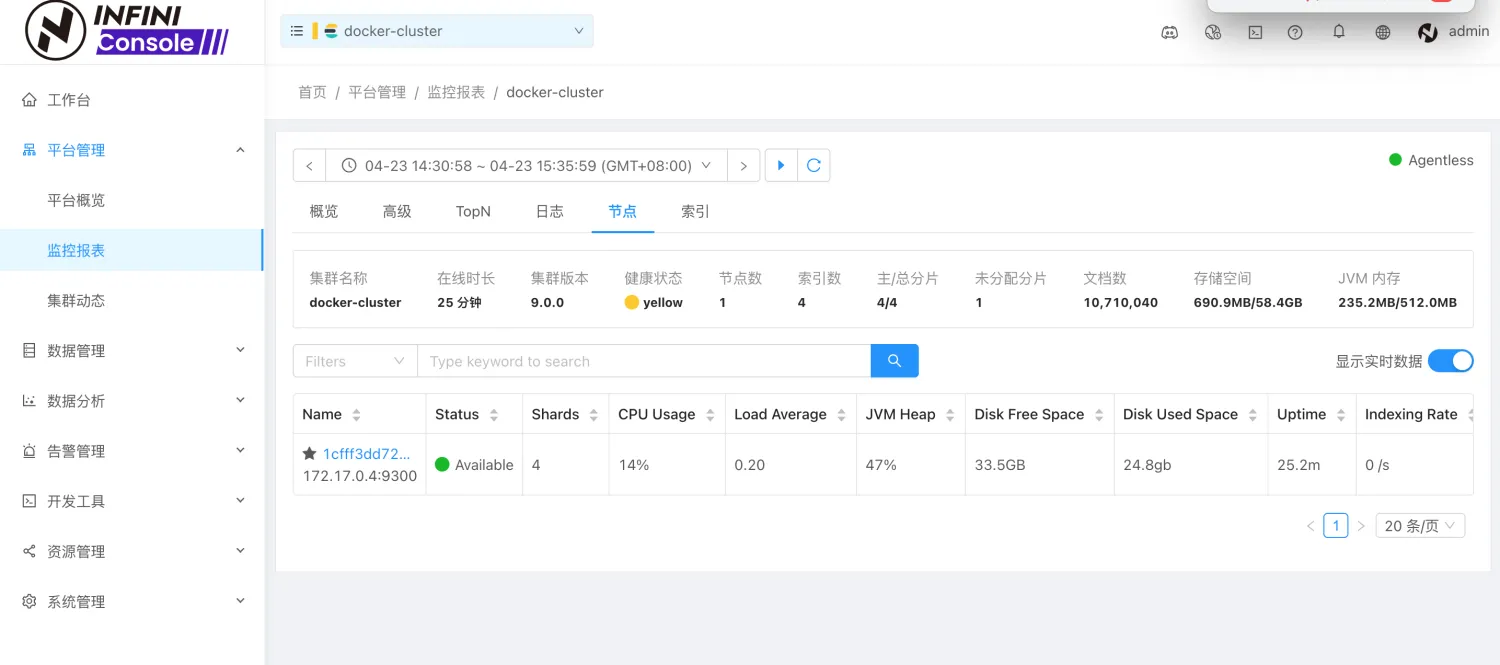

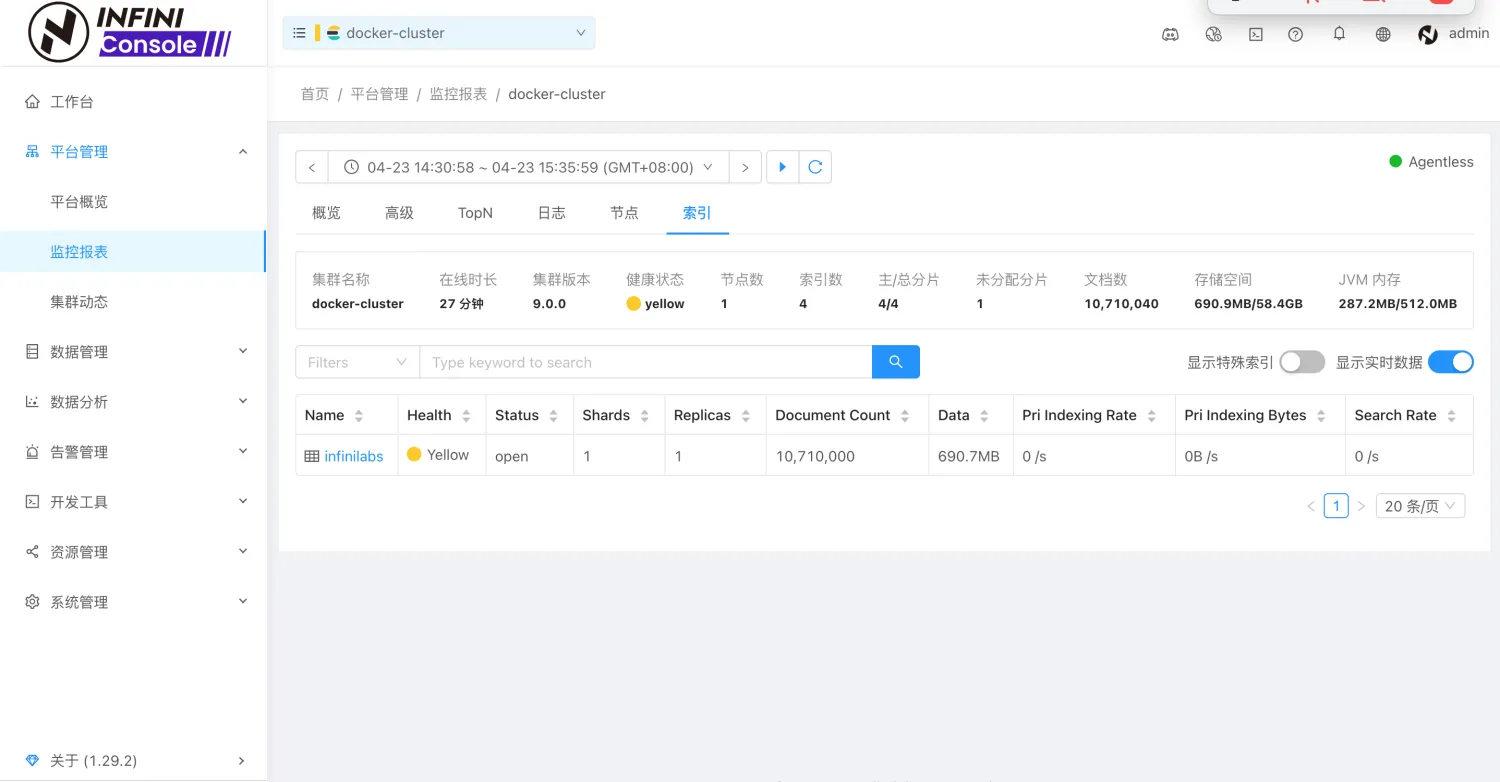

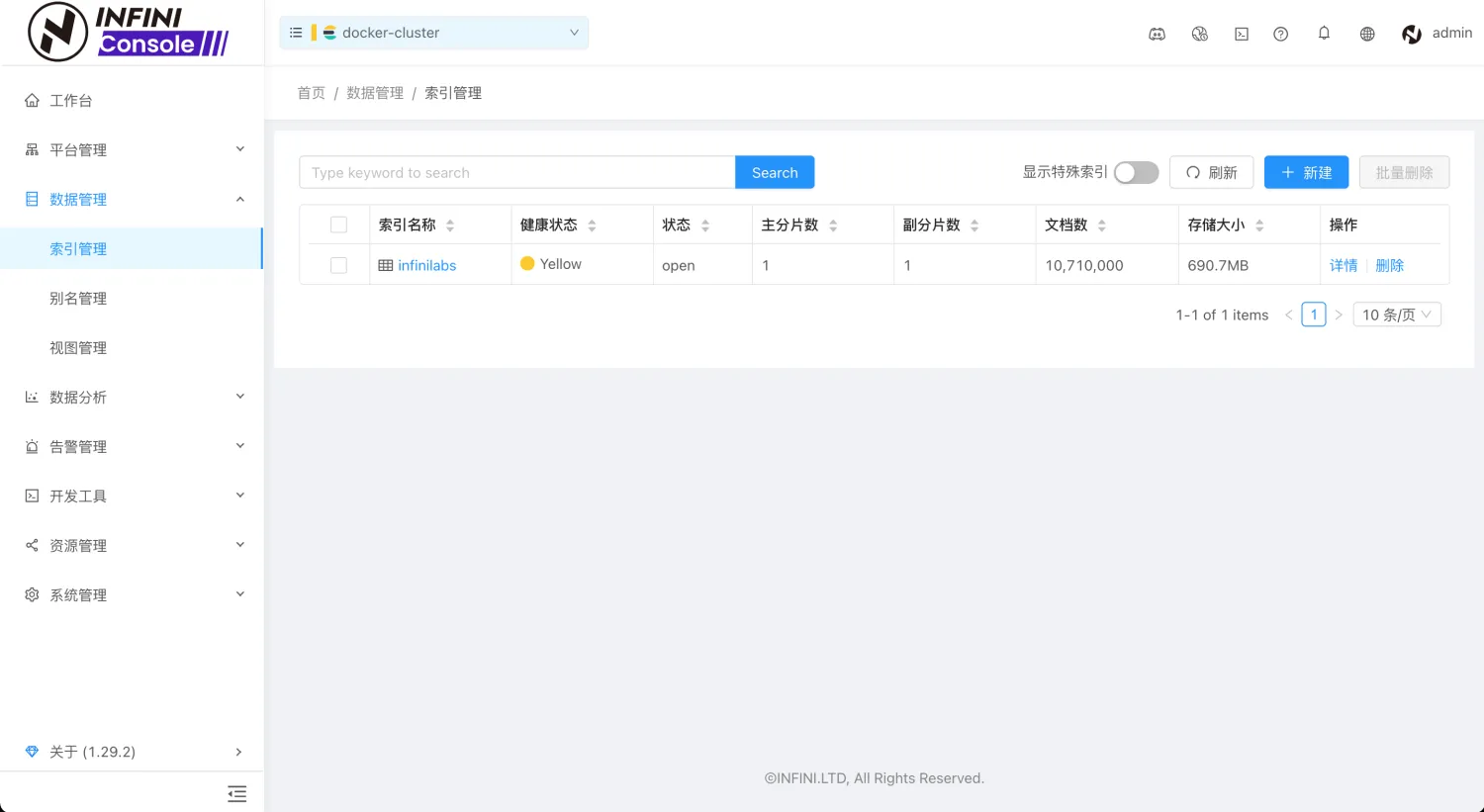

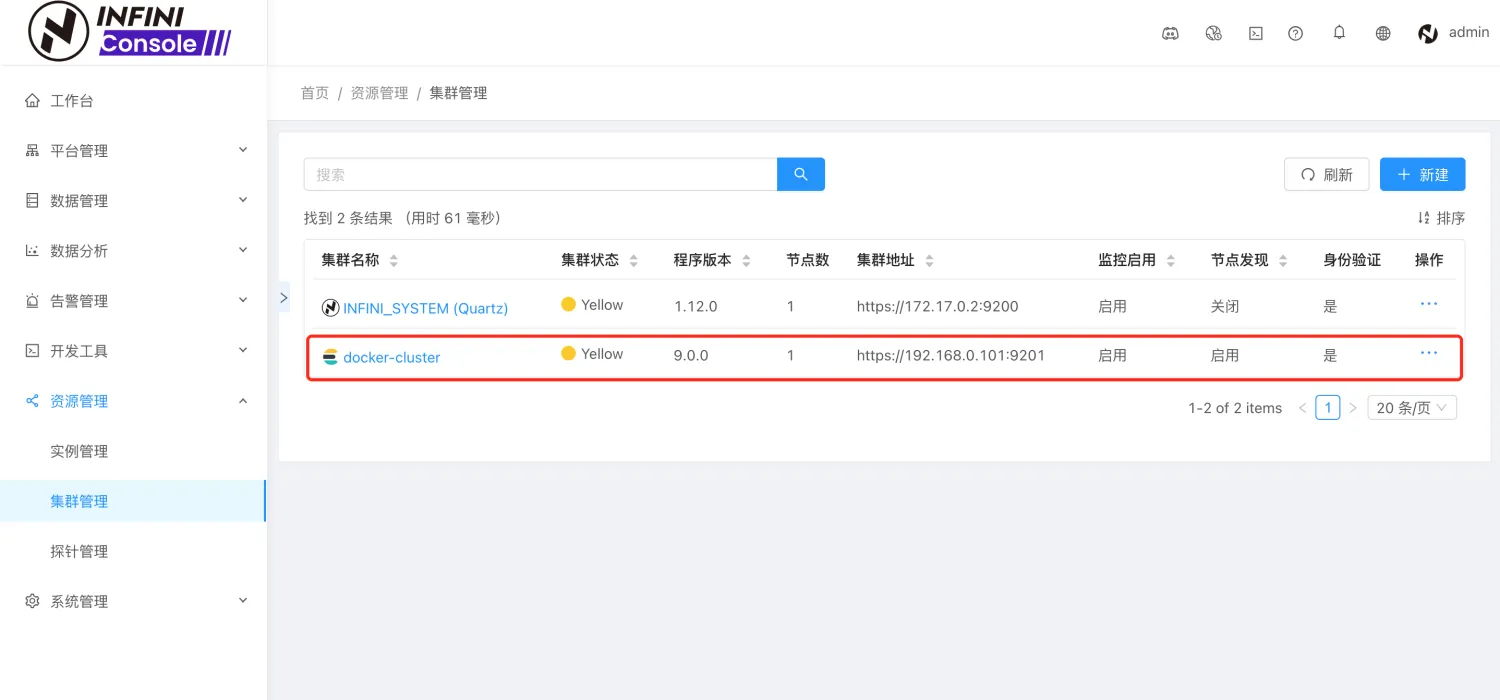

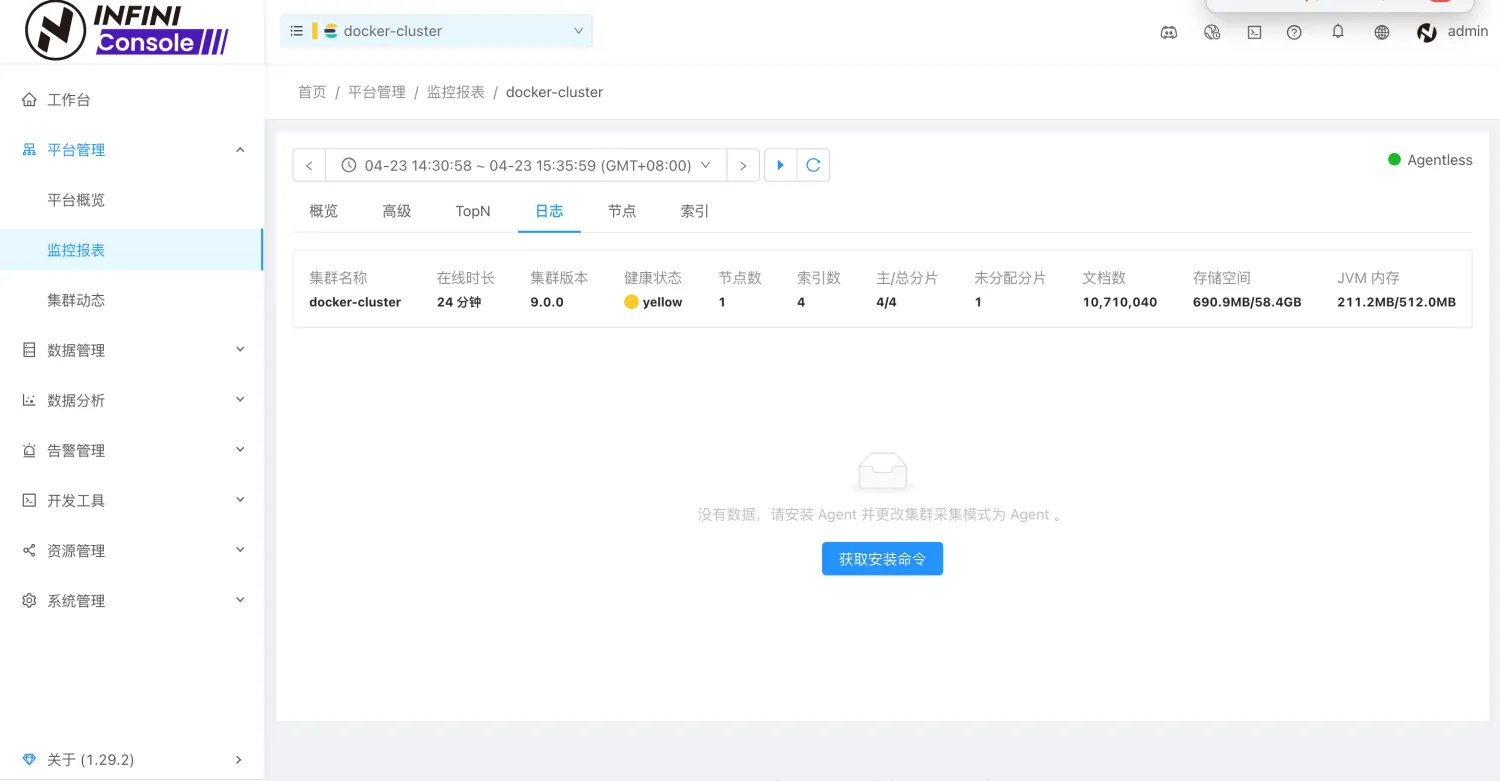

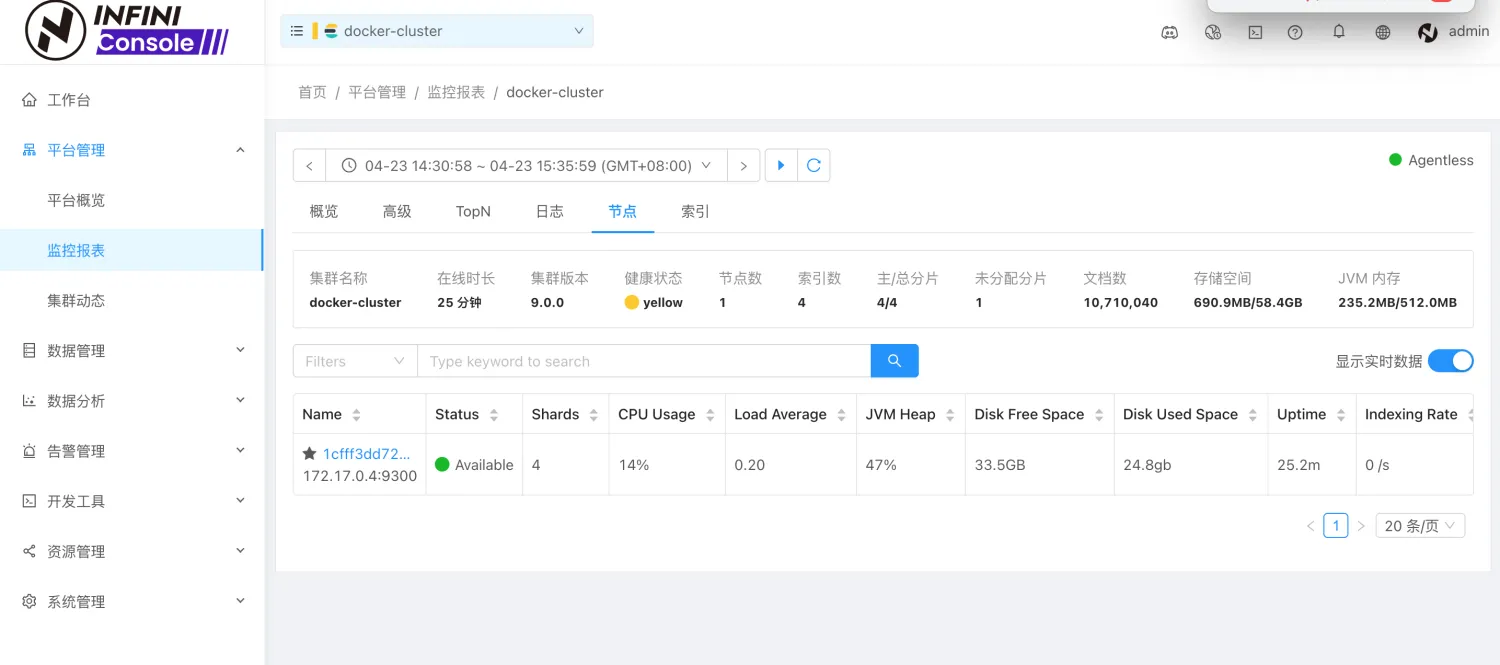

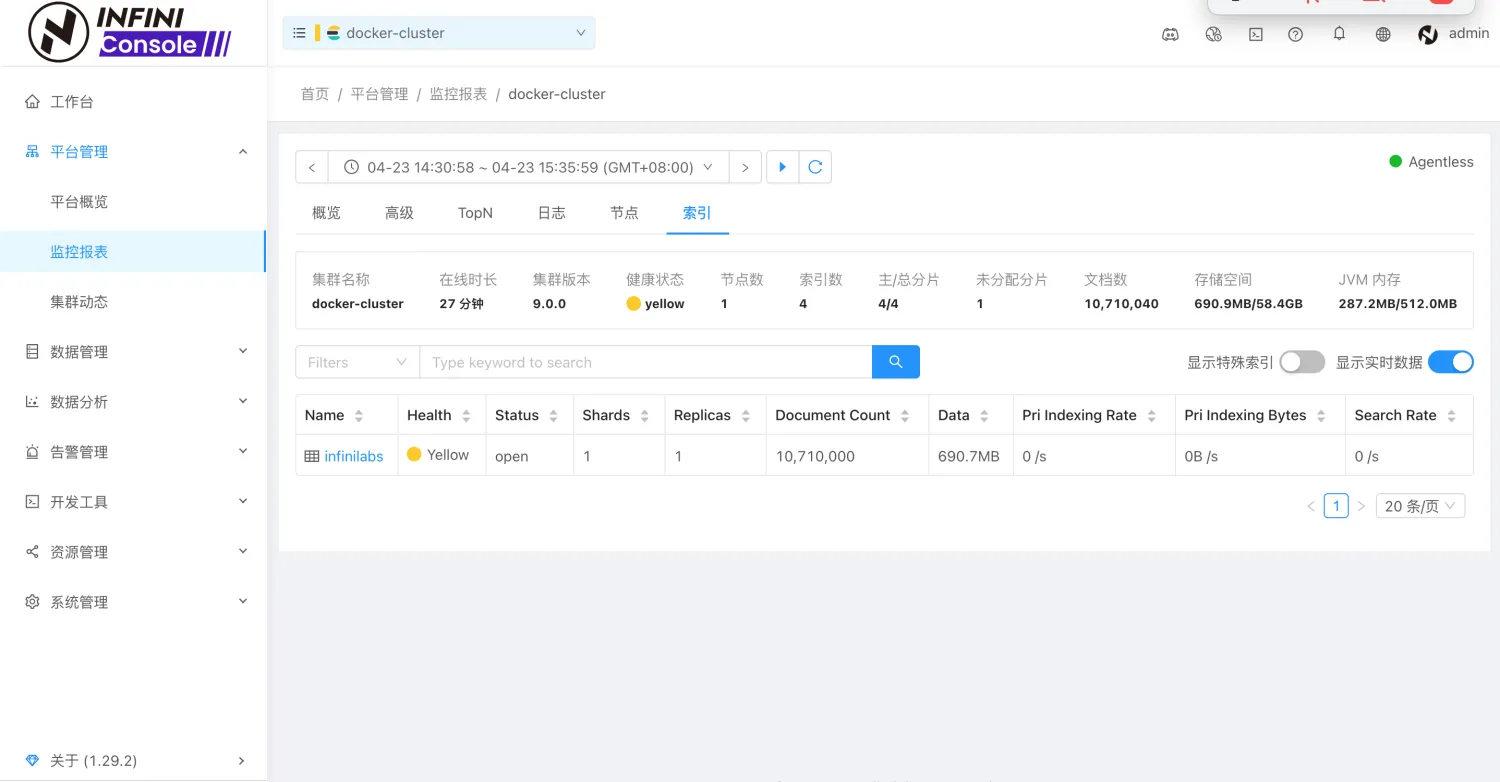

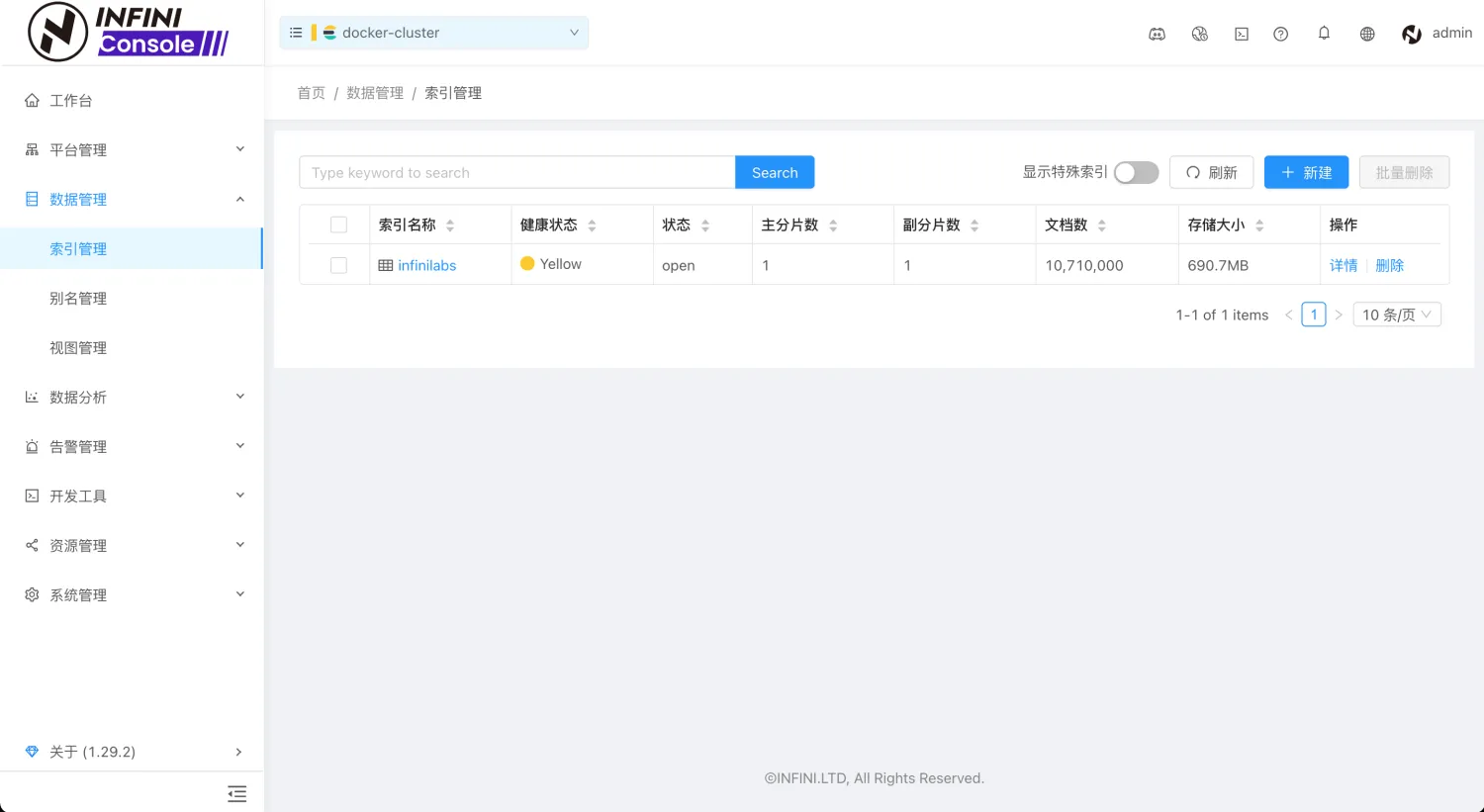

- Select the indices to migrate

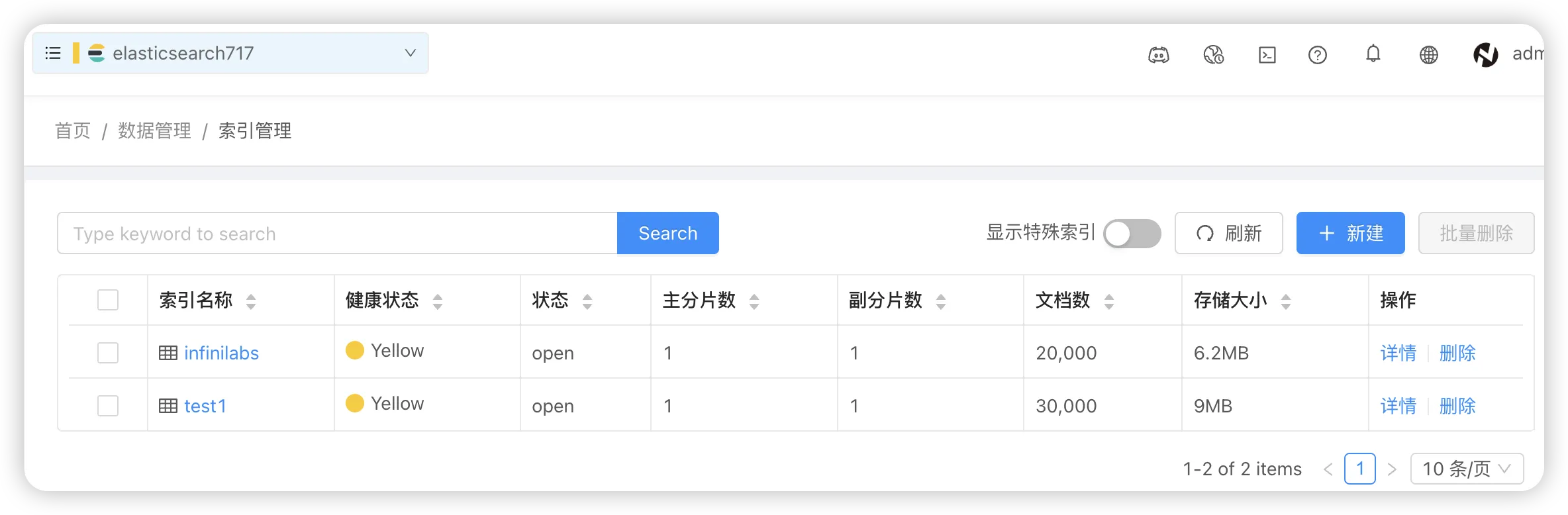

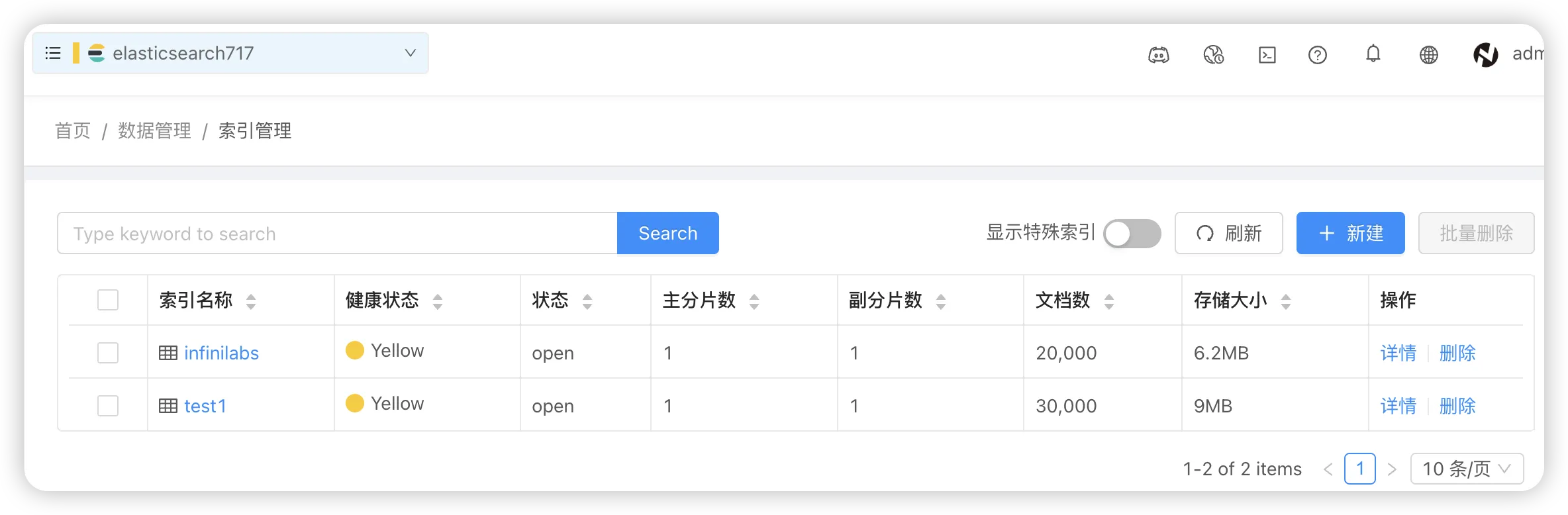

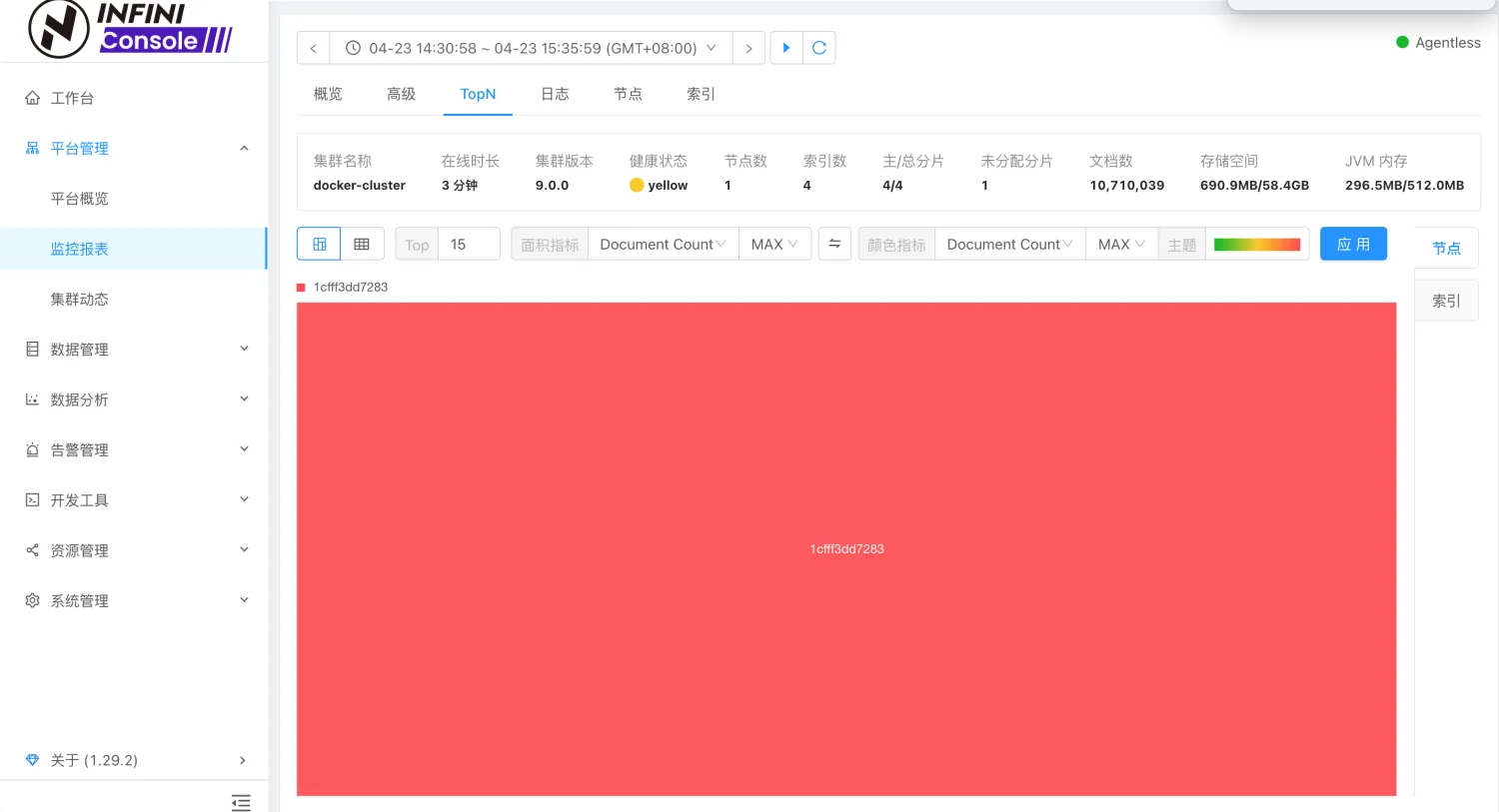

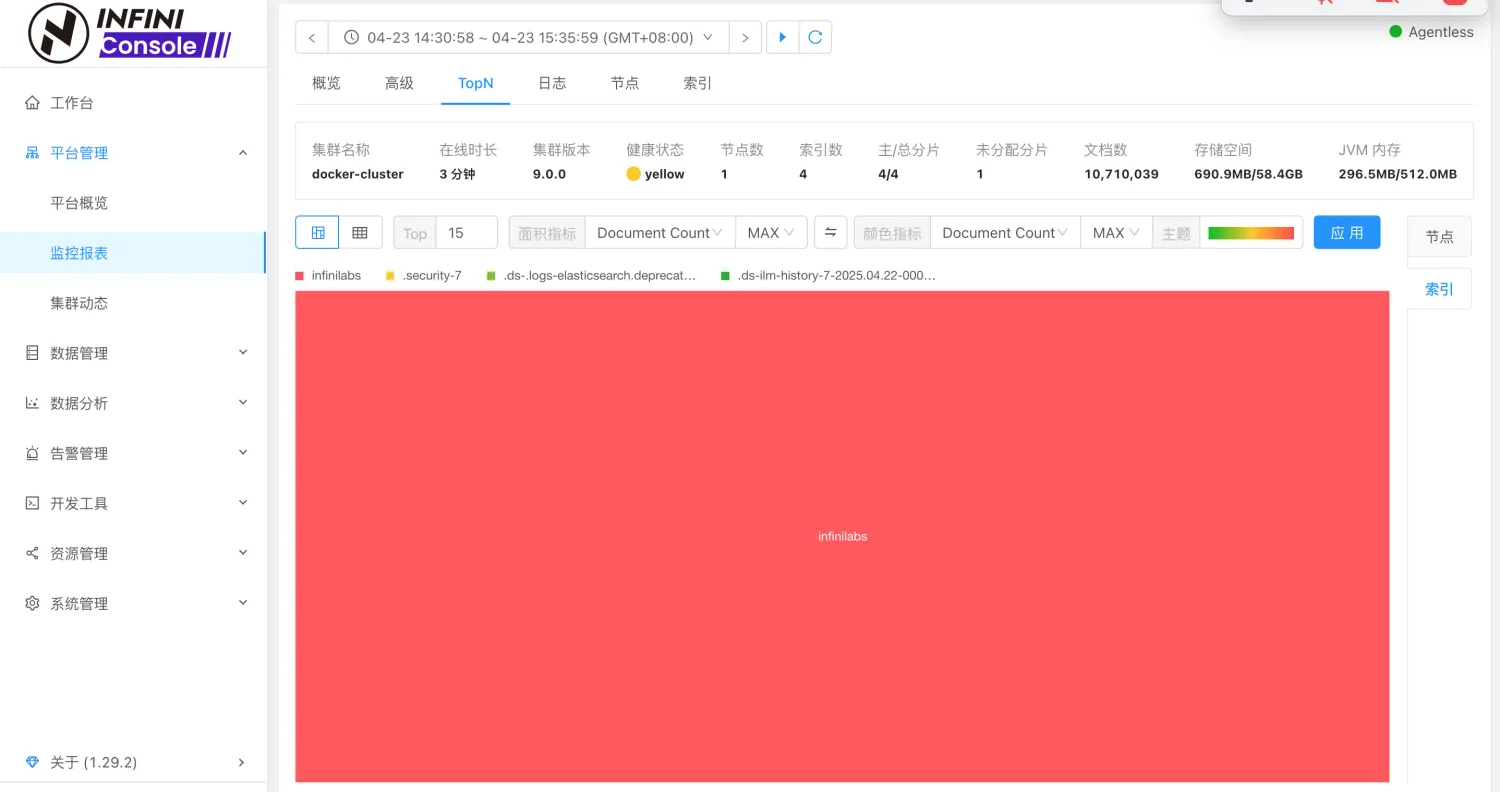

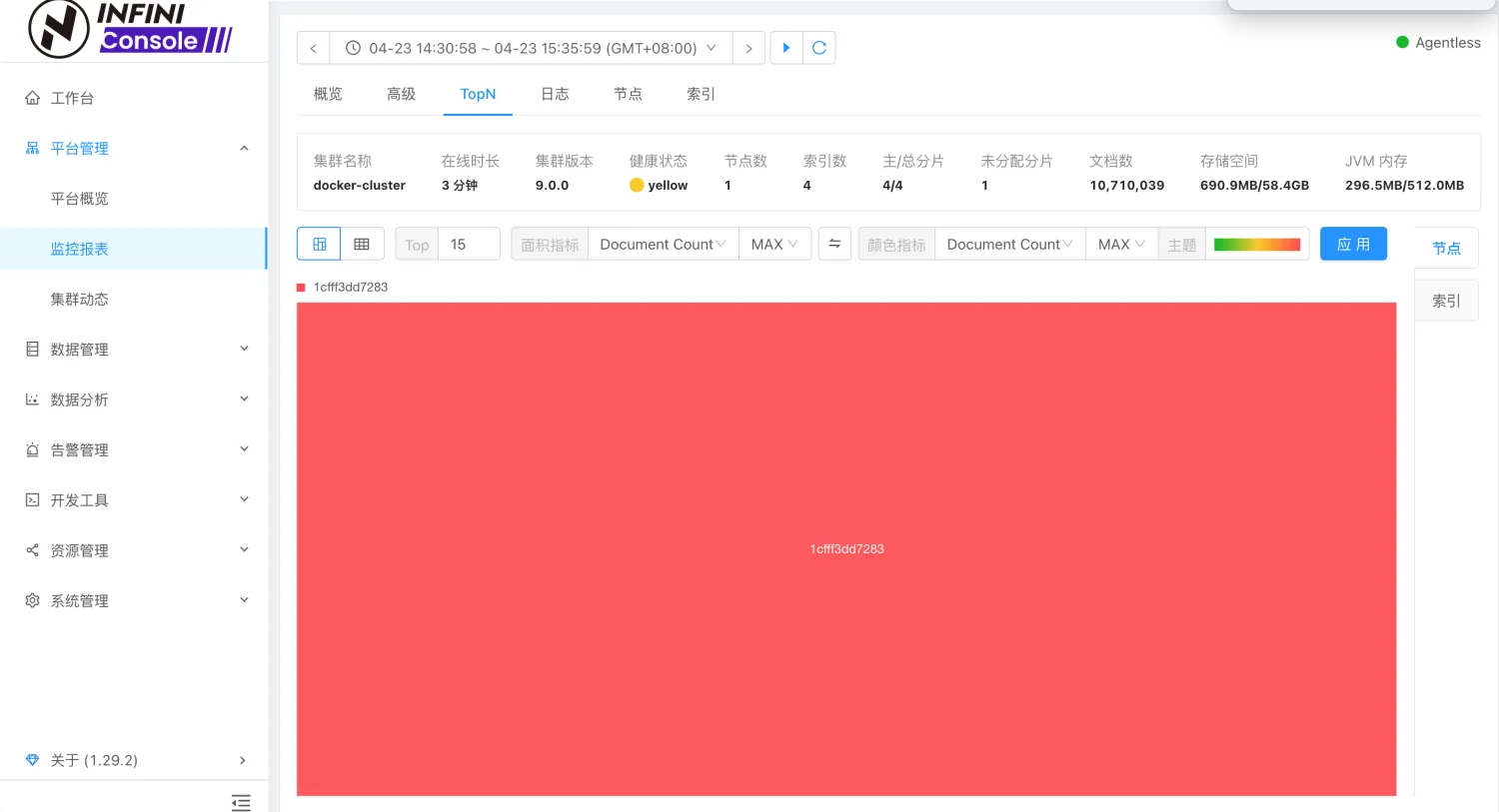

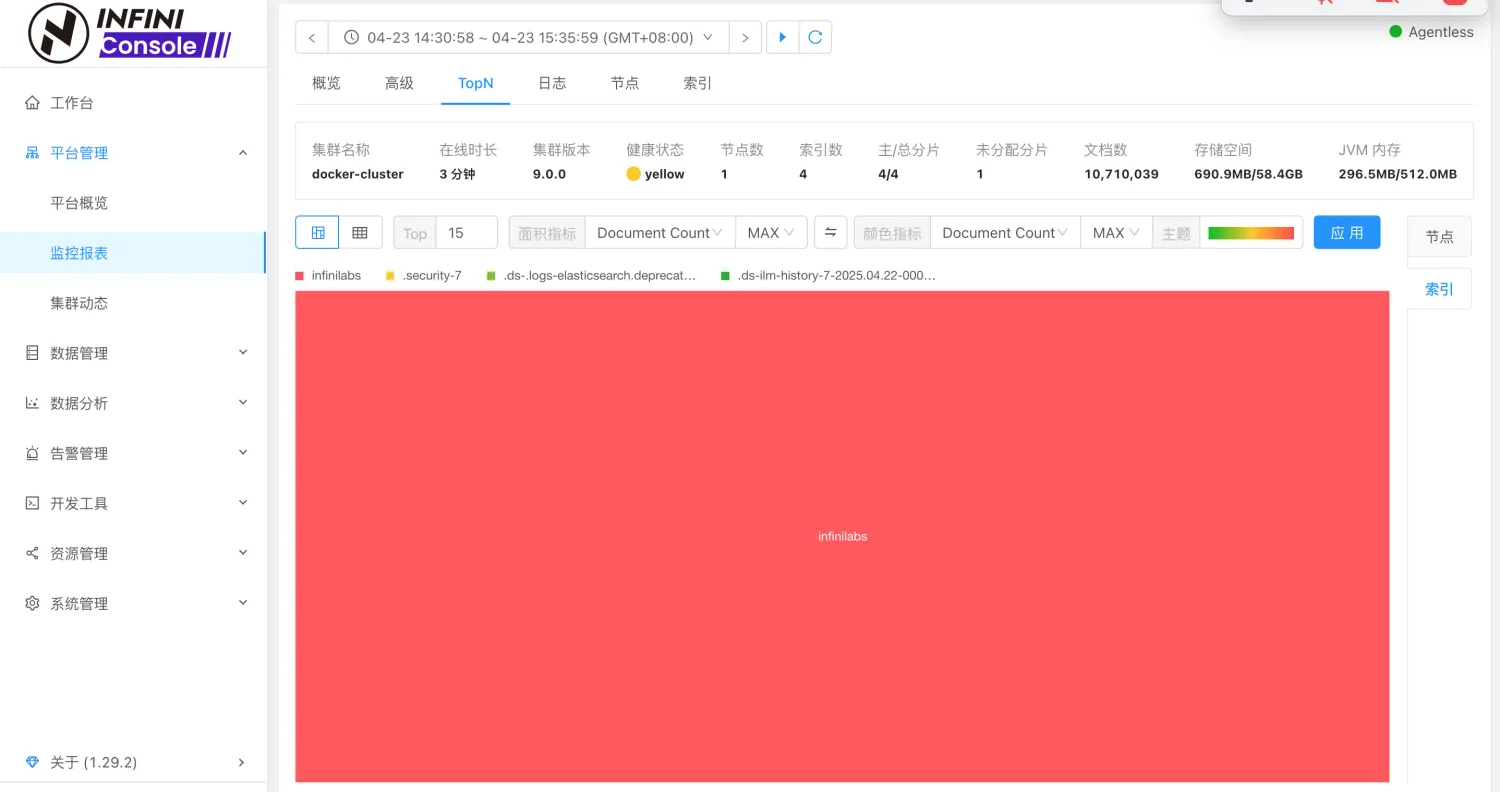

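In the Elasticsearch cluster, we pick the indices to migrate: infinilabs and test1. That's right, several indices can be migrated at once.

- On the Easysearch cluster, create the target indices using the settings and mappings of the source indices. (Details omitted.)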
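The original skips this step. Here is a minimal sketch of one way to do it. It assumes you fetch the source definition with `GET /<index>` (for example `GET /infinilabs` on the Elasticsearch cluster). That response includes settings the source cluster generated for itself, such as `index.uuid`, `index.creation_date`, `index.version` and `index.provided_name`. These are commonly stripped because the target cluster either rejects them or assigns its own.

The helper name `build_create_body` is hypothetical. Depending on the Easysearch version, other version-specific settings (for example data-tier allocation preferences) may also need removing.

```python
import copy
from typing import Any, Dict

# Settings generated by the source cluster; commonly stripped before re-creating
# the index elsewhere (assumption: extend this list for your versions)
GENERATED_INDEX_SETTINGS = ("uuid", "creation_date", "version", "provided_name")


def build_create_body(get_index_response: Dict[str, Any], index: str) -> Dict[str, Any]:
    """Turn a GET /<index> response into a body for PUT /<index> on the target."""
    source = get_index_response[index]
    # Deep-copy so the original response is left untouched
    index_settings = copy.deepcopy(source.get("settings", {}).get("index", {}))
    for key in GENERATED_INDEX_SETTINGS:
        index_settings.pop(key, None)
    return {
        "settings": {"index": index_settings},
        "mappings": copy.deepcopy(source.get("mappings", {})),
    }
```

Serialize the returned dict with `json.dumps` and send it as the body of `PUT /infinilabs` on the Easysearch cluster, then repeat for `test1`.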

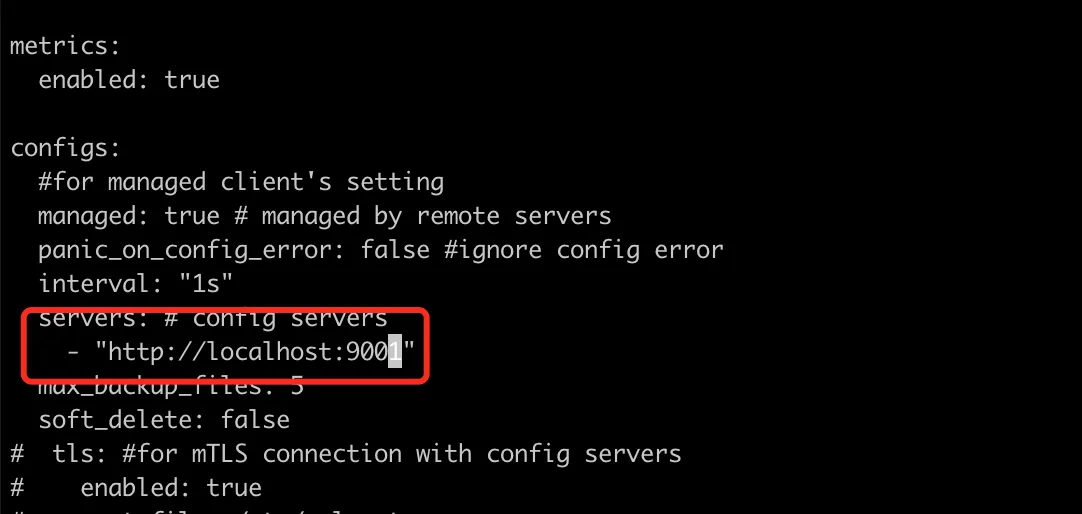

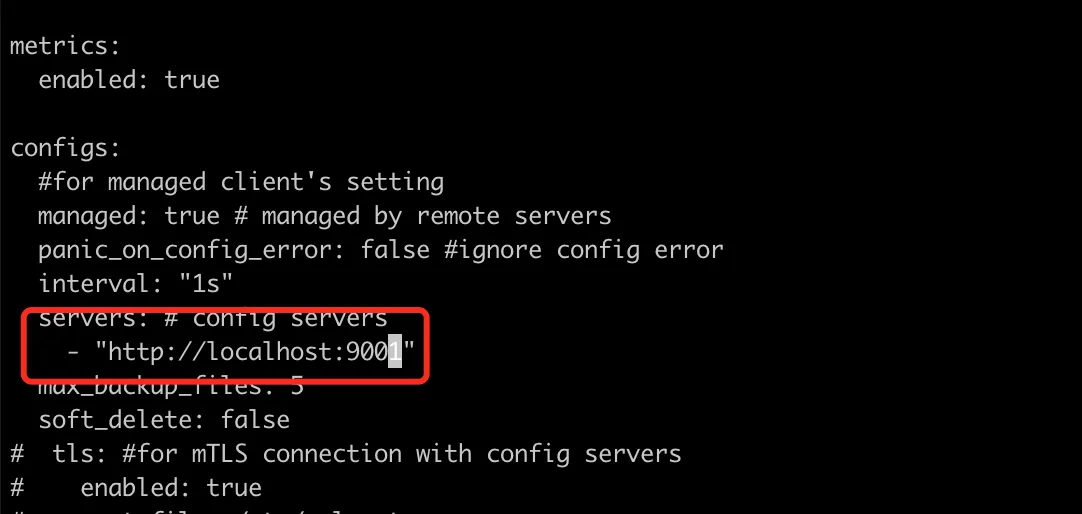

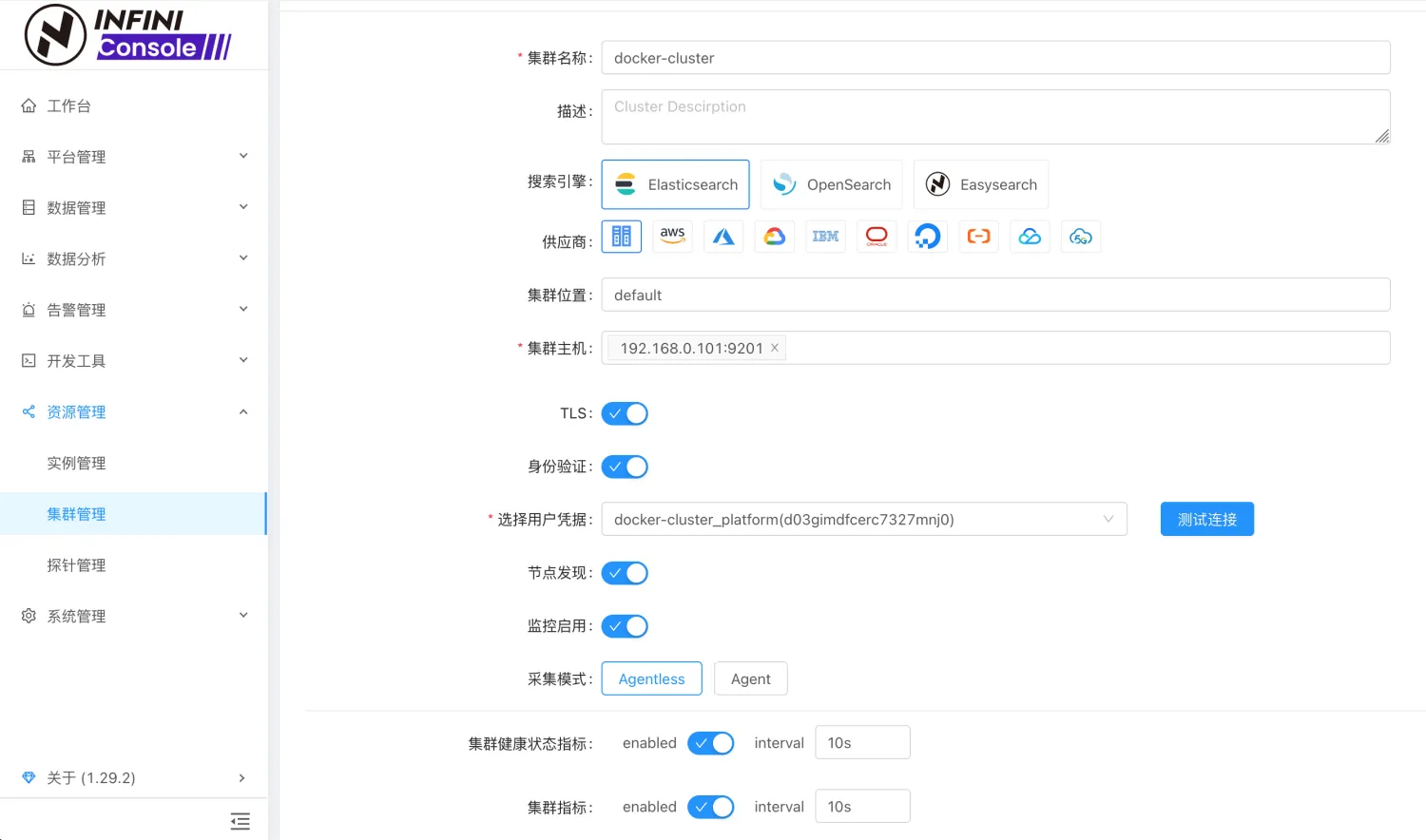

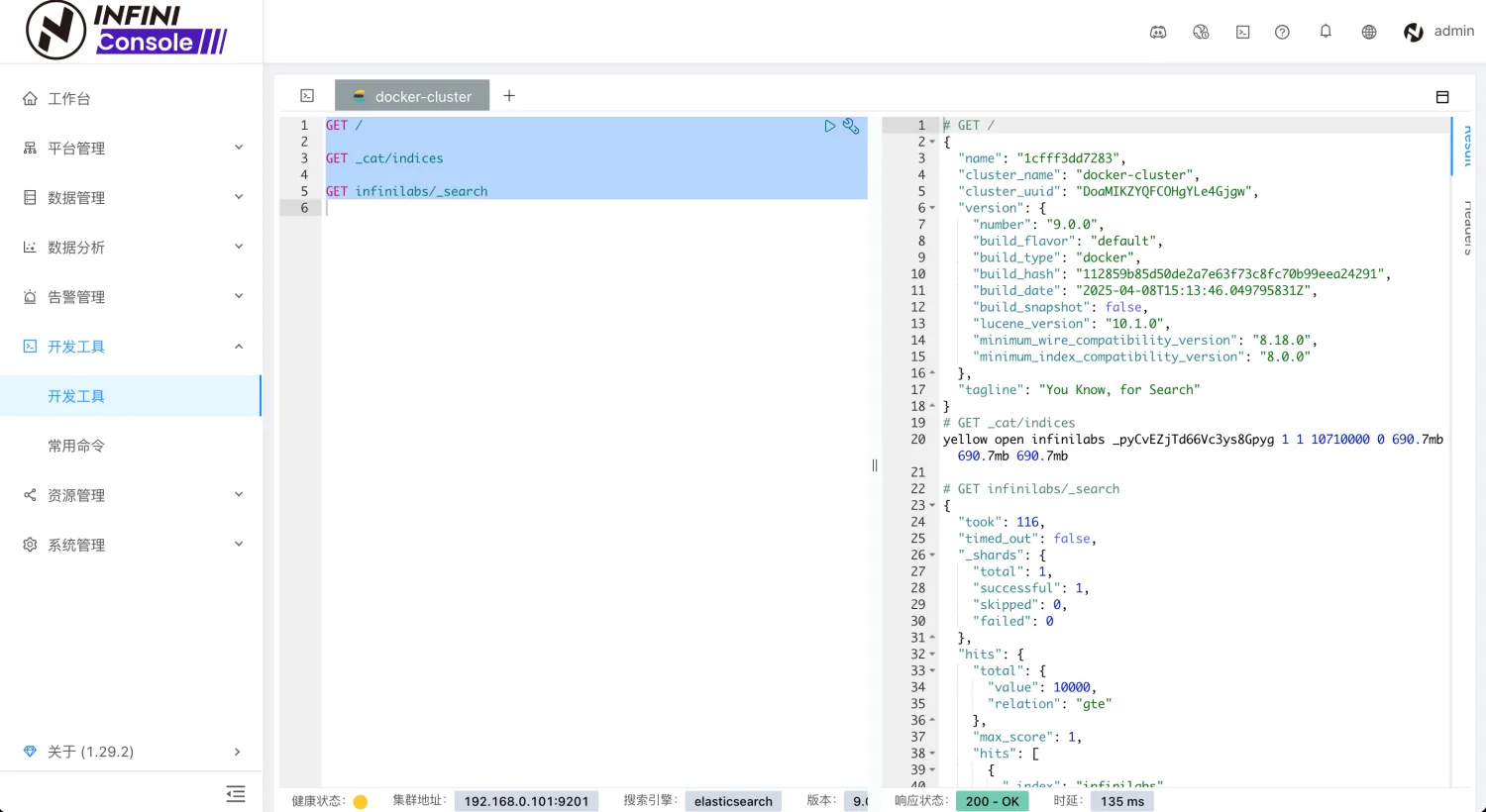

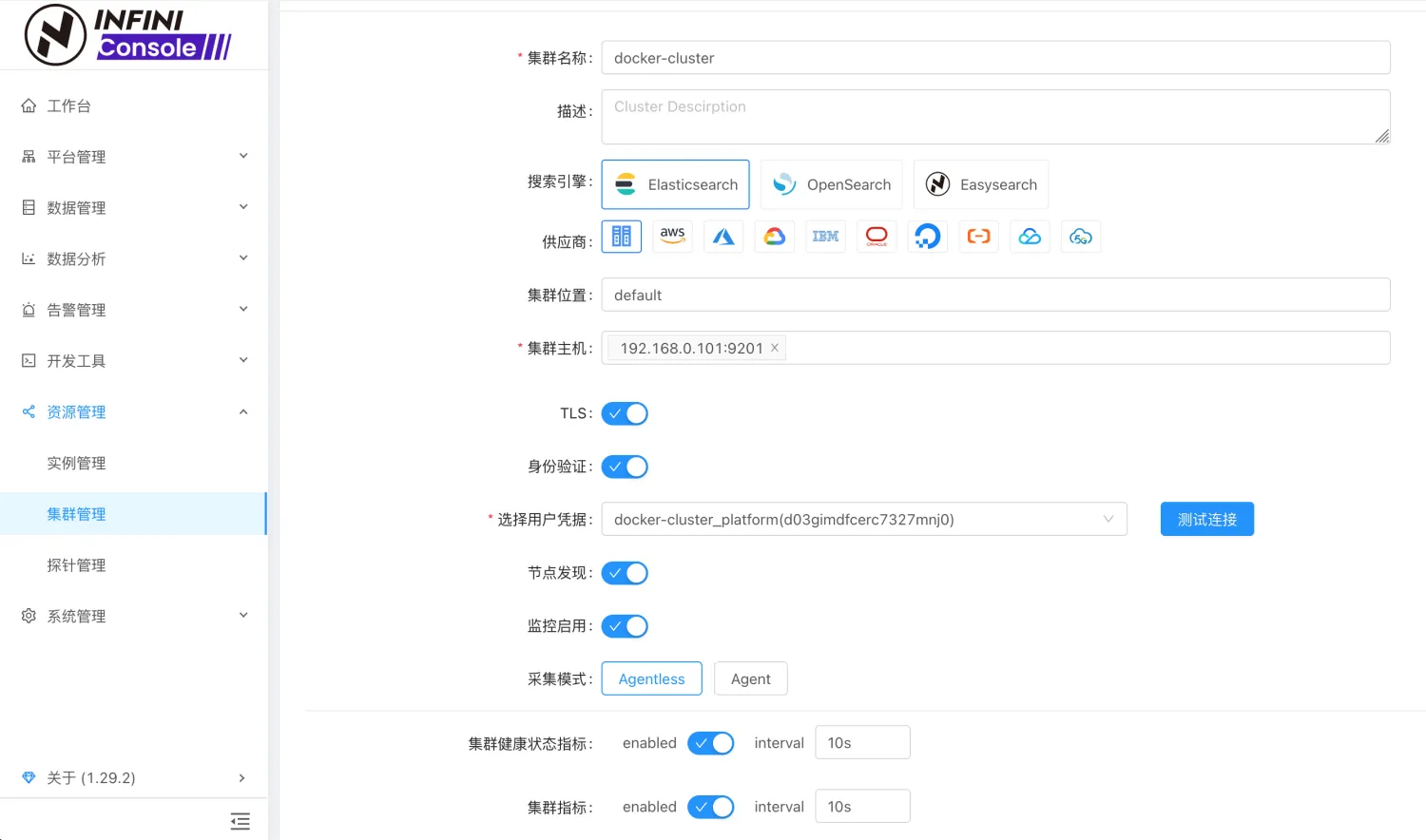

- INFINI Gateway 迁移配置准备

去 github 下载配置,修改下面的连接集群的部分

1 env:

2 LR_GATEWAY_API_HOST: 127.0.0.1:2900

3 SRC_ELASTICSEARCH_ENDPOINT: http://127.0.0.1:9200

4 DST_ELASTICSEARCH_ENDPOINT: http://127.0.0.1:9201

5 path.data: data

6 path.logs: log

7 progress_bar.enabled: true

8 configs.auto_reload: true

9

10 api:

11 enabled: true

12 network:

13 binding: $[[env.LR_GATEWAY_API_HOST]]

14

15 elasticsearch:

16 - name: source

17 enabled: true

18 endpoint: $[[env.SRC_ELASTICSEARCH_ENDPOINT]]

19 basic_auth:

20 username: elastic

21 password: goodgoodstudy

22

23 - name: target

24 enabled: true

25 endpoint: $[[env.DST_ELASTICSEARCH_ENDPOINT]]

26 basic_auth:

27 username: admin

28 password: 14da41c79ad2d744b90cpipeline 部分修改要迁移的索引名称,我们迁移 infinilabs 和 test1 两个索引。

31 pipeline:

32 - name: source_scroll

33 auto_start: true

34 keep_running: false

35 processor:

36 - es_scroll:

37 slice_size: 1

38 batch_size: 5000

39 indices: "infinilabs,test1"

40 elasticsearch: source

41 output_queue: source_index_dump

42 partition_size: 1

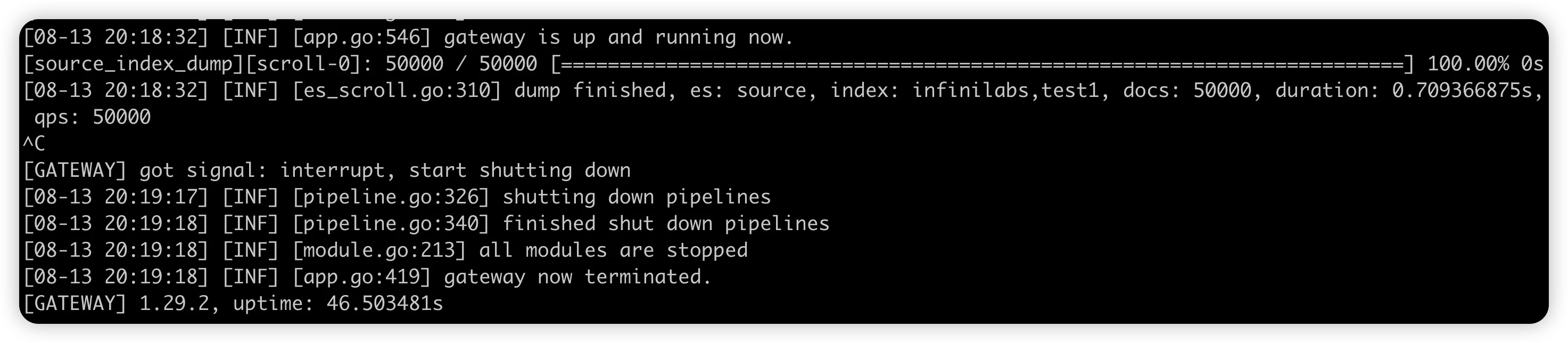

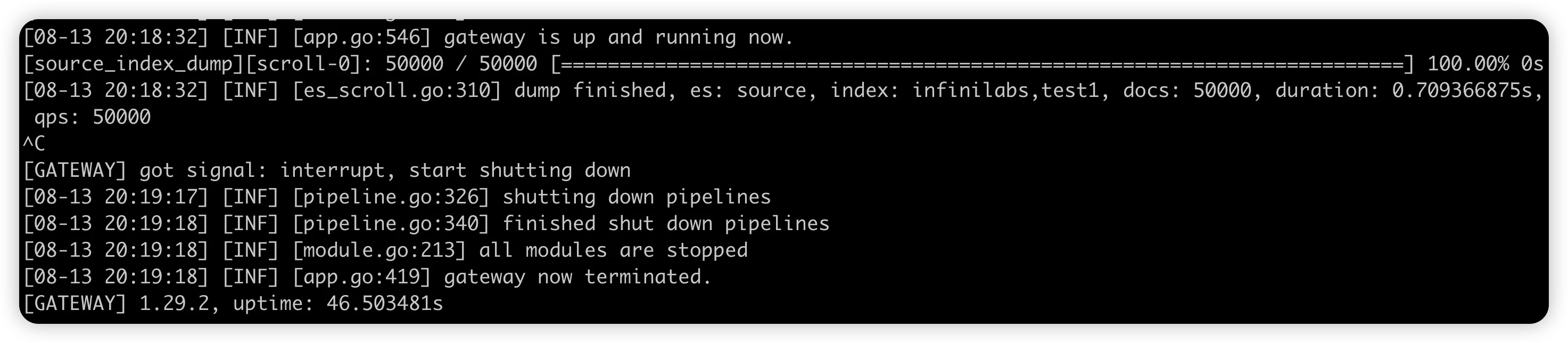

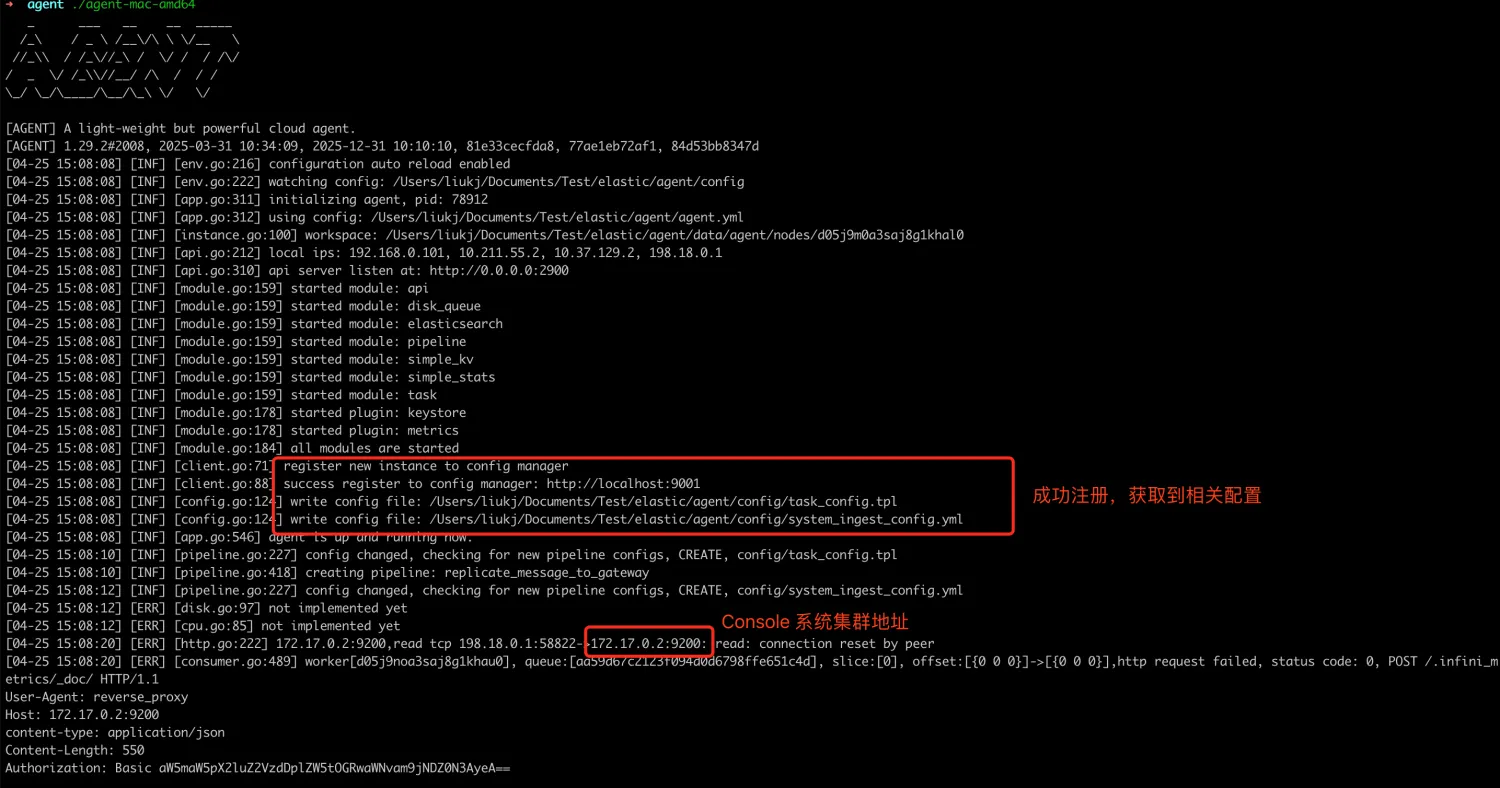

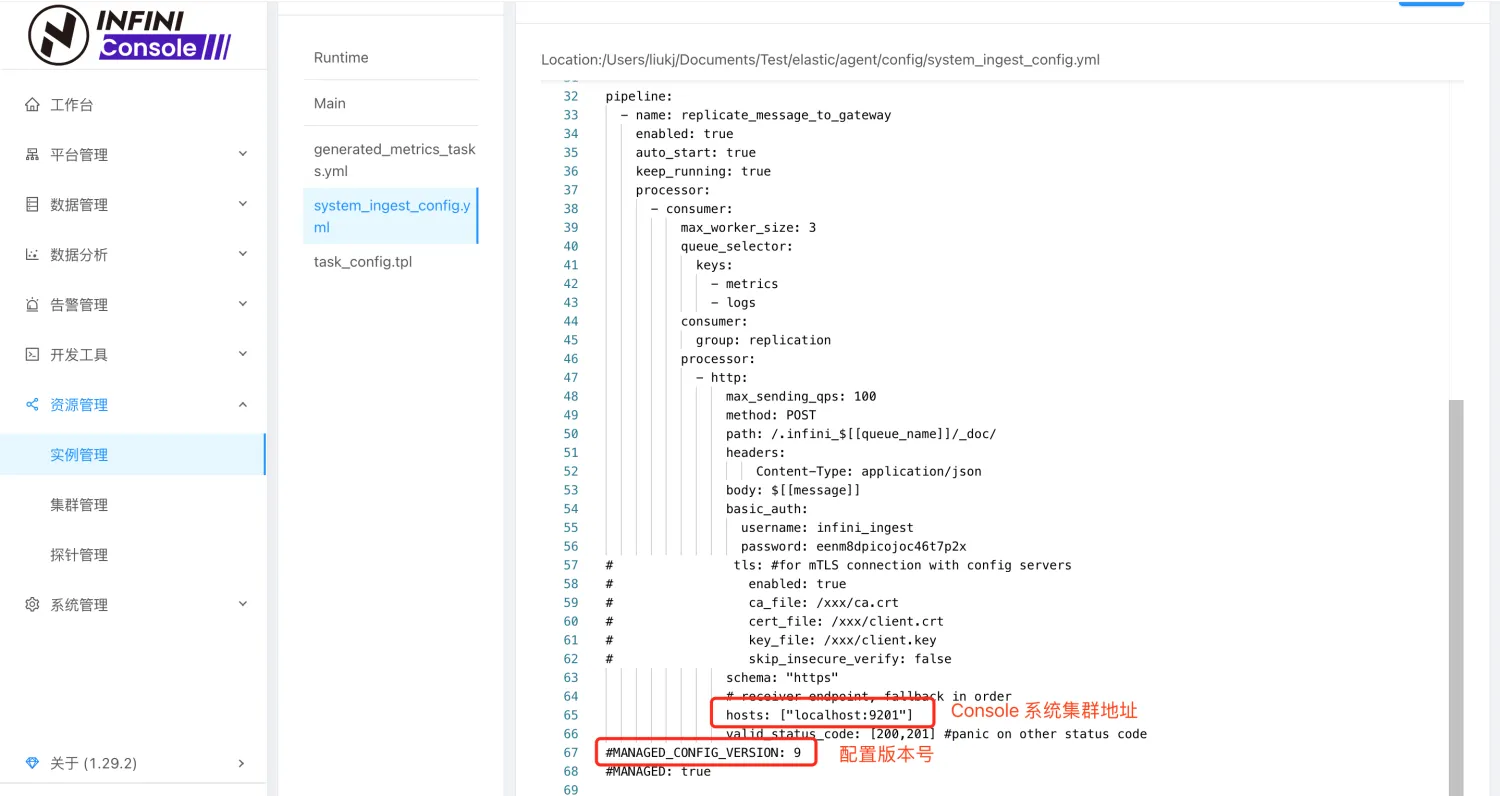

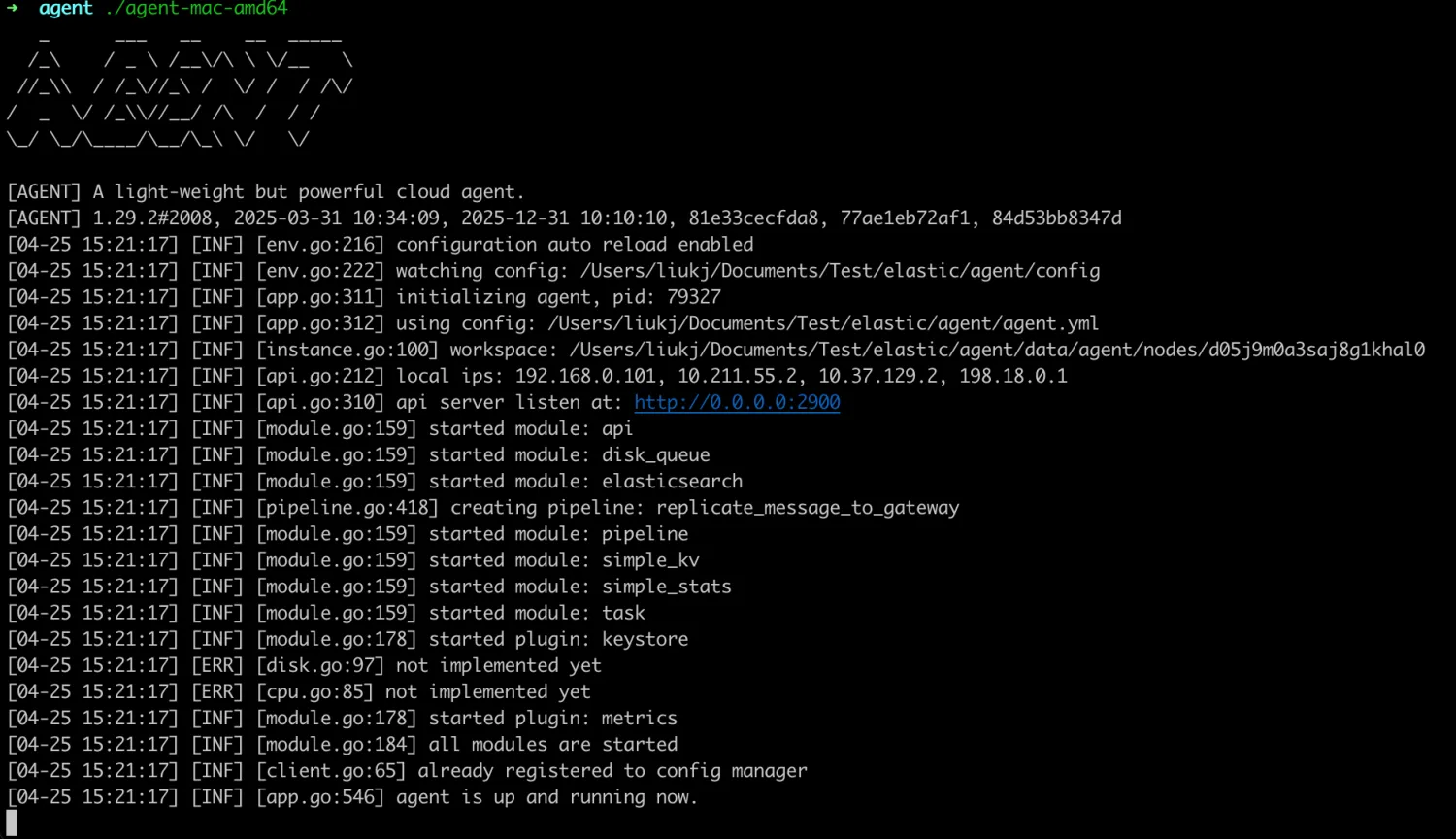

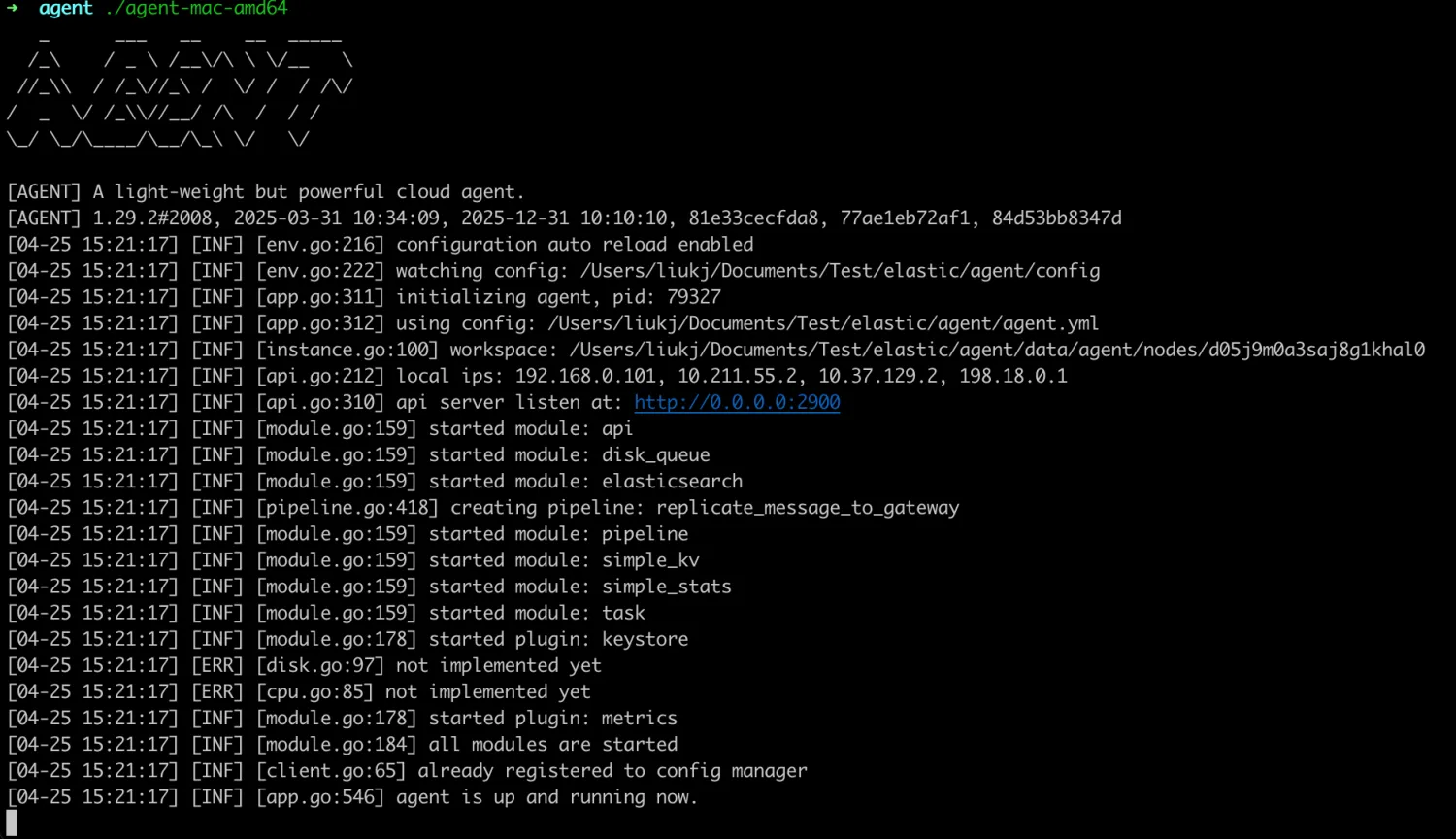

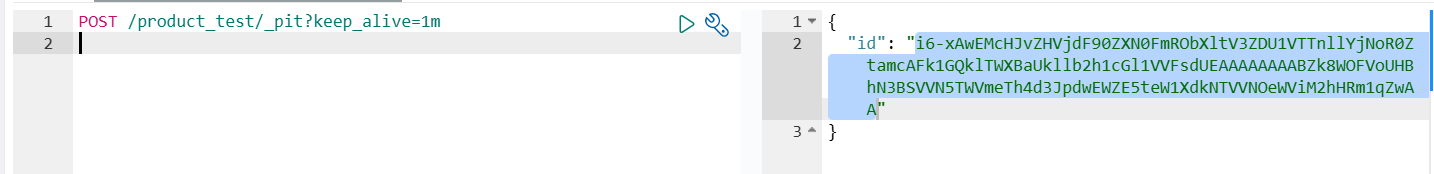

43 scroll_time: "5m"- 迁移数据

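```shell
./gateway-mac-arm64
# If your config file is not named gateway.yml, pass it with the -config parameter:
# ./gateway-mac-arm64 -config <your-config-file>
```

When the data import has finished, stop the gateway with Ctrl+C.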
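The `es_scroll` processor in the migration config reads the source indices through Elasticsearch's scroll API. Judging by the option names, `batch_size` presumably sets the page size of each scroll request, and `slice_size` the number of parallel sliced scrolls. That mapping is an assumption, not something taken from the gateway documentation.

The sketch below uses a hypothetical helper, `sliced_scroll_bodies`. It only builds the search bodies that such a sliced scroll would send.

```python
from typing import Any, Dict, List


def sliced_scroll_bodies(slice_size: int, batch_size: int) -> List[Dict[str, Any]]:
    """Build one search body per slice for POST /<indices>/_search?scroll=<time>."""
    if slice_size < 1:
        raise ValueError("slice_size must be >= 1")
    # Sorting by _doc is the cheapest order when relevance does not matter
    base = {"size": batch_size, "sort": ["_doc"], "query": {"match_all": {}}}
    if slice_size == 1:
        # Elasticsearch rejects slice.max <= 1, so a single slice is a plain scroll
        return [dict(base)]
    return [dict(base, slice={"id": i, "max": slice_size}) for i in range(slice_size)]
```

Each body would be sent as `POST /infinilabs,test1/_search?scroll=5m`. After that, `POST /_search/scroll` calls with the returned `_scroll_id` fetch further pages until a page comes back empty.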

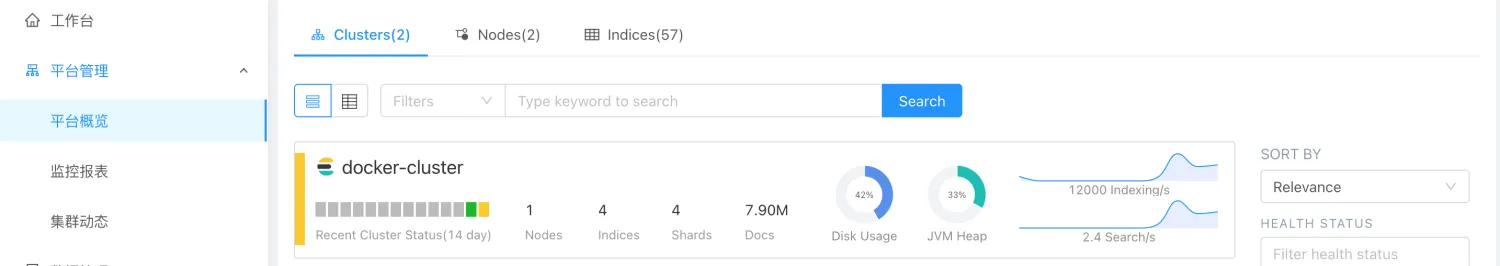

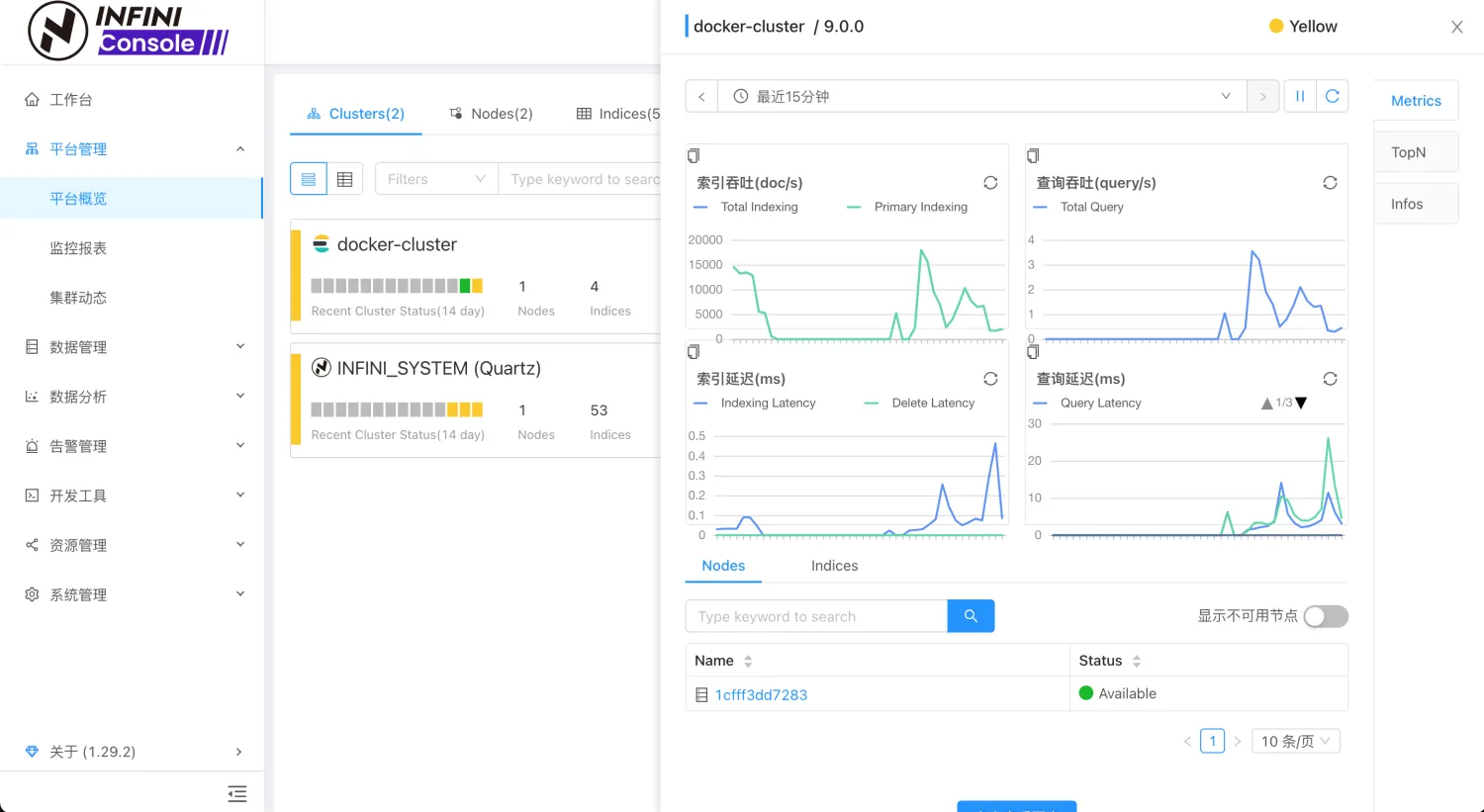

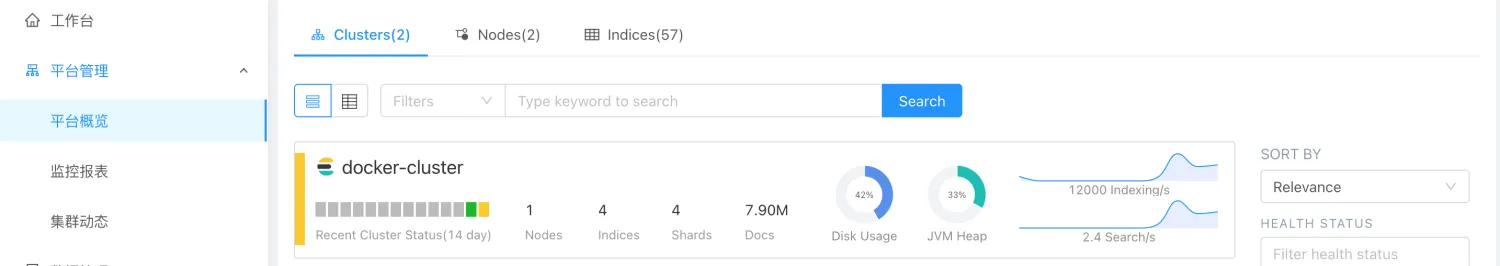

That completes the data migration. In the next post we'll cover INFINI Gateway's data comparison feature.
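Until then, a quick sanity check is to compare per-index document counts on both clusters with the `_count` API. Below is a minimal sketch using only the Python standard library. `fetch_count` and `count_mismatches` are hypothetical helpers.

Matching counts do not prove that the documents themselves are identical. Refresh the target index first (`POST /<index>/_refresh`) so that recently written documents are included.

```python
import base64
import json
import urllib.request
from typing import Dict, Optional, Tuple


def fetch_count(endpoint: str, index: str, auth: Optional[Tuple[str, str]] = None) -> int:
    """Return the document count of an index via GET /<index>/_count."""
    req = urllib.request.Request(f"{endpoint}/{index}/_count")
    if auth:
        # HTTP basic auth header built by hand to stay within the standard library
        token = base64.b64encode(f"{auth[0]}:{auth[1]}".encode()).decode()
        req.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)["count"]


def count_mismatches(src: Dict[str, int], dst: Dict[str, int]) -> Dict[str, Tuple[int, Optional[int]]]:
    """Indices whose target count differs from the source (None = missing on target)."""
    return {idx: (n, dst.get(idx)) for idx, n in src.items() if dst.get(idx) != n}
```

To use it:

1. Build `src` by calling `fetch_count("http://127.0.0.1:9200", name, ("elastic", "goodgoodstudy"))` for `infinilabs` and `test1`.
2. Build `dst` the same way against `http://127.0.0.1:9201` with the target credentials.
3. Print `count_mismatches(src, dst)`. An empty dict means the counts agree.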

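If you have any questions, feel free to contact me on WeChat.

About INFINI Gateway

INFINI Gateway is an open source, high-performance data gateway for search scenarios: all requests pass through the gateway before being forwarded to the backend search clusters. With INFINI Gateway you can implement index-level rate limiting, caching to accelerate common queries, query auditing, dynamic modification of query results, and more.

Documentation: https://docs.infinilabs.com/gateway

Source code: https://github.com/infinilabs/gateway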


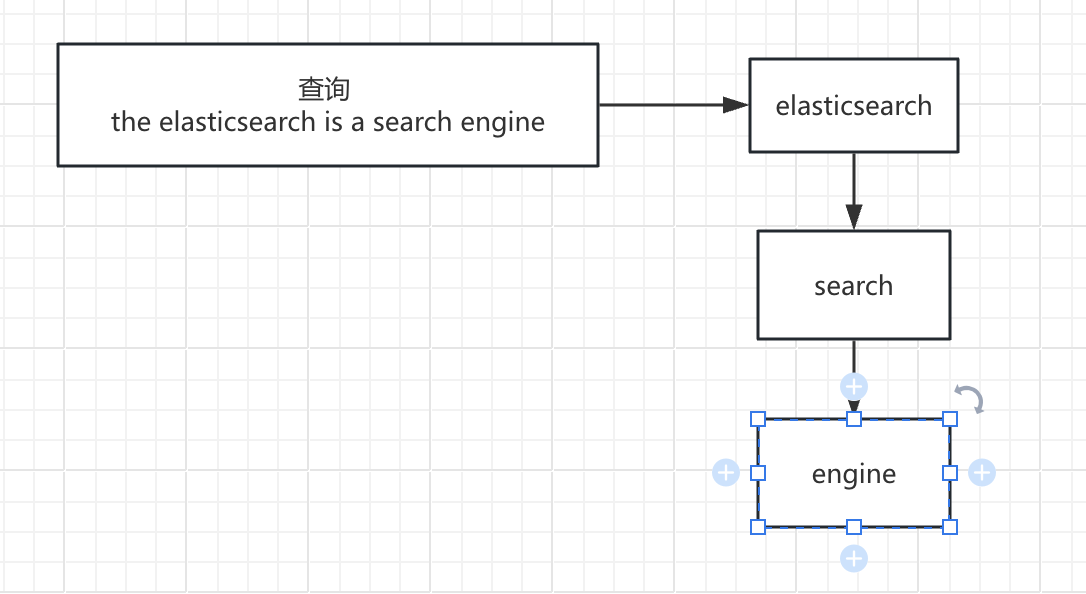

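Search Wiki (3): Elasticsearch, the Search World's Superstar

Hi everyone, I'm Shi Yang (石阳) from INFINI Labs.

Welcome to the "Search Wiki" (《搜索百科》) column! In five minutes a day, get a quick overview of a search-related technology or product. We also explore the technical principles, history, and hands-on experience behind it.

In the previous two posts we covered Apache Lucene, the bedrock of search technology, and Apache Solr, an enterprise search solution. Today we turn to a product that truly changed the rules of the search game yet remains controversial: Elasticsearch.

Introduction

If Lucene is the unsung hero behind the scenes, Elasticsearch is the star at center stage. With its REST API, distributed architecture, and rich ecosystem, it turned search plus analytics into a ready-to-use service.

In log platforms, observability, security monitoring, and AI and semantic retrieval, Elasticsearch has become almost the default choice.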

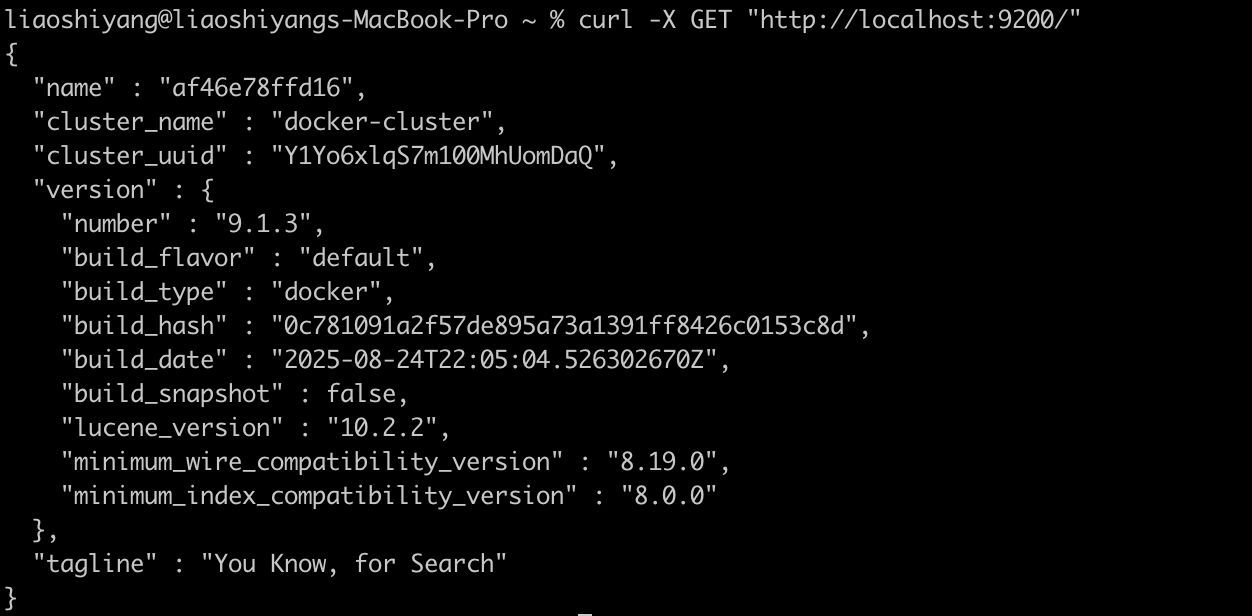

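Elasticsearch overview

Elasticsearch is an open source, distributed search and analytics engine built on Apache Lucene. As a retrieval platform, it:

- stores structured, unstructured, and vector data in real time
- offers fast hybrid and vector search
- supports observability and security analytics
- powers AI-driven applications with high performance, accuracy, and relevance

- First release: February 2010

- Latest version: 9.1.3 (as of September 2025)

- Core dependency: Apache Lucene

- License: AGPL v3 (offered alongside SSPL and the Elastic License 2.0)

- Official site: https://www.elastic.co/elasticsearch/

- GitHub repository: https://github.com/elastic/elasticsearch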

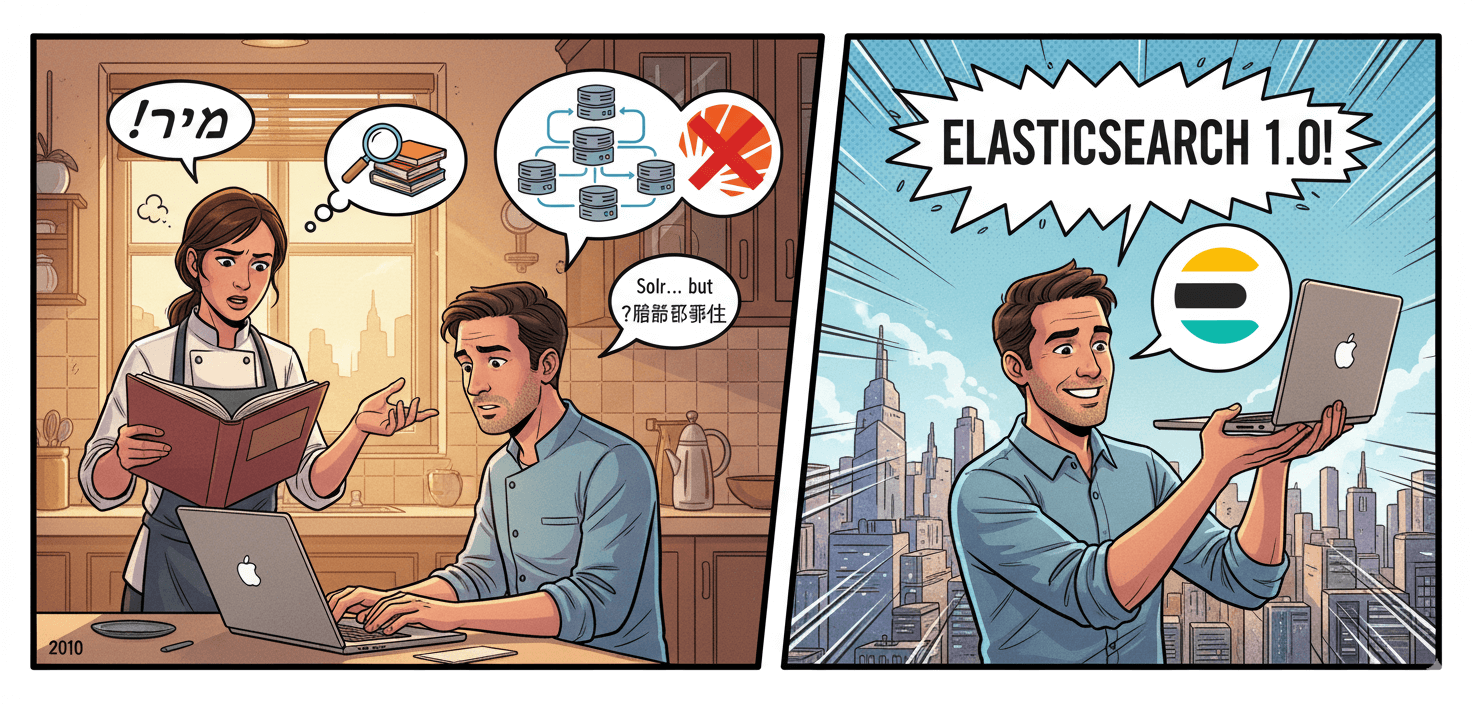

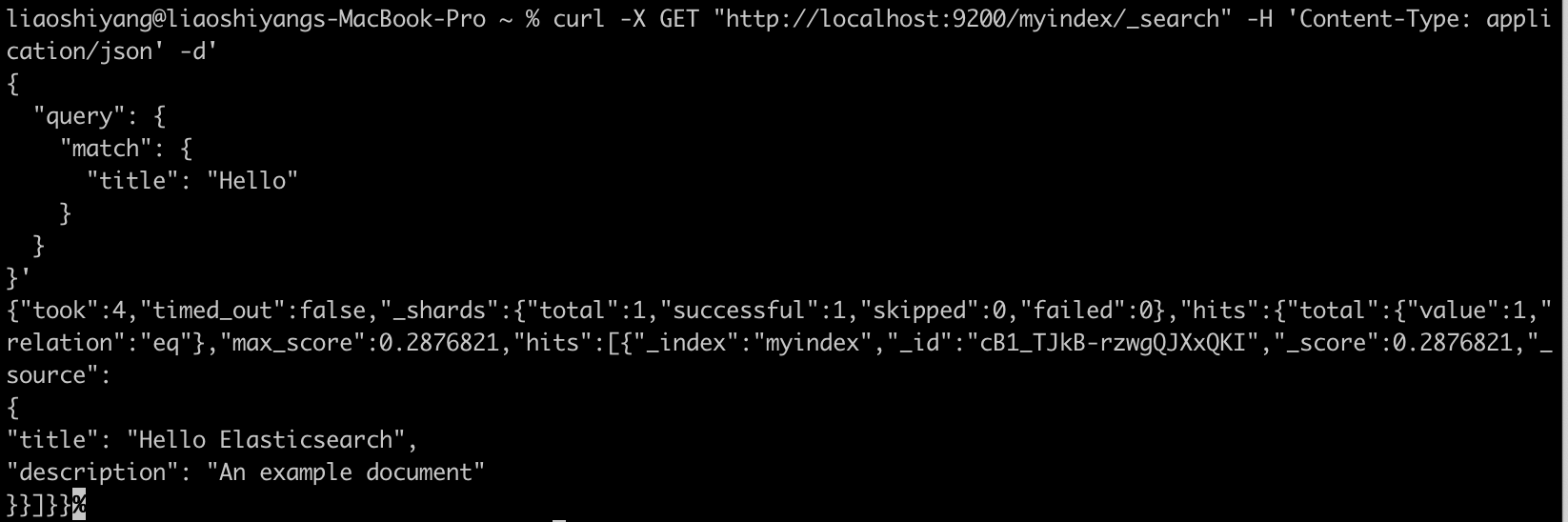

Origins: from recipe search to global superstar

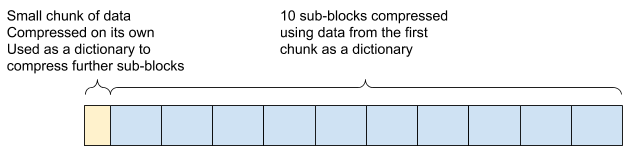

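The Elasticsearch story begins with Israeli developer Shay Banon. His wife, then taking a cooking course, needed a tool to search her recipes quickly. Although search solutions such as Solr already existed, Shay felt their support for distributed scenarios was lacking.

Drawing on his earlier experience building Compass (a Lucene-based search library), Shay began building a fully distributed, JSON-based search engine. The first version of Elasticsearch was released in February 2010.

As users multiplied and enterprise demand grew, Shay founded the company Elastic in 2012. Elasticsearch stayed an open source project but was also run as a commercial business, with hosted services, enterprise support, Logstash for log processing, and Kibana for visualization. Elastic gradually evolved from a pure search engine project into a broader data search and analytics platform.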

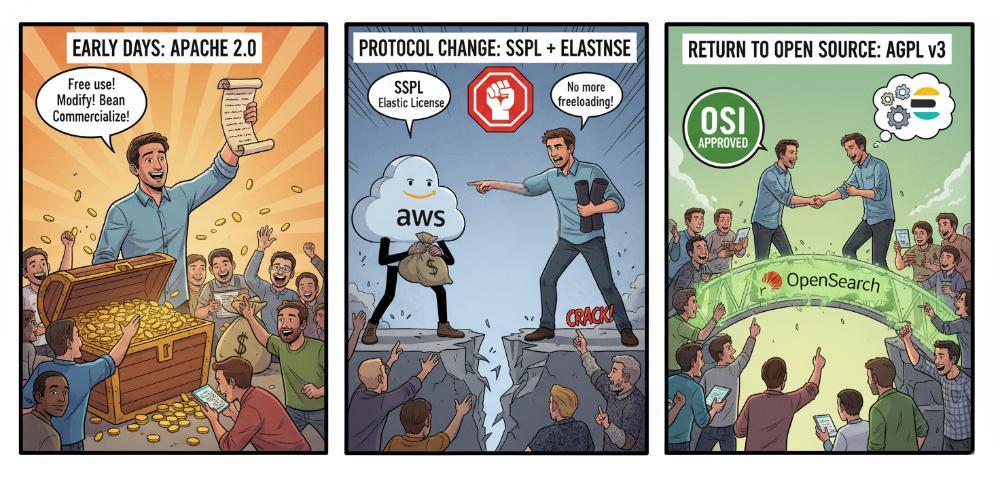

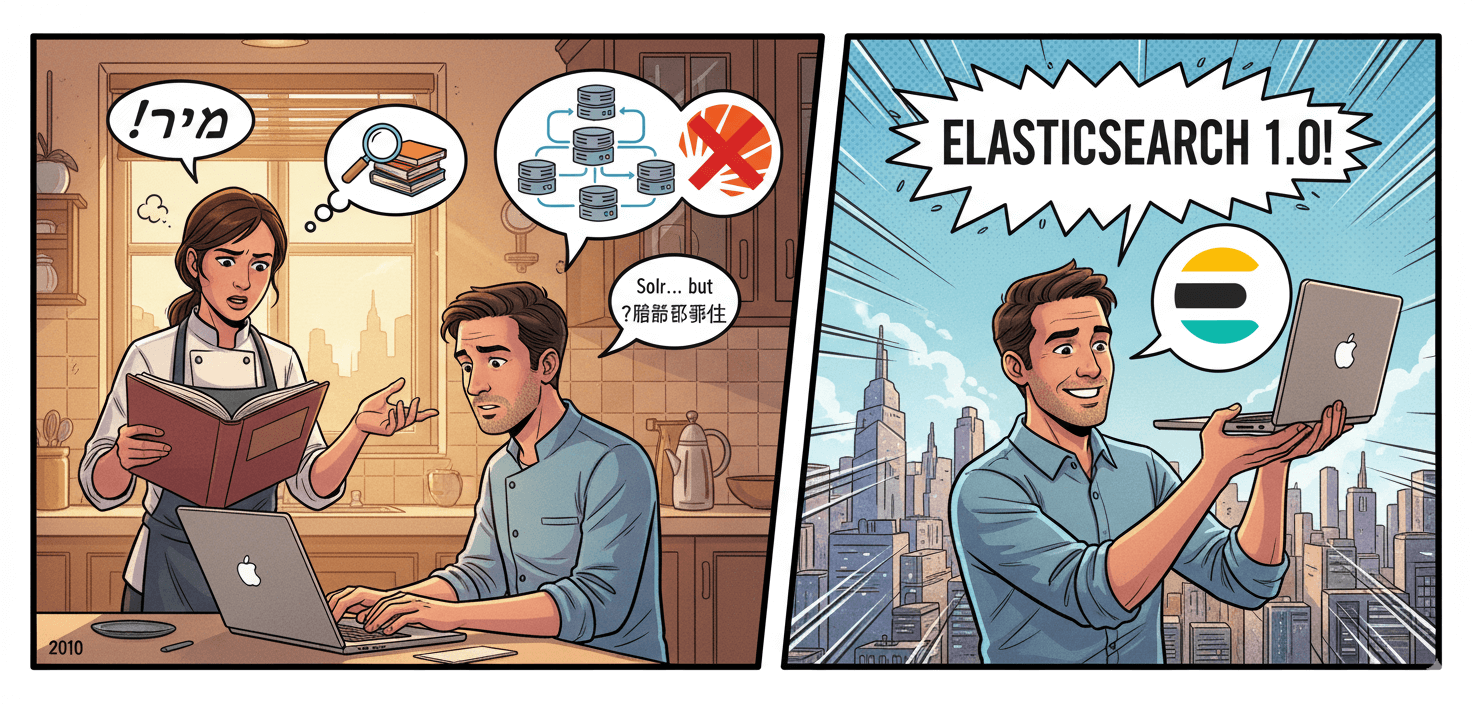

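License changes: the tug-of-war between open source and commercialization

Elasticsearch's path has not always been smooth. The most pivotal event in its history was the conflict with AWS and the license change that followed.

- Early days: the Apache 2.0 license

When Shay Banon open-sourced Elasticsearch in 2010, it used the Apache 2.0 license. This permissive license lets anyone use, modify, and commercialize the software for free, including as SaaS. It helped Elasticsearch grow quickly and become the de facto standard search engine.

- License change: responding to cloud vendors' "free-riding"

As Elasticsearch grew popular, cloud vendors such as AWS (Amazon Web Services) offered it directly as a managed service and profited from it. Elastic felt this hurt its business, since the cloud vendors were "making money from open source without giving back to the community".

In January 2021, Elastic announced that Elasticsearch and Kibana would move from Apache 2.0 to a dual license: SSPL plus the Elastic License. This split the community deeply. AWS led a fork of Elasticsearch called OpenSearch and continues to maintain it under Apache 2.0.

- Back to open source: AGPL v3

In August 2024, Elastic announced that new versions would add AGPL v3 as an additional open source license option. AGPL v3 meets the OSI definition of open source while still constraining cloud vendors from offering closed-source hosted services. By embracing open source again, Elastic hopes to mend community relations, reduce fragmentation, and win developers back.

Elasticsearch's journey from permissive to restrictive and back to open source is a search for balance between the community ecosystem and commercial interests.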

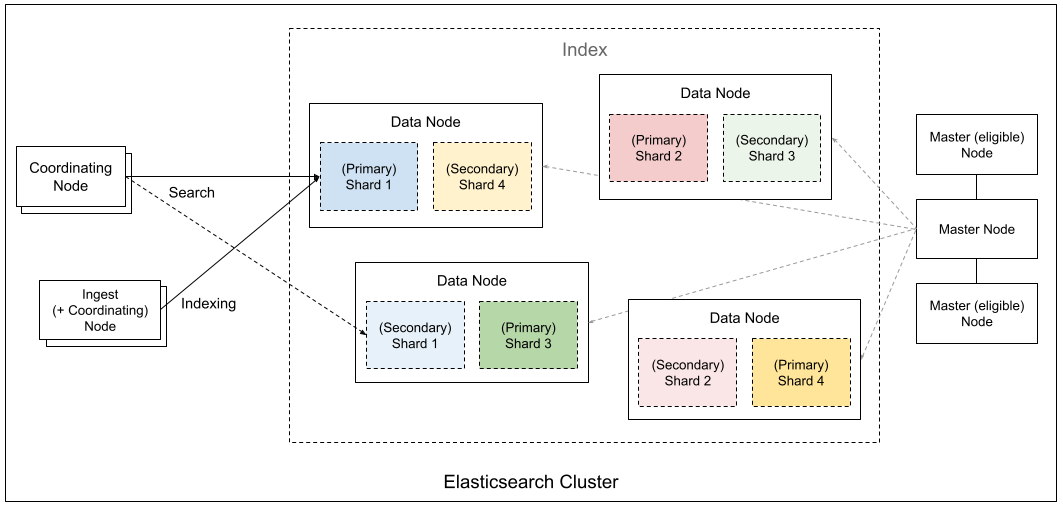

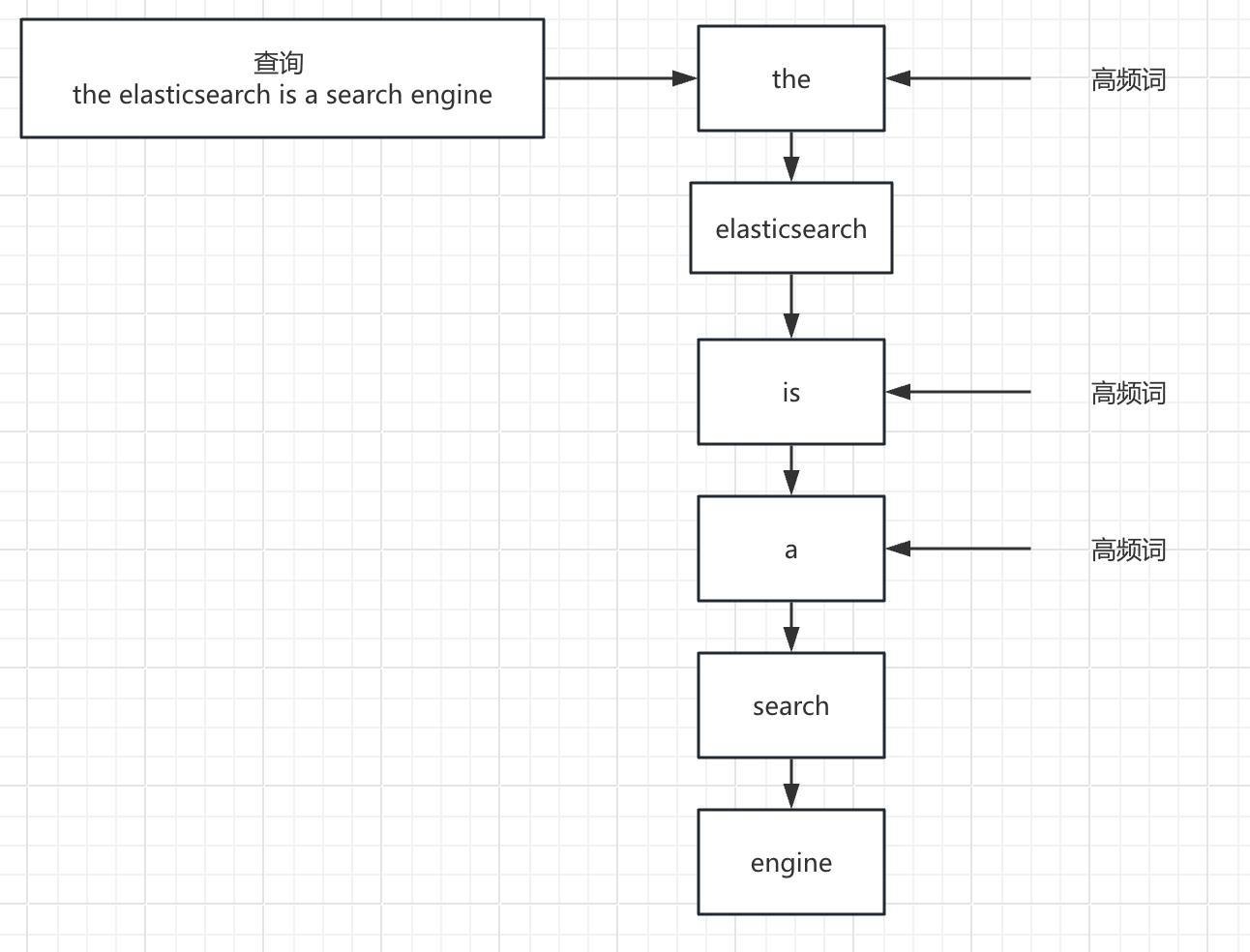

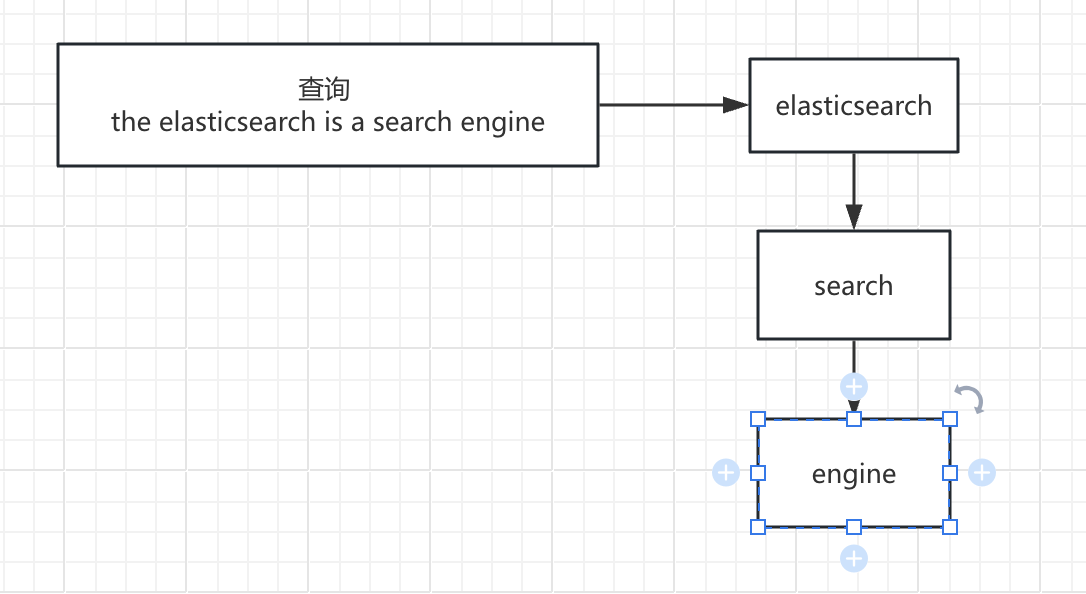

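Core concepts

To learn Elasticsearch, you first need to know its five core concepts: cluster, node, shard, index, and document.

- Cluster

A whole made up of one or more nodes that provides unified search and storage services. From the outside it looks like a single system.

- Node

A single server instance in the cluster. Nodes can take on different roles:

  - Master node: manages the cluster (shard allocation, metadata maintenance).

  - Data node: stores data and handles search and aggregations.

  - Coordinating node: receives requests and dispatches work.

  - Ingest node: preprocesses data before it is written.

- Index

Similar to a "database" in a traditional database system, an index organizes data logically. An index usually corresponds to one business scenario, such as logs or product information.

- Shard

To let an index scale horizontally, Elasticsearch splits it into multiple primary shards. It also creates replica shards for each primary, which improves availability and query performance.

- Document

The smallest unit of data that Elasticsearch stores and retrieves, usually in JSON format. An index is made up of many documents.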
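The shard description above does not say how a document is assigned to a primary shard. The Elasticsearch documentation for the `_routing` field gives the rule. In it:

- `_routing` defaults to the document `_id`.
- `num_routing_shards` comes from the index setting `index.number_of_routing_shards`.
- `hash` is Murmur3 in Elasticsearch's implementation.

$$
\begin{aligned}
\text{routing\_factor} &= \frac{\text{num\_routing\_shards}}{\text{num\_primary\_shards}} \\
\text{shard\_num} &= \left\lfloor \frac{\operatorname{hash}(\_routing) \bmod \text{num\_routing\_shards}}{\text{routing\_factor}} \right\rfloor
\end{aligned}
$$

When `num_routing_shards` equals the number of primary shards, `routing_factor` is 1 and the rule reduces to `hash(_routing) % num_primary_shards`. This is also why the primary shard count cannot simply be changed after an index is created: that takes a split, shrink, or reindex.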

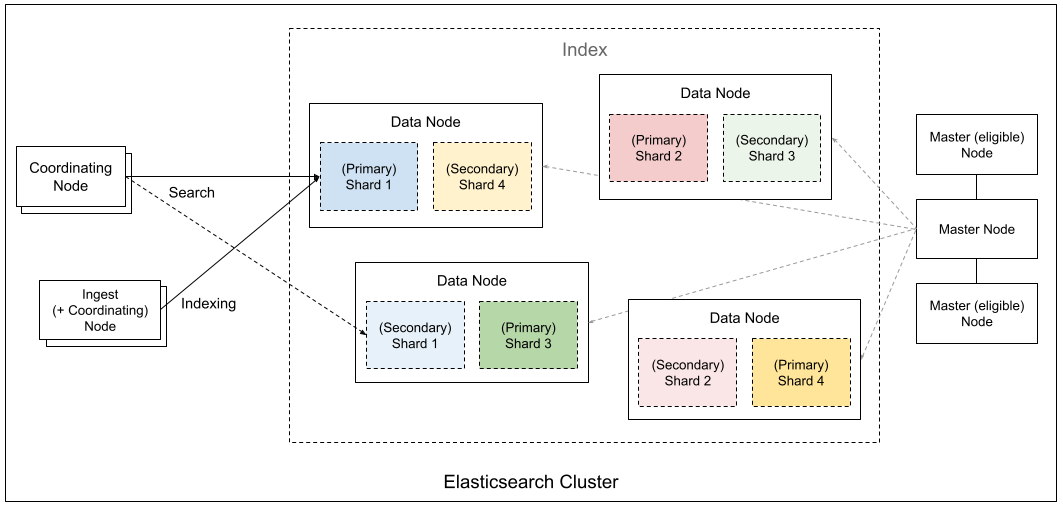

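Cluster architecture

Nodes in different roles (master, data, coordinating, ingest, and so on) cooperate to split data into shards and store them in a distributed fashion. This gives Elasticsearch a highly available, scalable search and analytics architecture.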
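To check which roles the nodes of a running cluster actually have, use `GET _cat/nodes?v&h=name,node.role,master`. It prints each node's roles as single-letter codes, for example:

- `m` for master-eligible
- `d` for data
- `i` for ingest
- `-` for a node with no roles, which only coordinates requests

Below is a minimal sketch that decodes that output, with `parse_cat_nodes` as a hypothetical helper. The code table is a partial, hand-picked subset, and node names are assumed to contain no spaces.

```python
from typing import Dict, List

# Partial subset of the single-letter role codes printed by _cat/nodes
ROLE_CODES = {
    "m": "master", "d": "data", "i": "ingest", "h": "data_hot",
    "w": "data_warm", "c": "data_cold", "l": "ml", "r": "remote_cluster_client",
}


def parse_cat_nodes(text: str) -> Dict[str, List[str]]:
    """Map node name -> role names from `_cat/nodes?v&h=name,node.role,master` output."""
    rows = [line.split() for line in text.strip().splitlines()]
    header, rows = rows[0], rows[1:]
    name_col, role_col = header.index("name"), header.index("node.role")
    result: Dict[str, List[str]] = {}
    for row in rows:
        codes = row[role_col]
        # "-" means no roles at all: the node only coordinates requests
        if codes == "-":
            result[row[name_col]] = ["coordinating_only"]
        else:
            # Unknown letters are kept as-is rather than guessed
            result[row[name_col]] = [ROLE_CODES.get(c, c) for c in codes]
    return result
```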

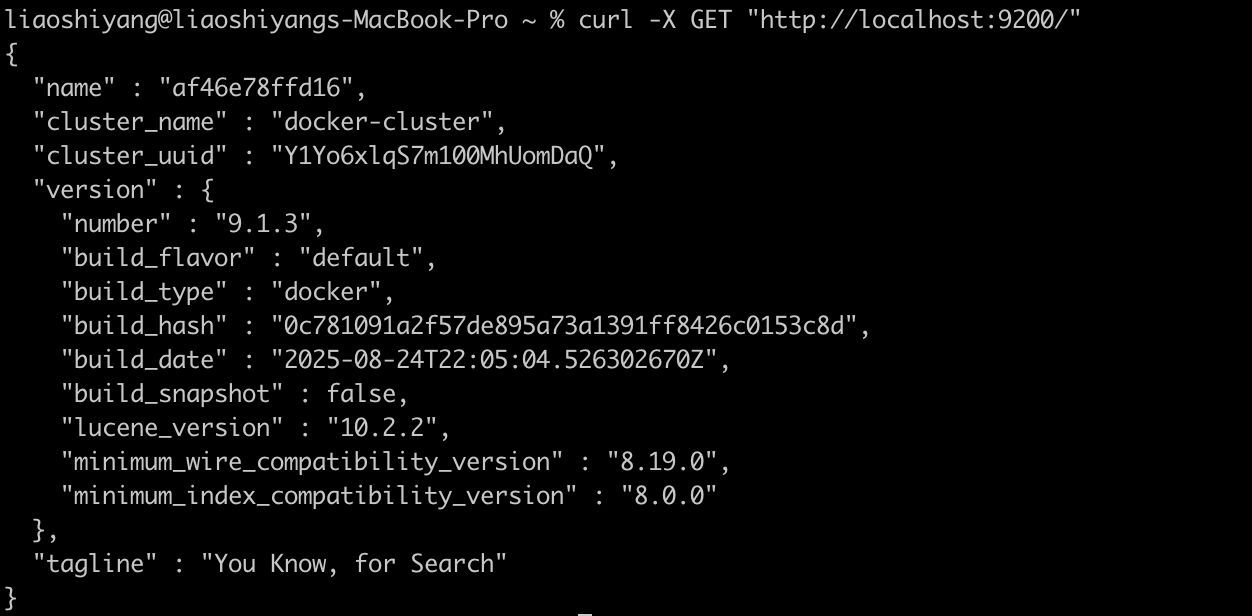

Quick start: try Elasticsearch in 5 minutes

1. Start with Docker

```shell
# Pull the image (9.1.3 was the latest release at the time of writing)
docker pull docker.elastic.co/elasticsearch/elasticsearch:9.1.3

# Start a single-node cluster
docker run -d --name elasticsearch \
  -p 9200:9200 -p 9300:9300 \
  -e "discovery.type=single-node" \
  -e "xpack.security.enabled=false" \
  docker.elastic.co/elasticsearch/elasticsearch:9.1.3
```

2. Verify the installation

```shell
# Check that Elasticsearch responds
curl -X GET "http://localhost:9200/"
```
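`GET /` only shows that the node answers, and a fresh container usually needs some seconds before it does. The cluster status itself is reported by `GET /_cluster/health` as `green`, `yellow` or `red`. A single-node cluster whose indices have replicas typically stays `yellow`, because the replicas have no other node to go to.

Below is a minimal polling sketch using the Python standard library. It assumes security is disabled, as in the `docker run` command above. `wait_for_status` and `status_at_least` are hypothetical helper names.

```python
import json
import time
import urllib.request

# Health statuses ordered from worst to best
_RANK = {"red": 0, "yellow": 1, "green": 2}


def status_at_least(status: str, target: str) -> bool:
    """True if a cluster health status is as good as or better than the target."""
    return _RANK[status] >= _RANK[target]


def wait_for_status(url: str = "http://localhost:9200", target: str = "yellow",
                    timeout_s: float = 120.0, interval_s: float = 2.0) -> str:
    """Poll GET /_cluster/health until the status reaches `target`; return it."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{url}/_cluster/health", timeout=5) as resp:
                status = json.load(resp)["status"]
            if status_at_least(status, target):
                return status
        except OSError:
            pass  # connection refused/reset or timeout: the node is still starting
        time.sleep(interval_s)
    raise TimeoutError(f"cluster did not reach {target} within {timeout_s}s")
```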

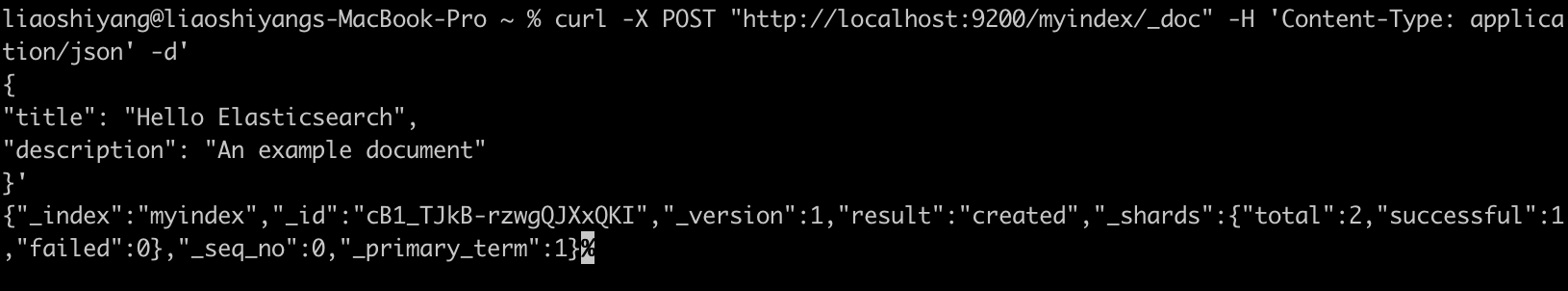

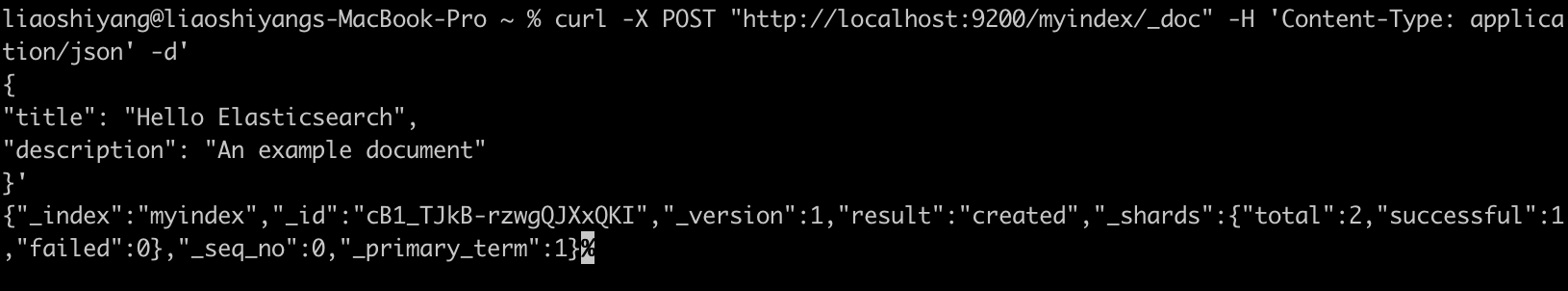

3. Index a document

```shell
# Index a document
curl -X POST "http://localhost:9200/myindex/_doc" -H 'Content-Type: application/json' -d'
{
  "title": "Hello Elasticsearch",
  "description": "An example document"
}'
```

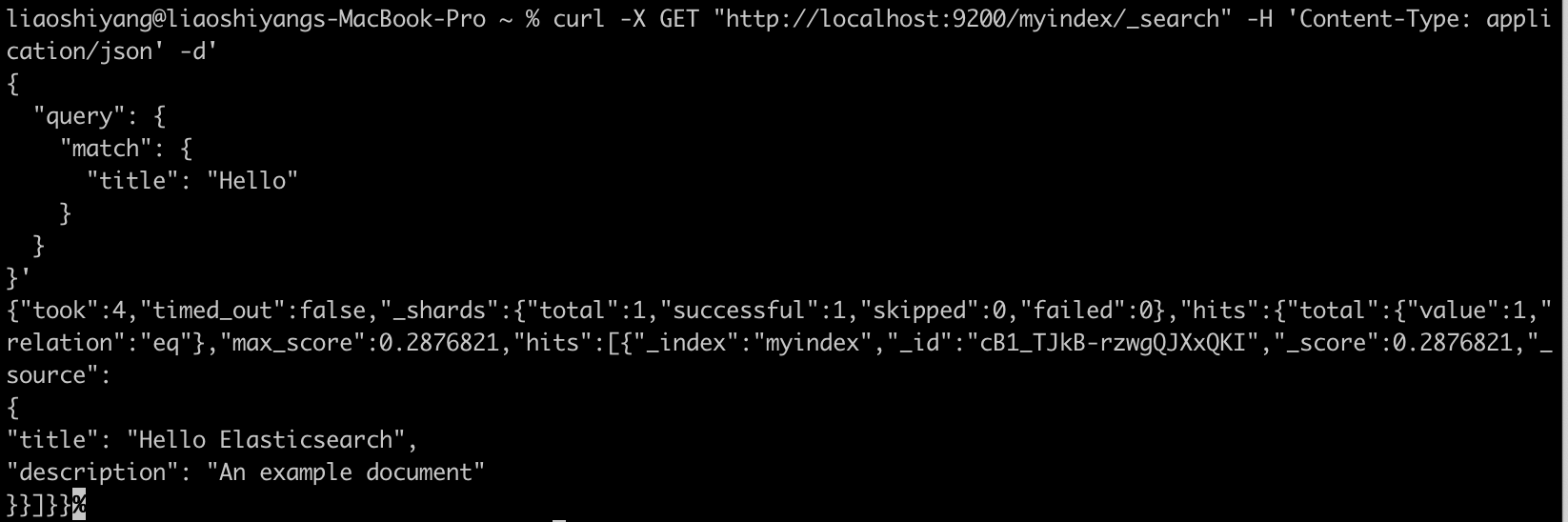

4. Search documents

```shell
# Search documents
curl -X GET "http://localhost:9200/myindex/_search" -H 'Content-Type: application/json' -d'
{
  "query": {
    "match": {
      "title": "Hello"
    }
  }
}'
```
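The `_search` response wraps matches in `hits`:

- `hits.total.value` is the match count. It can be a lower bound, in which case `relation` is `gte`.
- Each entry in `hits.hits` carries `_id`, `_score` and the original `_source`.

Below is a minimal sketch that pulls the titles out of such a response, with `titles_from_response` as a hypothetical helper. The sample response in the test is hand-written for illustration, not output captured from the command above.

A freshly indexed document only becomes searchable after the next periodic refresh (by default every second). Running the search immediately after step 3 can therefore return no hits.

```python
from typing import Any, Dict, List, Tuple


def titles_from_response(response: Dict[str, Any]) -> Tuple[int, List[str]]:
    """Return (total hit count, titles of the returned hits) from a _search response."""
    hits = response["hits"]
    total = hits["total"]["value"]
    # _source holds the JSON document exactly as it was indexed
    titles = [hit["_source"].get("title", "") for hit in hits["hits"]]
    return total, titles
```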

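Conclusion

Elasticsearch is a benchmark product in search and analytics. It wraps Lucene's capabilities and adds distribution, ease of use, and integration with data visualization and security monitoring. That combination turned the search engine from a specialist technology into readily available infrastructure.

Although the license changes and the OpenSearch fork stirred controversy, its practical value for enterprises and developers remains hard to replace.

🚀 Coming up next

In the next post we'll introduce OpenSearch, this fork of Elasticsearch. We'll look at its current state and technical characteristics, and compare it with Elasticsearch in detail. If there's anything you'd particularly like covered, let us know in advance!

💬 Join the discussion

- Are you or your company using Elasticsearch these days? For what use cases?

- Have you had to choose between Elasticsearch and OpenSearch?

- What do you think about Elasticsearch's license changes?

If you're interested in search technology, feel free to add me on WeChat (ID: lsy965145175) with the note "搜索百科". I'll invite you to our search technology discussion group to learn and exchange ideas together!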


🔗 References

Original article: https://infinilabs.cn/blog/2025/search-wiki-3-elasticsearch/


ES Tuning Series: Optimizing Bulk Write Performance with Gateway in Practice

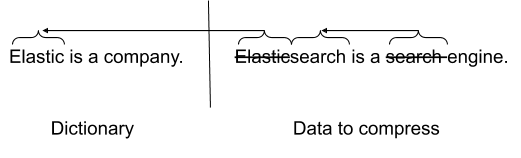

Background: how bulk is used for optimization

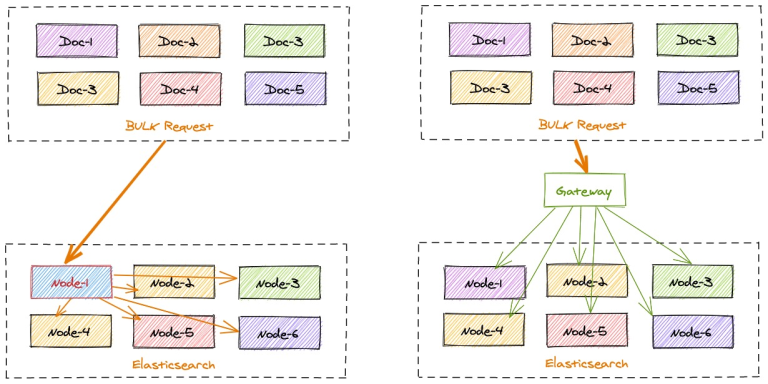

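In Elasticsearch write optimization, the bulk API is widely used for batch processing. It lets a client submit many operations (index, update, delete, and so on) in a single request, which makes data processing more efficient. The operations are packed into one HTTP request body and submitted to Elasticsearch together, so the server can process many of them at once.

The core value of this optimization is fewer network round trips and less connection setup overhead. Each individual write goes through a full request-response cycle. A batch, by contrast, packs many operations together and completes in one exchange what would otherwise take many. This saves time. More importantly, it frees system resources so the server can focus on actual data processing instead of repeated protocol handshakes and state bookkeeping.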
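Concretely, the bulk body is newline-delimited JSON (NDJSON):

- Every operation starts with an action line.
- `index` and `create` are followed by the document.
- `update` is followed by a line holding the partial document under `doc`.
- `delete` has no second line.
- The whole body must end with a newline.

Below is a minimal sketch that serializes a list of operations. The tuple layout and the name `build_bulk_body` are assumptions made for illustration.

```python
import json
from typing import Any, Dict, Iterable, Optional, Tuple

# (action, index, document id or None, document body or None for delete)
Op = Tuple[str, str, Optional[str], Optional[Dict[str, Any]]]


def build_bulk_body(ops: Iterable[Op]) -> str:
    """Serialize operations into an NDJSON body for POST /_bulk."""
    lines = []
    for action, index, doc_id, body in ops:
        meta: Dict[str, Any] = {"_index": index}
        if doc_id is not None:
            meta["_id"] = doc_id
        lines.append(json.dumps({action: meta}))  # action line
        if action == "update":
            lines.append(json.dumps({"doc": body}))  # partial document
        elif action in ("index", "create"):
            lines.append(json.dumps(body))  # full document
        # delete: action line only
    return "\n".join(lines) + "\n"  # the body must end with a newline
```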

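Batch requests do make writes more efficient and let the ES cluster devote more resources to processing writes together. But beyond the communication between the client and the cluster, are there other intermediate steps that can be optimized?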

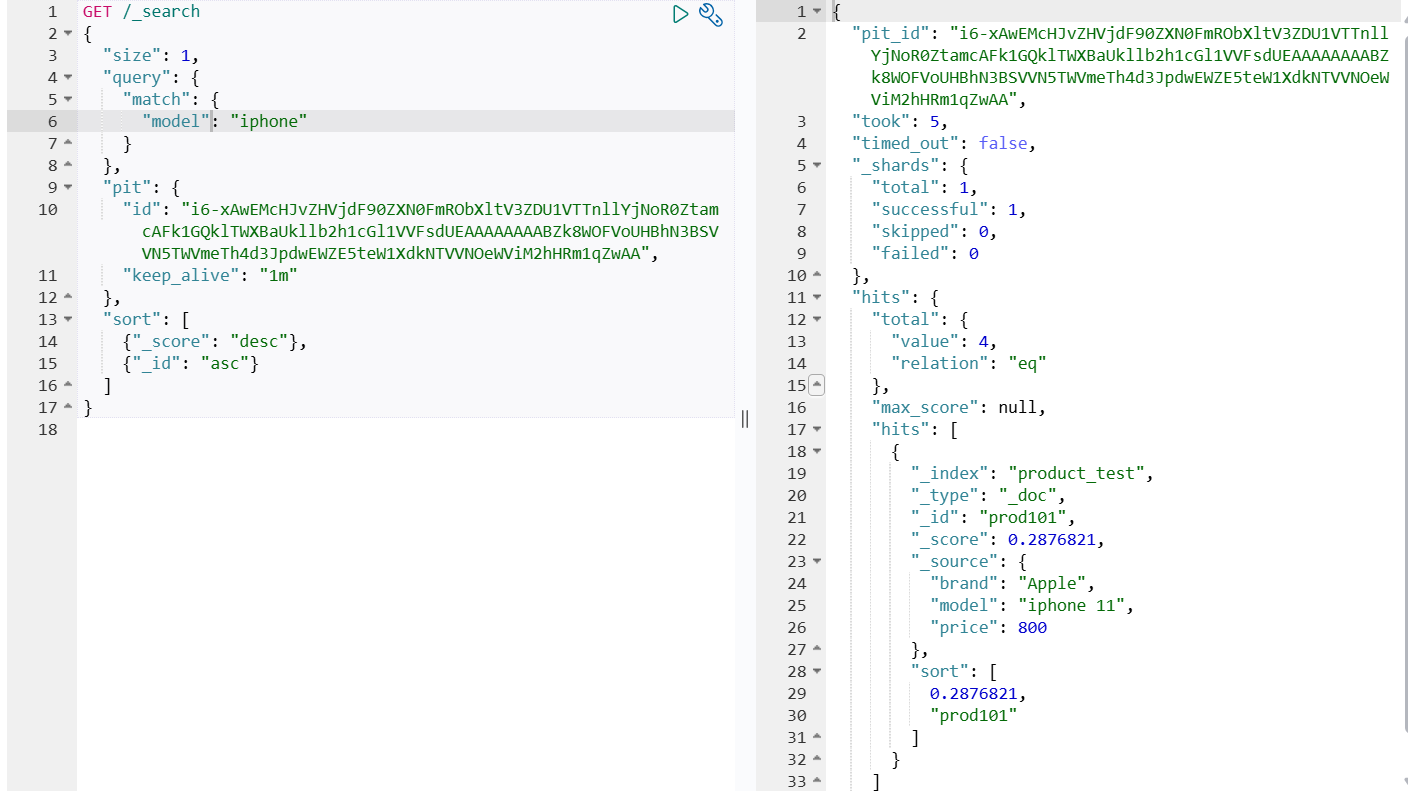

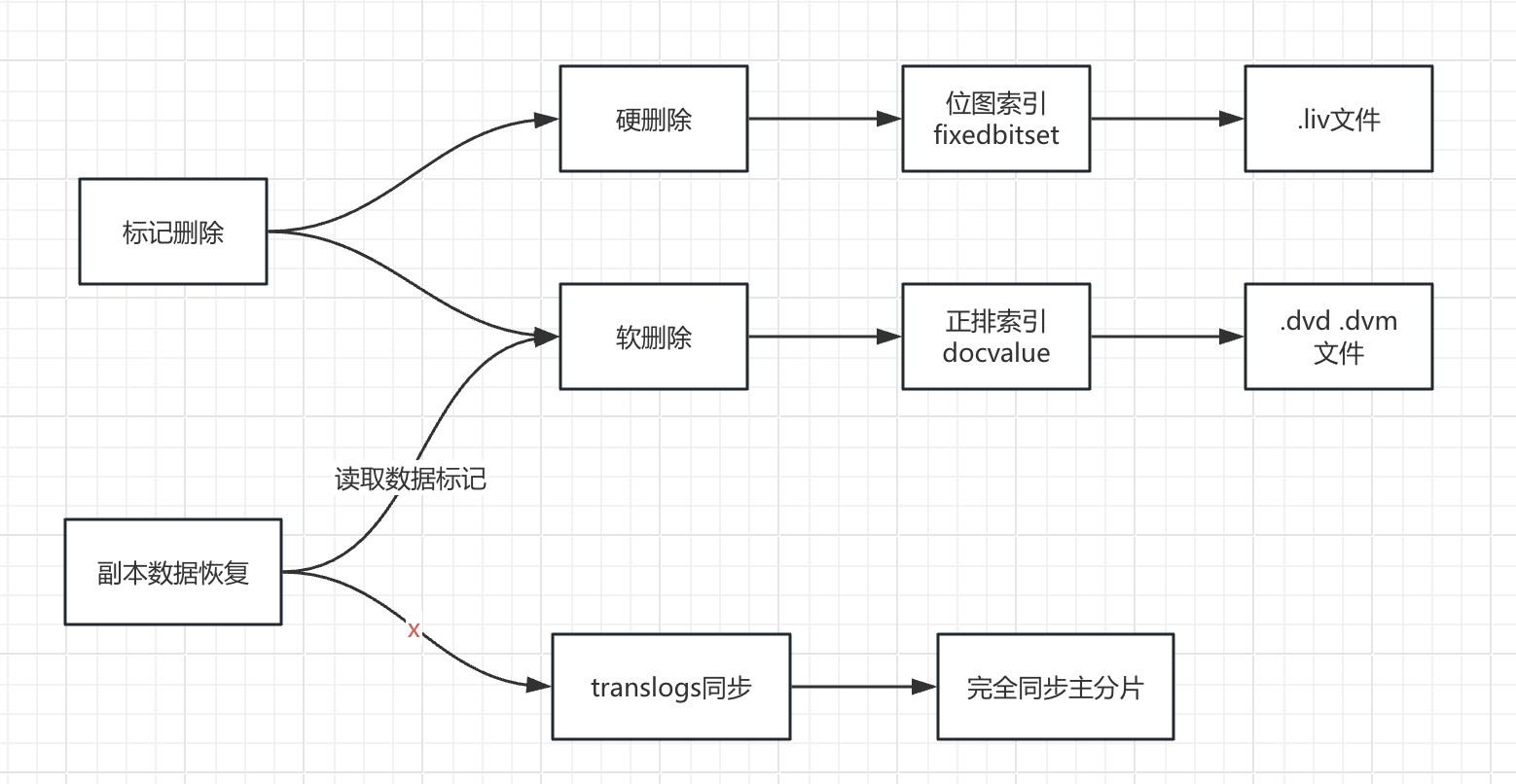

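Where the Gateway optimizes

The idea behind bulk is to consolidate scattered day-to-day writes, minimize per-request overhead, and make the best use of resources. In short: spend resources where they count.

However, after Elasticsearch receives a bulk request, the coordinating node still has to compute each document's target shard from its ID and forward the data to the corresponding data nodes. This step has a cost too. If the documents in a bulk request are scattered so that every shard receives writes, the benefit of consolidating writes with bulk is not fully realized.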
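The fan-out described above can be estimated with the routing rule from the Elasticsearch `_routing` documentation: `shard_num = (hash(_routing) % num_routing_shards) / routing_factor`, where `_routing` defaults to the document ID. The sketch below uses `zlib.crc32` as a stand-in hash (Elasticsearch uses Murmur3). Its shard numbers therefore differ from a real cluster's; only the distribution pattern is meaningful. `shard_for` and `shard_fanout` are hypothetical helpers.

```python
import zlib
from collections import Counter
from typing import Iterable, Optional


def shard_for(routing: str, num_primary_shards: int,
              num_routing_shards: Optional[int] = None) -> int:
    """Target shard per the documented formula, with crc32 standing in for Murmur3."""
    num_routing_shards = num_routing_shards or num_primary_shards
    routing_factor = num_routing_shards // num_primary_shards
    return (zlib.crc32(routing.encode()) % num_routing_shards) // routing_factor


def shard_fanout(ids: Iterable[str], num_primary_shards: int) -> Counter:
    """Count how many documents of one bulk batch go to each shard."""
    return Counter(shard_for(doc_id, num_primary_shards) for doc_id in ids)
```

Take 10,000 distinct IDs and 6 primary shards, the shard count of the test indices used later. Every shard receives roughly a sixth of the batch, so a single bulk request touches all six shards.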

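The gateway's write acceleration carries the bulk optimization idea through to its conclusion.

The gateway can compute locally where each indexed document will be stored in the backend Elasticsearch cluster, so it can target write requests precisely.

A single bulk request may contain data destined for several backend nodes. The bulk_reshuffle filter breaks up a normal bulk request and regroups its operations by target node or shard. This brings several benefits:

- Elasticsearch nodes no longer have to forward requests again after receiving them, which reduces traffic and load inside the cluster.
- No single node becomes a hotspot, and work stays balanced across data nodes.
- As a result, the cluster's overall indexing throughput rises.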
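The regrouping step can be pictured with the sketch below, which handles index-only bulk bodies. It works in three steps:

1. It assigns a shard to each `index` action, generating an ID first when the action has none. That is roughly what the `fix_null_id: true` option in the gateway configuration of this post suggests.
2. It looks up the node holding that shard.
3. It emits one NDJSON body per node.

This is an illustration under stated assumptions, not the gateway's implementation. The shard-to-node table is hypothetical; a real client would derive it from cluster state, for example `GET _cat/shards`. `zlib.crc32` stands in for Elasticsearch's Murmur3 hash, so the shard numbers do not match a real cluster.

```python
import json
import uuid
import zlib
from collections import defaultdict
from typing import Dict, List


def reshuffle(ndjson: str, num_shards: int, shard_to_node: Dict[int, str]) -> Dict[str, str]:
    """Split an index-only bulk body into one bulk body per target node."""
    lines = ndjson.strip("\n").split("\n")
    per_node: Dict[str, List[str]] = defaultdict(list)
    # Lines alternate: action line, document line
    for action_line, source_line in zip(lines[0::2], lines[1::2]):
        action = json.loads(action_line)
        meta = action["index"]
        if "_id" not in meta:
            meta["_id"] = uuid.uuid4().hex  # give the document an ID so it can be routed
        # Stand-in for hash(_routing) % num_shards (Elasticsearch uses Murmur3)
        shard = zlib.crc32(meta["_id"].encode()) % num_shards
        per_node[shard_to_node[shard]] += [json.dumps(action), source_line]
    return {node: "\n".join(body) + "\n" for node, body in per_node.items()}
```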

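The overall optimization approach is shown in the figure below:

Hands-on

Let's try it out and see how much the gateway improves write throughput.

We use two test scenarios:

- A basic bulk write test without document IDs, writing batches directly. This resembles log or monitoring data collection.

- A write test with document IDs, closer to search use cases or big-data batch synchronization.

In both scenarios we compare writing directly to ES against writing through the gateway.

Besides a gateway and an ES cluster, the test materials are as follows.

Two test indices with identical mappings and different names: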

```
PUT gateway_bulk_test
{
  "settings": {
    "number_of_shards": 6,
    "number_of_replicas": 0
  },
  "mappings": {
    "properties": {
      "timestamp": { "type": "date", "format": "strict_date_optional_time" },
      "field1": { "type": "keyword" },
      "field2": { "type": "keyword" },
      "field3": { "type": "keyword" },
      "field4": { "type": "integer" },
      "field5": { "type": "keyword" },
      "field6": { "type": "float" }
    }
  }
}

PUT bulk_test
{
  "settings": {
    "number_of_shards": 6,
    "number_of_replicas": 0
  },
  "mappings": {
    "properties": {
      "timestamp": { "type": "date", "format": "strict_date_optional_time" },
      "field1": { "type": "keyword" },
      "field2": { "type": "keyword" },
      "field3": { "type": "keyword" },
      "field4": { "type": "integer" },
      "field5": { "type": "keyword" },
      "field6": { "type": "float" }
    }
  }
}
```

The gateway configuration file is as follows:

```yaml
path.data: data
path.logs: log

entry:
  - name: my_es_entry
    enabled: true
    router: my_router
    max_concurrency: 200000
    network:
      binding: 0.0.0.0:8000

flow:
  - name: async_bulk
    filter:
      - bulk_reshuffle:
          when:
            contains:
              _ctx.request.path: /_bulk
          elasticsearch: prod
          level: node
          partition_size: 1
          fix_null_id: true
      - elasticsearch:
          elasticsearch: prod #elasticsearch configure reference name
          max_connection_per_node: 1000 #max tcp connection to upstream, default for all nodes
          max_response_size: -1 #default for all nodes
          balancer: weight
          refresh: # refresh upstream nodes list, need to enable this feature to use elasticsearch nodes auto discovery
            enabled: true
            interval: 60s
          filter:
            roles:
              exclude:
                - master

router:
  - name: my_router
    default_flow: async_bulk

elasticsearch:
  - name: prod
    enabled: true
    endpoints:
      - https://127.0.0.1:9221
      - https://127.0.0.1:9222
      - https://127.0.0.1:9223
    basic_auth:
      username: admin
      password: admin

pipeline:
  - name: bulk_request_ingest
    auto_start: true
    keep_running: true
    retry_delay_in_ms: 1000
    processor:
      - bulk_indexing:
          max_connection_per_node: 100
          num_of_slices: 3
          max_worker_size: 30
          idle_timeout_in_seconds: 10
          bulk:
            compress: false
            batch_size_in_mb: 10
            batch_size_in_docs: 10000
          consumer:
            fetch_max_messages: 100
          queue_selector:
            labels:
              type: bulk_reshuffle
```

The test script is as follows:

```python
#!/usr/bin/env python3
"""
ES bulk write performance test script
"""

import hashlib
import json
import random
import string
import time
from datetime import datetime
from typing import Any, Dict

import requests
import urllib3

# Suppress the warning emitted for HTTPS requests with verify_ssl=False
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)


class ESBulkTester:
    def __init__(self):
        # Configuration (adjust as needed)
        self.es_configs = [
            {
                "name": "ES direct",
                "url": "https://127.0.0.1:9221",
                "index": "bulk_test",
                "username": "admin",  # replace with the real username
                "password": "admin",  # replace with the real password
                "verify_ssl": False,  # certificate verification off (e.g. self-signed cert)
            },
            {
                "name": "Gateway proxy",
                "url": "http://localhost:8000",
                "index": "gateway_bulk_test",
                "username": None,  # no authentication required
                "password": None,
                "verify_ssl": False,
            },
        ]
        self.batch_size = 10000  # documents per bulk request
        self.log_interval = 100000  # print progress every N bulk requests

        # ID rule: prefix zero-padded to 2 digits + suffix zero-padded to 5 digits
        self.id_prefix_start = 1
        self.id_prefix_end = 999  # prefix range: 1-999
        self.id_suffix_start = 1
        self.id_suffix_end = 9999  # suffix range: 1-9999

        # Current ID counters
        self.current_prefix = self.id_prefix_start
        self.current_suffix = self.id_suffix_start

    def generate_id(self) -> str:
        """Generate a deterministic ID: 2-digit prefix + 5-digit suffix."""
        id_str = f"{self.current_prefix:02d}{self.current_suffix:05d}"
        # Advance the counters, wrapping around at the configured limits
        self.current_suffix += 1
        if self.current_suffix > self.id_suffix_end:
            self.current_suffix = self.id_suffix_start
            self.current_prefix += 1
            if self.current_prefix > self.id_prefix_end:
                self.current_prefix = self.id_prefix_start
        return id_str

    def generate_random_hash(self, length: int = 32) -> str:
        """Generate a random MD5 hex digest."""
        random_string = "".join(random.choices(string.ascii_letters + string.digits, k=length))
        return hashlib.md5(random_string.encode()).hexdigest()

    def generate_document(self) -> Dict[str, Any]:
        """Generate a random document."""
        return {
            "timestamp": datetime.now().isoformat(),
            "field1": self.generate_random_hash(),
            "field2": self.generate_random_hash(),
            "field3": self.generate_random_hash(),
            "field4": random.randint(1, 1000),
            "field5": random.choice(["A", "B", "C", "D"]),
            "field6": random.uniform(0.1, 100.0),
        }

    def create_bulk_payload(self, index_name: str) -> str:
        """Build an NDJSON bulk payload."""
        bulk_data = []
        for _ in range(self.batch_size):
            # doc_id = self.generate_id()  # uncomment for the "with document ID" scenario
            doc = self.generate_document()
            # Action line for an index operation
            bulk_data.append(json.dumps({
                "index": {
                    "_index": index_name,
                    # "_id": doc_id,  # uncomment for the "with document ID" scenario
                }
            }))
            bulk_data.append(json.dumps(doc))
        # The bulk API requires the body to end with a newline
        return "\n".join(bulk_data) + "\n"

    def bulk_index(self, config: Dict[str, Any], payload: str) -> bool:
        """Send one bulk request."""
        url = f"{config['url']}/_bulk"
        headers = {"Content-Type": "application/x-ndjson"}
        # Authentication, if configured
        auth = None
        if config.get("username") and config.get("password"):
            auth = (config["username"], config["password"])
        try:
            response = requests.post(
                url,
                data=payload,
                headers=headers,
                auth=auth,
                verify=config.get("verify_ssl", True),
                timeout=30,
            )
            # Note: HTTP 200 does not guarantee every item succeeded; per-item
            # failures are reported with "errors": true in the response body
            return response.status_code == 200
        except Exception as e:
            print(f"Bulk request failed: {e}")
            return False

    def refresh_index(self, config: Dict[str, Any]) -> bool:
        """Refresh the index."""
        url = f"{config['url']}/{config['index']}/_refresh"
        auth = None
        if config.get("username") and config.get("password"):
            auth = (config["username"], config["password"])
        try:
            response = requests.post(
                url,
                auth=auth,
                verify=config.get("verify_ssl", True),
                timeout=10,
            )
            success = response.status_code == 200
            print(f"Index refresh {'succeeded' if success else 'failed'}: {config['index']}")
            return success
        except Exception as e:
            print(f"Index refresh failed: {e}")
            return False

    def run_test(self, config: Dict[str, Any], round_num: int, total_iterations: int = 100000):
        """Run one timed test round."""
        test_name = f"{config['name']} - round {round_num}"
        print(f"\nStarting test: {test_name}")
        print(f"ES URL: {config['url']}")
        print(f"Index: {config['index']}")
        print(f"Authentication: {'yes' if config.get('username') else 'no'}")
        print(f"Documents per bulk: {self.batch_size}")
        print(f"Planned documents: {total_iterations * self.batch_size}")
        print("-" * 50)

        start_time = time.time()
        success_count = 0
        error_count = 0
        for i in range(1, total_iterations + 1):
            payload = self.create_bulk_payload(config["index"])
            if self.bulk_index(config, payload):
                success_count += 1
            else:
                error_count += 1
            # Progress log every log_interval bulk requests
            if i % self.log_interval == 0:
                elapsed_time = time.time() - start_time
                rate = i / elapsed_time if elapsed_time > 0 else 0
                print(f"Completed {i:,} bulk requests, elapsed: {elapsed_time:.2f}s, rate: {rate:.2f} bulk/s")

        total_time = time.time() - start_time
        total_docs = total_iterations * self.batch_size
        print(f"\n{test_name} finished!")
        print(f"Total time: {total_time:.2f}s")
        print(f"Successful bulk requests: {success_count:,}")
        print(f"Failed bulk requests: {error_count:,}")
        print(f"Total documents: {total_docs:,}")
        print(f"Average rate: {success_count / total_time:.2f} bulk/s")
        print(f"Document rate: {total_docs / total_time:.2f} docs/s")
        print("=" * 60)

        return {
            "test_name": test_name,
            "config_name": config["name"],
            "round": round_num,
            "es_url": config["url"],
            "index": config["index"],
            "total_time": total_time,
            "success_count": success_count,
            "error_count": error_count,
            "total_docs": total_docs,
            "bulk_rate": success_count / total_time,
            "doc_rate": total_docs / total_time,
        }

    def run_comparison_test(self, total_iterations: int = 10000):
        """Run the comparison test against both endpoints."""
        print("ES bulk write performance test started")
        print(f"Test time: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}")
        print("=" * 60)
        results = []
        rounds = 2  # rounds per endpoint

        for config in self.es_configs:
            print(f"\nTesting configuration: {config['name']}")
            print("*" * 40)
            for round_num in range(1, rounds + 1):
                # Reset the ID counters before every round so each round (and each
                # endpoint) uses the same ID sequence: round 1 inserts, later rounds
                # overwrite. Only relevant when the _id lines are uncommented.
                self.current_prefix = self.id_prefix_start
                self.current_suffix = self.id_suffix_start
                if round_num == 1:
                    print("Round 1: insert mode (new documents)")
                else:
                    print(f"Round {round_num}: update mode, reusing round 1 IDs")
                result = self.run_test(config, round_num, total_iterations)
                results.append(result)
                # Refresh the index after every round
                print(f"\nRound {round_num} finished, refreshing index...")
                self.refresh_index(config)
                print(f"{config['name']} round {round_num} done\n")

        # Print the comparison, grouped by configuration
        print("\nPerformance comparison:")
        print("=" * 80)
        config_results: Dict[str, list] = {}
        for result in results:
            config_results.setdefault(result["config_name"], []).append(result)

        for config_name, rounds_data in config_results.items():
            print(f"\n{config_name}:")
            total_time = 0
            total_bulk_rate = 0
            total_doc_rate = 0
            for round_data in rounds_data:
                attempts = round_data["success_count"] + round_data["error_count"]
                print(f"  Round {round_data['round']}:")
                print(f"    Time: {round_data['total_time']:.2f}s")
                print(f"    Bulk rate: {round_data['bulk_rate']:.2f} bulk/s")
                print(f"    Doc rate: {round_data['doc_rate']:.2f} docs/s")
                print(f"    Success rate: {round_data['success_count'] / attempts * 100:.2f}%")
                total_time += round_data["total_time"]
                total_bulk_rate += round_data["bulk_rate"]
                total_doc_rate += round_data["doc_rate"]
            print("  Average:")
            print(f"    Total time: {total_time:.2f}s")
            print(f"    Avg bulk rate: {total_bulk_rate / len(rounds_data):.2f} bulk/s")
            print(f"    Avg doc rate: {total_doc_rate / len(rounds_data):.2f} docs/s")

        # Overall comparison between the first two configurations
        if len(config_results) >= 2:
            names = list(config_results.keys())
            avg1 = sum(r["bulk_rate"] for r in config_results[names[0]]) / len(config_results[names[0]])
            avg2 = sum(r["bulk_rate"] for r in config_results[names[1]]) / len(config_results[names[1]])
            if avg1 > avg2:
                faster, rate_diff = names[0], avg1 - avg2
            else:
                faster, rate_diff = names[1], avg2 - avg1
            print("\nOverall comparison:")
            print(f"{faster} performs better on average: {rate_diff:.2f} bulk/s higher")
            print(f"Improvement: {rate_diff / min(avg1, avg2) * 100:.1f}%")


def main():
    """Entry point."""
    tester = ESBulkTester()
    # 10,000 documents per bulk x 300 bulk requests = 3,000,000 documents per round
    tester.run_comparison_test(total_iterations=300)


if __name__ == "__main__":
    main()
```

1. Log scenario: writes without document IDs

Test conditions:

- Bulk requests carry no document IDs.

- 10,000 documents per bulk request, 3,000,000 documents written in total (300 bulk requests).

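Here the script is used as shown above, with the `doc_id` lines in `create_bulk_payload` left commented out.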

Results:

```text
Performance comparison:
================================================================================

ES direct:
  Round 1:
    Time: 152.29s
    Bulk rate: 1.97 bulk/s
    Doc rate: 19699.59 docs/s
    Success rate: 100.00%
  Average:
    Total time: 152.29s
    Avg bulk rate: 1.97 bulk/s
    Avg doc rate: 19699.59 docs/s

Gateway proxy:
  Round 1:
    Time: 115.63s
    Bulk rate: 2.59 bulk/s
    Doc rate: 25944.35 docs/s
    Success rate: 100.00%
  Average:
    Total time: 115.63s
    Avg bulk rate: 2.59 bulk/s
    Avg doc rate: 25944.35 docs/s

Overall comparison:
Gateway proxy performs better on average: 0.62 bulk/s higher
Improvement: 31.7%
```

2. Business scenario: writes with document IDs

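Test conditions:

- Bulk requests carry document IDs. Both test runs generate IDs with the same rule, so the IDs repeat.

- 10,000 documents per bulk request, 3,000,000 documents written in total (300 bulk requests).

To enable IDs, uncomment the `doc_id = self.generate_id()` line and the `"_id": doc_id` line in `create_bulk_payload` of the Python script.

Results: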

```text
Performance comparison:
================================================================================

ES direct:
  Round 1:
    Time: 155.30s
    Bulk rate: 1.93 bulk/s
    Doc rate: 19317.39 docs/s
    Success rate: 100.00%
  Average:
    Total time: 155.30s
    Avg bulk rate: 1.93 bulk/s
    Avg doc rate: 19317.39 docs/s

Gateway proxy:
  Round 1:
    Time: 116.73s
    Bulk rate: 2.57 bulk/s
    Doc rate: 25700.06 docs/s
    Success rate: 100.00%
  Average:
    Total time: 116.73s
    Avg bulk rate: 2.57 bulk/s
    Avg doc rate: 25700.06 docs/s

Overall comparison:
Gateway proxy performs better on average: 0.64 bulk/s higher
Improvement: 33.0%
```

Summary

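The gateway helped in both the log scenario and the big-data or search data synchronization scenario, where business value matters more. In these tests, writing through the gateway consistently cut the total write time by about 24%-25%: from 152.29s to 115.63s without IDs, and from 155.30s to 116.73s with IDs. That corresponds to roughly 32%-33% higher throughput.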
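The script's "improvement" and the time saved are two views of the same result. The first is the throughput gain relative to writing directly. The second is the reduction relative to the direct-write time. A quick check with the total times reported above (the helper names are only for illustration):

```python
def time_saved(direct_s: float, gateway_s: float) -> float:
    """Fraction of the direct-write time saved by going through the gateway."""
    return 1 - gateway_s / direct_s


def throughput_gain(direct_s: float, gateway_s: float) -> float:
    """Relative throughput gain for the same number of documents."""
    return direct_s / gateway_s - 1


for name, direct, gateway in [("without IDs", 152.29, 115.63), ("with IDs", 155.30, 116.73)]:
    print(f"{name}: time saved {time_saved(direct, gateway):.1%}, "
          f"throughput gain {throughput_gain(direct, gateway):.1%}")
```

The output matches the script's own figures:

- Without IDs: 24.1% time saved, 31.7% throughput gain.
- With IDs: 24.8% time saved, 33.0% throughput gain.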


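Author: Jin Duoan (金多安), search operations expert at INFINI Labs (极限科技), Elastic-certified expert, and responsible editor of the Search Community (搜索客社区) daily. He has long worked in search operations, digs into the principles behind ES and Lucene search technology, and keeps a close eye on developments in the field.

Original article: https://infinilabs.cn/blog/2025/gateway-bulk-write-performance-optimization/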

背景:bulk 优化的应用

在 ES 的写入优化里,bulk 操作被广泛地用于批量处理数据。bulk 操作允许用户一次提交多个数据操作,如索引、更新、删除等,从而提高数据处理效率。bulk 操作的实现原理是,将数据操作请求打包成 HTTP 请求,并批量提交给 Elasticsearch 服务器。这样,Elasticsearch 服务器就可以一次处理多个数据操作,从而提高处理效率。

这种优化的核心价值在于减少了网络往返的次数和连接建立的开销。每一次单独的写入操作都需要经历完整的请求-响应周期,而批量写入则是将多个操作打包在一起,用一次通信完成原本需要多次交互的工作。这不仅仅节省了时间,更重要的是释放了系统资源,让服务器能够专注于真正的数据处理,而不是频繁的协议握手和状态维护。

这样的批量请求的确是可以优化写入请求的效率,让 ES 集群获得更多的资源去做写入请求的集中处理。但是除了客户端与 ES 集群的通讯效率优化,还有其他中间过程能优化么?

Gateway 的优化点

bulk 的优化理念是将日常零散的写入需求做集中化的处理,尽量减低日常请求的损耗,完成资源最大化的利用。简而言之就是“好钢用在刀刃上”。

但是 ES 在收到 bulk 写入请求后,也是需要协调节点根据文档的 id 计算所属的分片来将数据分发到对应的数据节点的。这个过程也是有一定损耗的,如果 bulk 请求中数据分布的很散,每个分片都需要进行写入,原本 bulk 集中写入的需求优势则还是没有得到最理想化的提升。

gateway 的写入加速则对 bulk 的优化理念的最大化补全。

gateway 可以本地计算每个索引文档对应后端 Elasticsearch 集群的目标存放位置,从而能够精准的进行写入请求定位。

在一批 bulk 请求中,可能存在多个后端节点的数据,bulk_reshuffle 过滤器用来将正常的 bulk 请求打散,按照目标节点或者分片进行拆分重新组装,避免 Elasticsearch 节点收到请求之后再次进行请求分发, 从而降低 Elasticsearch 集群间的流量和负载,也能避免单个节点成为热点瓶颈,确保各个数据节点的处理均衡,从而提升集群总体的索引吞吐能力。

整理的优化思路如下图:

优化实践

那我们来实践一下,看看 gateway 能提升多少的写入。

这里我们分 2 个测试场景:

- 基础集中写入测试,不带文档 id,直接批量写入。这个场景更像是日志或者监控数据采集的场景。

- 带文档 id 的写入测试,更偏向搜索场景或者大数据批同步的场景。

2 个场景都进行直接写入 ES 和 gateway 转发 ES 的效率比对。

测试材料除了需要备一个网关和一套 es 外,其余的内容如下:

测试索引 mapping 一致,名称区分:

PUT gateway_bulk_test

{

"settings": {

"number_of_shards": 6,

"number_of_replicas": 0

},

"mappings": {

"properties": {

"timestamp": {

"type": "date",

"format": "strict_date_optional_time"

},

"field1": {

"type": "keyword"

},

"field2": {

"type": "keyword"

},

"field3": {

"type": "keyword"

},

"field4": {

"type": "integer"

},

"field5": {

"type": "keyword"

},

"field6": {

"type": "float"

}

}

}

}

PUT bulk_test

{

"settings": {

"number_of_shards": 6,

"number_of_replicas": 0

},

"mappings": {

"properties": {

"timestamp": {

"type": "date",

"format": "strict_date_optional_time"

},

"field1": {

"type": "keyword"

},

"field2": {

"type": "keyword"

},

"field3": {

"type": "keyword"

},

"field4": {

"type": "integer"

},

"field5": {

"type": "keyword"

},

"field6": {

"type": "float"

}

}

}

}gateway 的配置文件如下:

path.data: data

path.logs: log

entry:

- name: my_es_entry

enabled: true

router: my_router

max_concurrency: 200000

network:

binding: 0.0.0.0:8000

flow:

- name: async_bulk

filter:

- bulk_reshuffle:

when:

contains:

_ctx.request.path: /_bulk

elasticsearch: prod

level: node

partition_size: 1

fix_null_id: true

- elasticsearch:

elasticsearch: prod #elasticsearch configure reference name

max_connection_per_node: 1000 #max tcp connection to upstream, default for all nodes

max_response_size: -1 #default for all nodes

balancer: weight

refresh: # refresh upstream nodes list, need to enable this feature to use elasticsearch nodes auto discovery

enabled: true

interval: 60s

filter:

roles:

exclude:

- master

router:

- name: my_router

default_flow: async_bulk

elasticsearch:

- name: prod

enabled: true

endpoints:

- https://127.0.0.1:9221

- https://127.0.0.1:9222

- https://127.0.0.1:9223

basic_auth:

username: admin

password: admin

pipeline:

- name: bulk_request_ingest

auto_start: true

keep_running: true

retry_delay_in_ms: 1000

processor:

- bulk_indexing:

max_connection_per_node: 100

num_of_slices: 3

max_worker_size: 30

idle_timeout_in_seconds: 10

bulk:

compress: false

batch_size_in_mb: 10

batch_size_in_docs: 10000

consumer:

fetch_max_messages: 100

queue_selector:

labels:

type: bulk_reshuffle测试脚本如下:

#!/usr/bin/env python3

"""

ES Bulk写入性能测试脚本

"""

import hashlib

import json

import random

import string

import time

from typing import List, Dict, Any

import requests

from concurrent.futures import ThreadPoolExecutor

from datetime import datetime

import urllib3

# 禁用SSL警告

urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

class ESBulkTester:

def __init__(self):

# 配置变量 - 可修改

self.es_configs = [

{

"name": "ES直连",

"url": "https://127.0.0.1:9221",

"index": "bulk_test",

"username": "admin", # 修改为实际用户名

"password": "admin", # 修改为实际密码

"verify_ssl": False # HTTPS需要SSL验证

},

{

"name": "Gateway代理",

"url": "http://localhost:8000",

"index": "gateway_bulk_test",

"username": None, # 无需认证

"password": None,

"verify_ssl": False

}

]

self.batch_size = 10000 # 每次bulk写入条数

self.log_interval = 100000 # 每多少次bulk写入输出日志

# ID生成规则配置 - 前2位后5位

self.id_prefix_start = 1

self.id_prefix_end = 999 # 前3位: 01-999

self.id_suffix_start = 1

self.id_suffix_end = 9999 # 后4位: 0001-9999

# 当前ID计数器

self.current_prefix = self.id_prefix_start

self.current_suffix = self.id_suffix_start

def generate_id(self) -> str:

"""生成固定规则的ID - 前2位后5位"""

id_str = f"{self.current_prefix:02d}{self.current_suffix:05d}"

# 更新计数器

self.current_suffix += 1

if self.current_suffix > self.id_suffix_end:

self.current_suffix = self.id_suffix_start

self.current_prefix += 1

if self.current_prefix > self.id_prefix_end:

self.current_prefix = self.id_prefix_start

return id_str

def generate_random_hash(self, length: int = 32) -> str:

"""生成随机hash值"""

random_string = ''.join(random.choices(string.ascii_letters + string.digits, k=length))

return hashlib.md5(random_string.encode()).hexdigest()

def generate_document(self) -> Dict[str, Any]:

"""生成随机文档内容"""

return {

"timestamp": datetime.now().isoformat(),

"field1": self.generate_random_hash(),

"field2": self.generate_random_hash(),

"field3": self.generate_random_hash(),

"field4": random.randint(1, 1000),

"field5": random.choice(["A", "B", "C", "D"]),

"field6": random.uniform(0.1, 100.0)

}

def create_bulk_payload(self, index_name: str) -> str:

"""创建bulk写入payload"""

bulk_data = []

for _ in range(self.batch_size):

#doc_id = self.generate_id()

doc = self.generate_document()

# 添加index操作

bulk_data.append(json.dumps({

"index": {

"_index": index_name,

# "_id": doc_id

}

}))

bulk_data.append(json.dumps(doc))

return "\n".join(bulk_data) + "\n"

    def bulk_index(self, config: Dict[str, Any], payload: str) -> bool:
        """Send one bulk request"""
        url = f"{config['url']}/_bulk"
        headers = {
            "Content-Type": "application/x-ndjson"
        }
        # Authentication
        auth = None
        if config.get('username') and config.get('password'):
            auth = (config['username'], config['password'])
        try:
            response = requests.post(
                url,
                data=payload,
                headers=headers,
                auth=auth,
                verify=config.get('verify_ssl', True),
                timeout=30
            )
            # HTTP 200 only means the request was processed; per-document failures are
            # reported via the "errors" flag in the response body, which is not checked here
            return response.status_code == 200
        except Exception as e:
            print(f"Bulk write failed: {e}")
            return False

    def refresh_index(self, config: Dict[str, Any]) -> bool:
        """Refresh the index"""
        url = f"{config['url']}/{config['index']}/_refresh"
        # Authentication
        auth = None
        if config.get('username') and config.get('password'):
            auth = (config['username'], config['password'])
        try:
            response = requests.post(
                url,
                auth=auth,
                verify=config.get('verify_ssl', True),
                timeout=10
            )
            success = response.status_code == 200
            print(f"Index refresh {'succeeded' if success else 'failed'}: {config['index']}")
            return success
        except Exception as e:
            print(f"Index refresh failed: {e}")
            return False

    def run_test(self, config: Dict[str, Any], round_num: int, total_iterations: int = 100000):
        """Run one round of the performance test"""
        test_name = f"{config['name']}-round {round_num}"
        print(f"\nStarting test: {test_name}")
        print(f"ES URL: {config['url']}")
        print(f"Index name: {config['index']}")
        print(f"Authentication: {'yes' if config.get('username') else 'no'}")
        print(f"Docs per bulk: {self.batch_size}")
        print(f"Planned total docs: {total_iterations * self.batch_size}")
        print("-" * 50)
        start_time = time.time()
        success_count = 0
        error_count = 0
        # Note: payload generation time is included in the measured time
        for i in range(1, total_iterations + 1):
            payload = self.create_bulk_payload(config['index'])
            if self.bulk_index(config, payload):
                success_count += 1
            else:
                error_count += 1
            # Print progress every log_interval bulk requests
            if i % self.log_interval == 0:
                elapsed_time = time.time() - start_time
                rate = i / elapsed_time if elapsed_time > 0 else 0
                print(f"Completed {i:,} bulk requests, elapsed: {elapsed_time:.2f}s, rate: {rate:.2f} bulk/s")
        end_time = time.time()
        total_time = end_time - start_time
        # Planned document count (includes documents of failed bulk requests)
        total_docs = total_iterations * self.batch_size
        print(f"\n{test_name} finished!")
        print(f"Total time: {total_time:.2f}s")
        print(f"Successful bulk requests: {success_count:,}")
        print(f"Failed bulk requests: {error_count:,}")
        print(f"Total docs: {total_docs:,}")
        print(f"Average rate: {success_count/total_time:.2f} bulk/s")
        print(f"Doc write rate: {total_docs/total_time:.2f} docs/s")
        print("=" * 60)
        return {
            "test_name": test_name,
            "config_name": config['name'],
            "round": round_num,
            "es_url": config['url'],
            "index": config['index'],
            "total_time": total_time,
            "success_count": success_count,
            "error_count": error_count,
            "total_docs": total_docs,
            "bulk_rate": success_count/total_time,
            "doc_rate": total_docs/total_time
        }

    def run_comparison_test(self, total_iterations: int = 10000):
        """Run the two-endpoint comparison test"""
        print("ES bulk write performance test started")
        print(f"Test time: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}")
        print("=" * 60)
        results = []
        rounds = 2  # rounds per endpoint
        # Test every configured endpoint in turn
        for config in self.es_configs:
            print(f"\nTesting config: {config['name']}")
            print("*" * 40)
            for round_num in range(1, rounds + 1):
                # Reset the ID counter before every round: round 1 writes new documents,
                # later rounds rewrite the same IDs (update mode). No effect when _id is not sent.
                self.current_prefix = self.id_prefix_start
                self.current_suffix = self.id_suffix_start
                if round_num == 1:
                    print("Round 1: insert mode")
                else:
                    print(f"Round {round_num}: update mode, reusing round-1 IDs")
                # Run the test
                result = self.run_test(config, round_num, total_iterations)
                results.append(result)
                # Refresh the index after every round
                print(f"\nRound {round_num} finished, refreshing index...")
                self.refresh_index(config)
                print(f"{config['name']} round {round_num} done\n")
        # Print the comparison
        print("\nPerformance comparison:")
        print("=" * 80)
        # Group results by config
        config_results = {}
        for result in results:
            config_name = result['config_name']
            if config_name not in config_results:
                config_results[config_name] = []
            config_results[config_name].append(result)
        for config_name, rounds_data in config_results.items():
            print(f"\n{config_name}:")
            total_time = 0
            total_bulk_rate = 0
            total_doc_rate = 0
            for round_data in rounds_data:
                print(f"  Round {round_data['round']}:")
                print(f"    Time: {round_data['total_time']:.2f}s")
                print(f"    Bulk rate: {round_data['bulk_rate']:.2f} bulk/s")
                print(f"    Doc rate: {round_data['doc_rate']:.2f} docs/s")
                print(f"    Success rate: {round_data['success_count']/(round_data['success_count']+round_data['error_count'])*100:.2f}%")
                total_time += round_data['total_time']
                total_bulk_rate += round_data['bulk_rate']
                total_doc_rate += round_data['doc_rate']
            avg_bulk_rate = total_bulk_rate / len(rounds_data)
            avg_doc_rate = total_doc_rate / len(rounds_data)
            print("  Average:")
            print(f"    Total time: {total_time:.2f}s")
            print(f"    Avg bulk rate: {avg_bulk_rate:.2f} bulk/s")
            print(f"    Avg doc rate: {avg_doc_rate:.2f} docs/s")
        # Overall comparison
        if len(config_results) >= 2:
            config_names = list(config_results.keys())
            config1_avg = sum(r['bulk_rate'] for r in config_results[config_names[0]]) / len(config_results[config_names[0]])
            config2_avg = sum(r['bulk_rate'] for r in config_results[config_names[1]]) / len(config_results[config_names[1]])
            if config1_avg > config2_avg:
                faster = config_names[0]
                rate_diff = config1_avg - config2_avg
            else:
                faster = config_names[1]
                rate_diff = config2_avg - config1_avg
            print("\nOverall comparison:")
            print(f"{faster} is faster on average: {rate_diff:.2f} bulk/s higher bulk rate")
            print(f"Improvement: {(rate_diff/min(config1_avg, config2_avg)*100):.1f}%")

def main():
    """Entry point"""
    tester = ESBulkTester()
    # Run the test: 10,000 docs per bulk x 300 bulk requests = 3,000,000 documents per round
    tester.run_comparison_test(total_iterations=300)


if __name__ == "__main__":
    main()

1. Log scenario: writes without a document ID

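Test conditions:

- Bulk requests carry no document ID (Elasticsearch generates the IDs)

- 10,000 documents per bulk request, 3 million documents in total (300 bulk requests)

Here the script is run exactly as shown above, with the "_id" lines left commented out.

Results: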

Performance comparison:
================================================================================

ES direct:
  Round 1:
    Time: 152.29s
    Bulk rate: 1.97 bulk/s
    Doc rate: 19699.59 docs/s
    Success rate: 100.00%
  Average:
    Total time: 152.29s
    Avg bulk rate: 1.97 bulk/s
    Avg doc rate: 19699.59 docs/s

Gateway proxy:
  Round 1:
    Time: 115.63s
    Bulk rate: 2.59 bulk/s
    Doc rate: 25944.35 docs/s
    Success rate: 100.00%
  Average:
    Total time: 115.63s
    Avg bulk rate: 2.59 bulk/s
    Avg doc rate: 25944.35 docs/s

Overall comparison:
Gateway proxy is faster on average: 0.62 bulk/s higher bulk rate
Improvement: 31.7%

2. Business scenario: writes with document IDs

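Test conditions:

- Bulk requests carry document IDs. Both test runs generate IDs with the same rule, so the same IDs are written repeatedly.

- 10,000 documents per bulk request, 3 million documents in total (300 bulk requests)

To send IDs, uncomment the "#doc_id = self.generate_id()" line and the "# "_id": doc_id" line in create_bulk_payload (lines 99 and 107 of the original script).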
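For reference, here is a minimal sketch of the NDJSON body that create_bulk_payload builds once those two lines are uncommented, shown next to the body without "_id". The helper name build_bulk_body and the sample documents are hypothetical. The layout itself is the standard _bulk format: one action line and one source line per document, with a trailing newline. With explicit IDs, writing the same IDs a second time overwrites the existing documents, which makes a repeated run an update workload.

```python
import json
from typing import Any, Dict, List, Optional


def build_bulk_body(index: str, docs: List[Dict[str, Any]],
                    ids: Optional[List[str]] = None) -> str:
    """Build a _bulk NDJSON body: one action line plus one source line per document.

    If ids is given, each action line carries an explicit "_id"; writing the same
    ids again updates (overwrites) the documents instead of adding new ones.
    """
    lines = []
    for i, doc in enumerate(docs):
        action: Dict[str, Any] = {"_index": index}
        if ids is not None:
            action["_id"] = ids[i]
        lines.append(json.dumps({"index": action}))
        lines.append(json.dumps(doc))
    # The bulk API requires the body to end with a newline
    return "\n".join(lines) + "\n"


docs = [{"field4": 1}, {"field4": 2}]
print(build_bulk_body("bulk_test", docs))                                # ES assigns IDs
print(build_bulk_body("bulk_test", docs, ids=["0100001", "0100002"]))   # explicit IDs
```

The IDs in the usage example follow the script's generate_id format: prefix 1 padded to "01", followed by suffix 1 padded to "00001".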

Results:

Performance comparison:
================================================================================

ES direct:
  Round 1:
    Time: 155.30s
    Bulk rate: 1.93 bulk/s
    Doc rate: 19317.39 docs/s
    Success rate: 100.00%
  Average:
    Total time: 155.30s
    Avg bulk rate: 1.93 bulk/s
    Avg doc rate: 19317.39 docs/s

Gateway proxy:
  Round 1:
    Time: 116.73s
    Bulk rate: 2.57 bulk/s
    Doc rate: 25700.06 docs/s
    Success rate: 100.00%
  Average:
    Total time: 116.73s
    Avg bulk rate: 2.57 bulk/s
    Avg doc rate: 25700.06 docs/s

Overall comparison:
Gateway proxy is faster on average: 0.64 bulk/s higher bulk rate
Improvement: 33.0%

Summary

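Two scenarios were tested: the log scenario, and business scenarios where the data carries more value, such as big-data or search data synchronization. In both, the gateway's write acceleration consistently cut write time by about 24%-25%, which corresponds to roughly 32%-33% higher write throughput.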
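A throughput gain and a time saving are easy to conflate, so here is the arithmetic on the total times reported above. The percentage printed by the script is a throughput gain. The time saved is measured relative to the direct-write time. The helper name speedup_stats is illustrative only.

```python
from typing import Tuple


def speedup_stats(direct_s: float, gateway_s: float) -> Tuple[float, float]:
    """Return (throughput gain %, write time saved %) for the same number of documents."""
    gain = (direct_s / gateway_s - 1) * 100          # how much higher the gateway's write rate is
    saved = (direct_s - gateway_s) / direct_s * 100  # how much wall-clock time is saved
    return gain, saved


# Total times (seconds) reported above; each run wrote 3 million documents
runs = [
    ("log scenario, no _id", 152.29, 115.63),
    ("business scenario, with _id", 155.30, 116.73),
]
for name, direct, gateway in runs:
    gain, saved = speedup_stats(direct, gateway)
    print(f"{name}: throughput +{gain:.1f}%, time saved {saved:.1f}%")
```

The throughput figures match the 31.7% and 33.0% printed by the test script. The time saved works out to about 24.1% and 24.8%.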

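About INFINI Gateway

INFINI Gateway is an open-source, high-performance data gateway for search scenarios. Every request is processed by the gateway before being forwarded to the backend search cluster. With INFINI Gateway you can implement index-level rate limiting, caching of common queries, auditing of query requests, on-the-fly modification of query results, and more.

Documentation: https://docs.infinilabs.com/gateway

Source code: https://github.com/infinilabs/gateway

Author: 金多安, search operations expert at 极限科技 (INFINI Labs), Elastic certified expert, and editor of the Search Community (搜索客社区) daily digest. He has long worked in search operations, regularly digs into the principles behind ES / Lucene search technology, and follows developments in search closely.

Original post: https://infinilabs.cn/blog/2025/gateway-bulk-write-performance-optimization/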

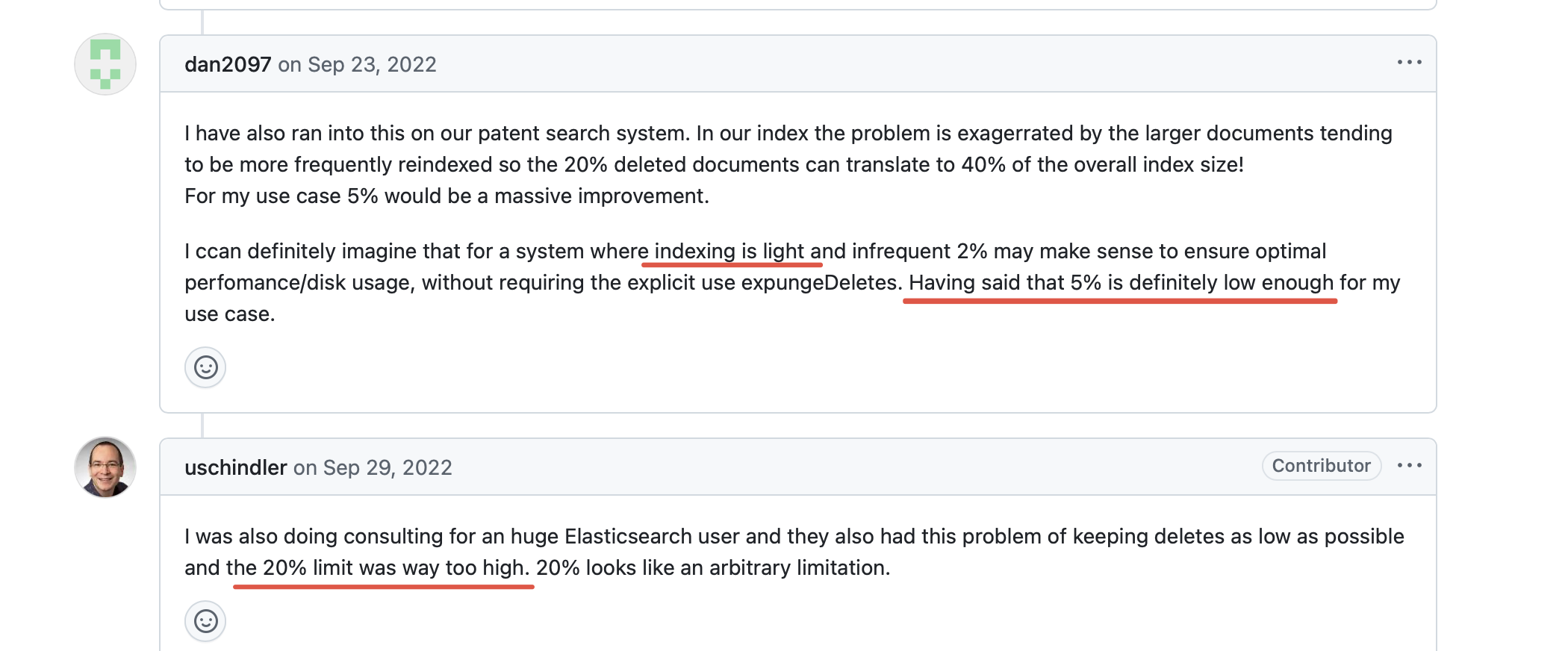

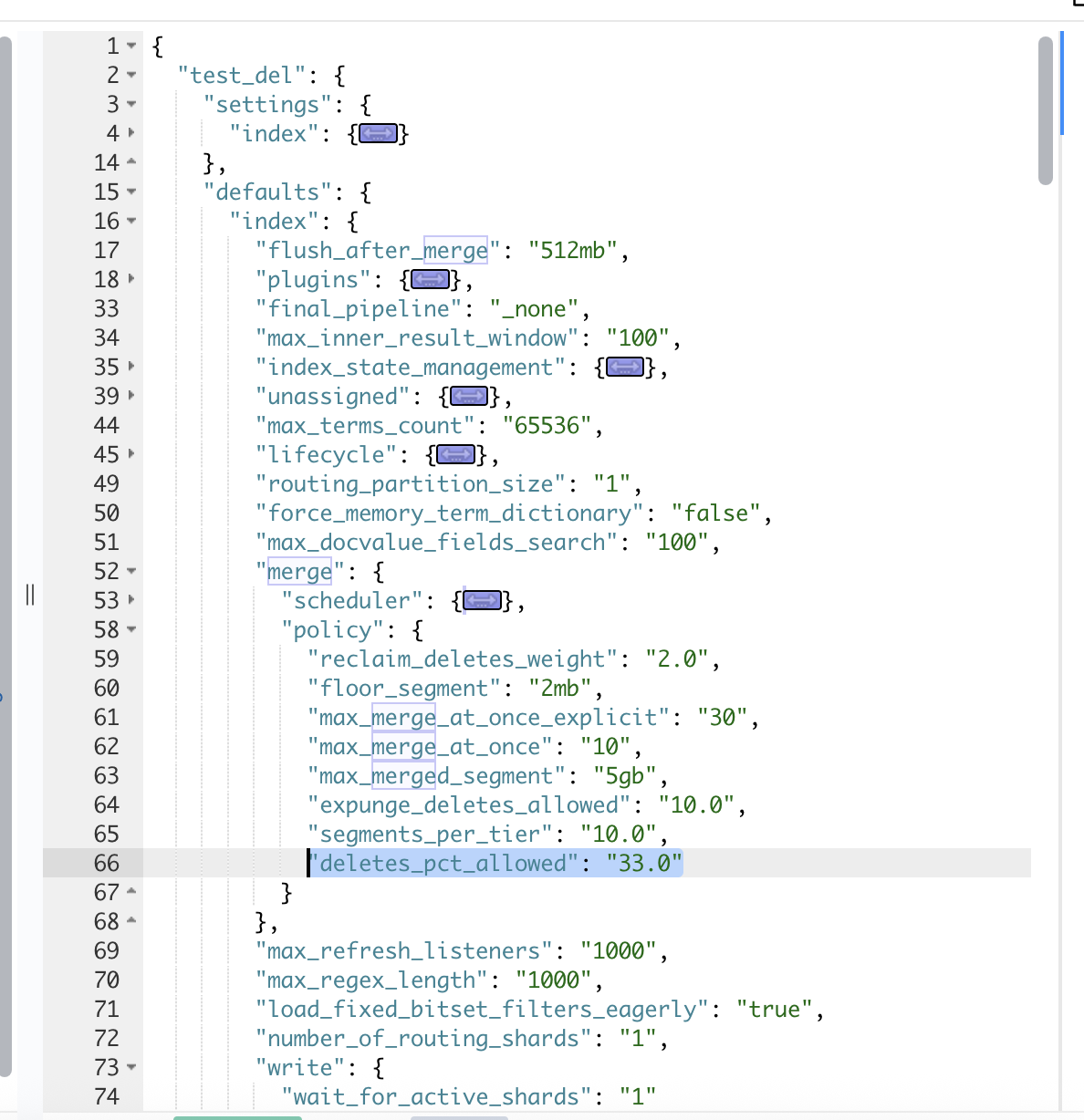

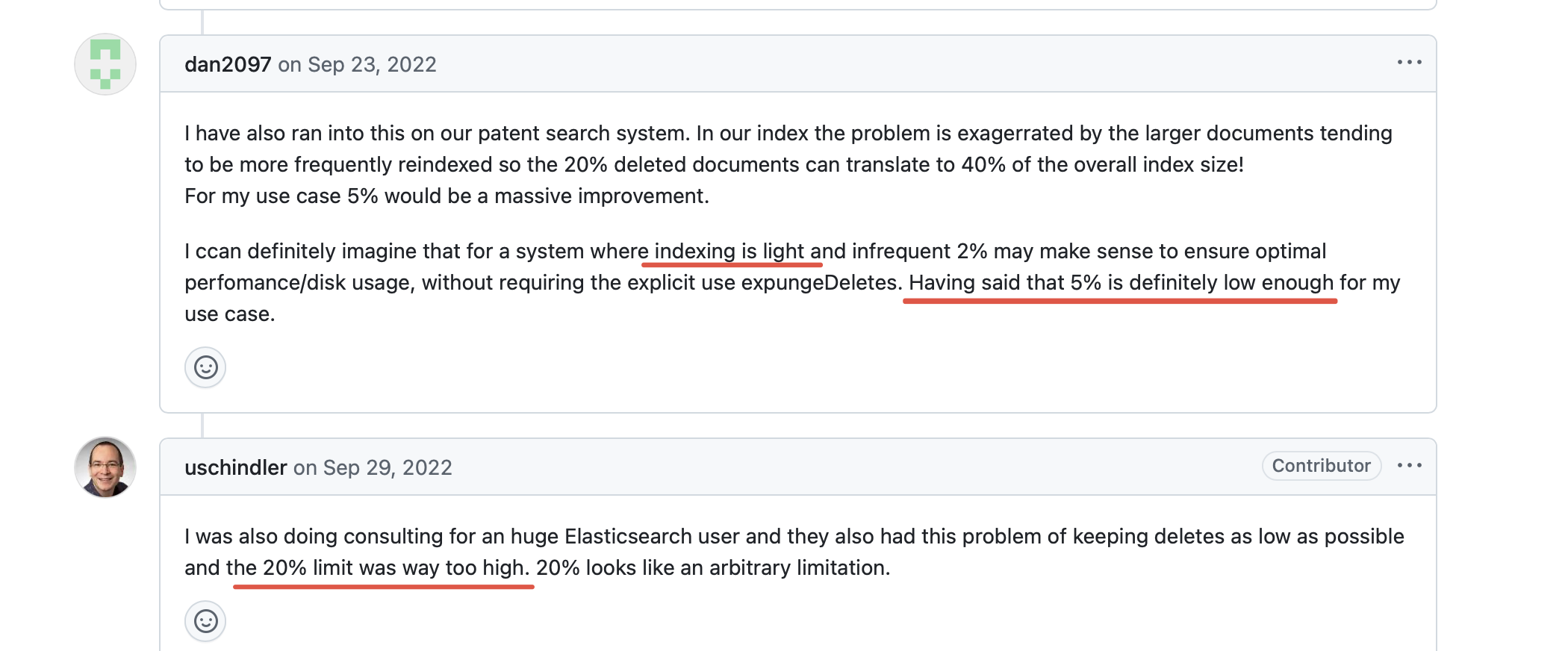

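ES tuning notes: choosing a value for the merge setting index.merge.policy.deletePctAllowed

I recently noticed that Lucene 9.5 changed a default parameter of its merge policy:

* GITHUB#11761: TieredMergePolicy now allowed a maximum allowable deletes percentage of down to 5%, and the default maximum allowable deletes percentage is changed from 33% to 20%. (Marc D'Mello)

In other words, deletePctAllowed (exposed in Elasticsearch as index.merge.policy.deletes_pct_allowed) can now be set as low as 5% (previously 20%), and its default drops to 20% (previously 33%).

This setting caps the share of deleted documents in an index. Lowering it reduces storage size, at the cost of more CPU and memory spent on merging.

The discussion around this change shows that practice is the best teacher: the new defaults are the Lucene community adopting active user feedback. Users of older versions can likewise tune this setting when their deleted-document percentage is high, although any production change must be tested first.

New ES users may now be wondering:

- What exactly is the deleted-document percentage (deletepct) this setting refers to?

- How is it calculated, and what does a high deletepct cost?

- Why does a low deletepct consume more resources?

- Besides tuning this setting, what other optimizations are there?

With these questions in mind, let's look at where this setting comes from and what it does.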

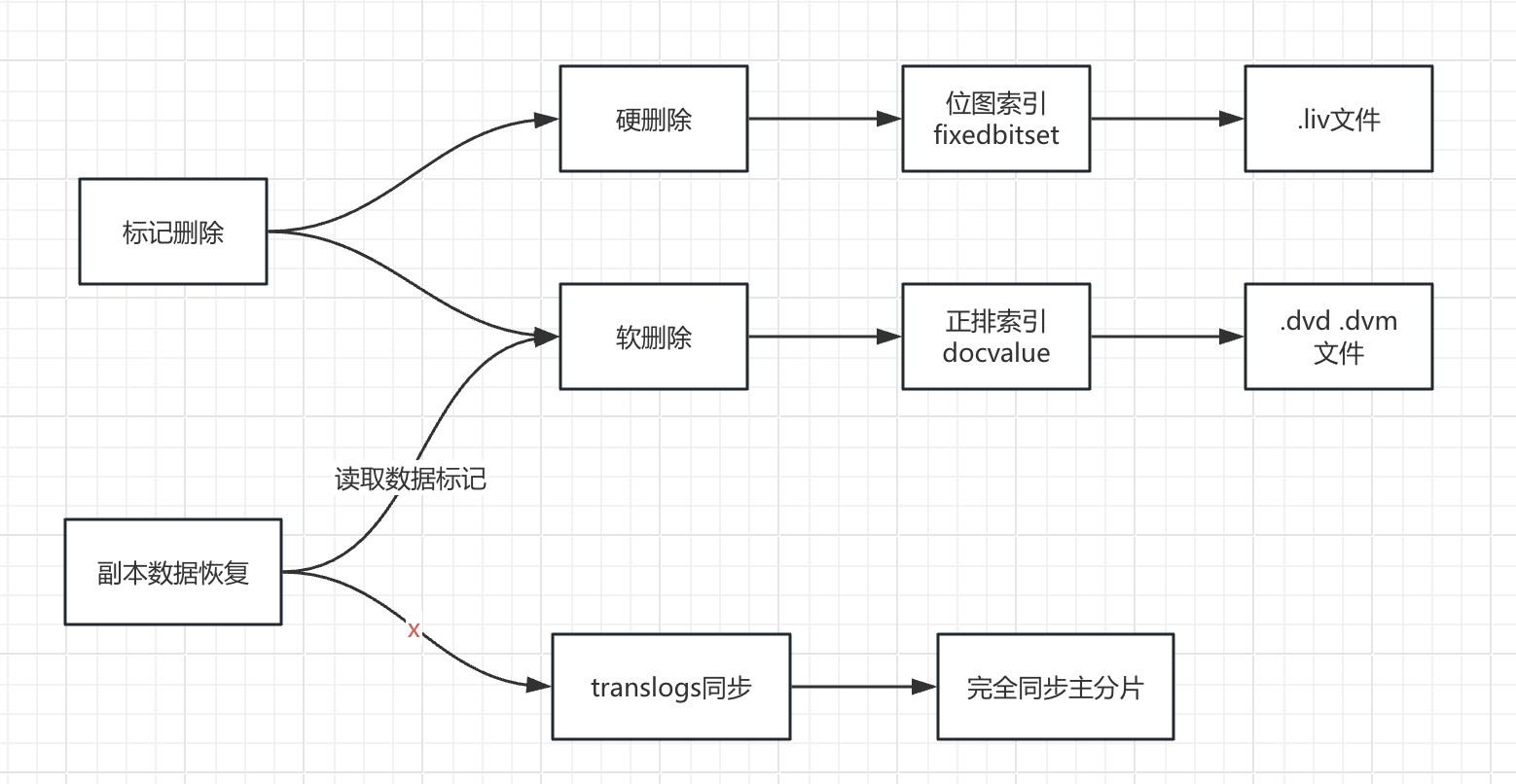

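deletePctAllowed: what soft deletes leave behind

In Lucene, a soft delete marks a document as deleted (a logical delete) instead of physically removing it from the index right away. The physical removal happens later.

These soft-deleted documents do not stay forever, though. deletePctAllowed is the maximum percentage of soft-deleted documents, relative to the total document count, that the index is allowed to keep.

When the share of soft-deleted documents reaches or exceeds the deletePctAllowed threshold, Lucene triggers a merge. Merging physically removes the soft-deleted documents and so reduces the space the index occupies.

If deletePctAllowed is set too low, merges are triggered frequently. Merging is heavy on disk I/O, CPU and memory, so write performance drops noticeably and disk I/O pressure rises. In the extreme case of deletePctAllowed = 0, every write would cost extra resources for segment merging.

If deletePctAllowed is too high, the index keeps a large number of soft-deleted documents. They waste disk space, raise storage costs and may even exhaust the disk. Queries must also filter these documents out, so response times grow and query performance drops. It has also been observed that with soft deletes enabled, document updates and refreshes are affected: with a high deletePctAllowed, updates and refreshes take noticeably longer.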

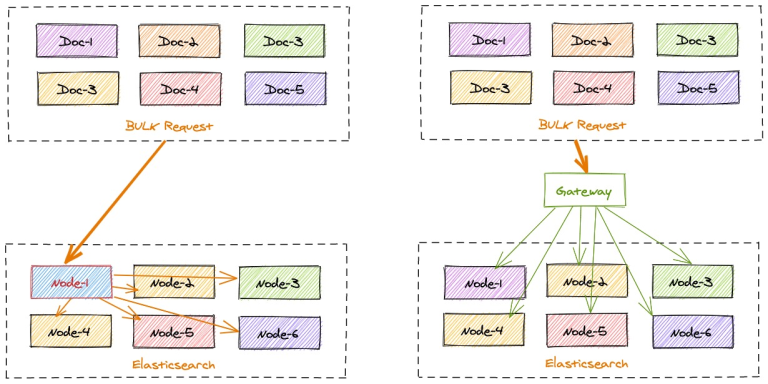

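How deletePctAllowed behaves in practice

From the explanation above, index.merge.policy.deletePctAllowed seems easy to understand. In reality, though, it is applied at the segment level, and segments only react to it under certain conditions: segments that are too small do not trigger a merge because of it. With multiple shards and many segments, the effect on a whole index is therefore not uniform, so it is worth measuring with a real test.

Here we create an index with 10 million documents, update every document once, and see how many deleted documents remain under deletePctAllowed.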

GET test_del/_count
{
  "count": 10000000,
  "_shards": {
    "total": 1,
    "successful": 1,
    "skipped": 0,
    "failed": 0
  }
}

# Check the number of deleted documents
GET test_del/_stats
···
"primaries": {
  "docs": {
    "count": 10000000,
    "deleted": 0
  },
···

At this point deletePctAllowed is still the old default of 33%.

The update task:

POST test_del/_update_by_query?wait_for_completion=false
{
  "query": {
    "match_all": {}
  },
  "script": {
    "source": "ctx._source.field_name = 'new_value'",
    "lang": "painless"
  }
}

After it completes:

# Task status
···
"task": {
  "node": "28HymM3xTESGMPRD3LvtCg",
  "id": 10385666,
  "type": "transport",
  "action": "indices:data/write/update/byquery",
  "status": {
    "total": 10000000,
    "updated": 10000000,  # every document was updated
    "created": 0,
    "deleted": 0,
    "batches": 10000,
    "version_conflicts": 0,
    "noops": 0,
    "retries": {
      "bulk": 0,
      "search": 0
    },
    "throttled_millis": 0,
    "requests_per_second": -1,
    "throttled_until_millis": 0
  }
···

# Index stats
GET test_del/_stats
···
"_all": {
  "primaries": {
    "docs": {
      "count": 10000000,
      "deleted": 809782
    },
···

The ratio of deleted to live documents is about 8.1% (809,782 / 10,000,000).

Now lower index.merge.policy.deletes_pct_allowed to 20%:

PUT test_del/_settings

{"index.merge.policy.deletes_pct_allowed":20}

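The deleted-document share (about 8.1%) was already below the new threshold. Changing the setting alone therefore does not trigger a new merge, so all documents are updated once more to see whether the result changes.

The resulting index stats: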

GET test_del/_stats
···
"primaries": {
  "docs": {
    "count": 10000000,
    "deleted": 190458
  }
···

This time the ratio of deleted to live documents is about 1.9% (190,458 / 10,000,000).
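The percentages above divide deleted documents by live documents. Lucene's TieredMergePolicy is understood to measure deletes against maxDoc instead, that is, live plus deleted documents. That denominator gives a slightly lower figure, so treat this as an assumption to verify against the Lucene source for your version. Below is a small sketch that computes both ratios from the "docs" object of GET <index>/_stats. The helper name is hypothetical.

```python
from typing import Dict


def deleted_ratios(docs: Dict[str, int]) -> Dict[str, float]:
    """Given the "docs" object from GET <index>/_stats (primaries), return
    deleted/live and deleted/(live + deleted) as percentages."""
    live, deleted = docs["count"], docs["deleted"]
    return {
        "deleted_vs_live_pct": deleted / live * 100,
        # maxDoc counts both live and deleted documents
        "deleted_vs_maxdoc_pct": deleted / (live + deleted) * 100,
    }


samples = [
    ("deletes_pct_allowed=33", {"count": 10000000, "deleted": 809782}),
    ("deletes_pct_allowed=20", {"count": 10000000, "deleted": 190458}),
]
for label, docs in samples:
    r = deleted_ratios(docs)
    print(f"{label}: {r['deleted_vs_live_pct']:.2f}% of live docs, "
          f"{r['deleted_vs_maxdoc_pct']:.2f}% of maxDoc")
```

For the two runs above this gives about 8.10% vs 7.49%, and 1.90% vs 1.87%. The two definitions diverge more as the deleted share grows.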

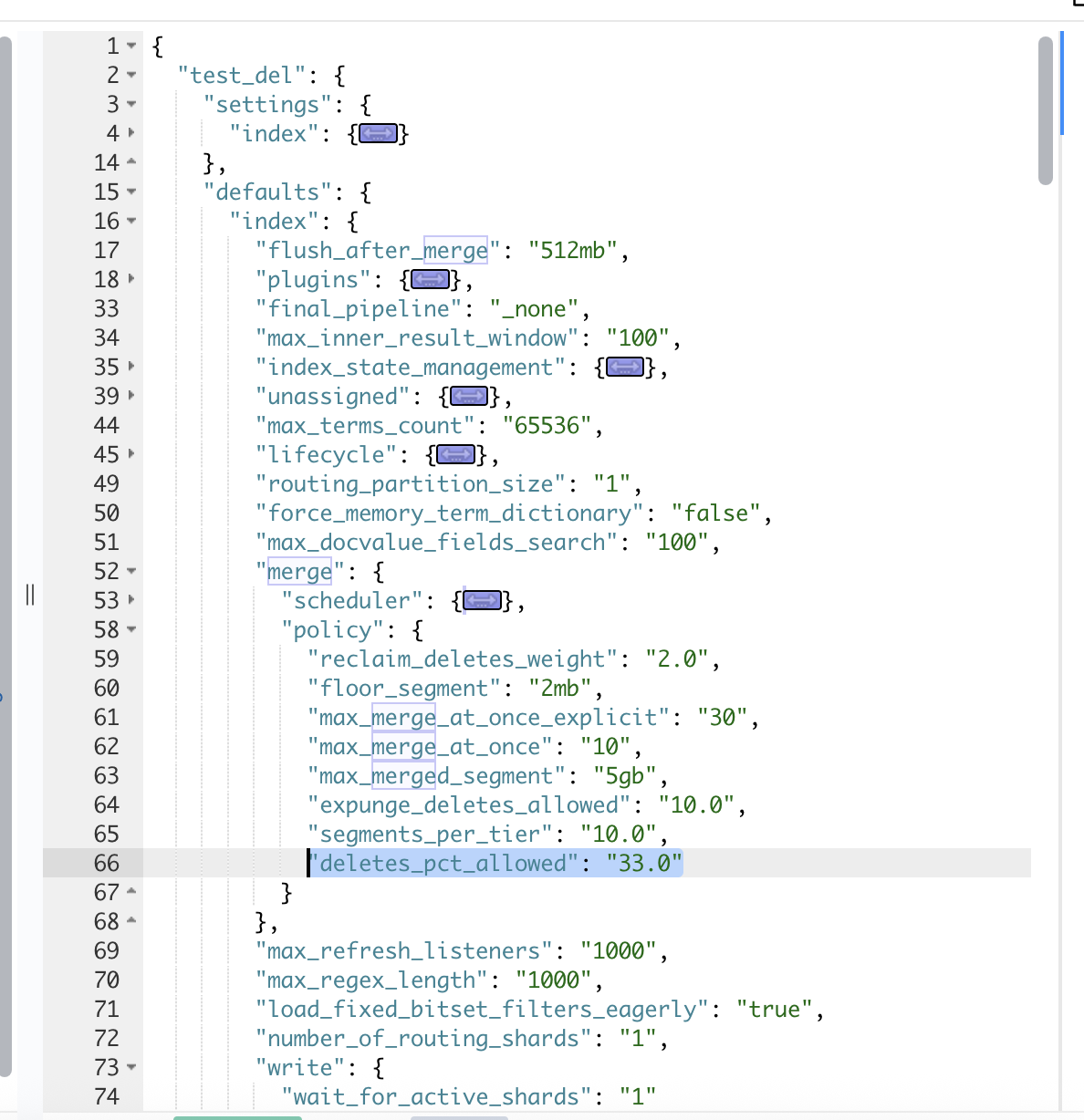

Changing the default of deletes_pct_allowed

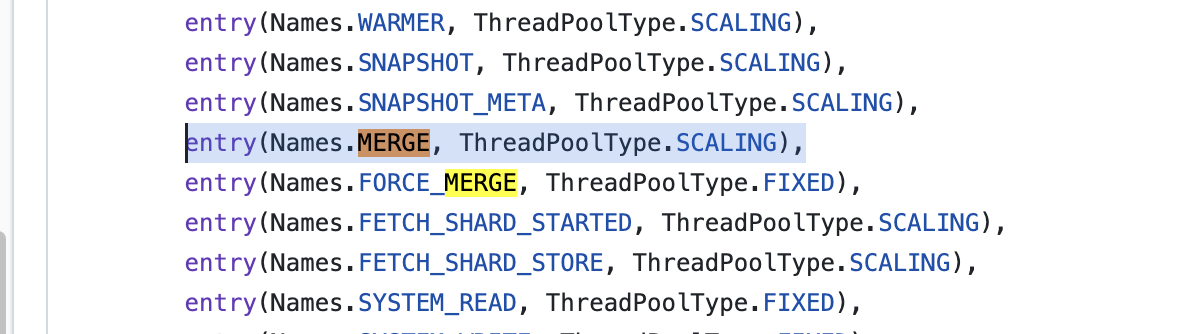

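As mentioned above, a low deletePctAllowed triggers frequent merges. Merge threads are of the SCALING type, a strategy that scales CPU usage up dynamically.

So when deletePctAllowed is set low, the number of merge tasks grows and more CPU threads are used. Cluster CPU and disk usage already rise with write volume, and a lower deletePctAllowed amplifies that effect.

That is why, without large-scale data to justify a change, ES users tend to run a force merge during off-peak hours to bring the deleted-document ratio down. The force_merge thread pool is of type fixed with a size of 1, so its CPU and disk pressure is easier to control. In addition, the default deletes threshold used by force merge (Lucene's forceMergeDeletesPctAllowed) is an even lower 10%.

Feedback from the community, however, leans toward a lower deletePctAllowed threshold, especially for small indices with light write traffic.

They also shared the following test results: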

#### RUN 1

Test config:

Single node domain

Instance type: EC2 m5.4xlarge

Updates: 50% of the total request

Baseline:

OS_2.3

"index.merge.policy.deletes_pct_allowed" : "33.0"

Target:

OS_2.3

"index.merge.policy.deletes_pct_allowed" : "20.0"

| Metrics | Baseline | Target |
|---|---|---|
| Store size | 39gb | 37gb |
| Deleted docs percent | 22% | 18% |
| Avg. CPU | (42 - 53)% | (43 - 55)% |
| Write throughput | 11 - 15 mbps | 11 - 17 mbps |
| Indexing latency | 0.15 - 0.36 ms | 0.15 - 0.39 ms |
| P90 search latency | 14.9 ms | 13.2 ms |
| P90 term query latency | 13.7 ms | 13.5 ms |

#### RUN 2

Test config:

Single node domain

Instance type: EC2 m5.4xlarge

Updates: 75% of the total request

Baseline:

OS_2.3

"index.merge.policy.deletes_pct_allowed" : "33.0"

Target:

OS_2.3

"index.merge.policy.deletes_pct_allowed" : "20.0"

| Metrics | Baseline | Target |
|---|---|---|
| Store size | 19.4gb | 17.7gb |
| Deleted docs percent | 22.8% | 15% |
| Avg. CPU | (43 - 53)% | (46 - 53)% |
| Write throughput | 9 - 14.5 mbps | 10 - 15.9 mbps |
| Indexing latency | 0.14 - 0.33 ms | 0.14 - 0.28 ms |
| P90 search latency | 15.9 ms | 13.5 ms |
| P90 term query latency | 15.7 ms | 13.9 ms |

#### RUN 3

Test config:

Single node domain

Instance type: EC2 m5.4xlarge

Updates: 80% of the total request

Baseline:

OS_2.3

"index.merge.policy.deletes_pct_allowed" : "33.0"

Target:

OS_2.3

"index.merge.policy.deletes_pct_allowed" : "20.0"

| Metrics | Baseline | Target |
|---|---|---|
| Store size | 15.9gb | 14.6gb |
| Deleted docs percent | 24% | 18% |
| Avg. CPU | (46 - 52)% | (47 - 52)% |
| Write throughput | 9 - 13 mbps | 10 - 15 mbps |
| Indexing latency | 0.14 - 0.28 ms | 0.13 - 0.26 ms |
| P90 search latency | 15.3 ms | 13.6 ms |
| P90 term query latency | 15.2 ms | 13.4 ms |

#### RUN 4

Test config:

Single node domain

Instance type: EC2 m5.2xlarge

Updates: 80% of the total request

Baseline:

OS_2.3

"index.merge.policy.deletes_pct_allowed" : "33.0"

Target:

OS_2.3

"index.merge.policy.deletes_pct_allowed" : "20.0"

| Metrics | Baseline | Target |
|---|---|---|
| Store size | 21.6gb | 17.8gb |
| Deleted docs percent | 30% | 18% |
| Avg. CPU | (71 - 89)% | (83 - 90)% |
| Write throughput | 6 - 12 mbps | 10 - 15 mbps |
| Indexing latency | 0.21 - 0.30 ms | 0.20 - 0.31 ms |
| P90 search latency | 15.4 ms | 16.3 ms |
| P90 term query latency | 15.4 ms | 14.8 ms |

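The conclusions drawn from these tests were:

- No noticeable increase in CPU or I/O throughput.

- Lower search latency, since the index holds fewer deleted documents.

- Less disk space wasted on deleted documents.

Keep in mind, however, that the indices and resource usage in these tests are small. RUN 4, on the smaller m5.2xlarge, partly goes against these conclusions: average CPU rose from 71-89% to 83-90%, and P90 search latency went from 15.4 ms to 16.3 ms. Indices with heavier workloads still need their own load tests.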
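The "less wasted disk space" conclusion can be put in numbers. The quick calculation below gives the relative store-size reduction in each run, using store sizes copied from the four tables above. The helper name reduction_pct is illustrative only.

```python
# Store sizes (GB) from the four community runs: (baseline 33%, target 20%)
runs = {
    "RUN 1": (39.0, 37.0),
    "RUN 2": (19.4, 17.7),
    "RUN 3": (15.9, 14.6),
    "RUN 4": (21.6, 17.8),
}


def reduction_pct(baseline: float, target: float) -> float:
    """Relative store-size reduction when moving from baseline to target."""
    return (baseline - target) / baseline * 100


for name, (base, target) in runs.items():
    print(f"{name}: {reduction_pct(base, target):.1f}% smaller")
```

RUN 4, which started from the highest deleted-docs percentage (30%), shows by far the largest saving, at about 17.6%. It is also the run where CPU went up.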

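Another tuning approach

Besides lowering deletePctAllowed and running force merges, are there other options?

One PR proposes a combined solution that merges the two merge strategies. Between regular merges it adds a force-merge check. When a suitable window appears (low resource usage), it proactively force-merges a single segment. The size threshold for selecting such segments is lower, so each force merge takes less time, and the index's deleted-document ratio ends up lower.

Put simply, it uses the cluster's idle "fragments of time" to run proactive force merges, which is also a controllable, high-quality way to tune.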

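About INFINI Labs

极限科技 (full name: 极限数据(北京)科技有限公司) is a software company focused on real-time search and data analytics. Its brand INFINI Labs (极限实验室) is dedicated to building an extremely easy-to-use data exploration and analysis experience.

极限科技 is a young team that works remotely in a naturally distributed way, with employees all over the world. It aims to become the first choice for real-time big-data search and analytics products for enterprises in China and around the world, and to help Chinese technology brands go global.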

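Author: 金多安, search operations expert at 极限科技 (INFINI Labs), Elastic certified expert, and editor of the Search Community (搜索客社区) daily digest. He has long worked in search operations, regularly digs into the principles behind ES / Lucene search technology, and follows developments in search closely.

Original post: https://infinilabs.cn/blog/2025/index-merge-policy-deletepctallowed/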


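On feature changes from ES 6.8 to 7.10 (6) - Miscellaneous

This is the last post on what ES 7.10 adds compared with ES 6.8. It covers scoring methods and synonym reloading.

Custom scoring: script_score 2.0

Elasticsearch 7.0 introduced a new generation of function scoring, the script_score query, which offers a simpler and more flexible way to compute a ranking score for each document. script_score comes with a set of functions, including arithmetic and distance functions, that you can mix and match to build arbitrary scoring logic. This modular design makes the feature easier to use and opens it up to more users. With script_score, scores can follow complex business logic instead of relying only on traditional TF-IDF or BM25. For example, you can adjust scores by a document's location, timestamp or other custom field values, giving finer control over result ordering.

script_score is ES's iterative replacement for the function_score feature.

Common functions

Basic functions

Basic arithmetic on field values or scores.

doc[<field>].value reads the value of a field in the document.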

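"script": {
  "source": "doc['price'].value * 1.2"
}

Arithmetic operations

Addition (+), subtraction (-), multiplication (*), division (/), modulo (%) and other operators are supported.

"script": {
  "source": "doc['price'].value + (doc['discount'].value * 0.5)"
}

Saturation function

The saturation function saturates a field value, limiting how far the field can influence the score.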

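"script": {
  "source": "saturation(doc['<field_name>'].value, <pivot>)"
}

- <field_name>: the field to process.
- <pivot>: the saturation point. The function returns value / (pivot + value), so the score is 0.5 when the field value equals pivot and approaches 1 as the value keeps growing.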
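Here is a plain-Python version of that formula, which shows how quickly the score saturates for pivot = 100, the value used in the example that follows. It re-implements value / (pivot + value) as documented for script_score. It is an illustration, not the Painless function itself.

```python
def saturation(value: float, pivot: float) -> float:
    """value / (pivot + value): 0.5 at value == pivot, approaching 1 as value grows."""
    return value / (pivot + value)


for likes in (0, 50, 100, 200, 1000):
    print(likes, round(saturation(likes, 100), 3))
```

Doubling likes from 100 to 200 only lifts the score from 0.5 to about 0.67. This is the diminishing-returns behaviour that makes saturation useful for popularity-style signals.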

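//In this example, 100 likes give a score of 0.5; further likes keep raising the score toward 1, but with a diminishing effect.
{
  "query": {
    "script_score": {
      "query": {
        "match_all": {}
      },
      "script": {
        "source": "saturation(doc['likes'].value, 100)"
      }
    }
  }
}

Sigmoid function

The sigmoid function applies an S-shaped transform to the field value, smoothing its effect on the score.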

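"script": {
  "source": "sigmoid(doc['<field_name>'].value, <pivot>, <exponent>)"
}

- <field_name>: the field to process.
- <pivot>: the midpoint of the curve; the score is 0.5 when the field value equals pivot.
- <exponent>: controls how steep the curve is.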
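Below is a plain-Python sketch of the sigmoid formula as documented for script_score: value^a / (pivot^a + value^a), where a is the exponent. It shows how the exponent changes the curve. The exponents compared here are chosen for illustration.

```python
def sigmoid(value: float, pivot: float, exponent: float) -> float:
    """value^a / (pivot^a + value^a): 0.5 at value == pivot, tending to 1 for large values."""
    va = value ** exponent
    return va / (pivot ** exponent + va)


# With exponent > 1 the curve is S-shaped around the pivot;
# with exponent <= 1 (such as 0.5) it rises fastest near zero.
for a in (0.5, 2.0):
    print(a, [round(sigmoid(v, 50, a), 3) for v in (10, 50, 250)])
```

With an exponent of 0.5 the score already moves a long way for small values. With 2.0 the transition is concentrated around the pivot.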

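//In this example, 50 likes give a score of 0.5; more likes push the score toward 1 and fewer toward 0, with the exponent 0.5 controlling how fast.
{
  "query": {
    "script_score": {
      "query": {
        "match_all": {}
      },
      "script": {
        "source": "sigmoid(doc['likes'].value, 50, 0.5)"
      }
    }
  }
}

Distance decay functions

Decay functions based on geographic distance.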

// Related functions
double decayGeoLinear(String originStr, String scaleStr, String offsetStr, double decay, GeoPoint docValue)
double decayGeoExp(String originStr, String scaleStr, String offsetStr, double decay, GeoPoint docValue)
double decayGeoGauss(String originStr, String scaleStr, String offsetStr, double decay, GeoPoint docValue)

"script" : {
  "source" : "decayGeoExp(params.origin, params.scale, params.offset, params.decay, doc['location'].value)",
  "params": {
    "origin": "40, -70.12",
    "scale": "200km",
    "offset": "0km",
    "decay" : 0.2
  }
}

Numeric decay functions

Decay functions based on numeric values.

// Related functions
double decayNumericLinear(double origin, double scale, double offset, double decay, double docValue)
double decayNumericExp(double origin, double scale, double offset, double decay, double docValue)
double decayNumericGauss(double origin, double scale, double offset, double decay, double docValue)
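All three shapes take the same parameters. The plain-Python sketch below implements the numeric versions, assuming they follow the decay formulas documented for the function_score query. Under those formulas the score is 1 within offset of origin and exactly decay at a distance of offset + scale. The helper names are hypothetical. With the parameters of the example below (origin 20, scale 10, offset 0, decay 0.5), all three return 0.5 at 30. They differ mainly in how fast they fall off after that.

```python
import math


def _excess_distance(value: float, origin: float, offset: float) -> float:
    # Distance beyond the offset; values within offset of origin are not penalized
    return max(0.0, abs(value - origin) - offset)


def decay_linear(origin: float, scale: float, offset: float, decay: float, value: float) -> float:
    s = scale / (1.0 - decay)  # distance (beyond offset) at which the score reaches 0
    return max(0.0, (s - _excess_distance(value, origin, offset)) / s)


def decay_exp(origin: float, scale: float, offset: float, decay: float, value: float) -> float:
    lam = math.log(decay) / scale
    return math.exp(lam * _excess_distance(value, origin, offset))


def decay_gauss(origin: float, scale: float, offset: float, decay: float, value: float) -> float:
    sigma_sq = -scale ** 2 / (2.0 * math.log(decay))
    return math.exp(-_excess_distance(value, origin, offset) ** 2 / (2.0 * sigma_sq))


# Parameters from the decayNumericLinear example: origin 20, scale 10, offset 0, decay 0.5
for x in (20, 30, 40):
    scores = [f(20, 10, 0, 0.5, x) for f in (decay_linear, decay_exp, decay_gauss)]
    print(x, [round(v, 3) for v in scores])
```

At x = 40 the linear curve has already reached 0, the exponential one is at 0.25 and the Gaussian one at 0.0625.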

"script" : {
  "source" : "decayNumericLinear(params.origin, params.scale, params.offset, params.decay, doc['dval'].value)",
  "params": {
    "origin": 20,
    "scale": 10,
    "decay" : 0.5,
    "offset" : 0
  }
}

Date decay functions

Decay functions based on dates.

// Related functions
double decayDateLinear(String originStr, String scaleStr, String offsetStr, double decay, JodaCompatibleZonedDateTime docValueDate)
double decayDateExp(String originStr, String scaleStr, String offsetStr, double decay, JodaCompatibleZonedDateTime docValueDate)
double decayDateGauss(String originStr, String scaleStr, String offsetStr, double decay, JodaCompatibleZonedDateTime docValueDate)

"script" : {
  "source" : "decayDateGauss(params.origin, params.scale, params.offset, params.decay, doc['date'].value)",
  "params": {
    "origin": "2008-01-01T01:00:00Z",
    "scale": "1h",
    "offset" : "0",
    "decay" : 0.5
  }
}

Random functions

These functions generate random scores. randomNotReproducible generates a random score that differs from request to request.

"script" : {
  "source" : "randomNotReproducible()"
}

randomReproducible generates a reproducible random score from a seed value.

"script" : {
  "source" : "randomReproducible(Long.toString(doc['_seq_no'].value), 100)"
}

Field value factor

Adjusts the score based on a field's value, taking the role that field_value_factor plays in function_score.
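Here is a quick Python check of what Math.log10(value * factor) returns with factor = 5. Whenever value * factor is below 1, the logarithm is negative. script_score does not accept negative scores, so such field values have to be avoided, or the expression shifted (one option is adding 1 inside the logarithm). The helper name log10_factor is hypothetical.

```python
import math


def log10_factor(value: float, factor: float = 5) -> float:
    """Python equivalent of the Painless expression Math.log10(value * factor)."""
    return math.log10(value * factor)


print(log10_factor(20))   # log10(100) = 2.0
print(log10_factor(0.1))  # log10(0.5) is negative, which script_score would reject
```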

"script" : {
  "source" : "Math.log10(doc['field'].value * params.factor)",
  "params" : {
    "factor" : 5
  }
}

Other useful functions

- Math.log: natural logarithm, e.g. Math.log(doc['price'].value)

- Math.sqrt: square root, e.g. Math.sqrt(doc['popularity'].value)

- Math.pow: power, e.g. Math.pow(doc['score'].value, 2)

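Synonym reloading

Elasticsearch 7.3 introduced synonym reloading: after the synonym file is updated, the change can take effect without reindexing.

This greatly simplifies synonym management, especially when synonyms are updated often. The _reload_search_analyzers API reloads the search analyzers of the given index, so the new synonym rules take effect immediately. Only synonym filters marked "updateable": true are reloaded, and such filters can only be used in search analyzers. That is why the example below uses my_synonyms as the search_analyzer.

Note that although the synonym dictionary can be hot-reloaded, documents that have already been indexed are not changed.

Example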

PUT /my_index
{
  "settings": {
    "index" : {
      "analysis" : {
        "analyzer" : {
          "my_synonyms" : {
            "tokenizer" : "whitespace",
            "filter" : ["synonym"]
          }
        },
        "filter" : {
          "synonym" : {
            "type" : "synonym_graph",
            "synonyms_path" : "analysis/synonym.txt",
            "updateable" : true
          }
        }
      }
    }
  },
  "mappings": {
    "properties": {
      "text": {
        "type": "text",
        "analyzer" : "standard",
        "search_analyzer": "my_synonyms"
      }
    }
  }
}

POST /my_index/_reload_search_analyzers

After this request, Elasticsearch reloads the search analyzers of my_index so the latest synonym rules take effect.

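The case_insensitive parameter

The case_insensitive parameter, added in 7.10 for the term, prefix, wildcard and regexp queries, lets exact-match queries ignore case.

This is especially useful for data that should match regardless of case, such as user names, tags or category codes. Setting case_insensitive to true gives case-insensitive matching without modifying the data, which simplifies query logic and improves search accuracy.

Example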

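//In this example, assuming user is a keyword field, the term query matches documents whose user value is JohnDoe, johndoe or JOHNDOE, ignoring case differences.
{
  "query": {
    "term": {
      "user": {
        "value": "JohnDoe",
        "case_insensitive": true
      }
    }
  }
}

Summary

Elasticsearch, a powerful open-source search and analytics engine, has brought many notable improvements and optimizations with each release. Compared with Elasticsearch 6.8, Elasticsearch 7.10 adds new features in many areas that greatly improve user experience and system performance. This series has briefly introduced the new features and optimizations in each area; I hope it helps.

Recommended reading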

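- On feature changes from ES 6.8 to 7.10 (1) - Performance optimization

- On feature changes from ES 6.8 to 7.10 (2) - Field types

- On feature changes from ES 6.8 to 7.10 (3) - Query methods

- On feature changes from ES 6.8 to 7.10 (4) - Aggregations

- On feature changes from ES 6.8 to 7.10 (5) - Task and cluster management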


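Author: 金多安, search operations expert at 极限科技 (INFINI Labs), Elastic certified expert, and editor of the Search Community (搜索客社区) daily digest. He has long worked in search operations, regularly digs into the principles behind ES / Lucene search technology, and follows developments in search closely.

Original post: https://infinilabs.cn/blog/2025/feature-evolution-from-elasticsearch-6.8-to-7.10-part-6/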


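Feature evolution from ES 6.8 to 7.10 (5): Task and cluster management

In this installment, we continue with the cluster and task management improvements in ES 7.10 compared with ES 6.8. We cover four features: automatic cancellation of queries whose connection has been closed, the voting-only node role, async search, and searchable snapshots.

Automatic query cancellation

In a well-behaved system, when the client that started a task closes its connection, all related server-side tasks should be cleaned up as well. In Elasticsearch, however, this behavior was only stated explicitly starting with version 7.4: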

Elasticsearch now automatically terminates queries sent through the _search endpoint when the initiating connection is closed.

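The related PR and issue are listed below for anyone who wants to dig into the source code.

PR: https://github.com/elastic/elasticsearch/pull/43332

Issue: https://github.com/elastic/elasticsearch/issues/43105

In short, when the HTTP connection of a search is closed, ES now automatically cancels the parent and child tasks associated with that search. Previously, such tasks might have had to be cancelled by hand, one at a time.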
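Before 7.4, cleaning up after a dropped client meant finding the search tasks yourself and cancelling them with the task management API (`POST _tasks/<task_id>/_cancel`). The sketch below walks a `GET _tasks?detailed=true&actions=*search*` response and returns cancel paths for top-level search tasks only, because cancelling a parent task also cancels its child tasks. `parent_search_cancel_paths` is a hypothetical helper. The sample response is made up and abbreviated to the fields the function reads (`nodes`, `tasks`, `action`, `parent_task_id`).

```python
def parent_search_cancel_paths(tasks_response):
    """Return '_tasks/<task_id>/_cancel' paths for search tasks that have no parent task."""
    paths = []
    for node in tasks_response.get("nodes", {}).values():
        for task_id, task in node.get("tasks", {}).items():
            is_search = task.get("action", "").startswith("indices:data/read/search")
            # Shard-level child tasks carry parent_task_id; cancelling the parent covers them
            if is_search and "parent_task_id" not in task:
                paths.append(f"_tasks/{task_id}/_cancel")
    return sorted(paths)


# Made-up, abbreviated _tasks response for illustration only
sample = {
    "nodes": {
        "node-a": {
            "tasks": {
                "node-a:101": {"action": "indices:data/read/search"},
                "node-a:102": {"action": "indices:data/read/search[phase/query]",
                               "parent_task_id": "node-a:101"},
            }
        }
    }
}
print(parent_search_cancel_paths(sample))  # ['_tasks/node-a:101/_cancel']
```

Each returned path would then be sent as a POST request. From 7.4 on, closing the HTTP connection triggers the same cancellation automatically.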

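Hands-on test

The query below uses painless scripts to simulate an expensive query. On the test cluster, it runs for about 5 seconds. The max_concurrent_shard_requests=1 parameter limits the search to one concurrent shard request per node, which keeps it running longer.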

```
GET /_search?max_concurrent_shard_requests=1
{
  "query": {
    "bool": {
      "must": [
        {
          "script": {
            "script": {
              "lang": "painless",
              "source": """
                long sum = 0;
                for (int i = 0; i < 100000; i++) {
                  sum += i;
                }
                return true;
              """
            }
          }
        },
        {
          "script": {
            "script": {
              "lang": "painless",
              "source": """
                long product = 1;
                for (int i = 1; i < 100000; i++) {
                  product *= i;
                }
                return true;
              """
            }
          }
        },
        {
          "script": {
            "script": {
              "lang": "painless",
              "source": """
                long factorial = 1;
                for (int i = 1; i < 100000; i++) {
                  factorial *= i;
                }
                long squareSum = 0;
                for (int j = 0; j < 100000; j++) {
                  squareSum += j * j;
                }
                return true;
              """
            }
          }
        },
        {
          "script": {
            "script": {
              "lang": "painless",
              "source": """
                long fib1 = 0;
                long fib2 = 1;
                long next;
                for (int i = 0; i < 100000; i++) {
                  next = fib1 + fib2;
                  fib1 = fib2;
                  fib2 = next;
                }
                return true;
              """
            }
          }
        }
      ]
    }
  }
}
```

Check the status of the terminated task:

```
GET /_tasks?detailed=true&actions=*search*
```

The following test script checks whether the task can still be found after the query above has been cancelled:

```python
import multiprocessing
import time
from datetime import datetime

import requests
from requests.exceptions import RequestException

# Elasticsearch endpoint
# ES_URL = "http://localhost:9210"  # 6.8 cluster
ES_URL = "http://localhost:9201"  # 7.10 cluster

# DSL of the expensive query (the same four painless scripts as above)
LONG_RUNNING_QUERY = {
    "size": 0,
    "query": {
        "bool": {
            "must": [
                {"script": {"script": {"lang": "painless", "source": """
                    long sum = 0;
                    for (int i = 0; i < 100000; i++) {
                        sum += i;
                    }
                    return true;
                """}}},
                {"script": {"script": {"lang": "painless", "source": """
                    long product = 1;
                    for (int i = 1; i < 100000; i++) {
                        product *= i;
                    }
                    return true;
                """}}},
                {"script": {"script": {"lang": "painless", "source": """
                    long factorial = 1;
                    for (int i = 1; i < 100000; i++) {
                        factorial *= i;
                    }
                    long squareSum = 0;
                    for (int j = 0; j < 100000; j++) {
                        squareSum += j * j;
                    }
                    return true;
                """}}},
                {"script": {"script": {"lang": "painless", "source": """
                    long fib1 = 0;
                    long fib2 = 1;
                    long next;
                    for (int i = 0; i < 100000; i++) {
                        next = fib1 + fib2;
                        fib1 = fib2;
                        fib2 = next;
                    }
                    return true;
                """}}},
            ]
        }
    },
}


def log_with_timestamp(message, *extra):
    """Print a timestamped log line; extra arguments are printed as a tuple after '+'."""
    timestamp = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    print(f"[{timestamp}] {message}+{extra}")


def run_query(query_finished):
    """Send the long-running search from a child process."""
    try:
        log_with_timestamp("Sending query...")
        session = requests.Session()
        try:
            # Blocks until Elasticsearch answers, or until this process is killed
            # (which closes the HTTP connection from the client side)
            response = session.post(f"{ES_URL}/_search", json=LONG_RUNNING_QUERY, stream=True)
            if response.status_code == 200:
                log_with_timestamp("Query finished, result:", response.json())
            else:
                log_with_timestamp("Query failed, error:", response.text)
        except RequestException as e:
            log_with_timestamp("Request interrupted:", e)
    finally:
        # Mark the query as finished (in case it completes before being interrupted)
        query_finished.set()


def interrupt_signal(query_finished):
    """After 1 second, tell the main process it may interrupt the query."""
    time.sleep(1)
    log_with_timestamp("Sending interrupt signal...")
    query_finished.set()


def check_task_exists(process_terminated):
    """Check whether the search task is still listed after the client process died."""
    process_terminated.wait()  # wait until the query process has been terminated
    max_retries = 3
    retries = 0
    time.sleep(1)  # check 1 second later
    tasks_url = f"{ES_URL}/_tasks?detailed=true&actions=*search*"
    while retries < max_retries:
        log_with_timestamp("Checking whether the task still exists...")
        try:
            tasks_response = requests.get(tasks_url)
            if tasks_response.status_code == 200:
                tasks = tasks_response.json().get("nodes")
                if tasks:
                    log_with_timestamp("Task still exists:", tasks)
                else:
                    log_with_timestamp("Task is gone")
                    break
            else:
                log_with_timestamp("Failed to list tasks, error:", tasks_response.text)
        except RequestException as e:
            log_with_timestamp(f"Task check failed (attempt {retries + 1}): {e}")
        retries += 1
        time.sleep(1)  # wait 1 second before the next check
    if retries == max_retries:
        log_with_timestamp("Reached the maximum number of checks.")


def main():
    # Create the events here and pass them to the children explicitly, so the
    # script also works with the "spawn" start method (macOS, Windows)
    query_finished = multiprocessing.Event()
    process_terminated = multiprocessing.Event()

    query_process = multiprocessing.Process(target=run_query, args=(query_finished,))
    query_process.start()
    interrupt_process = multiprocessing.Process(target=interrupt_signal, args=(query_finished,))
    interrupt_process.start()

    # Wait for the interrupt signal (or for the query to finish on its own)
    query_finished.wait()
    if query_process.is_alive():
        log_with_timestamp("Trying to terminate the query process...")
        query_process.terminate()  # killing the process closes its HTTP connection
        log_with_timestamp("Query process terminated")
    process_terminated.set()

    check_process = multiprocessing.Process(target=check_task_exists, args=(process_terminated,))
    check_process.start()

    # Wait for all processes to finish
    query_process.join()
    interrupt_process.join()
    check_process.join()


if __name__ == "__main__":
    main()
```

Actual test results:

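```
# 6.8
[2025-02-08 15:17:21] Sending query...+()
[2025-02-08 15:17:22] Sending interrupt signal...+()
[2025-02-08 15:17:22] Trying to terminate the query process...+()
[2025-02-08 15:17:22] Query process terminated+()
[2025-02-08 15:17:23] Checking whether the task still exists...+()
[2025-02-08 15:17:23] Task still exists:+({'fYMNv_KxQGCGzhgfMxPXuA': {......}},)
```

When the task list is checked 1 s after the query process was terminated, the task still exists.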

# 7.10
[2025-02-08 15:18:16] Sending query...+()
[2025-02-08 15:18:17] Sending interrupt signal...+()
[2025-02-08 15:18:17] Trying to terminate the query process...+()